Stanford CS25: V3 I Retrieval Augmented Language Models

Summary

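TLDR: This lecture takes an in-depth look at recent advances and open challenges in retrieval-augmented language models, in particular Retrieval-Augmented Generation (RAG). The speaker first reviews the history of language models and stresses the role of retrieval augmentation in improving language understanding and generation. He then analyzes how RAG works, its advantages, and the problems it targets, such as hallucination, attribution, and data freshness. He also discusses how to optimize a RAG system by making the retriever and generator work better together, and outlines future research directions, including multimodality and end-to-end optimization of the whole system.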

Takeaways

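- 🎓 The speaker is the CEO of Contextual AI and an adjunct professor in Symbolic Systems at Stanford University, with a deep background in machine learning and natural language processing (NLP).

- 📈 The age of language models has arrived, but enterprise deployments still face challenges with accuracy, hallucination, attribution, and keeping data up to date.

- 🔍 Retrieval augmentation is an emerging research direction that extends a language model with external memory, such as a document database.

- 🌐 With external retrieval, a language model can work in an 'open book exam' mode: it does not need to memorize all information in its parameters, which improves efficiency and flexibility.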

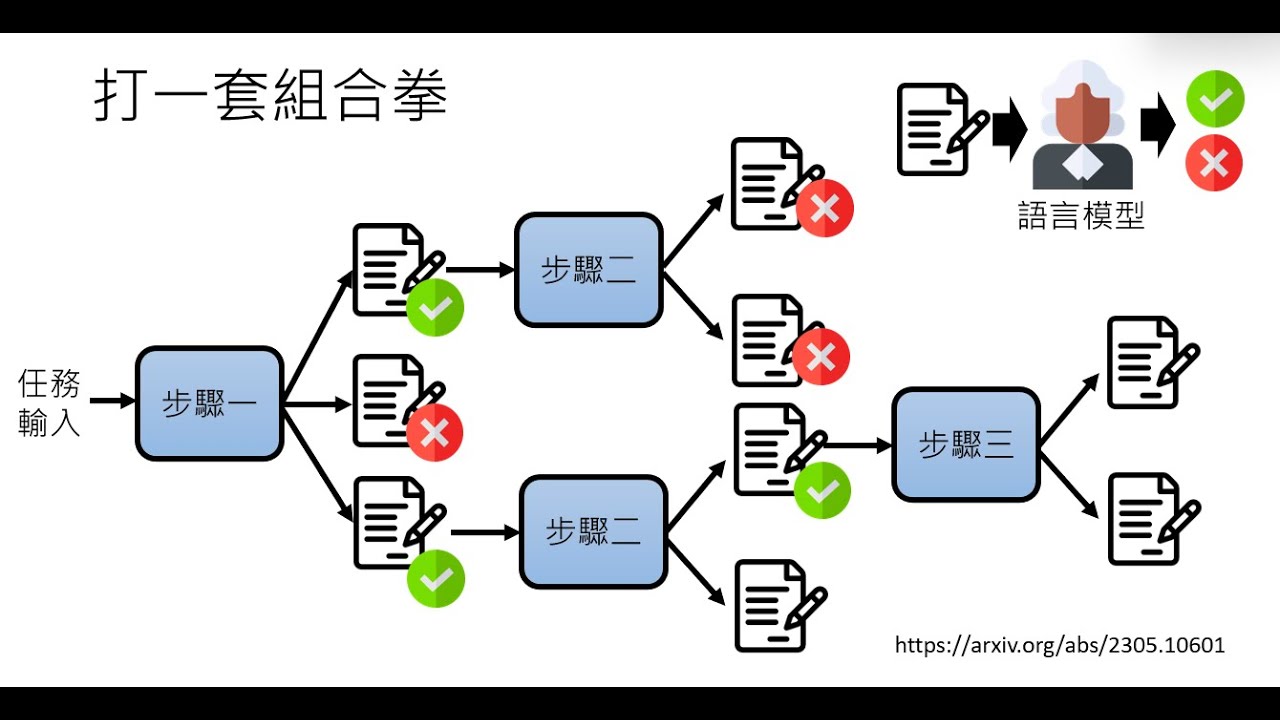

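- 🔄 RAG (Retrieval-Augmented Generation) is an architecture that combines a retriever and a generator, so relevant information can be retrieved dynamically while text is generated.

- 📚 The lecture covers specific techniques such as TF-IDF, BM25, and DPR, which are widely used in document and information retrieval.

- 🔧 Building a retrieval-augmented language model requires thinking about how to optimize the whole system, including the retriever, the generator, and the query encoder.

- 🔍 The lecture stresses that evaluating a retrieval-augmented system requires considering several factors, including training data, task type, and update strategy.

- 🌟 Future research directions include multimodal retrieval, system-level optimization, and better ways to control and measure language model 'hallucination'.

- 🚀 The lecture offers some new ways of thinking about current language models, including using retrieval augmentation to improve efficiency, generating better training data, and exploring new hardware and software architectures.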

Q & A

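What is a language model?

-A language model assigns a probability to a sequence of words. It can be used to predict the next word or to generate text, and it is widely applied in natural language processing, for example in machine translation and speech recognition.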
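
To make "assigns a probability to a sequence of words" concrete, here is a minimal sketch of a count-based bigram model. It is not from the lecture: the toy corpus, the function names, and the whitespace tokenization are all illustrative assumptions, and modern language models use neural networks rather than raw counts.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> are assumed start/end markers for this sketch.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Estimate P(next word | previous word) from relative counts."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}
    return {word: c / total for word, c in counts[prev].items()}

def sequence_prob(counts, sentence):
    """Probability of a whole sentence as a product of bigram probabilities."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= next_word_probs(counts, prev).get(nxt, 0.0)
    return prob

# Toy corpus, invented for illustration only.
corpus = ["the cat sat", "the cat ran", "the dog sat"]
counts = train_bigram(corpus)
print(next_word_probs(counts, "the"))        # "cat" is twice as likely as "dog"
print(sequence_prob(counts, "the cat sat"))  # about 0.333
```

The same two operations, predicting the next word and scoring a sequence, are what larger neural language models do with learned parameters instead of counts.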

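How long have language models been around?

-Language models are not a recent idea; the concept is decades old. According to the lecture, the earliest neural network language model dates back to 1991.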

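What is RAG (Retrieval-Augmented Generation)?

-RAG is a model that combines retrieval and generation: it retrieves relevant information to support a generation task such as text generation. It typically consists of a retriever and a generator. The retriever finds relevant information in a large collection of data, and the generator produces a response or text based on that information.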

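How does a RAG model address the static nature of language models?

-A RAG model addresses it by adding external memory, such as a document database. The model can then access up-to-date information instead of being limited to the data it saw during training, which helps keep its answers current and accurate.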

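How does a RAG model reduce hallucination during generation?

-A RAG model reduces hallucination by retrieving real-world data and using it as context. Generated content can then be grounded in reliable information, which makes it less likely that the model fabricates facts.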

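In a RAG model, what is the difference between parametric and non-parametric?

-A parametric approach stores and processes all knowledge in the model's parameters, for example the weights of a neural network. A non-parametric approach lets the model draw on external data, such as retrieved documents, to support its decisions and generation, rather than relying only on its internal parameters.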

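What is TF-IDF?

-TF-IDF (Term Frequency-Inverse Document Frequency) is a widely used weighting scheme in information retrieval and text mining. It scores how important a term is to a document by combining the term's frequency within that document (TF) with its inverse document frequency (IDF), which grows as the term appears in fewer documents of the collection.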
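
The weighting described above can be sketched in a few lines. This is one common textbook variant, assumed here for illustration: term frequency normalized by document length and `idf = log(N / df)`. Libraries often use smoothed or log-scaled variants, and the example documents and the function name `tf_idf` are invented.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document.

    Assumed variant: tf = count / document length, idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term occur?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        })
    return weights

# Toy documents, invented for illustration only.
docs = [
    "retrieval augmented generation uses a retriever",
    "a generator produces text",
    "a retriever finds documents",
]
weights = tf_idf(docs)
print(weights[0]["a"])          # 0.0: "a" occurs in every document
print(weights[1]["generator"])  # positive: "generator" occurs in one document
```

A term that appears in every document gets weight zero, while a term that is frequent in one document but rare in the collection gets a high weight.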

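What is BM25?

-BM25 is a ranking function based on the probabilistic retrieval framework that estimates how relevant a document is to a user query. It is one of the most widely used ranking functions in information retrieval.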
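
As a rough illustration of how such a ranking function scores documents, here is a minimal sketch of the Okapi BM25 formula. The parameter values `k1=1.5` and `b=0.75`, the non-negative IDF variant, the whitespace tokenization, and the example documents are assumptions made for this sketch, not details from the lecture.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Document frequency of each term across the collection.
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Assumed IDF variant that stays non-negative.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term-frequency saturation (k1) and length normalization (b).
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

# Toy documents, invented for illustration only.
docs = [
    "bm25 is a ranking function for retrieval",
    "language models generate text",
    "retrieval augmented generation combines retrieval and generation",
]
scores = bm25_scores("retrieval ranking", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(best, scores)
```

Compared with plain TF-IDF, repeated occurrences of a term add less and less to the score, and long documents are penalized relative to the average document length.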

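In a RAG model, how should the closed book and open book exam analogy be understood?

-The closed book exam stands for a traditional language model, which has to memorize all knowledge in its parameters. The open book exam stands for a RAG model, which may consult external information while generating an answer, just as a student may look things up in books and notes during the exam.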

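How do the retriever and the generator work together in a RAG model?

-In a RAG model, the retriever first finds information or documents relevant to the input question or context and passes them to the generator as context. The generator then produces an answer or text conditioned on that context. Working together, the two components let the model combine its internal parameters with external information to complete the task.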
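
The retrieve-then-generate flow described above can be sketched as follows. Everything here is a hypothetical stand-in: `retrieve` ranks by simple word overlap instead of a real retriever such as BM25 or DPR, `generate` is a placeholder where a real system would call a language model, and the prompt template and documents are invented.

```python
def retrieve(query, documents, top_k=2):
    """Hypothetical retriever: rank documents by word overlap with the query."""
    query_terms = set(query.lower().split())
    scored = [
        (len(query_terms & set(doc.lower().split())), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep the top_k documents that share at least one word with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query, passages):
    """Put the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

def generate(prompt):
    """Placeholder generator: a real system would call a language model here."""
    return f"[model output conditioned on {len(prompt)} prompt characters]"

def rag_answer(query, documents):
    passages = retrieve(query, documents)
    prompt = build_prompt(query, passages)
    return prompt, generate(prompt)

# Toy document store, invented for illustration only.
documents = [
    "BM25 is a sparse ranking function",
    "DPR is a dense passage retriever",
    "Paris is the capital of France",
]
prompt, answer = rag_answer("capital of France", documents)
print(prompt)
print(answer)
```

Because the knowledge lives in `documents` rather than in model weights, adding or correcting a document changes what the generator sees without any retraining.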

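What advantages does a RAG model have in practice?

-A RAG model combines the strengths of retrieval and generation, so it can provide more accurate and up-to-date information and reduce hallucination in generated content. In addition, its external memory can be updated and revised, which lets the model adapt to changing data and knowledge.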
