Trying to make LLMs less stubborn in RAG (DSPy optimizer tested with knowledge graphs)

Summary

TLDR本视频探讨了如何通过结合外部信息和内部知识来提高大型语言模型的准确性。研究发现,尽管RAG(Retrieval-Augmented Generation)模型可以提高准确性,但其效果依赖于模型的信心和提示技术。视频还讨论了使用不同的提示技术如何影响语言模型遵循外部知识,并介绍了如何通过实体链接器来验证信息,减少模型的幻觉现象。此外,还展示了如何通过优化提示和集成知识图谱来改进语言模型的检索能力,以及如何通过DSP(Data-Structure Pipeline)优化器和自定义的提示模板来提高模型遵循知识图谱数据的能力。

Takeaways

- 🧠 研究显示,大型语言模型在处理外部信息和内部知识时,倾向于依赖自己的知识,特别是当它们对外部信息的正确性不够自信时。

- 🔍 研究比较了GPD 4、GPD 3.5和Mistral 7B三个模型,发现GPD 4在使用外部信息时最为可靠,其次是GPD 2.5,Mistral 7B排在第三位。

- 📈 研究指出,尽管RAG(Retrieval-Augmented Generation)可以提高准确性,但其有效性取决于模型的自信度和提示技术。

- 🤖 观众的评论启发了研究,即语言模型可能认为自己的内部知识比外部知识更正确,这影响了它们对问题的回答。

- 🔄 研究中使用了不同的提示技术,如Lan chain和Lalex,这些技术可以影响语言模型如何遵循外部知识。

- 🛠️ 提出了使用名为ThePi的框架来自动调整提示,以改善语言模型遵循外部知识的能力。

- 🔗 实体链接是验证信息的一种方法,通过将文本中的词语映射到知识图中的实体,并验证答案的有效性。

- 📚 知识图谱可以提供验证答案的依据,通过链接事实回到默认的知识图谱来确认信息的有效性。

- 🔧 在LLM(Large Language Models)系统中添加实体链接器可以检查答案的正确性,并帮助过滤语言模型产生的虚构信息。

- 📈 通过在DSP(Data-Seeking Pipeline)RAG管道中集成实体类型检查和知识图谱数据,可以提高输出的准确性。

- 📝 优化程序可能不总是可靠的,有时需要手动调整提示,以确保语言模型严格遵循知识图谱中的外部知识。

Q & A

为什么大型语言模型(LLMs)在提供知识图谱数据时仍然可能无法正确整合信息?

-根据视频脚本,LLMs可能倾向于依赖自己的内部知识,即使外部知识来源被认为更准确。这可能是由于模型对外部知识的信任度不足,或者由于模型的自信程度和提示技术的影响。

视频提到的研究是如何比较不同语言模型在处理外部信息时的可靠性的?

-该研究比较了GPD 4、GPD 3.5和Mistral 7B三个模型。研究发现,GPD 4在使用外部信息时是最可靠的模型,其次是GPD 2.5,最后是Mistral 7B。所有模型在自信外部知识不够准确时,都倾向于坚持自己的知识。

什么是RAG系统,它在提高语言模型准确性方面的作用是什么?

-RAG系统是一种检索增强的生成模型,它可以通过结合外部知识源来提高语言模型的准确性。然而,其有效性取决于模型的自信程度和提示技术。

视频脚本中提到的ThePi框架是什么,它如何帮助改善LLMs的流程?

-ThePi是一个模块化的框架,用于改进LLMs的流程。它包含一个优化器,该优化器应用自举技术来创建和完善示例,这个过程可以自动创建基于特定指标自我改进的提示。

实体链接在LLMs系统中的作用是什么?

-实体链接是一个通过将文本中的词语映射和识别到知识图中的实体来验证信息的过程。它不仅可以帮助检查答案的正确类型,还可以帮助过滤LLMs在幻觉时编造的信息。

如何通过实体链接者改进LLMs的输出?

-通过在LLMs系统中添加实体链接者,可以验证答案的类型,并确保答案与知识图中的信息一致,从而减少幻觉并提高输出的准确性。

在设计自定义的DS Py RAG管道时,为什么要整合知识图谱数据?

-整合知识图谱数据可以提供更全面的信息,因为知识图谱通过连接数据点来组织信息。这有助于语言模型基于精炼的查询从向量数据库中检索相关信息,并最终通过结合向量数据库和知识图谱的元数据来增强答案。

DS Py RAG管道中使用的两个指标是什么,它们如何工作?

-DS Py RAG管道中使用的两个指标是:检查答案的实体类型是否与实体链接者中的类型匹配,以及评估答案是否与知识图谱中的数据一致。如果答案的实体类型匹配或为是非类型答案(不需要实体类型),则分数增加一;然后评估答案是否与知识图谱中的上下文一致。

为什么即使提供了地面真实情况,优化程序可能也无法成功地使语言模型坚持外部知识?

-即使提供了地面真实情况,由于语言模型自身的不可预测性和自我导向的提示管道可能不够可靠,优化程序可能无法成功地使语言模型坚持外部知识。

手动调整提示与自动化提示管道相比,在使语言模型坚持外部知识方面有哪些优势?

-手动调整提示可以更具体地指导语言模型严格遵循知识图谱中的地面真实情况,尤其是在内部模式与外部知识存在冲突时。这可以减少幻觉,并提供与知识图谱数据完全一致的答案。

视频脚本中提到的“graph RAG”是什么,它与DS Py RAG有何不同?

-视频脚本中提到的“graph RAG”是接下来要探讨的主题,尽管脚本中没有详细说明,但可以推测它可能是一种不同的RAG系统,用于处理知识图谱和向量数据库的组合,与DS Py RAG相比,它可能有不同的优化和处理方法。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

【生成式AI】ChatGPT 可以自我反省!

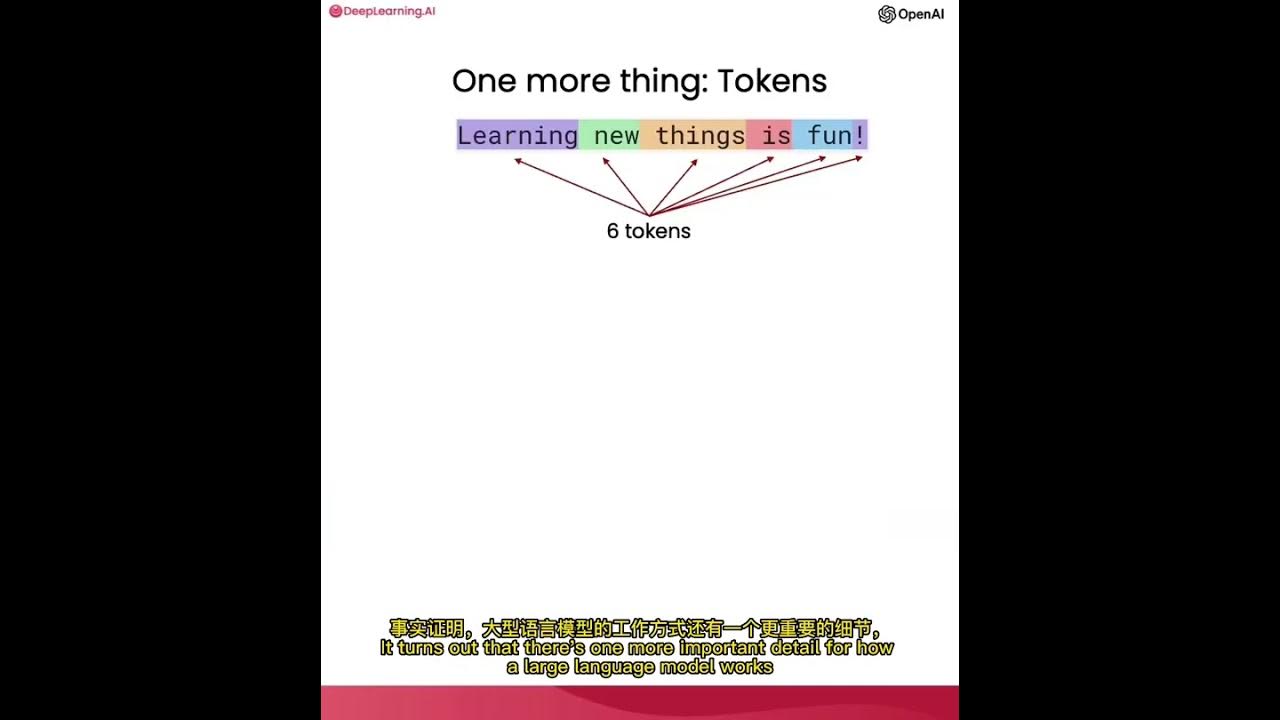

使用ChatGPT API构建系统1——大语言模型、API格式和Token

Ilya Sutskever | This will all happen next year | I totally believe | AI is come

【人工智能】万字通俗讲解大语言模型内部运行原理 | LLM | 词向量 | Transformer | 注意力机制 | 前馈网络 | 反向传播 | 心智理论

大语言模型微调之道1——介绍

【生成式AI導論 2024】第5講:訓練不了人工智慧?你可以訓練你自己 (下) — 讓語言彼此合作,把一個人活成一個團隊 (開頭有芙莉蓮雷,慎入)

5.0 / 5 (0 votes)