Stream of Search (SoS): Learning to Search in Language

Summary

TLDR本视频脚本探讨了训练语言模型对搜索过程的影响,旨在教授语言模型如何搜索和回溯,以实现自我改进。基于Transformer的模型在规划任务中存在错误累积和前瞻性挑战等问题,这些问题源于模型在有效搜索和回溯方面的限制。研究展示了通过将搜索过程表示为搜索流,语言模型可以被训练进行搜索和回溯。以倒计时游戏为灵感的搜索问题,要求将输入数字与算术运算结合以达到目标数字,提供了一个具有挑战性的搜索问题。通过使用符号规划器和启发式函数创建的训练数据集,训练语言模型在多样性数据集上的表现优于仅在最优解上训练的模型。此外,通过优势诱导策略对齐和专家迭代进行微调后,搜索流模型展现出了增强的搜索和规划能力。研究结果表明,基于Transformer的语言模型可以通过搜索学习问题解决,并自主改进其搜索策略。

Takeaways

- 🤖 训练语言模型以学习搜索和回溯,可以提高模型的自我改进能力。

- 🚀 基于Transformer的模型在规划任务中存在错误累积和前瞻性挑战。

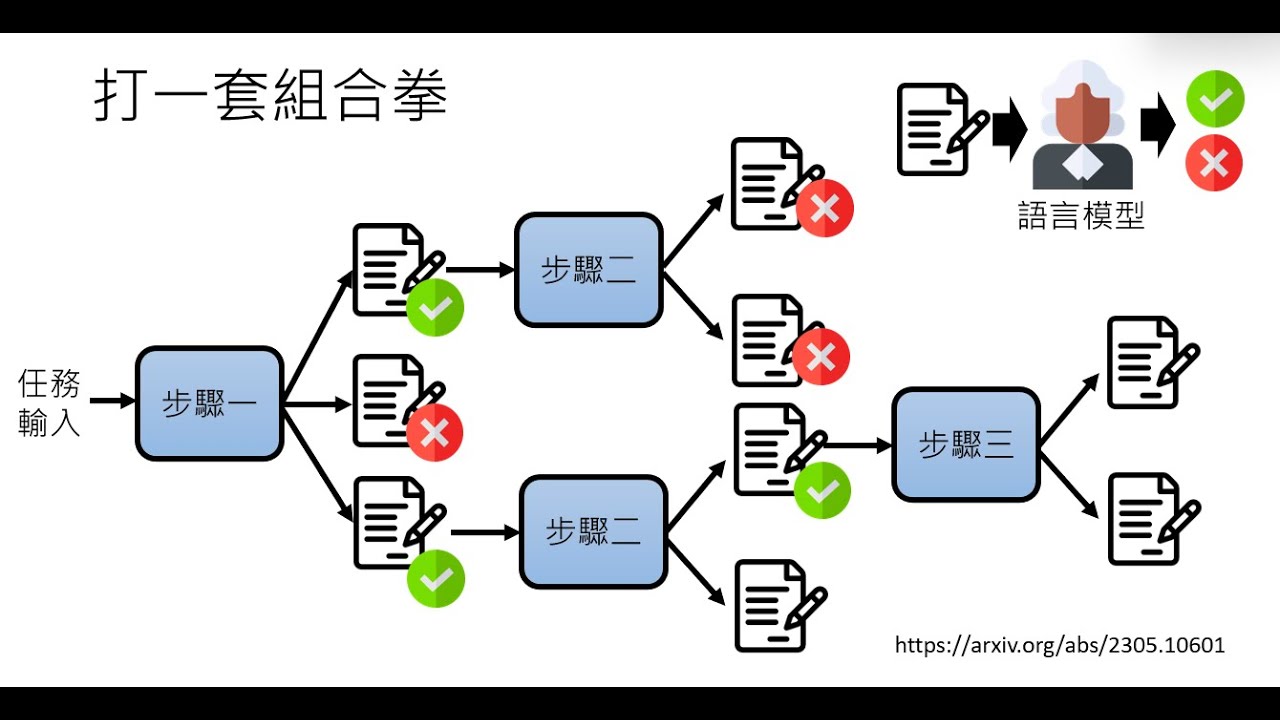

- 🔍 通过将搜索过程表示为搜索流,语言模型可以被训练进行搜索和回溯。

- 🎯 使用启发式函数创建的训练数据集,可以帮助模型学习多样化的搜索策略。

- 📈 与仅在最优解上训练的模型相比,基于搜索流训练的模型在预测正确解的路径上表现更好。

- 🧠 通过优势策略对齐和专家迭代进行微调,可以增强模型的搜索和规划能力。

- 🔬 研究表明,基于Transformer的语言模型可以通过搜索学习问题解决,并自主改进搜索策略。

- 📚 通过马尔可夫决策过程(MDP)对问题空间进行建模,定义了状态、动作、转换函数和奖励函数。

- 📊 通过比较不同的搜索策略,发现模型能够与多种符号策略对齐,而不是仅依赖于训练数据中的单一策略。

- 🛠️ 利用强化学习策略进行微调,模型能够解决之前无法解决的问题,并发现新的搜索策略。

- ✅ 训练语言模型以提高准确性,也有助于发现新的搜索策略,这表明模型能够灵活地使用不同的搜索策略。

- 🏆 该研究展示了通过内部搜索机制使语言模型能够处理复杂问题的可能性,并强调了让模型了解问题解决过程而不仅仅是最优解的重要性。

Q & A

在训练语言模型时,为什么需要让模型学会搜索和回溯?

-训练语言模型学会搜索和回溯是为了提高模型在规划任务中的表现,解决诸如错误累积和前瞻性挑战等问题,这些问题源于模型在有效搜索和回溯方面的限制。

Transformer-based模型在规划任务中面临哪些挑战?

-Transformer-based模型在规划任务中面临的挑战包括错误累积和前瞻性问题,这些问题使得模型难以有效地进行搜索和回溯。

如何通过训练提高语言模型的搜索能力?

-通过将搜索过程表示为搜索流,定义探索、回溯和剪枝等组件,并将其应用于受倒计时游戏启发的搜索问题,可以训练语言模型进行搜索和回溯。

倒计时游戏在训练语言模型中的作用是什么?

-倒计时游戏提供了一个具有挑战性的搜索问题,要求将输入数字与算术运算结合以达成目标数字,这需要模型进行规划、搜索和回溯,从而增强模型的问题解决能力。

在训练数据集中,为什么只包含57%能导致解决方案的搜索轨迹?

-包含非最优和有时不成功的搜索轨迹可以更全面地训练模型,使其能够学习到更多样化的搜索策略,并提高模型在面对复杂问题时的适应性和灵活性。

如何评估语言模型在生成正确解决方案轨迹方面的准确性?

-通过检查生成的轨迹中是否包含从初始状态到目标状态的正确路径来衡量准确性。

在训练过程中,模型是如何与不同的搜索策略对齐的?

-通过分析模型解决正确问题的策略和访问的状态,可以评估模型与不同搜索策略的对齐情况。例如,模型与使用总和启发式函数的深度优先搜索(DFS)策略具有最高的相关性。

为什么训练模型时使用搜索轨迹比仅使用最优解更有效?

-使用搜索轨迹训练模型可以提高在未知输入上的准确性,因为这种方法使模型能够学习到多种搜索策略,并在训练过程中发现新的搜索策略。

如何使用强化学习策略来提高模型的问题解决能力?

-通过使用专家迭代(STAR)和优势引导策略对齐(OPA)等强化学习策略,可以对模型进行微调,以提高其在验证集上的性能,并解决之前无法解决的问题。

在强化学习策略中,如何设计奖励函数来引导模型的学习过程?

-奖励函数基于正确性和轨迹长度来设计,以指导模型学习过程,鼓励模型生成更正确、更高效的解决方案。

研究结果表明,训练语言模型进行搜索可以带来哪些好处?

-研究结果表明,训练语言模型进行搜索可以使模型自主地采用各种搜索策略,解决以前未解决的问题,并发现新的搜索方法,从而提高其问题解决能力。

在研究中,哪些外部因素对模型的训练和改进起到了支持作用?

-研究得到了Gabriel Poian、Jacob Andreas、Joy Hui、Yuya Dangov、Jang Eric Zelikman、Jan Philip Franken和C. Jong等人的宝贵讨论和支持,同时得到了斯坦福大学人类中心人工智能、Google High Grant和NSF Expeditions Grant的资助。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Trying to make LLMs less stubborn in RAG (DSPy optimizer tested with knowledge graphs)

【生成式AI導論 2024】第4講:訓練不了人工智慧?你可以訓練你自己 (中) — 拆解問題與使用工具

Understand DSPy: Programming AI Pipelines

使用ChatGPT API构建系统1——大语言模型、API格式和Token

GPT-4o 背後可能的語音技術猜測

[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)

5.0 / 5 (0 votes)