4 Principle of Optimality - Dynamic Programming introduction

Summary

TLDRThis video offers an insightful introduction to dynamic programming, explaining its fundamental concepts, differences from greedy methods, and comparisons with techniques like memoization and tabulation. It highlights how dynamic programming solves optimization problems by considering all possible solutions and selecting the optimal one. Through an in-depth Fibonacci example, the video demonstrates how dynamic programming can reduce time complexity by storing intermediate results to avoid redundant calculations. It emphasizes the differences between top-down and bottom-up approaches, making it clear that dynamic programming is a powerful tool for solving complex problems efficiently.

Takeaways

- 😀 Dynamic Programming (DP) is an optimization technique that evaluates all possible solutions to pick the optimal one, often using recursive formulas or iteration.

- 😀 Greedy methods and Dynamic Programming both aim to solve optimization problems, but they differ in their strategies. Greedy methods make one-time decisions, while DP makes decisions at every stage.

- 😀 DP problems typically involve breaking a problem into smaller subproblems and storing solutions to avoid redundant calculations, while greedy methods follow a predefined procedure.

- 😀 The **Principle of Optimality** in Dynamic Programming states that a problem can be solved by taking a sequence of optimal decisions, making it different from the one-time decisions in greedy algorithms.

- 😀 **Memoization** is a top-down approach in DP that caches results of subproblems to avoid redundant calculations, reducing time complexity from exponential (O(2^n)) to linear (O(n)).

- 😀 **Tabulation** is a bottom-up approach in DP, where the solution is built iteratively by filling up a table based on smaller subproblem solutions.

- 😀 Memoization and tabulation are two main techniques in Dynamic Programming, with memoization using recursion and tabulation using iteration. Both techniques aim to optimize the time complexity of solving DP problems.

- 😀 In the Fibonacci example, the naive recursive approach leads to an exponential time complexity (O(2^n)), but memoization optimizes it to O(n) by storing previously calculated values.

- 😀 The **Fibonacci series** example demonstrates how memoization stores intermediate results in an array to prevent repeated function calls, drastically reducing the number of computations.

- 😀 The **Tabulation** method solves problems iteratively by filling out a table from the smallest subproblems to the final solution, making it more efficient than recursion in terms of memory and time.

- 😀 Both memoization and tabulation are powerful methods for solving DP problems. Choosing between them depends on the problem and whether recursion or iteration is preferred for implementation.

Q & A

What is the main purpose of dynamic programming?

-The main purpose of dynamic programming is to solve optimization problems by finding the best solution through exploring all possible options, considering each stage of the problem sequentially.

How does dynamic programming differ from the greedy method?

-Dynamic programming and the greedy method both solve optimization problems, but they differ in approach. The greedy method follows a predefined strategy that guarantees an optimal solution, while dynamic programming explores all possible solutions and selects the best one, often at the cost of greater time complexity.

What does the principle of optimality in dynamic programming state?

-The principle of optimality states that a problem can be solved by making a sequence of decisions that lead to the optimal solution. Each decision considers previous ones, ensuring that the overall solution remains optimal.

What is the main difference between memoization and tabulation in dynamic programming?

-Memoization uses a top-down approach, where recursion is used with results stored to avoid recalculating values. In contrast, tabulation follows a bottom-up approach, where an iterative process fills in a table based on previous computed values.

Can you explain how memoization improves the efficiency of the Fibonacci function?

-In a recursive Fibonacci function, many calls are repeated, leading to exponential time complexity. Memoization stores the results of previous function calls, ensuring that each value is calculated only once, reducing the time complexity from exponential to linear.

What is the time complexity of the recursive Fibonacci function without memoization?

-The time complexity of the recursive Fibonacci function without memoization is O(2^n), since it involves redundant calculations of the same Fibonacci terms multiple times.

How does tabulation differ from recursion in dynamic programming?

-Tabulation, or the bottom-up approach, solves the problem iteratively by filling a table from the base case upwards, whereas recursion (top-down) breaks the problem down into subproblems and solves them recursively.

What does the term 'bottom-up approach' refer to in dynamic programming?

-The 'bottom-up approach' in dynamic programming refers to solving a problem iteratively, starting from the simplest subproblems and building up to the final solution. This approach is used in tabulation.

Why is tabulation generally preferred over memoization in dynamic programming?

-Tabulation is generally preferred because it avoids the overhead of recursion and the call stack, making it more efficient in terms of both time and space. It is also less prone to issues like stack overflow, which can occur in deep recursive calls.

What is the significance of the 'sequence of decisions' in dynamic programming?

-The sequence of decisions in dynamic programming refers to the approach of solving a problem by breaking it down into smaller subproblems, where each decision made is optimal based on the previous ones. This stepwise decision-making process ensures that the overall solution is optimal.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

Apriori Algorithm | Association Rule Mining | Finding Frequent Itemset | 2023 | Simplilearn

Routing Pada Jaringan Komputer | Materi Informatika SMP kelas 8 Elemen JKI | Kurikulum merdeka

Management Concept - Definition, Meaning, management | what is management | Management in hindi

100+ Computer Science Concepts Explained

BOX PLOT, HISTOGRAM, DOT PLOT STATISTIKA KELAS 10

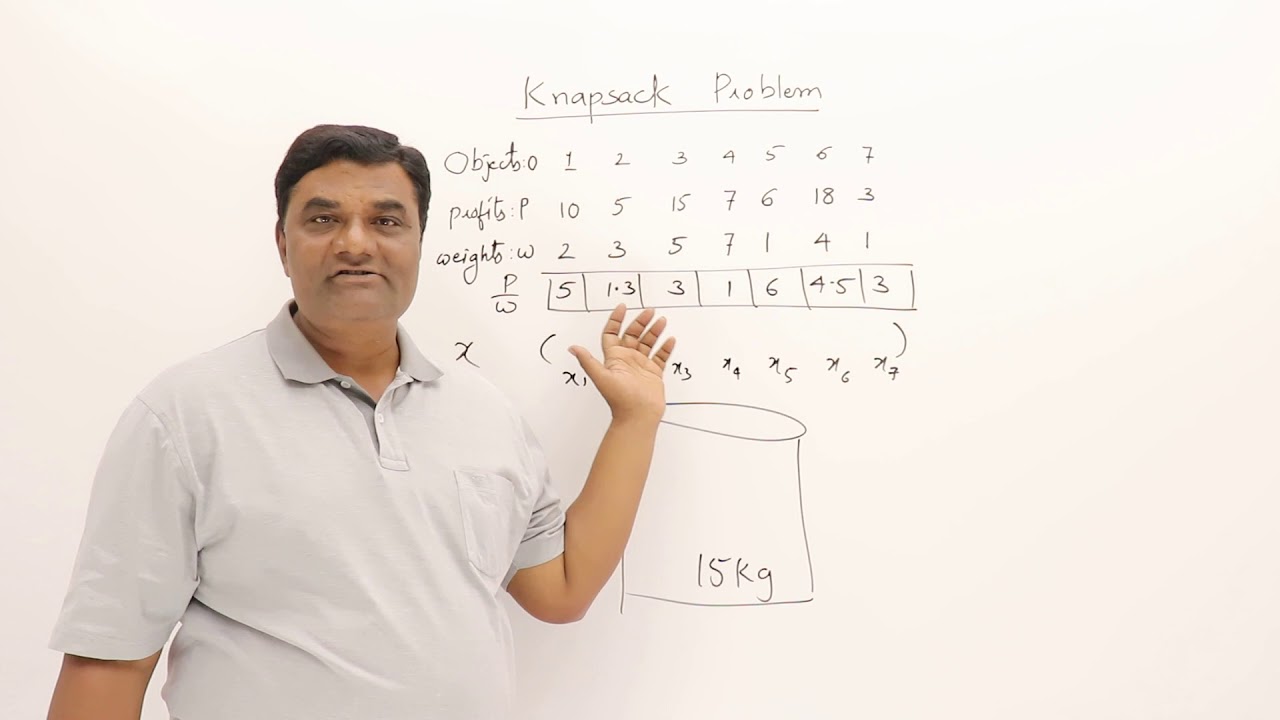

3.1 Knapsack Problem - Greedy Method

5.0 / 5 (0 votes)