Konsep Algoritma KNN (K-Nearest Neigbors) dan Tips Menentukan Nilai K

Summary

TLDRThe video presents an introduction to the K-Nearest Neighbors (KNN) algorithm, a supervised learning technique used for classification and regression. It explains how KNN determines the class of a new data point based on the classes of its nearest neighbors using distance metrics like Euclidean and Manhattan distances. The presenter emphasizes the importance of selecting the right value for K and discusses the algorithm's advantages, such as simplicity and ease of interpretation, alongside its computational costs and sensitivity to distance metrics. Practical applications include loan approval and medical diagnosis, making KNN a versatile tool in machine learning.

Takeaways

- 😀 KNN (K-Nearest Neighbors) is a supervised learning algorithm used for classification tasks in machine learning.

- 📊 The algorithm classifies data points based on the majority class of their nearest neighbors.

- 🧮 Determining the optimal value of 'k' is crucial, as it significantly affects the algorithm's accuracy.

- 📈 KNN does not require a training phase; it stores all data points and computes distances during classification.

- 🔍 Various distance metrics, such as Euclidean and Manhattan distance, can be used to measure similarity in KNN.

- 🌐 KNN can be applied in practical scenarios, such as loan approval or disease diagnosis based on features like age and health indicators.

- 🔄 The choice of 'k' should ideally be odd to avoid ambiguity when classes are balanced.

- 💡 KNN is simple to implement and interpret, making it accessible for beginners in machine learning.

- ⚠️ One of the main drawbacks of KNN is its computational intensity, especially with large datasets.

- 🔑 Proper selection and tuning of 'k' can lead to improved performance and accuracy in predictions.

Q & A

What is K-Nearest Neighbors (KNN)?

-K-Nearest Neighbors (KNN) is a supervised learning algorithm used for classification and regression that classifies data points based on their proximity to other data points in the training dataset.

What are the main types of machine learning mentioned in the script?

-The main types of machine learning mentioned are supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

How does KNN determine the class of a new data point?

-KNN determines the class of a new data point by finding the K nearest neighbors in the training data and assigning the class based on the majority class of those neighbors.

What are some distance metrics used in KNN?

-Some distance metrics used in KNN include Euclidean distance and Manhattan distance.

Why is the choice of K important in KNN?

-The choice of K is important because it significantly affects the accuracy and performance of the KNN algorithm. A small K can lead to sensitivity to noise, while a large K may oversimplify the model.

What is the significance of using odd values for K?

-Using odd values for K helps to avoid ambiguity in classification when two classes are equally represented among the nearest neighbors.

Can KNN be used for regression tasks?

-Yes, KNN can be used for regression tasks by predicting the value of a new data point based on the average (or weighted average) of the values of its nearest neighbors.

What are some potential applications of KNN mentioned in the script?

-Potential applications of KNN mentioned include determining loan eligibility based on age and loan amount, sizing clothing based on height and weight, and classifying diseases such as diabetes.

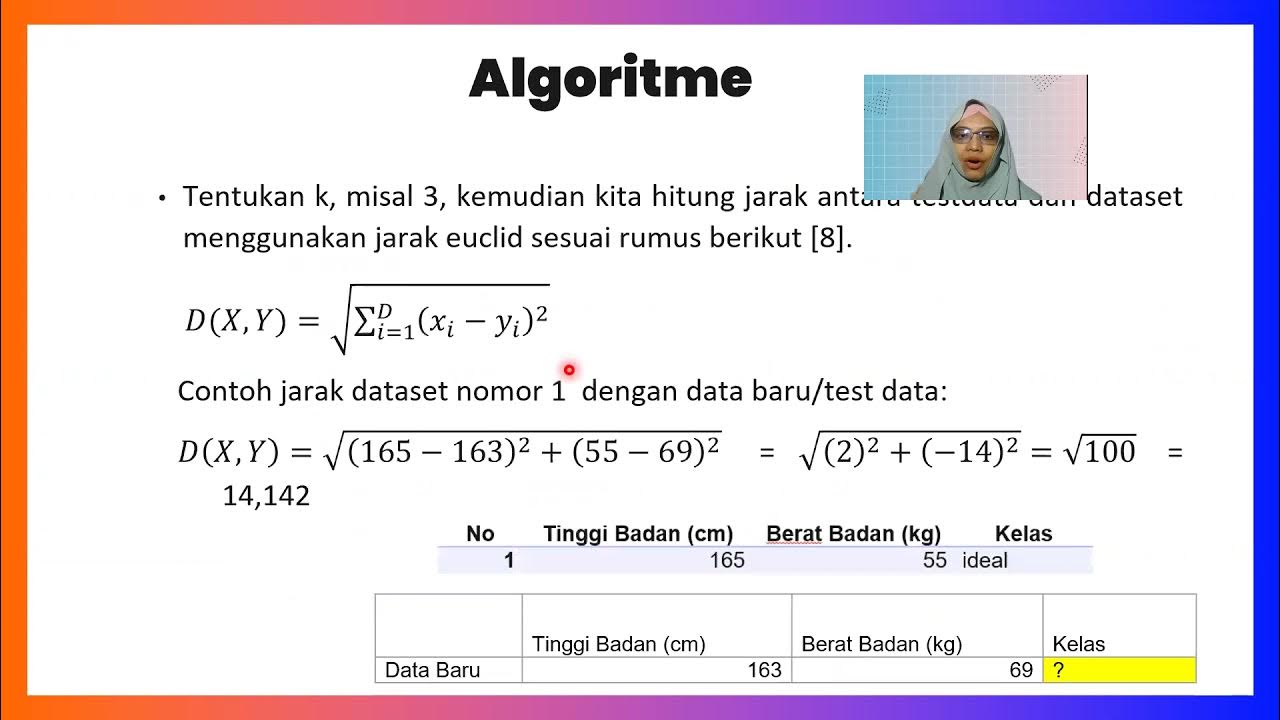

What is the process to classify a new data point using KNN?

-To classify a new data point using KNN, first determine the value of K, then calculate the distances from the new data point to all training data points, sort them by distance, and finally determine the class based on the majority class of the K nearest neighbors.

What are some strengths and weaknesses of KNN?

-Strengths of KNN include its simplicity and ease of interpretation. Weaknesses include high computational cost due to distance calculations, and it does not learn from the data, which can lead to performance issues if K is not chosen correctly.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

K Nearest Neighbors | Intuitive explained | Machine Learning Basics

K-nearest Neighbors (KNN) in 3 min

Lecture 3.4 | KNN Algorithm In Machine Learning | K Nearest Neighbor | Classification | #mlt #knn

K-Nearest Neighbors Classifier_Medhanita Dewi Renanti

All Learning Algorithms Explained in 14 Minutes

Image Recognition Using KNN

5.0 / 5 (0 votes)