YOLOv7 | Instance Segmentation on Custom Dataset

Summary

TLDR: In this tutorial, Arohi demonstrates how to perform instance segmentation using the YOLO v7 model. She guides viewers through setting up the environment with specific Python, Torch, and CUDA versions, cloning the YOLO v7 and Detectron 2 repositories, and installing the necessary modules. Arohi then explains how to convert a custom dataset into the COCO dataset format required by YOLO v7, register the dataset, and configure the model for training. The video concludes with a demonstration of the instance segmentation model's output, highlighting the need for more training epochs to improve mask accuracy.

Takeaways

- 😀 The video is a tutorial on performing instance segmentation using the YOLO v7 model.

- 🔧 The presenter uses specific software versions: Python 3.8, PyTorch 1.8.1, CUDA 11.1, and demonstrates on an RTX 3090 GPU.

- 🌐 The tutorial relies on a GitHub repository for the YOLO v7 model and requires cloning it to access the necessary files.

- 🔗 A secondary GitHub repository for Detectron 2 is also necessary, as YOLO v7 is built upon Detectron 2 modules.

- 📂 The video walks through the process of setting up the environment by installing Detectron 2 and additional required modules.

- 📁 The presenter creates a 'datasets' folder within the YOLO v7 directory to organize the dataset for the instance segmentation task.

- 🎓 The dataset used is not in COCO format, so the video includes a step to convert annotation files into the COCO dataset format.

- 🛠️ A custom function is written to facilitate the conversion of the dataset into the COCO format, which is essential for YOLO v7 to process the data.

- 🔄 The video demonstrates the registration of the dataset within the YOLO v7 framework, a critical step for the model to utilize the data.

- 🏁 The final steps include training the model with a custom configuration file and testing the instance segmentation on new images.

Q & A

What is the main topic of the video?

-The main topic of the video is performing instance segmentation using the YOLO v7 model.

What are the key features of the YOLO v7 model for instance segmentation?

-The YOLO v7 model for instance segmentation predicts both bounding boxes and segmentation masks around the detected objects.

What are the software versions and hardware specifications mentioned in the video?

-The video mentions using Python 3.8, PyTorch 1.8.1, and CUDA 11.1, with an RTX 3090 GPU as the hardware.
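
A quick way to compare a local environment against these versions is sketched below. This is not taken from the video: `environment_report` is a hypothetical helper name, and the PyTorch import is treated as optional so the function still runs on a machine where PyTorch is not installed.

```python
import platform


def environment_report():
    """Collect the Python, PyTorch, and CUDA details relevant to the setup."""
    report = {"python": platform.python_version(), "torch": None}
    try:
        import torch  # optional: only present once the environment is set up
    except ImportError:
        return report
    report["torch"] = torch.__version__
    # CUDA version PyTorch was built against (None for CPU-only builds).
    report["cuda_build"] = torch.version.cuda
    # Name of the first visible GPU, if any.
    report["gpu"] = (
        torch.cuda.get_device_name(0) if torch.cuda.is_available() else None
    )
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```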

Why is the Detectron 2 repository important for setting up YOLO v7?

-Detectron 2 provides the base modules that the YOLO v7 code used in the video is built on, so it must be installed before that code will run.

How does the video guide the user to clone the YOLO v7 GitHub repository?

-The video instructs the user to clone the YOLO v7 GitHub repository to access the necessary files and folders for the instance segmentation project.

What is the process for preparing the dataset for the YOLO v7 model?

-The process involves downloading the dataset, unzipping it, and placing it in a 'datasets' folder within the YOLO v7 directory. The dataset needs to be in COCO dataset format, so a function is written to convert the annotation files into this format.

How does the video describe the structure of the COCO dataset format?

-The COCO dataset format includes information about images, categories of objects, and annotations detailing the objects in each image, such as the class and bounding box coordinates. For instance segmentation, each annotation also carries the object's segmentation outline.
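
As a rough illustration of that structure, here is a minimal COCO-style dictionary with one image, one category, and one annotation. The file name, category name, and coordinates are made-up placeholders, not values from the video's dataset.

```python
import json

# Minimal COCO-style structure: three top-level lists linked by ids.
coco = {
    "images": [
        {"id": 1, "file_name": "example.jpg", "width": 640, "height": 480}
    ],
    "categories": [{"id": 1, "name": "object"}],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,      # refers to images[].id
            "category_id": 1,   # refers to categories[].id
            "bbox": [100.0, 120.0, 200.0, 150.0],  # [x, y, width, height]
            # Polygon as a flat list: x1, y1, x2, y2, ...
            "segmentation": [
                [100.0, 120.0, 300.0, 120.0, 300.0, 270.0, 100.0, 270.0]
            ],
            "area": 30000.0,
            "iscrowd": 0,
        }
    ],
}

image_ids = {img["id"] for img in coco["images"]}
category_ids = {cat["id"] for cat in coco["categories"]}
# Every annotation must point at an existing image and category.
links_ok = all(
    ann["image_id"] in image_ids and ann["category_id"] in category_ids
    for ann in coco["annotations"]
)

if __name__ == "__main__":
    print(json.dumps(coco, indent=2))
```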

What is the purpose of the function written in the video to convert annotation files?

-The purpose of the function is to convert the dataset's annotation files from their original format into the COCO dataset format required by the YOLO v7 model.
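
The summary does not say what the original annotation format looks like, so the sketch below assumes a hypothetical input: a list of records, each with a file name, image size, and a list of labelled polygons. The function name `to_coco` and the record layout are illustrative only and are not the function written in the video.

```python
def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def to_coco(records, class_names):
    """Convert hypothetical polygon records into a COCO-style dict."""
    # Category ids start at 1 here, a common convention in COCO files.
    cat_ids = {name: i for i, name in enumerate(class_names, start=1)}
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": i, "name": n} for n, i in cat_ids.items()],
    }
    ann_id = 1
    for image_id, rec in enumerate(records, start=1):
        coco["images"].append({
            "id": image_id,
            "file_name": rec["file_name"],
            "width": rec["width"],
            "height": rec["height"],
        })
        for obj in rec["objects"]:
            pts = [(float(x), float(y)) for x, y in obj["polygon"]]
            xs = [x for x, _ in pts]
            ys = [y for _, y in pts]
            coco["annotations"].append({
                "id": ann_id,
                "image_id": image_id,
                "category_id": cat_ids[obj["label"]],
                # COCO boxes are [x, y, width, height].
                "bbox": [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
                # Flatten [(x, y), ...] into [x1, y1, x2, y2, ...].
                "segmentation": [[c for p in pts for c in p]],
                "area": polygon_area(pts),
                "iscrowd": 0,
            })
            ann_id += 1
    return coco
```

The result can be written out with `json.dump` to produce the annotation file that the training code reads.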

How does the video suggest registering the dataset with the YOLO v7 model?

-The video suggests registering the dataset by using a function within a loop that calls Detectron 2's 'DatasetCatalog.register' with the necessary arguments, so that the training code can look up each dataset split by name.
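
A minimal sketch of such a registration loop follows. It uses Detectron2's `register_coco_instances` helper, which calls `DatasetCatalog.register` internally, rather than reproducing the video's exact code. The split names, the `my_dataset` prefix, and the `annotations.json` / `images` folder layout are assumptions made for illustration.

```python
import os


def build_splits(root, splits=("train", "val"), prefix="my_dataset"):
    """Map a dataset name to (annotation file, image folder) per split.

    The folder layout used here is an assumption, not the video's layout.
    """
    return {
        f"{prefix}_{split}": (
            os.path.join(root, split, "annotations.json"),
            os.path.join(root, split, "images"),
        )
        for split in splits
    }


def register_all(splits):
    """Register every split with Detectron2 (requires detectron2 installed)."""
    from detectron2.data.datasets import register_coco_instances

    for name, (json_file, image_root) in splits.items():
        # The empty dict is extra metadata; class names are read from the JSON.
        register_coco_instances(name, {}, json_file, image_root)


if __name__ == "__main__":
    register_all(build_splits(os.path.join("datasets", "my_dataset")))
```

The registered names are what the configuration file then refers to as its training and test datasets.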

What configuration changes are made in the video for training the YOLO v7 model?

-The video shows creating a new configuration file in a specific folder, adjusting the dataset paths, specifying the number of classes, and setting the number of GPUs to be used for training the model.

How does the video demonstrate testing the trained YOLO v7 model?

-The video demonstrates testing the model by running a custom 'my_demo.py' script, using the trained weights, a configuration file, and an input image to perform instance segmentation and display the results.

What is the significance of the threshold value mentioned in the video?

-The threshold value sets the minimum confidence score a prediction must reach to be kept, so it controls which detected objects and masks appear in the output.
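
The effect of the threshold can be illustrated with a small, library-free sketch. The prediction records and the `filter_by_score` name are hypothetical and do not reflect the demo script's actual data structures.

```python
def filter_by_score(predictions, threshold=0.5):
    """Keep only predictions whose confidence meets the threshold."""
    return [p for p in predictions if p["score"] >= threshold]


# Hypothetical predictions: each has a label and a confidence score.
predictions = [
    {"label": "object", "score": 0.92},
    {"label": "object", "score": 0.55},
    {"label": "object", "score": 0.30},
]

if __name__ == "__main__":
    for t in (0.25, 0.5, 0.9):
        kept = filter_by_score(predictions, t)
        print(f"threshold={t}: {len(kept)} instance(s) kept")
```

Lowering the threshold shows more instances, including weaker ones; raising it hides everything except the most confident detections.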

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

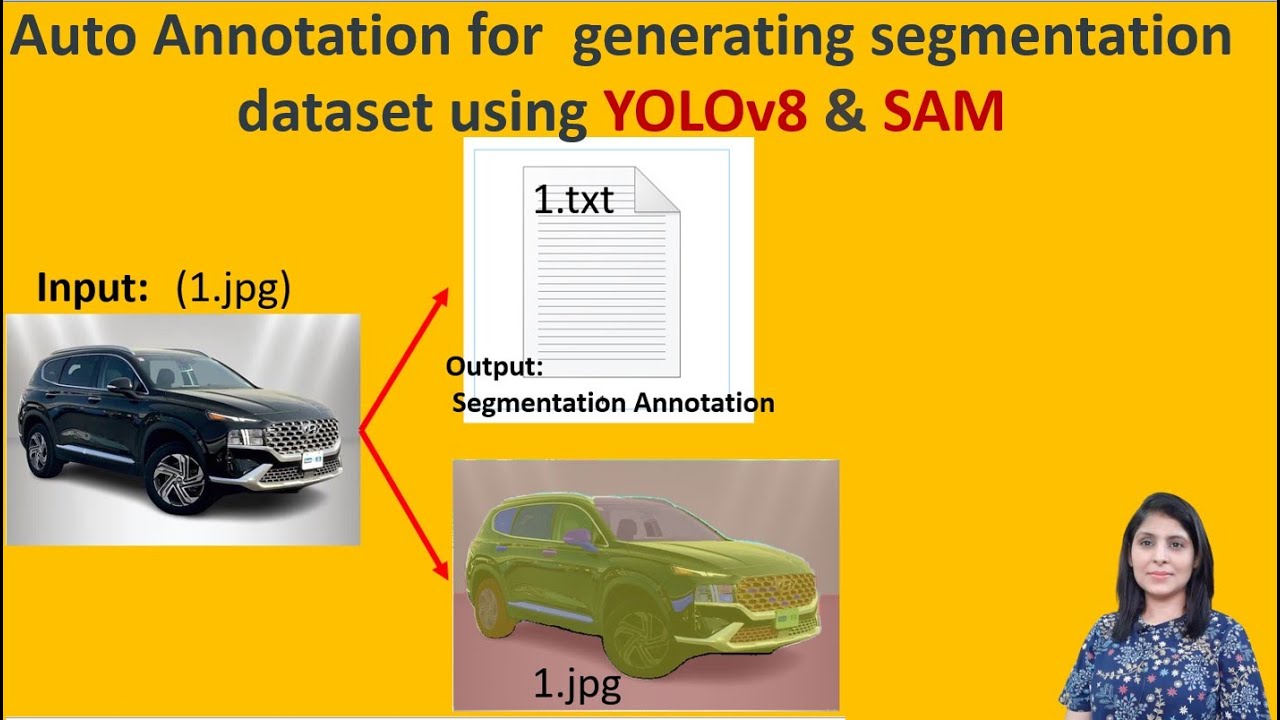

Auto Annotation for generating segmentation dataset using YOLOv8 & SAM

Auto Image Segmentation using YOLO11 and SAM2

Automatic number plate recognition (ANPR) with Yolov9 and EasyOCR

YOLO World Training Workflow with LVIS Dataset and Guide Walkthrough | Episode 46

YOLO-World: Real-Time, Zero-Shot Object Detection Explained

Image classification + feature extraction with Python and Scikit learn | Computer vision tutorial

5.0 / 5 (0 votes)