Auto Image Segmentation using YOLO11 and SAM2

Summary

TLDR: In this video, B Hammed demonstrates how to perform auto image segmentation by combining Meta AI's SAM 2 model with YOLOv11 for object detection. The approach automates segmentation without manual mask annotation: YOLOv11 is trained for object detection, and its detections are then passed to SAM 2, which segments the detected objects. The tutorial is particularly useful for those who want to add automated segmentation to computer vision projects without training a custom segmentation model or annotating masks by hand.

Takeaways

- 😀 The video introduces auto image segmentation using Meta's SAM 2 model together with YOLOv11 for object detection.

- 😀 The script explains that traditional manual image segmentation can be avoided by using auto image segmentation with pre-trained models.

- 😀 The SAM 2 model creates segmentation masks automatically for objects in an image, without the need for custom model training.

- 😀 YOLOv11 is used for object detection: it draws bounding boxes around objects in an image, and those boxes are then passed to the SAM 2 model for segmentation.

- 😀 The process runs in two stages: object detection with YOLOv11 first, then the bounding box results are passed to SAM 2 to create a mask for each box.

- 😀 SAM 2 is a segmentation model that generates accurate masks for the detected objects once object detection has been completed.

- 😀 Users are guided through a Google Colab notebook setup to run this process on custom data, using the Ultralytics framework for segmentation.

- 😀 The video covers data preparation, including downloading a dataset from the Roboflow Universe platform in YOLOv11 format for object detection.

- 😀 The training process for YOLOv11 is explained, with an emphasis on using a GPU to speed up training and on evaluation metrics such as mean average precision (mAP).

- 😀 Once object detection is completed, the SAM 2 model is used to create segmentation masks, refining the bounding box detections into fine-grained, pixel-level segmentation.

Q & A

What is the focus of this video?

-The video focuses on performing auto image segmentation using Meta AI's SAM 2 model and the YOLOv11 detection model. The tutorial shows how to automatically segment images without the need for manual annotation or training custom models.

How does the segmentation process work in this tutorial?

-In this tutorial, the process starts with object detection using the YOLOv11 model, which detects objects and creates bounding boxes. These detection results are then passed to the SAM 2 model, which segments the objects by creating a mask over the detected area.
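
The two-stage flow described above can be sketched roughly as follows. This is a minimal sketch, assuming the Ultralytics Python package (which exposes both a `YOLO` and a `SAM` class); it is not the notebook code from the video. The weight names `yolo11n.pt` and `sam2_b.pt`, the confidence threshold, and the helper names `filter_boxes` and `detect_then_segment` are illustrative assumptions. In the tutorial's setting, `det_weights` would point at the custom-trained detector checkpoint instead.

```python
def filter_boxes(boxes, scores, min_conf=0.25):
    """Keep [x1, y1, x2, y2] boxes whose confidence is at least min_conf."""
    return [list(b) for b, s in zip(boxes, scores) if s >= min_conf]


def detect_then_segment(image_path, det_weights="yolo11n.pt",
                        sam_weights="sam2_b.pt", min_conf=0.25):
    """Detect objects, then prompt SAM 2 with the detected boxes.

    Weight names and threshold are placeholder assumptions.
    """
    # Imported lazily so filter_boxes works without ultralytics installed.
    from ultralytics import SAM, YOLO

    detector = YOLO(det_weights)
    det = detector(image_path)[0]        # one Results object per input image
    boxes = det.boxes.xyxy.tolist()      # pixel-space corner coordinates
    scores = det.boxes.conf.tolist()     # one confidence score per box

    prompts = filter_boxes(boxes, scores, min_conf)
    if not prompts:
        return det, None                 # nothing detected, nothing to segment

    segmenter = SAM(sam_weights)
    seg = segmenter(image_path, bboxes=prompts)[0]
    return det, seg                      # seg.masks holds the predicted masks
```

Filtering low-confidence boxes before prompting is a design choice of this sketch: SAM 2 will produce a mask for whatever box it is given, so weak detections would otherwise turn into confident-looking masks.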

What is the SAM 2 model used for in this video?

-The SAM 2 model is used for performing automatic segmentation of objects in an image. It generates masks to segment out specific objects without the need for manually annotated data.

What is the role of YOLOv11 in this process?

-YOLOv11 is used for object detection, where it identifies and locates objects in an image by creating bounding boxes. These detection results are then passed to the SAM 2 model to perform segmentation on the detected objects.

What kind of dataset is used in this tutorial?

-The tutorial uses a custom dataset of brain tumor images. It includes classes such as meningioma and glioma, among others, and is available for download from the Roboflow Universe platform.

How can one obtain the dataset for this tutorial?

-To obtain the dataset, you need to create a Roboflow account and open the Universe platform. From there, you can search for the dataset and download it in YOLOv11 format for object detection tasks.
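
For readers unfamiliar with the download format, here is a small sketch of how one annotation line can be read. It assumes the export follows the usual YOLO detection label convention, where each line of a label text file is `class_id x_center y_center width height` with coordinates normalized to the image size. The function name `yolo_label_to_xyxy` and the sample values are hypothetical.

```python
def yolo_label_to_xyxy(line, img_w, img_h):
    """Convert one normalized YOLO label line to pixel corner coordinates."""
    cls, cx, cy, w, h = line.split()
    # Scale the normalized centre and size up to pixels.
    cx, w = float(cx) * img_w, float(w) * img_w
    cy, h = float(cy) * img_h, float(h) * img_h
    # Centre/size -> top-left and bottom-right corners.
    return int(cls), (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# Made-up label for a 640x480 image: class 1, centred, 160 px wide, 240 px tall.
class_id, box = yolo_label_to_xyxy("1 0.5 0.5 0.25 0.5", 640, 480)
print(class_id, box)  # 1 (240.0, 120.0, 400.0, 360.0)
```

The corner format produced here is the same `x1, y1, x2, y2` layout that a box prompt for a segmentation model typically expects.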

What is the purpose of using Google Colab in this tutorial?

-Google Colab is used to run the entire process in a cloud-based environment with access to GPU resources. The notebook allows users to download the dataset, train the models, and perform the object detection and segmentation tasks.

What is the importance of using a GPU for training the model?

-Using a GPU accelerates the training process, making it faster and more efficient, especially when working with large datasets or complex models like YOLOv11 and SAM 2.
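
A training run with GPU selection might look roughly like the sketch below. It assumes the Ultralytics `YOLO` class and a `data.yaml` file from the dataset export; the epoch count, image size, base weights `yolo11n.pt`, and the helper names `build_train_args` and `train_detector` are illustrative assumptions rather than the settings used in the video.

```python
def build_train_args(data_yaml, epochs=50, imgsz=640, use_gpu=True):
    """Collect keyword arguments for a training run (values are placeholders)."""
    return {
        "data": data_yaml,                   # dataset config from the export
        "epochs": epochs,
        "imgsz": imgsz,
        "device": 0 if use_gpu else "cpu",   # 0 selects the first CUDA GPU
    }


def train_detector(data_yaml, base_weights="yolo11n.pt", **overrides):
    """Fine-tune a detector and report mAP on the validation split."""
    # Imported lazily so build_train_args works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(base_weights)
    model.train(**build_train_args(data_yaml, **overrides))
    metrics = model.val()
    # map50 is mAP at IoU 0.50; map is averaged over IoU 0.50 to 0.95.
    return model, metrics.box.map50, metrics.box.map
```

In a Colab session the GPU runtime has to be enabled first; passing `use_gpu=False` falls back to CPU training, which works but is considerably slower.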

What happens after training the YOLOv11 model?

-After training the YOLOv11 model, the detection results are used as input for the SAM 2 model, which performs the segmentation by creating masks over the detected objects in the image.

Can the SAM 2 model be used for other kinds of object detection tasks?

-Yes. SAM 2 does not detect objects itself, but it can generate segmentation masks for objects found by any detector, as long as the object detection step (such as with YOLOv11) is performed first and the resulting bounding boxes are passed to SAM 2.

Upgrade NowBrowse More Related Video

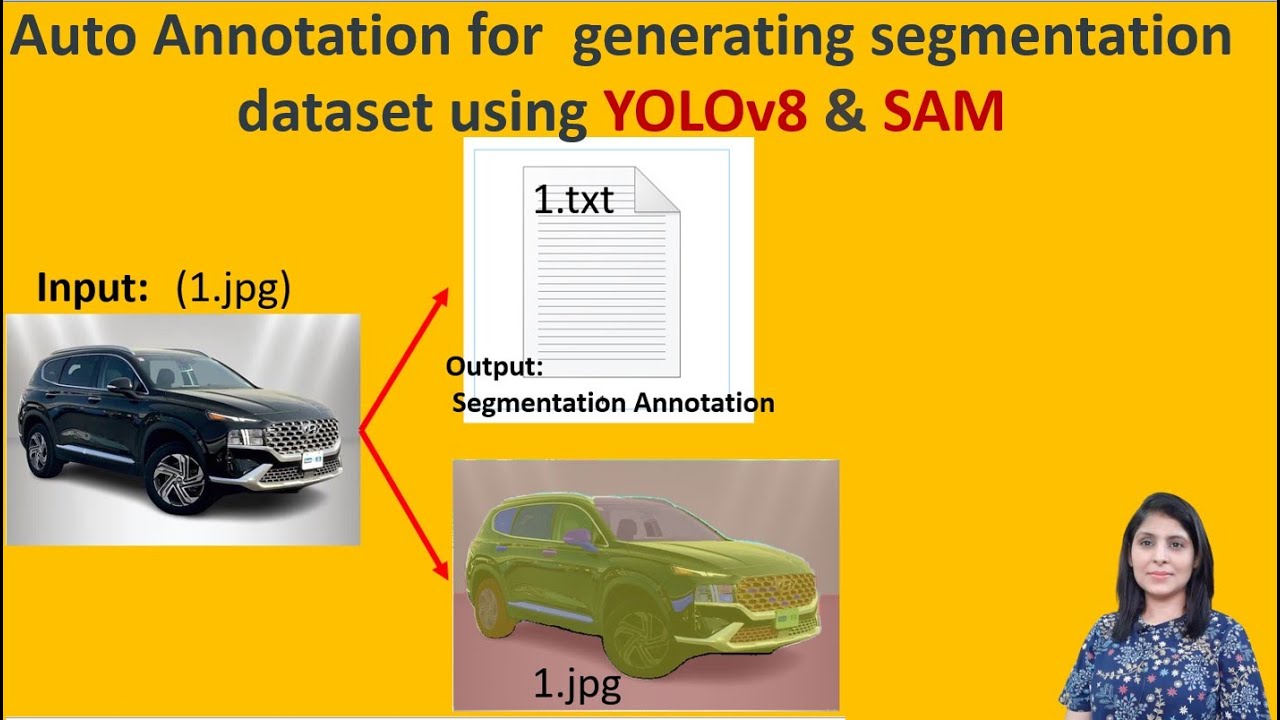

Auto Annotation for generating segmentation dataset using YOLOv8 & SAM

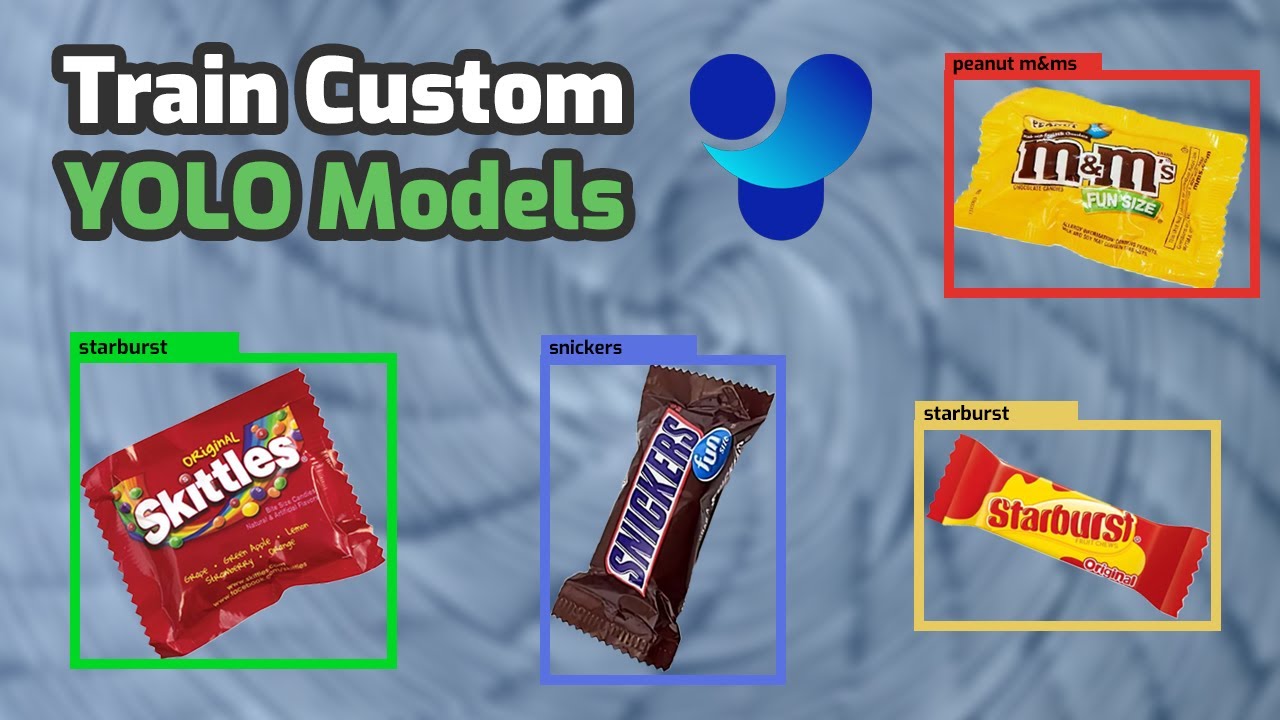

How to Choose the Best Computer Vision Model for Your Project

How to Train YOLO Object Detection Models in Google Colab (YOLO11, YOLOv8, YOLOv5)

26 - Denoising and edge detection using opencv in Python

PaliGemma by Google: Train Model on Custom Detection Dataset

Tutorial Geobia for ArcGIS

5.0 / 5 (0 votes)