Part 1- End to End Azure Data Engineering Project | Project Overview

Summary

TLDRThis video tutorial offers a comprehensive guide to a real-time data engineering project using Azure technologies. It covers the end-to-end process, from data ingestion with Azure Data Factory to transformation with Azure Databricks, and storage in Azure Data Lake. The project demonstrates the Lake House architecture, including bronze, silver, and gold data layers, culminating in analysis with Azure Synapse Analytics and reporting with Power BI. It also addresses security and governance with Azure Active Directory and Azure Key Vault, providing a complete understanding of building and automating a data platform solution.

Takeaways

- 📊 This video covers a complete end-to-end data engineering project using Azure technologies.

- 🔧 The project demonstrates how to use various Azure resources like Azure Data Factory, Azure Synapse Analytics, Azure Databricks, Azure Data Lake, Azure Active Directory, Azure Key Vault, and Power BI.

- 💻 The use case involves migrating data from an on-premise SQL Server database to the cloud using Azure services.

- 🏞️ The project implements the lakehouse architecture, which includes organizing data into bronze, silver, and gold layers in Azure Data Lake.

- 🚀 Azure Data Factory is used to connect to the on-premise SQL Server and copy data into Azure Data Lake Gen2.

- 🔄 Azure Databricks is utilized for data transformation tasks, converting raw data into curated formats stored in different layers.

- 📚 Azure Synapse Analytics is employed to replicate the database and tables from the on-premise SQL Server and load the curated data for further analysis.

- 📈 Power BI is used for creating reports and visualizations from the data stored in Azure Synapse Analytics.

- 🔒 Security and governance are managed using Azure Active Directory and Azure Key Vault for identity management and storing sensitive information.

- 🧩 The project is structured into multiple parts: environment setup, data ingestion, data transformation, data loading, data reporting, and end-to-end pipeline testing.

Q & A

What is the main focus of the video?

-The main focus of the video is to demonstrate a complete end-to-end data engineering project using Azure technologies.

What is the purpose of using Azure Data Factory in this project?

-Azure Data Factory is used for data ingestion, connecting to the on-premise SQL Server database, copying tables, and moving the data to the cloud.

What is the role of Azure Data Lake in the project?

-Azure Data Lake is used as a storage solution to store the data copied from the on-premise SQL Server database by Azure Data Factory.

How does Azure Databricks contribute to the project?

-Azure Databricks is used for data transformation, allowing data engineers to write code in SQL, PySpark, or Python to transform the raw data into a more curated form.

What is the concept of lake house architecture mentioned in the script?

-Lake house architecture refers to the organization of data in layers within the data lake, such as bronze, silver, and gold layers, each representing different levels of data transformation.

What transformations occur in the bronze layer?

-The bronze layer in the data lake holds an exact copy of the data from the data source without any changes in format or data types, serving as the source of truth.

What is the purpose of the silver and gold layers in the data lake?

-The silver layer is for the first level of data transformation, such as changing column names or data types, while the gold layer is for the final, most curated form of data after all transformations are completed.

How does Azure Synapse Analytics relate to the on-premise SQL Server database?

-Azure Synapse Analytics serves a similar purpose to the on-premise SQL Server database, allowing the creation of databases and tables to store and manage the transformed data.

What is the role of Power BI in the project?

-Power BI is used for data reporting, allowing data analysts to create various types of reports and visualizations based on the data loaded into Azure Synapse Analytics.

What security and governance tools are mentioned in the script?

-Azure Active Directory for identity and access management, and Azure Key Vault for securely storing and retrieving secrets like usernames and passwords are mentioned as security and governance tools.

What is the main task of data engineers in automating the data platform solution?

-The main task of data engineers is to automate the entire data platform solution through pipelines, ensuring that any new data added to the source is automatically processed and reflected in the end reports.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

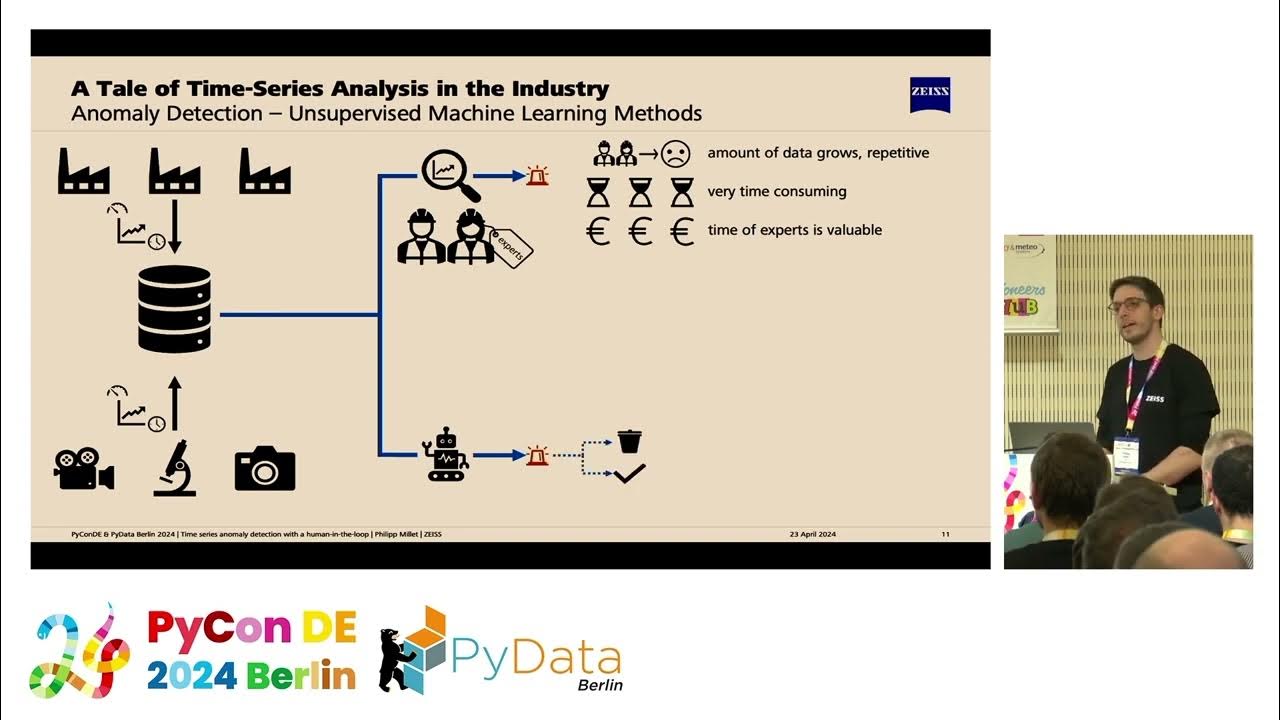

Time series anomaly detection with a human-in-the-loop [PyCon DE & PyData Berlin 2024]

Machine Learning & Data Science Project - 1 : Introduction (Real Estate Price Prediction Project)

Olympic Data Analytics | Azure End-To-End Data Engineering Project | Part 2

DMF Package API Import pattern calls

Azure DevOps Boards for Project Managers / Analyst (VSTS/ TFS) for beginners - Step by Step.

"Azure Synapse Analytics Q&A", 50 Most Asked AZURE SYNAPSE ANALYTICS Interview Q&A for interviews !!

5.0 / 5 (0 votes)