Statistical Optimization (Optimisasi Statistika) - Lecture 6, Part 2

Summary

TLDR: The video walks step by step through the descent algorithm (steepest descent, since the search direction is the negative gradient) and explains how it minimizes a function iteratively. It covers choosing an initial point, computing the gradient, and finding the optimal step size (lambda) along the search direction. Using worked examples, the presenter shows how to evaluate the function and its gradient, update the point, and check for convergence. The calculation is carried through several iterations, and the presenter explains when to stop according to a convergence criterion. The content is practical for anyone who wants to understand or apply this optimization technique.
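For reference, one iteration of the procedure can be written compactly as follows. The subscript notation is an editorial choice and may differ from the presenter's exact symbols: X_i is the point, S_i the search direction, and λ_i^* the step size at iteration i. The loop stops when the gradient at the new point is (numerically) zero, or when the change between iterations falls below a tolerance.

```latex
\begin{aligned}
S_i &= -\nabla f(X_i), \\
\lambda_i^* &= \arg\min_{\lambda} f(X_i + \lambda S_i),
  \quad\text{found from } \frac{d}{d\lambda} f(X_i + \lambda S_i) = 0, \\
X_{i+1} &= X_i + \lambda_i^* S_i .
\end{aligned}
```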

Takeaways

- 😀 The process begins by choosing the initial point X1 (the iterate at step 1, a vector with components x1 and x2), which serves as the starting point of the algorithm.

- 😀 The next step is to calculate the direction (S) for the first iteration, which involves finding the gradient of the function.

- 😀 To get the gradient, take the partial derivatives of the function with respect to x1 and x2 and evaluate them at the initial point.

- 😀 The gradient is then negated (multiplied by -1) to obtain the search direction, S1 = -∇f(X1).

- 😀 The next key step is to find the optimal step size λ*, which is the distance to move along the search direction.

- 😀 To find it, substitute X1 + λS1 into the function so that it depends on λ alone. Then set the first derivative with respect to λ to zero and solve for λ.

- 😀 With λ* known, the new point is X2 = X1 + λ*S1, which updates both x1 and x2.

- 😀 After updating x1 and x2, the algorithm checks whether the new point is optimal by evaluating the gradient there.

- 😀 If the gradient is not zero, the algorithm continues to the next iteration from the updated point.

- 😀 The process repeats until the algorithm converges, meaning the changes between iterations are sufficiently small or meet a predefined tolerance for convergence.
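The takeaways above can be sketched end to end in Python. This is a minimal sketch under stated assumptions, not the lecture's worked example. The function f(x1, x2) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2 is hypothetical (a common textbook exercise). Because f is quadratic, setting the λ-derivative to zero has the closed form λ* = -(g^T S)/(S^T A S), where A is the constant Hessian of f. The names `steepest_descent`, `grad`, and the tolerance value are illustrative.

```python
# Minimal steepest-descent sketch with exact line search.
# ASSUMPTION: f is a hypothetical quadratic example, not the lecture's function.

def f(x):
    x1, x2 = x
    return x1 - x2 + 2 * x1**2 + 2 * x1 * x2 + x2**2


def grad(x):
    # Partial derivatives df/dx1 and df/dx2 of the example f
    x1, x2 = x
    return [1 + 4 * x1 + 2 * x2, -1 + 2 * x1 + 2 * x2]


# Constant Hessian of the example f (valid only because f is quadratic)
A = [[4.0, 2.0], [2.0, 2.0]]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def steepest_descent(x, tol=1e-6, max_iter=100):
    """Return (approximate minimizer, number of updates performed)."""
    for k in range(max_iter):
        g = grad(x)
        # Optimality check: stop when the gradient norm is (numerically) zero
        if dot(g, g) ** 0.5 <= tol:
            return x, k
        s = [-gi for gi in g]                        # S_i = -grad f(X_i)
        lam = -dot(g, s) / dot(s, matvec(A, s))      # lambda* from d/dlambda f(X_i + lambda S_i) = 0
        x = [xi + lam * si for xi, si in zip(x, s)]  # X_{i+1} = X_i + lambda* S_i
    return x, max_iter


x_opt, n_updates = steepest_descent([0.0, 0.0])
print(x_opt, n_updates, f(x_opt))
```

Starting from (0, 0), the first step gives λ* = 1 and X2 = (-1, 1). The iterates then zigzag toward the exact minimizer (-1, 1.5), which is typical behavior for steepest descent.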

Q & A

What is the first step in the algorithm described in the transcript?

-The first step is to determine the initial point X1 (that is, X at step 1), which is often written as 'ep' to avoid confusing it with the variable x1 in later steps.

What does the algorithm require after determining the initial value of X1?

-The algorithm then requires the search direction, denoted S_i. It is obtained by computing the gradient of the function f(x) (its partial derivatives) and multiplying it by -1.

How is the search direction S_i calculated?

-The direction S_i is calculated by taking the partial derivatives of f(x) with respect to x1 and x2, evaluating them at the current point (the initial point in the first iteration), and multiplying the result by -1.
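The direction computation can be sketched as follows. The example function f(x1, x2) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2 and the helper names are hypothetical. The partial derivatives are written out by hand for this f.

```python
# ASSUMPTION: hypothetical example f(x1, x2) = x1 - x2 + 2*x1**2 + 2*x1*x2 + x2**2

def grad_f(x1, x2):
    # df/dx1 and df/dx2, derived by hand for the assumed f
    return (1 + 4 * x1 + 2 * x2, -1 + 2 * x1 + 2 * x2)


def search_direction(x1, x2):
    # S_i = -grad f(X_i): evaluate the partial derivatives, then multiply by -1
    g1, g2 = grad_f(x1, x2)
    return (-g1, -g2)


print(search_direction(0, 0))  # (-1, 1)
```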

What is the purpose of calculating the optimal step size, denoted as lambda*?

-The optimal step size λ* determines how far to move along the search direction S_i. It is the value of λ that minimizes f(X_i + λS_i), so each iteration achieves the largest decrease in f available along that direction.

How is the optimal lambda value determined?

-The optimal lambda is found by substituting X_i + λS_i into the function, which leaves a function of λ alone. This function is differentiated with respect to λ, the derivative is set equal to 0, and the resulting equation is solved for λ.
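For a quadratic function, the zero-derivative condition can be solved in closed form. Write f(X) = 0.5 X^T A X + b^T X and g_i = ∇f(X_i) = A X_i + b. Along the search line the function becomes φ(λ) = f(X_i) + λ g_i^T S_i + 0.5 λ^2 S_i^T A S_i. Setting φ'(λ) = g_i^T S_i + λ S_i^T A S_i = 0 gives λ* = -(g_i^T S_i)/(S_i^T A S_i).

The sketch below assumes a hypothetical example with A = [[4, 2], [2, 2]] and b = (1, -1), which is equivalent to f(x1, x2) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2, and starts at X1 = (0, 0). The helper names are illustrative. For a non-quadratic f, λ* would instead come from solving φ'(λ) = 0 analytically or from a one-dimensional search.

```python
# ASSUMPTION: hypothetical quadratic f(X) = 0.5 * X^T A X + b^T X with these values.
A = [[4.0, 2.0], [2.0, 2.0]]
b = [1.0, -1.0]


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def gradient(x):
    # grad f(X) = A X + b
    return [ax + bi for ax, bi in zip(matvec(A, x), b)]


def optimal_lambda(x, s):
    # phi'(lambda) = g^T S + lambda * S^T A S = 0  =>  lambda* = -g^T S / S^T A S
    g = gradient(x)
    return -dot(g, s) / dot(s, matvec(A, s))


X1 = [0.0, 0.0]
S1 = [-g for g in gradient(X1)]  # S_1 = -grad f(X_1) = [-1.0, 1.0]
lam = optimal_lambda(X1, S1)
X2 = [xi + lam * si for xi, si in zip(X1, S1)]
print(lam, X2)  # 1.0 [-1.0, 1.0]
```

Here φ(λ) = λ^2 - 2λ, so φ'(λ) = 2λ - 2 = 0 gives λ* = 1, which agrees with the formula.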

What happens if the gradient at a given point is not zero?

-If the gradient at a given point is not zero, the point is not yet optimal. The algorithm therefore performs another iteration, moving toward a point where the gradient is zero (a stationary point, which is the candidate optimum).

What is the significance of checking if the gradient equals zero?

-A zero gradient is the first-order necessary condition for an optimum, so checking it shows whether the current point can be the solution. If the gradient is not zero, the algorithm must continue iterating.

Why has the algorithm not converged after the second iteration in the transcript?

-The algorithm has not converged after the second iteration because the gradient at the new point is still not zero, so the optimum has not yet been reached.
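A zero-gradient check can be sketched as follows. Floating-point arithmetic rarely produces an exact zero, so the test compares the gradient norm with a small tolerance. The tolerance and function names are assumptions. The example function f(x1, x2) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2 is hypothetical. Starting from X1 = (0, 0), one exact line-search step gives X2 = (-1, 1), where the gradient is (-1, -1), so another iteration is required.

```python
import math

# ASSUMPTION: hypothetical example f(x1, x2) = x1 - x2 + 2*x1**2 + 2*x1*x2 + x2**2


def grad_f(x1, x2):
    return (1 + 4 * x1 + 2 * x2, -1 + 2 * x1 + 2 * x2)


def is_stationary(g, tol=1e-8):
    # "Gradient equals zero" is tested numerically as ||g|| <= tol
    return math.hypot(*g) <= tol


g2 = grad_f(-1, 1)  # gradient at X_2 = (-1, 1)
print(g2, is_stationary(g2))  # (-1, -1) False -> continue with S_2 = (1, 1)
```

The exact minimizer of this example is (-1, 1.5), where both partial derivatives vanish and `is_stationary` returns True.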

What method is suggested for determining when to stop the algorithm?

-The algorithm can stop when the difference between the current and previous function values is smaller than a set tolerance, or when the changes in the variables X1 and X2 become very small.
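The stopping rules in this answer can be combined into one check, sketched below. The tolerance values and the name `should_stop` are assumptions. The gradient-norm test is included because the gradient check is also used above as an optimality test. Any one criterion being met ends the loop in this sketch. Other choices are possible, such as requiring all criteria to hold or using a relative change in f.

```python
import math


def should_stop(f_old, f_new, x_old, x_new, grad_new,
                eps_f=1e-6, eps_x=1e-6, eps_g=1e-6):
    """Return True if any convergence test is met (tolerances are assumptions)."""
    # 1. Change in function value between consecutive iterations
    f_test = abs(f_new - f_old) <= eps_f
    # 2. Change in the variables x1, x2 (Euclidean distance between iterates)
    x_test = math.dist(x_new, x_old) <= eps_x
    # 3. Gradient norm close to zero
    g_test = math.hypot(*grad_new) <= eps_g
    return f_test or x_test or g_test


# Hypothetical first step: f drops from 0 to -1 as X moves from (0, 0) to (-1, 1)
print(should_stop(0.0, -1.0, (0.0, 0.0), (-1.0, 1.0), (-1.0, -1.0)))  # False
```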

What is the role of the convergence criterion in the algorithm?

-The convergence criterion determines when the function has been minimized closely enough. The algorithm terminates once the changes in the variable values (or in the function value) fall below the chosen tolerance, which indicates that the iterates have settled close to an optimal point.

Related Videos

Gradient Descent, Step-by-Step

Numerical Example| Learn Cuckoo Search Algorithm Step-by-Step Explanation [3/4] ~xRay Pixy

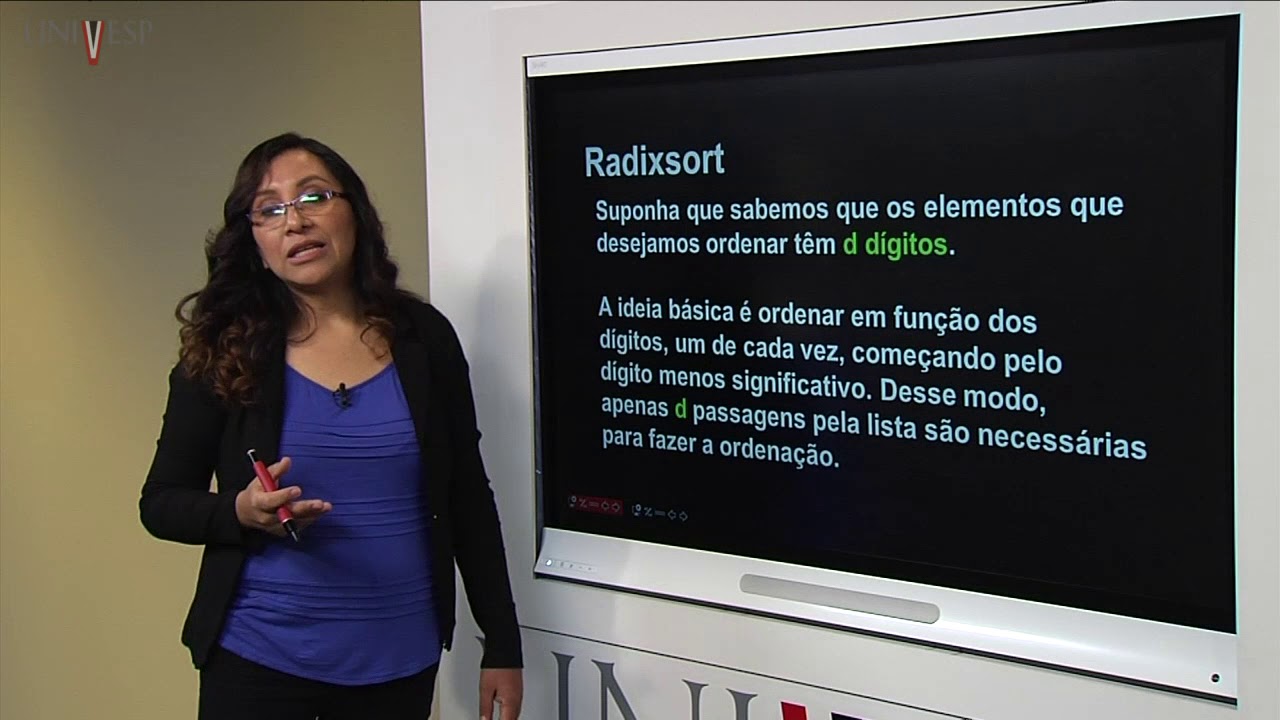

Projeto e Análise de Algoritmos - Aula 10 - Ordenação em tempo linear

Gradient descent simple explanation|gradient descent machine learning|gradient descent algorithm

Feedforward Neural Networks and Backpropagation - Part 1

Penjadwalan Produksi (PPC week 10 - sesi 3)
