Visualizing CNNs

Summary

TLDR: This lecture covers visualization methods for understanding convolutional neural networks (CNNs), focusing on the analysis of kernels (filters) and activations. It shows that the first layers of various CNN models detect edges and textures with filters that resemble Gabor filters, while higher layers capture more abstract features. Techniques such as dimensionality reduction on feature vectors, neuron activation visualization, and occlusion experiments are used to reveal the inner workings of CNNs, giving insight into how they learn features and make decisions.

Takeaways

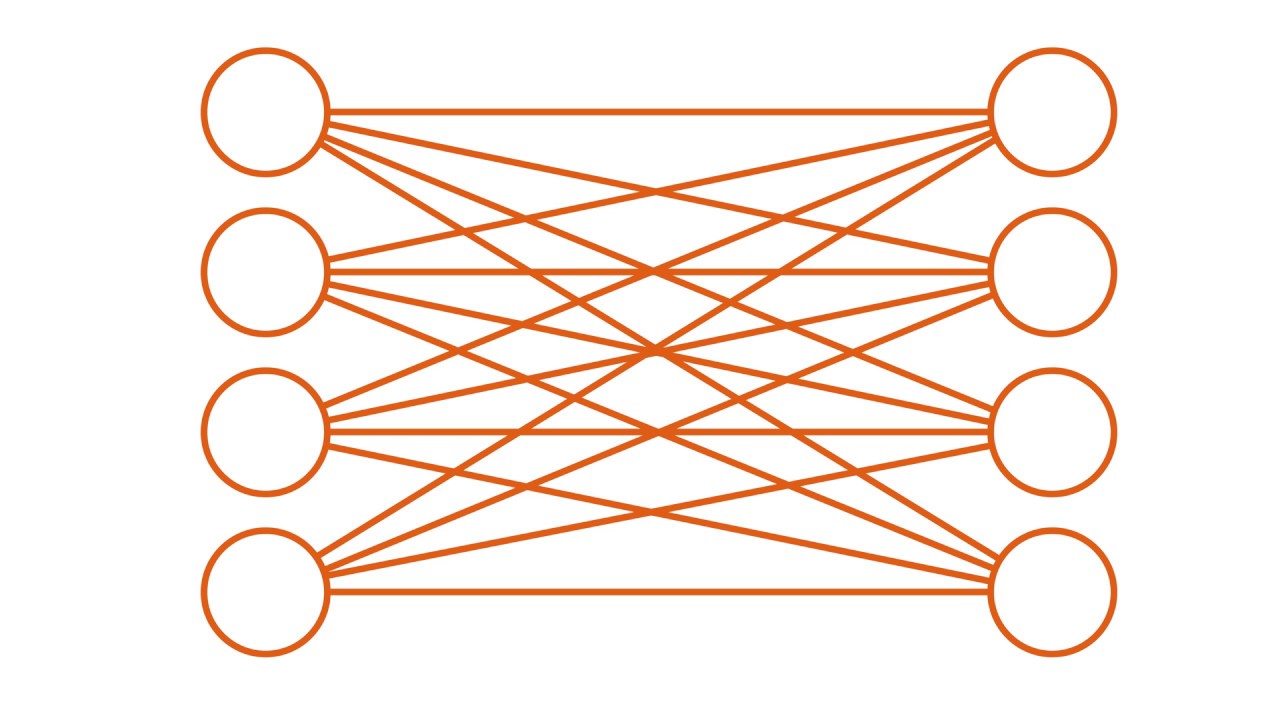

- 🔍 The lecture discusses different visualization methods for understanding how Convolutional Neural Networks (CNNs) process images, focusing on the filters, activations, and representations within the network.

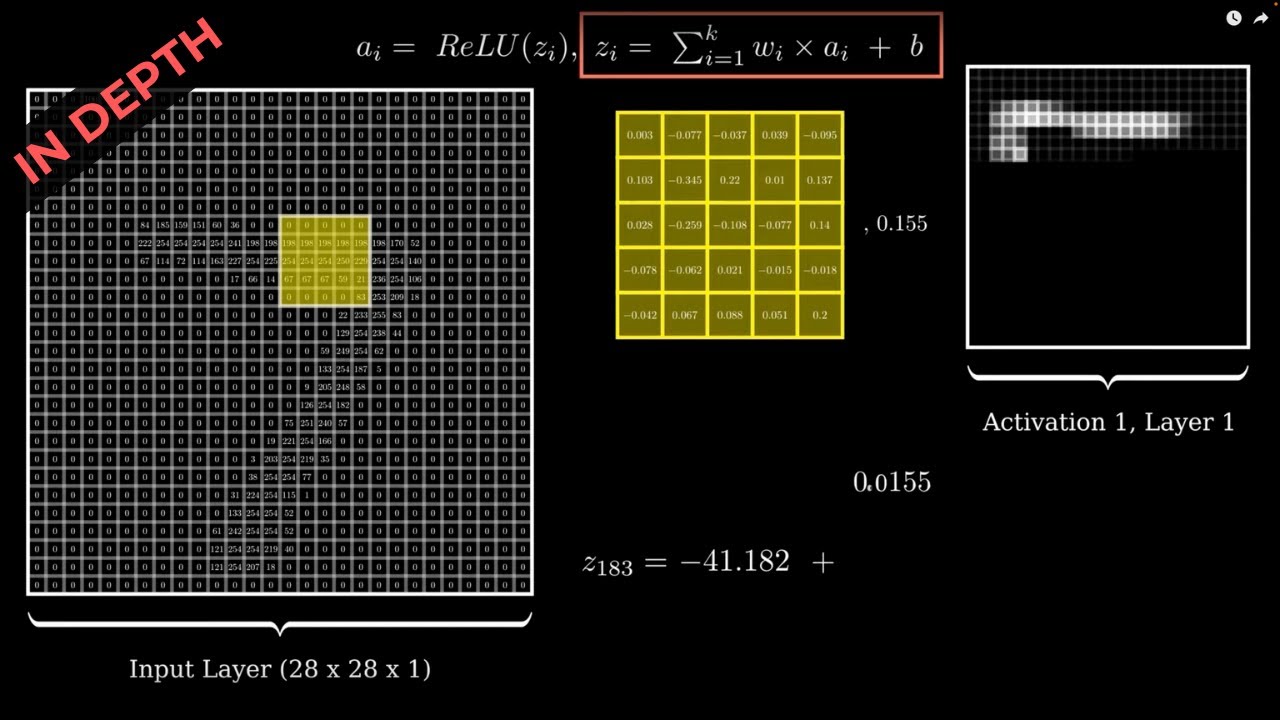

- 👀 Visualizing the filters or kernels in the first convolutional layer of CNNs like AlexNet reveals that they tend to capture oriented edges, color-based edges, and higher-order variations, which are similar across various models like ResNet, DenseNet, and VGG.

- 🌟 Filters in the first layer of CNNs are often Gabor-like, detecting edges and textures in various orientations and colors, which is consistent across different models and datasets.

- 📈 Higher layers of CNNs are more challenging to visualize due to the complexity and variety of features they learn, which may not be as interpretable as the first layer's edge detection.

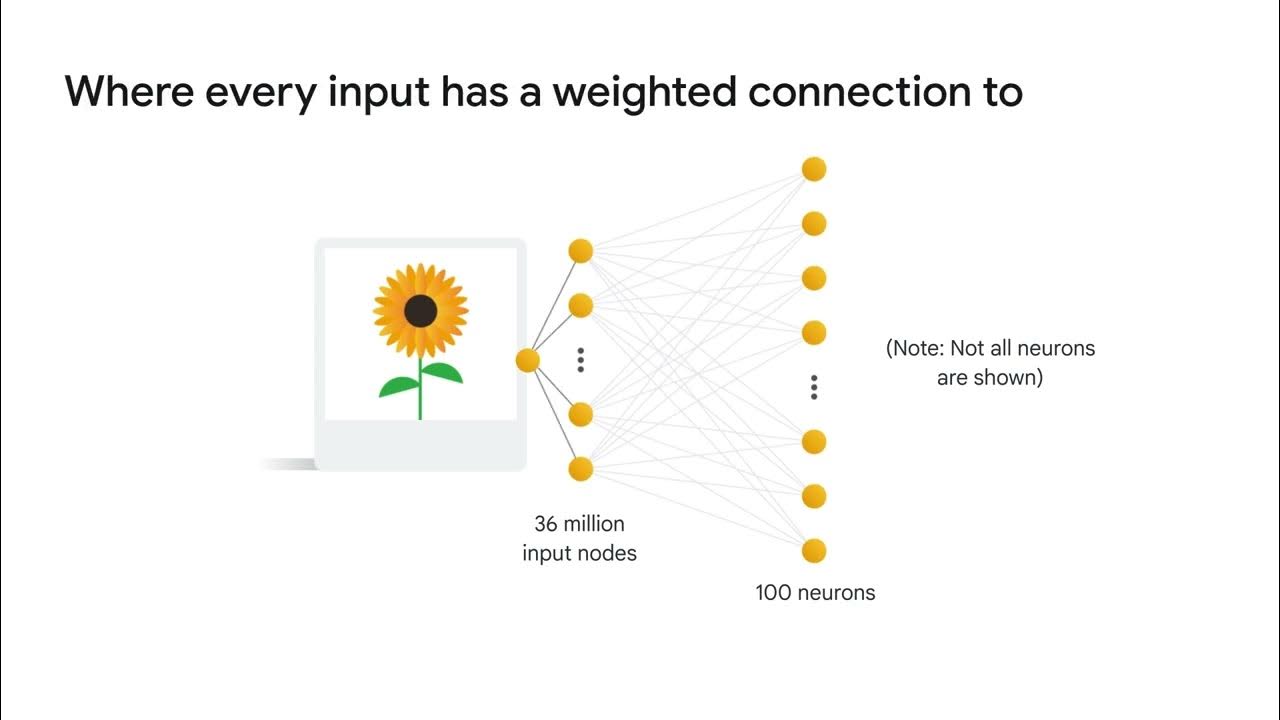

- 📊 Dimensionality reduction techniques like PCA and t-SNE can be applied to the output of the fully connected layer (e.g., FC7 in AlexNet) to visualize the representation space learned by the CNN, showing that different classes are well-separated.

- 📝 The penultimate layer's feature vectors from CNNs like AlexNet can capture semantic information about images, with similar objects grouping together in the reduced dimensional space.

- 👨‍🏫 The lecture references the work of Zeiler and Fergus, which is foundational in visualizing and understanding what CNNs learn from image data.

- 🔬 Occlusion experiments involve covering parts of an image to see how it affects the CNN's prediction, providing insights into which parts of the image the model relies on for classification.

- 🤖 The lecture suggests that each neuron in a CNN learns to fire for specific features or artifacts in the images, contributing to the model's overall understanding and classification capabilities.

- 📚 The lecture recommends further reading and resources, including lecture notes from CS231n at Stanford and a deep visualization toolkit demo by Jason Yosinski, for a deeper understanding of CNN visualization techniques.

- 🛠️ The methods covered in the lecture are 'don't disturb the model' approaches, meaning they utilize the trained model without altering it to gain insights into its learned representations and decision-making process.

Q & A

What is the primary focus of the lecture on visualization methods in CNNs?

- The lecture focuses on visualizing the kernels (filters) of a CNN, the activations in a particular layer, and related techniques for understanding what the network has learned, such as dimensionality reduction on feature vectors and occlusion experiments.

How many filters does the first convolutional layer of AlexNet have, and what is their size?

- The first convolutional layer of AlexNet has 96 filters, each of size 11x11 (11x11x3 when the three color channels are counted).
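
A minimal sketch of how such first-layer filters are typically laid out for viewing: each filter is rescaled to [0, 1] and placed into one image grid. The weights below are random stand-ins with AlexNet's first-layer shape, not trained filters, and the `(n, height, width, channels)` layout and the `tile_filters` helper are assumptions for illustration (some frameworks store weights as `(n, channels, height, width)`).

```python
import numpy as np

def tile_filters(weights, cols=12, pad=1):
    """Arrange conv filters of shape (n, h, w, c) into a single image grid."""
    n, h, w, c = weights.shape
    rows = int(np.ceil(n / cols))
    # Rescale each filter independently to [0, 1] so it can be shown as an image.
    lo = weights.min(axis=(1, 2, 3), keepdims=True)
    hi = weights.max(axis=(1, 2, 3), keepdims=True)
    scaled = (weights - lo) / (hi - lo + 1e-8)
    # White background with `pad` pixels between neighbouring filters.
    grid = np.ones((rows * (h + pad) + pad, cols * (w + pad) + pad, c))
    for i in range(n):
        r, col = divmod(i, cols)
        top = pad + r * (h + pad)
        left = pad + col * (w + pad)
        grid[top:top + h, left:left + w] = scaled[i]
    return grid

# Stand-in weights (assumption): random values shaped like 96 filters of 11x11x3.
rng = np.random.default_rng(0)
conv1 = rng.normal(size=(96, 11, 11, 3))
grid = tile_filters(conv1)
print(grid.shape)  # 8 rows x 12 columns of 11x11 tiles with 1-pixel padding
```

With real trained weights in place of `conv1`, the resulting array can be passed to any image viewer to reproduce the kind of filter grid described in the lecture.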

What is "Gabor-like filter fatigue", and why is it called that?

- "Gabor-like filter fatigue" refers to the observation that first-layer filters end up looking very similar across CNN models and datasets: nearly all of them resemble Gabor filters that detect oriented edges and simple patterns. The word "fatigue" is informal and reflects how repetitive these visualizations become from one model to the next.
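
For reference, a Gabor filter is a sinusoid multiplied by a Gaussian envelope. The sketch below builds a small bank of such kernels at several orientations using the standard textbook formula; the parameter values (`wavelength`, `sigma`, `gamma`) are illustrative assumptions, not values taken from the lecture or from any trained network.

```python
import numpy as np

def gabor_kernel(size=11, theta=0.0, wavelength=5.0, sigma=2.5, gamma=0.5, phase=0.0):
    """Real part of a 2D Gabor filter: Gaussian envelope times a cosine carrier."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so the carrier wave varies along direction theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot**2 + (gamma * y_rot)**2) / (2 * sigma**2))
    carrier = np.cos(2 * np.pi * x_rot / wavelength + phase)
    return envelope * carrier

# Four orientations: 0, 45, 90 and 135 degrees.
thetas = np.linspace(0, np.pi, 4, endpoint=False)
bank = np.stack([gabor_kernel(theta=t) for t in thetas])
print(bank.shape)
```

Each kernel responds most strongly to edges or stripes at its own orientation, which is the behaviour the learned first-layer filters are said to resemble.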

What is the purpose of visualizing the filters of higher layers in CNNs?

- Visualizing filters of higher layers can help understand the features that the CNN has learned. However, it is generally not as interesting or interpretable as visualizing the first layer, especially in datasets with a wide variety of classes.

What is the role of the penultimate layer (e.g., fc7 in AlexNet) in CNNs?

- The penultimate layer, such as fc7 in AlexNet, provides a high-dimensional representation of the input image (4096-dimensional in AlexNet). This representation feeds the final classification layer, and visualizing it can help understand how different classes are separated in the feature space.

How can one visualize the high-dimensional space of feature vectors from a CNN's penultimate layer?

- Dimensionality reduction techniques such as Principal Component Analysis (PCA) or t-SNE can be used to project the high-dimensional feature vectors into a two-dimensional space for visualization.
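
The PCA variant of this idea can be sketched in a few lines of NumPy. The feature vectors below are synthetic stand-ins (three made-up classes in 4096 dimensions), not real fc7 outputs, and `pca_2d` is a hypothetical helper name; with a real network, `features` would hold one penultimate-layer vector per image.

```python
import numpy as np

def pca_2d(features):
    """Project row vectors onto their first two principal components."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

rng = np.random.default_rng(0)
# Synthetic stand-in (assumption): 3 classes, 50 images each, 4096-dim features.
centers = rng.normal(scale=5.0, size=(3, 4096))
labels = np.repeat(np.arange(3), 50)
features = centers[labels] + rng.normal(size=(150, 4096))

points = pca_2d(features)
print(points.shape)  # one 2D point per image
```

Plotting `points` colored by `labels` gives the kind of scatter plot described above. t-SNE is a nonlinear alternative; scikit-learn provides it as `sklearn.manifold.TSNE`, which could be swapped in for `pca_2d` if that library is available.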

What does the visualization of the first convolutional layer's filters across different models and datasets suggest about the initial learning of CNNs?

- The visualization suggests that the first layer of a CNN learns to detect low-level image features such as edges, color gradients, and textures, and that these features are similar across different models and datasets.

What is the significance of visualizing which images maximally activate a particular neuron in a CNN?

- This visualization helps in understanding what specific features or artifacts in the images are being captured by individual neurons, providing insights into the learning process of the CNN.
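
In practice this amounts to running a set of images through the network, recording one neuron's activation for each image, and keeping the images with the largest values. A minimal sketch, assuming the activations have already been collected into an array with one row per image and one column per neuron (the numbers below are made up for illustration):

```python
import numpy as np

def top_activating_images(activations, neuron, k=3):
    """Return indices of the k images with the largest activation for one neuron."""
    order = np.argsort(activations[:, neuron])[::-1]
    return order[:k]

# Hypothetical activations: 6 images (rows) x 2 neurons (columns).
activations = np.array([
    [0.1, 2.0],
    [3.5, 0.0],
    [0.7, 1.1],
    [2.9, 0.3],
    [0.0, 4.2],
    [1.8, 0.9],
])

print(top_activating_images(activations, neuron=0))  # images 1, 3, 5
print(top_activating_images(activations, neuron=1))  # images 4, 0, 2
```

Looking at the selected images side by side is what reveals the feature a given neuron has learned to respond to.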

What are occlusion experiments in the context of CNN visualization?

- Occlusion experiments involve covering parts of an image and observing the effect on the CNN's predictions. This method helps identify which parts of the image are most critical for the CNN's decision-making process.

How do occlusion experiments help in understanding the CNN's focus during image classification?

- By occluding different parts of an image and observing changes in the predicted probability, occlusion experiments reveal which pixels or regions the CNN relies on to make its classification, indicating its focus area.
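
The procedure can be sketched as a sliding gray patch and a map of how much the predicted probability drops at each patch position. The classifier here is a toy stand-in (`toy_predict`, an assumption for illustration) that only looks at the top-left corner of a grayscale image; with a real CNN, `predict` would return the probability of the true class.

```python
import numpy as np

def occlusion_map(image, predict, patch=4, stride=4, fill=0.5):
    """Slide a gray patch over the image and record the drop in predicted probability."""
    h, w = image.shape
    base = predict(image)
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    drop = np.zeros((rows, cols))
    for i in range(rows):
        for j in range(cols):
            occluded = image.copy()
            top, left = i * stride, j * stride
            occluded[top:top + patch, left:left + patch] = fill
            # A large drop means the model relied on the covered region.
            drop[i, j] = base - predict(occluded)
    return drop

def toy_predict(image):
    # Toy stand-in for a classifier (assumption): the "probability" grows
    # with the mean brightness of the top-left 8x8 region only.
    score = image[:8, :8].mean()
    return 1.0 / (1.0 + np.exp(-10.0 * (score - 0.5)))

image = np.zeros((16, 16))
image[:8, :8] = 1.0  # the "object" sits in the top-left corner
heat = occlusion_map(image, toy_predict)
print(np.round(heat, 3))
```

Only patch positions that cover the top-left region produce a non-zero drop, which is the pattern an occlusion heatmap uses to indicate where the model is looking.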

What is the recommended approach for further understanding of the visualization methods discussed in the lecture?

- The lecture recommends reviewing the lecture notes of CS231n, exploring a deep visualization toolkit demo video by Jason Yosinski, and studying more about t-SNE as a dimensionality reduction technique through the provided links.
