Simple explanation of convolutional neural network | Deep Learning Tutorial 23 (Tensorflow & Python)

Summary

TLDRThis script offers a simplified explanation of Convolutional Neural Networks (CNNs), ideal for beginners. It illustrates how CNNs can recognize patterns like handwritten digits and complex images by using filters to detect features like edges and shapes. The script clarifies the concept of feature maps, the role of ReLU for non-linearity, and the importance of pooling to reduce dimensions and computation. It also touches on the self-learning capability of CNNs to adjust filters during training, making it an intuitive and powerful tool for computer vision tasks.

Takeaways

- 🧠 Convolutional Neural Networks (CNNs) are designed to recognize patterns in images, such as handwritten digits, by using a grid of numerical values representing pixel intensities.

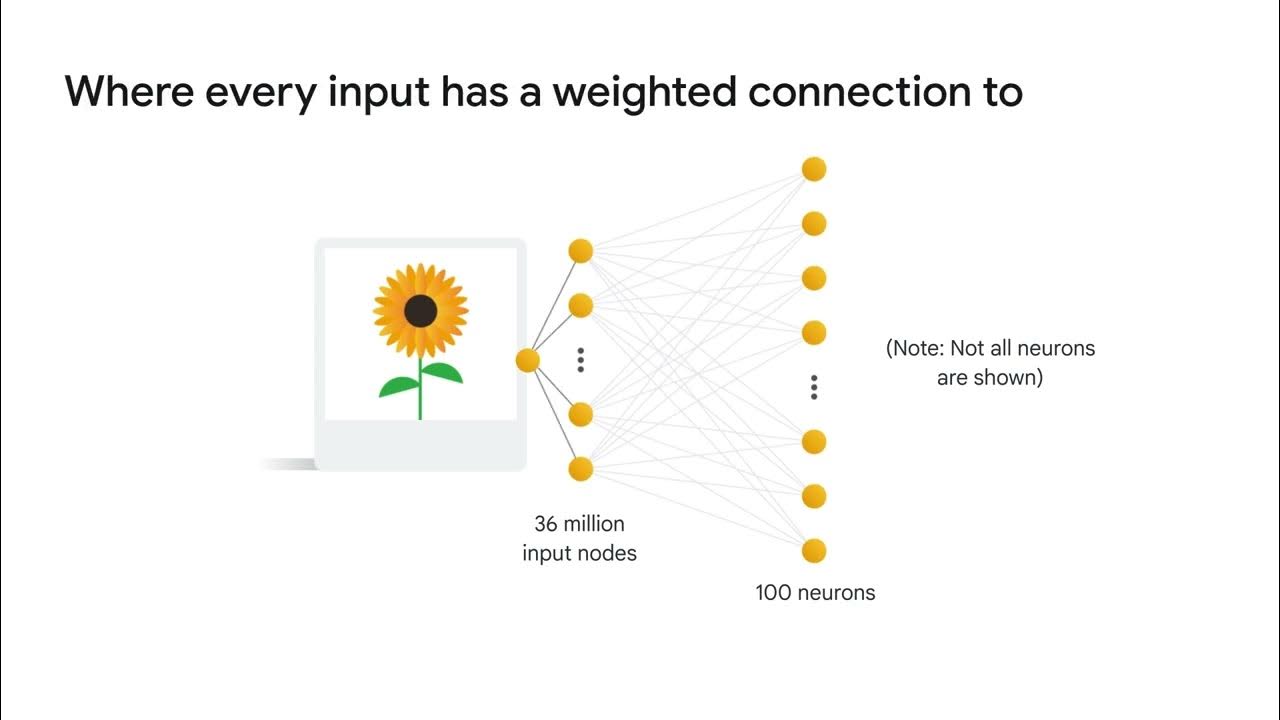

- 🔍 Traditional neural networks struggle with image recognition due to their inability to handle variations in image positioning and the immense computational load for large images.

- 🌟 CNNs utilize filters or kernels to detect specific features within an image, such as edges or shapes, by applying a convolution operation that scans the image in a sliding window fashion.

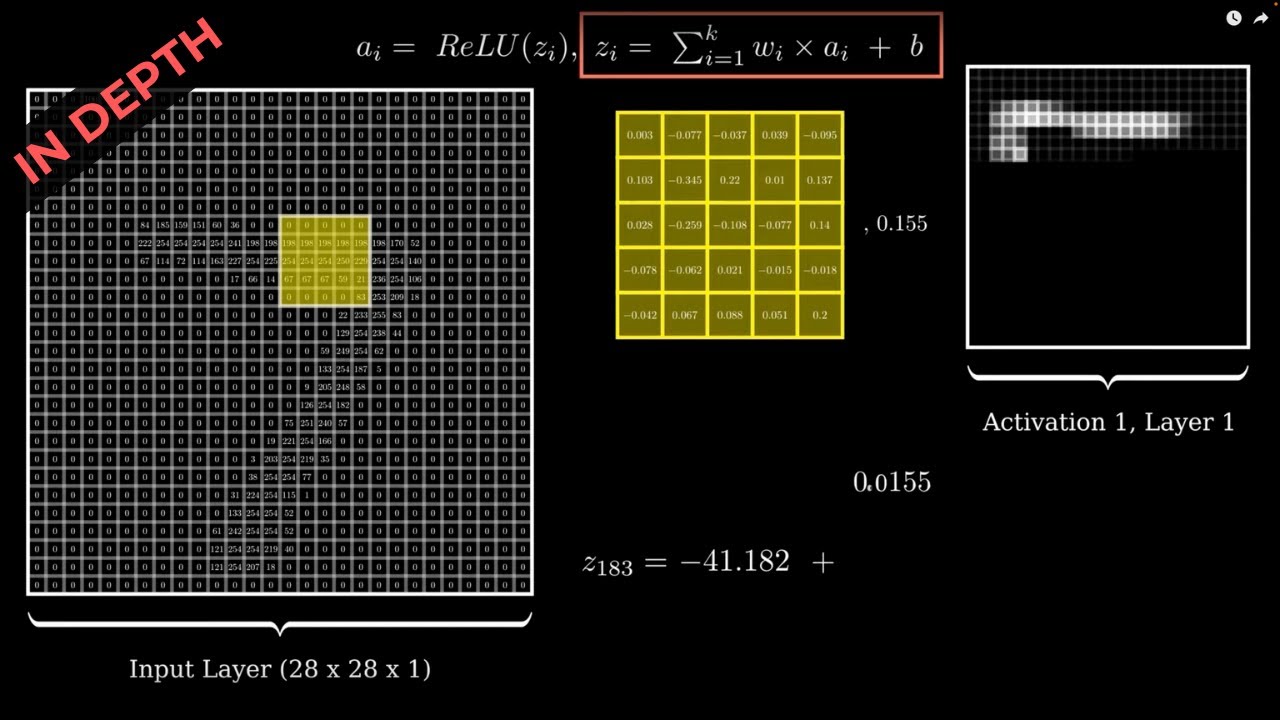

- 🔑 The convolution operation involves multiplying the filter values with the corresponding image section and summing them up to create a feature map, which highlights areas of the image that match the filter's pattern.

- 👀 Human brains recognize images by detecting features like eyes, nose, and ears, which is similar to how CNNs use different filters to identify features in images.

- 📈 CNNs reduce computational complexity through parameter sharing, where the same filter parameters are applied across the entire image, and through pooling layers that reduce the spatial dimensions of the feature maps.

- 🔄 Pooling layers, such as max pooling, help in making the CNN invariant to small translations and distortions in the image by selecting the most prominent features within a region.

- 🔧 The use of ReLU (Rectified Linear Unit) activation function introduces non-linearity into the CNN, which is essential for solving complex pattern recognition tasks.

- 🤖 CNNs learn the optimal filters during the training phase through backpropagation, without the need for manual filter selection, allowing the network to automatically adapt to the features present in the training data.

- 🔄 Data augmentation techniques, such as rotating or scaling images, can be used to increase the robustness of CNNs to variations like rotation and scaling in the input images.

- 📚 The script is an educational resource provided by Daval Patel, who offers tutorials on data science, machine learning, Python programming, and career guidance on his YouTube channel.

Q & A

What is the main issue with using a grid of numbers to represent an image for a computer?

-The main issue is that it is too hard-coded and sensitive to shifts or variations in the image. For example, a slight shift in the position of a handwritten digit can change the representation, causing the computer to fail in recognizing the digit.

Why is a dense neural network not efficient for handling larger images like the one of a koala?

-A dense neural network would require an enormous number of weights to be calculated between the input and hidden layers, leading to a high computational cost that is impractical for large images with many pixels and RGB channels.

How do convolutional neural networks (CNNs) address the issue of local features in images?

-CNNs use filters or convolution operations to detect local features in images. These filters act as feature detectors that can identify patterns regardless of their position in the image, thus addressing the issue of locality.

What is the purpose of a feature map in CNNs?

-A feature map is the result of applying a convolution operation with a filter. It highlights areas in the image where the specific feature the filter is designed to detect is present, effectively capturing the presence of that feature throughout the image.

How does the stride parameter affect the size of the feature map?

-The stride determines the step size the filter moves across the image. A larger stride results in a smaller feature map because fewer positions are covered by the filter.

What is pooling, and what are its benefits in CNNs?

-Pooling is an operation that reduces the dimensions of a feature map, typically by taking the maximum (max pooling) or average (average pooling) value within a certain window. It benefits CNNs by reducing computational load, mitigating overfitting, and making the model more tolerant to variations and distortions.

How does the ReLU (Rectified Linear Unit) activation function introduce non-linearity into a CNN?

-The ReLU activation function introduces non-linearity by setting all negative values in the feature map to zero, while keeping positive values unchanged. This simple operation allows the model to learn complex patterns in the data.

What is the role of the fully connected layer in a CNN after the convolution and pooling layers?

-The fully connected layer serves as the classification part of the CNN. It takes the flattened output from the convolution and pooling layers and uses it to make predictions about the image, handling the variety in inputs to classify them effectively.

How does a CNN learn the filters during the training process?

-During training, a CNN uses backpropagation to adjust the filters based on the training data. The network starts with random filters and learns the optimal filter values through the training process to effectively detect features in the images.

What is data augmentation, and how does it help in training CNNs to handle variations like rotation and scaling?

-Data augmentation is a technique where new training samples are artificially created by applying transformations like rotation, scaling, and translation to the existing data. This helps the CNN to learn to recognize features under various conditions and improves its ability to generalize across different image variations.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

What is a convolutional neural network (CNN)?

ANN vs CNN vs RNN | Difference Between ANN CNN and RNN | Types of Neural Networks Explained

Convolutional Neural Networks

Convolutional Neural Networks from Scratch | In Depth

Convolutional Neural Networks Explained (CNN Visualized)

Deep Learning(CS7015): Lec 1.4 From Cats to Convolutional Neural Networks

5.0 / 5 (0 votes)