1 Principal Component Analysis | PCA | Dimensionality Reduction in Machine Learning by Mahesh Huddar

Summary

TLDR: This video tutorial offers a step-by-step guide to Principal Component Analysis (PCA), a widely used technique for dimensionality reduction in machine learning. The presenter explains PCA through a simple example, showing how to transform a two-dimensional dataset into a one-dimensional one. The key steps are calculating the mean of each feature, constructing the covariance matrix, determining its eigenvalues and eigenvectors, and finally computing the principal component by projecting the mean-centered data onto the eigenvector of the largest eigenvalue. The video concludes with a geometric interpretation of PCA, illustrating how data points are projected onto the principal component, giving viewers a clear understanding of the concept.

Takeaways

- 📊 Principal Component Analysis (PCA) is a technique used for dimensionality reduction in machine learning.

- 🔢 PCA is applied when you want to convert higher-dimensional data into lower-dimensional data.

- 📈 The first step in PCA is calculating the mean of each feature in the dataset.

- 🧮 Next, the covariance matrix is calculated; it captures how pairs of variables vary together.

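- 📋 The covariance between two features is computed by multiplying each data point's deviations from the two feature means, summing these products, and dividing by N − 1 for the sample covariance. For a feature paired with itself, the products become squared deviations, which gives that feature's variance.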

- 🔑 Eigenvalues and eigenvectors are calculated from the covariance matrix, with eigenvalues representing variance along the principal components.

- 🌟 The eigenvector of the largest eigenvalue gives the direction of the first principal component.

- 📝 Eigenvectors corresponding to the largest eigenvalues are used to form the principal components.

- 📉 The principal components are calculated by projecting the mean-centered data onto the eigenvectors.

- 📚 PCA reduces the dimensionality of the data while attempting to preserve as much variance as possible.

- 🎯 The geometrical interpretation of PCA involves projecting data points onto new axes (principal components) that are aligned with the directions of maximum variance.
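For reference, the formulas behind the mean and covariance steps can be written as follows for N examples and features x_1, x_2. This assumes the sample convention with N − 1 in the denominator; some texts divide by N instead.

```latex
\begin{aligned}
\bar{x}_j &= \frac{1}{N}\sum_{k=1}^{N} x_{jk}, \\
\operatorname{cov}(x_i, x_j) &= \frac{1}{N-1}\sum_{k=1}^{N} (x_{ik} - \bar{x}_i)(x_{jk} - \bar{x}_j), \\
S &= \begin{bmatrix}
\operatorname{cov}(x_1, x_1) & \operatorname{cov}(x_1, x_2) \\
\operatorname{cov}(x_2, x_1) & \operatorname{cov}(x_2, x_2)
\end{bmatrix}.
\end{aligned}
```

When i = j the product becomes a squared deviation, so the diagonal of S holds each feature's variance, and S is symmetric because cov(x_1, x_2) = cov(x_2, x_1).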

Q & A

What is the primary purpose of Principal Component Analysis (PCA) in machine learning?

-Principal Component Analysis (PCA) is primarily used for dimensionality reduction in machine learning. It converts higher-dimensional data into lower-dimensional data.

How does PCA reduce a dataset from two dimensions to one?

-PCA reduces a dataset from two dimensions to one by calculating the mean of each feature, constructing the covariance matrix, determining the eigenvalues and eigenvectors of that matrix, and then projecting the mean-centered data onto the eigenvector corresponding to the largest eigenvalue.
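The whole 2-D to 1-D pipeline can be sketched in a few lines of NumPy. This is a minimal illustration, not the video's own code: the function name `pca_2d_to_1d` and the four-point dataset are hypothetical, and `np.cov` is assumed to use the sample (N − 1) convention, which is its default.

```python
import numpy as np


def pca_2d_to_1d(X: np.ndarray) -> tuple[np.ndarray, np.ndarray, float]:
    """Reduce an (N, 2) array to N scores along the first principal component.

    Returns the scores, the unit eigenvector used, and its eigenvalue.
    """
    mean = X.mean(axis=0)                 # step 1: per-feature means
    centered = X - mean                   # subtract the mean from every point
    S = np.cov(centered, rowvar=False)    # step 2: 2x2 sample covariance (divides by N - 1)
    eigvals, eigvecs = np.linalg.eigh(S)  # step 3: eigen-decomposition (ascending order)
    top = np.argmax(eigvals)              # index of the largest eigenvalue
    u = eigvecs[:, top]                   # unit-length eigenvector (a column of eigvecs)
    scores = centered @ u                 # step 4: project each centered point onto u
    return scores, u, float(eigvals[top])


# Hypothetical toy data: 4 examples, 2 features.
X = np.array([[4.0, 11.0], [8.0, 4.0], [13.0, 5.0], [7.0, 14.0]])
scores, u, lam = pca_2d_to_1d(X)
print(lam)     # largest eigenvalue, approximately 30.38
print(scores)  # one value per example: the 1-D representation
```

`np.linalg.eigh` may return either u or −u; both describe the same axis, so the scores can come out with flipped signs.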

What is the first step in applying PCA to a dataset?

-The first step in applying PCA to a dataset is to calculate the mean of each feature. This involves adding all the values of a feature and dividing by the number of examples.
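A short sketch of this first step, assuming the data is stored as a NumPy array with one row per example and one column per feature (the values are hypothetical):

```python
import numpy as np

# Hypothetical toy data: rows are examples, columns are features.
X = np.array([[4.0, 11.0], [8.0, 4.0], [13.0, 5.0], [7.0, 14.0]])

n_examples = X.shape[0]
means = X.sum(axis=0) / n_examples  # add each column's values, divide by N
centered = X - means                # broadcasting subtracts each feature's mean from its column

print(means)  # feature means: 8.0 and 8.5
```

The mean-centered array `centered` is what the later covariance and projection steps work with.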

What is a covariance matrix and how is it used in PCA?

-A covariance matrix is a square, symmetric matrix whose diagonal entries are the variances of the individual features and whose off-diagonal entries are the covariances between pairs of features. In PCA, its eigenvectors give the directions of maximum variance in the data, which are then used to project the data onto fewer dimensions.
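The covariance matrix can be built directly from the mean-centered data. The sketch below assumes the sample convention (dividing by N − 1); the helper name `covariance_matrix` and the data are hypothetical, and the result is checked against NumPy's own estimator.

```python
import numpy as np


def covariance_matrix(X: np.ndarray) -> np.ndarray:
    """Sample covariance matrix of an (N, d) array, dividing by N - 1."""
    n = X.shape[0]
    centered = X - X.mean(axis=0)
    # Entry (i, j) is sum_k (x_ik - mean_i) * (x_jk - mean_j) / (N - 1).
    return centered.T @ centered / (n - 1)


X = np.array([[4.0, 11.0], [8.0, 4.0], [13.0, 5.0], [7.0, 14.0]])  # hypothetical data
S = covariance_matrix(X)
print(S)  # diagonal: variances of the features; off-diagonal: their covariance
assert np.allclose(S, np.cov(X, rowvar=False))  # agrees with NumPy's estimator
```

Writing the matrix as `centered.T @ centered` computes every pairwise sum of products at once, which is why the result is automatically symmetric.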

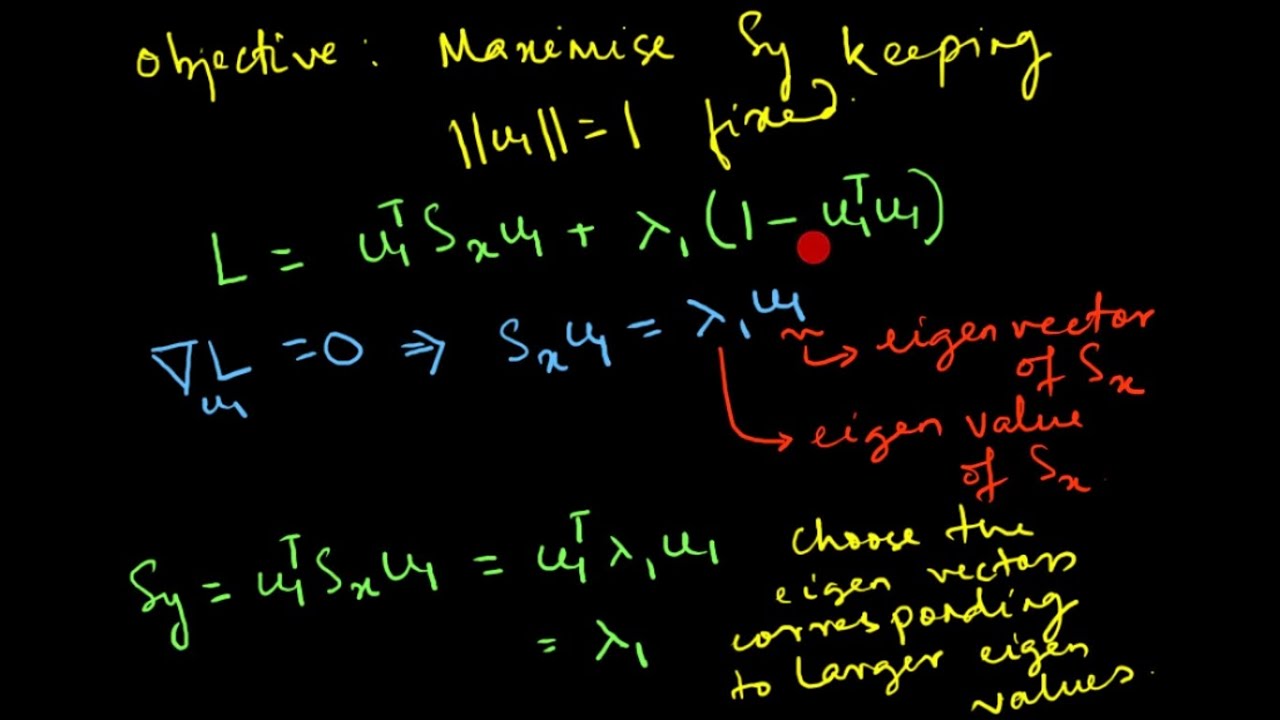

How are eigenvalues and eigenvectors calculated in the context of PCA?

-The eigenvalues (Lambda, λ) are the solutions of the characteristic equation det(S − λI) = 0, where S is the covariance matrix and I is the identity matrix. For each eigenvalue, the corresponding eigenvector (U) is found by solving (S − λI)U = 0.
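For a 2 × 2 covariance matrix the characteristic equation can be solved in closed form. Writing S with generic entries a, b, c (symbols introduced here only for illustration; b is the covariance of the two features), the eigenvalues and an unnormalized eigenvector are:

```latex
\begin{aligned}
S &= \begin{bmatrix} a & b \\ b & c \end{bmatrix}, \\
\det(S - \lambda I) &= (a - \lambda)(c - \lambda) - b^2 = \lambda^2 - (a + c)\lambda + (ac - b^2) = 0, \\
\lambda_{1,2} &= \frac{(a + c) \pm \sqrt{(a - c)^2 + 4b^2}}{2}, \\
(S - \lambda I)\,U = 0 &\;\Rightarrow\; U \propto \begin{bmatrix} b \\ \lambda - a \end{bmatrix} \quad (b \neq 0).
\end{aligned}
```

Because (a − c)² + 4b² ≥ 0, both eigenvalues are real, as expected for a symmetric matrix; the "+" root is the largest eigenvalue.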

Why are the eigenvectors corresponding to the largest eigenvalues important in PCA?

-Eigenvectors corresponding to the largest eigenvalues are important in PCA because they represent the directions of maximum variance in the data. These directions are used to project the data into a lower-dimensional space while retaining most of the original data's variability.
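Selecting the direction of maximum variance amounts to sorting the eigenvalues and keeping the eigenvector of the largest one. A minimal NumPy sketch, assuming a hypothetical 2 × 2 covariance matrix; the ratio of each eigenvalue to their sum shows how much of the total variance each component retains.

```python
import numpy as np

S = np.array([[14.0, -11.0], [-11.0, 23.0]])  # hypothetical 2x2 covariance matrix

# eigh is intended for symmetric matrices and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(S)
order = np.argsort(eigvals)[::-1]  # indices from largest to smallest eigenvalue
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

first_direction = eigvecs[:, 0]      # direction of maximum variance
explained = eigvals / eigvals.sum()  # share of total variance per component
print(eigvals)    # approximately [30.38, 6.62]
print(explained)  # the first component carries roughly 82% of the variance
```

The eigenvalues sum to the trace of S (here 14 + 23 = 37), which is the total variance of the data.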

What does it mean to normalize an eigenvector in PCA?

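-Normalizing an eigenvector in PCA means dividing each component of the eigenvector by its magnitude (the square root of the sum of its squared components), so that the vector has length one. With a unit-length eigenvector u, the projection uᵀ(x − x̄) equals the coordinate of a point along that axis, so the principal component values are not inflated or shrunk by the arbitrary length of the eigenvector.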
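A small sketch of normalization and why it matters for the projection. The eigenvector and the data point below are hypothetical values chosen so the arithmetic is easy to follow.

```python
import numpy as np

U = np.array([3.0, 4.0])   # hypothetical unnormalized eigenvector
u = U / np.linalg.norm(U)  # divide each component by the magnitude sqrt(3^2 + 4^2) = 5
print(u)                   # u is [0.6, 0.8] and has length 1

x = np.array([2.0, 1.0])   # hypothetical mean-centered data point
print(U @ x, u @ x)        # 10.0 vs 2.0: the raw vector inflates the projection by |U| = 5
```

Normalizing changes only the length of the vector, not its direction, so the principal axis itself stays the same.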

How are principal components calculated from the eigenvectors and original data?

-Principal components are calculated by multiplying the transpose of the normalized eigenvector by each mean-centered data point, that is, by the point's feature values after subtracting the mean of the corresponding feature: PC = uᵀ(x − x̄).
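The projection PC = uᵀ(x − x̄) can be applied to all examples at once. In this sketch the data is hypothetical and the unit eigenvector is a hypothetical value rounded to four decimals; both the row-wise form and the "transpose times data matrix" form give the same scores.

```python
import numpy as np

X = np.array([[4.0, 11.0], [8.0, 4.0], [13.0, 5.0], [7.0, 14.0]])  # hypothetical data
u = np.array([0.5574, -0.8303])  # hypothetical unit eigenvector, rounded to 4 decimals

means = X.mean(axis=0)
# PC score of example k: u^T (x_k - mean), computed for every row at once.
scores = (X - means) @ u
# Equivalent form with features as rows: u^T times the centered data matrix.
scores_T = u @ (X - means).T
assert np.allclose(scores, scores_T)
print(scores)  # one number per example: the 1-D representation
```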

What is the geometrical interpretation of PCA?

-The geometrical interpretation of PCA involves projecting the data points onto the new axes (eigenvectors) that represent the directions of maximum variance. This projection reduces the dimensionality of the data while attempting to preserve as much of the original data's variability as possible.
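Geometrically, each point is dropped perpendicularly onto the principal axis. The sketch below (hypothetical data) maps the 1-D scores back into the original 2-D space and confirms that the discarded part of each point is orthogonal to the axis.

```python
import numpy as np

X = np.array([[4.0, 11.0], [8.0, 4.0], [13.0, 5.0], [7.0, 14.0]])  # hypothetical data
means = X.mean(axis=0)
centered = X - means

eigvals, eigvecs = np.linalg.eigh(np.cov(centered, rowvar=False))
u = eigvecs[:, np.argmax(eigvals)]  # first principal axis (unit length)

scores = centered @ u                    # position of each point along the axis
projected = means + np.outer(scores, u)  # projected points in original 2-D coordinates
residuals = X - projected                # what the 1-D representation discards

# Each residual is perpendicular to the principal axis (orthogonal projection).
print(residuals @ u)  # all approximately 0
```

The variance left in the residuals equals the smaller eigenvalue, which is exactly the variance that PCA gives up when it keeps only one component.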

How does PCA help in data visualization?

-PCA helps in data visualization by reducing the number of dimensions of the data, making it easier to plot and interpret. By projecting the data onto the first few principal components, complex, high-dimensional data can be visualized in two or three dimensions.
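The same steps generalize to keeping the top k components. The sketch below reduces hypothetical 3-D data, generated to lie close to a tilted plane, to two coordinates suitable for a scatter plot. The helper name `pca_project` and the data-generating parameters are illustrative assumptions.

```python
import numpy as np


def pca_project(X: np.ndarray, k: int) -> np.ndarray:
    """Project an (N, d) array onto its top-k principal components, giving (N, k)."""
    centered = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(centered, rowvar=False))
    top_k = eigvecs[:, np.argsort(eigvals)[::-1][:k]]  # columns = leading eigenvectors
    return centered @ top_k


# Hypothetical 3-D data that mostly varies within a tilted plane, plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
X = latent @ np.array([[1.0, 0.5, 0.2], [0.0, 1.0, -0.7]]) + 0.05 * rng.normal(size=(200, 3))

coords = pca_project(X, k=2)
print(coords.shape)  # (200, 2): ready for a 2-D scatter plot
```

Plotting `coords` (for example with matplotlib) then gives a 2-D view that keeps nearly all of the spread in the original three dimensions.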

See more similar videos

PCA Algorithm | Principal Component Analysis Algorithm | PCA in Machine Learning by Mahesh Huddar

Principal Component Analysis (PCA) : Mathematical Derivation

StatQuest: PCA main ideas in only 5 minutes!!!

Python Exercise on kNN and PCA

Lec-46: Principal Component Analysis (PCA) Explained | Machine Learning

10.3 Probabilistic Principal Component Analysis (UvA - Machine Learning 1 - 2020)
