Lec-46: Principal Component Analysis (PCA) Explained | Machine Learning

Summary

TLDR: In this lecture, the instructor explains Principal Component Analysis (PCA), an unsupervised learning algorithm used for dimensionality reduction. The explanation begins by comparing PCA with Linear Discriminant Analysis (LDA) and highlights PCA's role in simplifying complex data by reducing the number of features. The procedure consists of computing the feature means and the covariance matrix, solving for its eigenvalues, and then finding the corresponding eigenvectors, which define the principal components. The instructor uses a house-price example to make the concepts more relatable and emphasizes that reducing dimensions helps avoid unnecessary model complexity. The lecture also walks through the mathematical steps so that students can understand and apply PCA.

Takeaways

- 😀 PCA (Principal Component Analysis) is an algorithm used for dimensionality reduction, primarily in unsupervised learning.

- 😀 Unlike supervised methods such as LDA, PCA works with unlabeled data and reduces the dimensionality of the input features alone.

- 😀 Dimensionality reduction simplifies models by reducing the number of features, which lowers complexity and can improve accuracy.

- 😀 Adding features to a model may improve accuracy at first, but beyond a certain point the extra complexity degrades performance.

- 😀 In PCA, you first calculate the mean of each feature and subtract it from the data (centering); the centered data is then used to compute the covariance matrix, which captures the relationships between the features.

- 😀 The covariance matrix holds the variance of each feature on its diagonal and the covariance of each pair of features off the diagonal; a covariance measures how two features vary together.

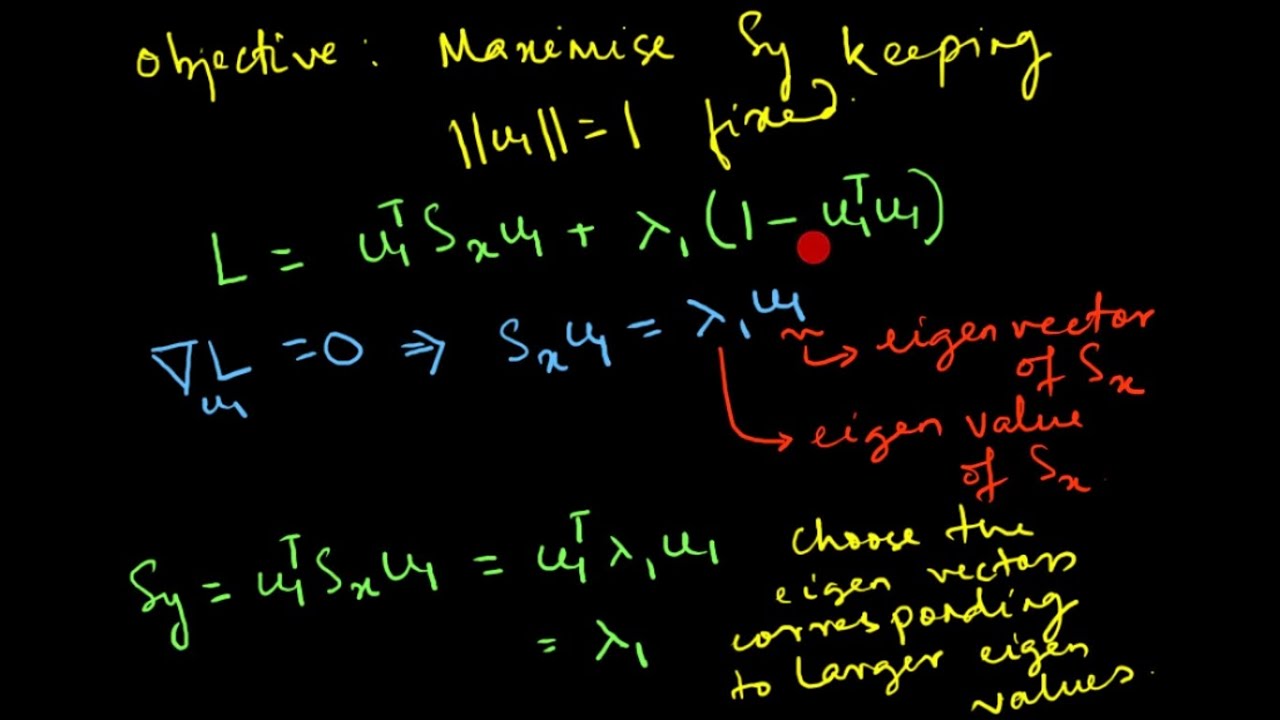

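- 😀 Eigenvalues and eigenvectors of the covariance matrix are central to PCA: the eigenvectors give the directions of the principal components (linear combinations of the original features), and the eigenvalues give how much of the data's variance each direction captures.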

- 😀 The eigenvalues are found by solving the characteristic equation det(S - λI) = 0, where S is the covariance matrix and I is the identity matrix.

- 😀 The eigenvector with the largest eigenvalue is the first (most significant) principal component; to reduce the data to k dimensions, keep the eigenvectors belonging to the k largest eigenvalues.

- 😀 After the eigenvalues are calculated, the corresponding eigenvectors define the new axes; projecting the data onto the selected eigenvectors reduces its dimensionality without losing much information.

- 😀 Once the principal components are obtained, the projected data replaces the original features as input for modeling and prediction, which simplifies the analysis.
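The steps in the takeaways above (mean, covariance matrix, eigenvalues, eigenvectors, projection) can be sketched in NumPy as follows. This is a minimal sketch, not the lecture's own code: it assumes NumPy is available, that rows of `X` are samples and columns are features, and that the sample covariance uses the divisor n - 1. The function name `pca` and the synthetic data are hypothetical.

```python
import numpy as np


def pca(X: np.ndarray, k: int):
    """Minimal PCA via eigendecomposition of the sample covariance matrix.

    X has shape (n_samples, n_features). Returns the projected data (n_samples, k),
    the k largest eigenvalues in descending order, and the matching eigenvectors
    as the columns of W.
    """
    # Step 1: mean of each feature, then center the data
    mean = X.mean(axis=0)
    Xc = X - mean

    # Step 2: sample covariance matrix (n_features x n_features), divisor n - 1
    S = Xc.T @ Xc / (X.shape[0] - 1)

    # Step 3: eigenvalues and eigenvectors; eigh is meant for symmetric matrices
    # and returns the eigenvalues in ascending order
    eigvals, eigvecs = np.linalg.eigh(S)

    # Step 4: sort in descending order and keep the k largest
    order = np.argsort(eigvals)[::-1][:k]
    top_vals = eigvals[order]
    W = eigvecs[:, order]  # each column is one principal direction

    # Step 5: project the centered data onto the principal directions
    Z = Xc @ W
    return Z, top_vals, W


# Usage on hypothetical data: the third feature nearly duplicates the first
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
X[:, 2] = X[:, 0] + 0.1 * rng.normal(size=100)
Z, vals, W = pca(X, k=2)
print(Z.shape, vals)
```

Using `eigh` rather than the general `eig` is a design choice: a covariance matrix is symmetric, so `eigh` returns real eigenvalues and orthonormal eigenvectors.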

Q & A

What is the primary purpose of Principal Component Analysis (PCA)?

-The primary purpose of PCA is dimensionality reduction. It simplifies a dataset by reducing the number of features while retaining as much information as possible.
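One way to make "retaining as much information as possible" concrete: if information is measured as variance, the squared error of reconstructing the data from the top k components equals the sum of the discarded eigenvalues (with the same n - 1 scaling as the covariance). The sketch below checks this on hypothetical NumPy data; the dataset and the choice k = 2 are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200
# Hypothetical data: 4 features, the last two are noisy copies of the first two
base = rng.normal(size=(n, 2))
X = np.hstack([base, base + 0.05 * rng.normal(size=(n, 2))])

mean = X.mean(axis=0)
Xc = X - mean
S = np.cov(Xc, rowvar=False)           # sample covariance, divisor n - 1
eigvals, eigvecs = np.linalg.eigh(S)
order = np.argsort(eigvals)[::-1]      # largest eigenvalue first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

k = 2
W = eigvecs[:, :k]
X_rec = Xc @ W @ W.T + mean            # reconstruct from k components

# Reconstruction error, scaled like the covariance matrix
err = np.sum((X - X_rec) ** 2) / (n - 1)
discarded = eigvals[k:].sum()
retained_fraction = eigvals[:k].sum() / eigvals.sum()
print(err, discarded, retained_fraction)
```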

In which type of machine learning is PCA typically used?

-PCA is typically used in unsupervised learning, where the data does not have labeled outputs, and the goal is to extract meaningful features from the input data.

How does PCA help in improving model accuracy?

-By reducing the number of features, PCA reduces the complexity of the model, which can help prevent overfitting and improve prediction accuracy on unseen data.

How is PCA similar to Linear Discriminant Analysis (LDA)?

-Both PCA and LDA aim to reduce dimensionality, but PCA is used in unsupervised learning, while LDA is used in supervised learning. PCA reduces dimensions based solely on the input features, while LDA also uses class labels to achieve the reduction.
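The difference between the two methods shows up in which direction each one picks. The sketch below is hypothetical and uses NumPy only: two classes spread widely along x1 but separated along x2. PCA ignores the labels and picks the high-variance x1 direction; two-class Fisher LDA, computed here as w proportional to Sw^-1 (m1 - m0), uses the labels and picks x2. The data and the Fisher formulation are assumptions for illustration, not taken from the lecture.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 500
# Hypothetical two-class data: large spread along x1, classes separated along x2
X0 = np.column_stack([rng.normal(0, 5, n), rng.normal(-1, 0.5, n)])
X1 = np.column_stack([rng.normal(0, 5, n), rng.normal(+1, 0.5, n)])
X = np.vstack([X0, X1])

# PCA: uses X only (labels ignored); top eigenvector of the covariance matrix
eigvals, eigvecs = np.linalg.eigh(np.cov(X, rowvar=False))
pca_dir = eigvecs[:, np.argmax(eigvals)]

# Two-class Fisher LDA: needs the class labels (which rows belong to X0 or X1)
m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
# Within-class covariance; with equal class sizes this is proportional to the
# within-class scatter matrix, so the resulting direction is the same
Sw = np.cov(X0, rowvar=False) + np.cov(X1, rowvar=False)
lda_dir = np.linalg.solve(Sw, m1 - m0)
lda_dir /= np.linalg.norm(lda_dir)

print("PCA direction:", np.round(pca_dir, 2))  # close to [1, 0] up to sign
print("LDA direction:", np.round(lda_dir, 2))  # close to [0, 1] up to sign
```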

What is the main problem caused by having too many features in a model?

-Having too many features increases the complexity of the model, which can lead to overfitting, where the model becomes too tailored to the training data and performs poorly on unseen data.

Can you explain the analogy of buying a house to illustrate PCA?

-When buying a house, weighing too many factors at once (e.g., number of rooms, location, a park nearby) can make the decision confusing; in the same way, too many features in a dataset can make a model's predictions less accurate. PCA condenses the features into a smaller set of components, which makes the decision (or prediction) easier.

What are the first two steps in PCA when given a dataset?

-The first two steps in PCA are to calculate the mean of each feature and then compute the covariance matrix, which helps understand how features relate to each other.
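These first two steps can be written out by hand and checked against NumPy's `np.cov`. The sketch assumes a hypothetical toy dataset of five houses with two features (size in units of 100 m^2 and number of rooms); the numbers are invented for illustration.

```python
import numpy as np

# Hypothetical toy data: 5 houses x 2 features (size in 100 m^2, number of rooms)
X = np.array([
    [1.0, 2.0],
    [1.5, 3.0],
    [2.0, 3.0],
    [2.5, 4.0],
    [3.0, 5.0],
])
n, d = X.shape

# Step 1: mean of each feature (column)
mean = X.mean(axis=0)

# Step 2: covariance matrix, S[i, j] = sum_k (x_ki - mean_i) * (x_kj - mean_j) / (n - 1)
S = np.zeros((d, d))
for i in range(d):
    for j in range(d):
        S[i, j] = np.sum((X[:, i] - mean[i]) * (X[:, j] - mean[j])) / (n - 1)

print(mean)
print(S)
```

The diagonal entries of `S` are the feature variances and the off-diagonal entry is the covariance between size and rooms; `np.cov(X, rowvar=False)` gives the same matrix.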

What role do eigenvalues play in PCA?

-Eigenvalues indicate how much variance each principal component represents. The higher the eigenvalue, the more significant the principal component is in explaining the data's variance.
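Because the eigenvalues of the covariance matrix sum to its trace (the total variance), each eigenvalue divided by that sum gives the share of variance its component explains. The sketch below uses a hypothetical 3x3 covariance matrix; the 0.85 cut-off for choosing k is an assumed heuristic, not something the lecture prescribes.

```python
import numpy as np

# Hypothetical covariance matrix of three features (symmetric, positive definite)
S = np.array([
    [4.0, 2.0, 0.0],
    [2.0, 3.0, 0.0],
    [0.0, 0.0, 1.0],
])

# eigvalsh is for symmetric matrices and returns ascending order; reverse it
eigvals = np.linalg.eigvalsh(S)[::-1]

# Share of the total variance explained by each principal component
explained_ratio = eigvals / eigvals.sum()
cumulative = np.cumsum(explained_ratio)

# Smallest k whose components together explain at least the threshold
threshold = 0.85  # assumed cut-off for illustration
k = int(np.searchsorted(cumulative, threshold) + 1)
print(np.round(eigvals, 3), np.round(explained_ratio, 3), k)
```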

Why is it important to select the largest eigenvalue in PCA?

-The largest eigenvalue corresponds to the most significant principal component, capturing the most variance in the data. This helps in reducing the dimensions while retaining the most important information.
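For two features the characteristic equation can be solved in closed form. With a general 2x2 covariance matrix S (entries a, b, c are symbols introduced here for illustration):

```latex
S = \begin{pmatrix} a & b \\ b & c \end{pmatrix}, \qquad
\det(S - \lambda I) = (a - \lambda)(c - \lambda) - b^2 = 0
\;\Longrightarrow\;
\lambda_{1,2} = \frac{(a + c) \pm \sqrt{(a - c)^2 + 4b^2}}{2}

% Illustrative numbers: a = c = 2, b = 1
\lambda_1 = \frac{4 + 2}{2} = 3, \qquad \lambda_2 = \frac{4 - 2}{2} = 1

(S - 3I)\,v = \begin{pmatrix} -1 & 1 \\ 1 & -1 \end{pmatrix} v = 0
\;\Longrightarrow\;
v_1 = \tfrac{1}{\sqrt{2}} \begin{pmatrix} 1 \\ 1 \end{pmatrix},
\qquad
\frac{\lambda_1}{\lambda_1 + \lambda_2} = \frac{3}{4} = 75\%
```

In this illustrative case, keeping only the first principal component v_1 retains 75% of the total variance.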

What is the purpose of multiplying the eigenvectors with the original data in PCA?

-Multiplying the mean-centered data by the matrix of selected eigenvectors projects each sample onto the new axes defined by the principal components. This reduces the dimensionality while retaining most of the variance in the data.
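A quick way to see what the projection does: after multiplying the centered data by the eigenvector matrix, the new coordinates are uncorrelated and their variances equal the eigenvalues. The sketch assumes hypothetical correlated 2-feature data generated with NumPy; keeping only the first column reduces it from 2 to 1 dimension.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical correlated 2-feature data
X = rng.multivariate_normal(mean=[5.0, 10.0], cov=[[2.0, 1.0], [1.0, 2.0]], size=1000)

Xc = X - X.mean(axis=0)                # center first: PCA projects mean-centered data
S = np.cov(Xc, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(S)
order = np.argsort(eigvals)[::-1]      # largest eigenvalue first
eigvals, W = eigvals[order], eigvecs[:, order]

Z = Xc @ W                             # coordinates along the principal axes
S_Z = np.cov(Z, rowvar=False)

# The new components are uncorrelated and their variances are the eigenvalues
print(np.round(S_Z, 6))
print(np.round(eigvals, 6))

Z1 = Xc @ W[:, :1]                     # keep only the first component: 2 -> 1 dimension
```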

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Python Exercise on kNN and PCA

PCA Algorithm | Principal Component Analysis Algorithm | PCA in Machine Learning by Mahesh Huddar

10.3 Probabilistic Principal Component Analysis (UvA - Machine Learning 1 - 2020)

StatQuest: PCA main ideas in only 5 minutes!!!

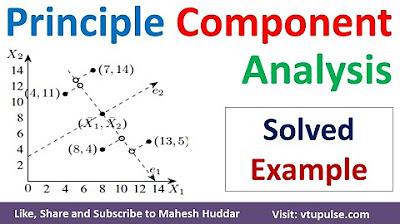

1 Principal Component Analysis | PCA | Dimensionality Reduction in Machine Learning by Mahesh Huddar

Principal Component Analysis (PCA) : Mathematical Derivation

5.0 / 5 (0 votes)