PCA Algorithm | Principal Component Analysis Algorithm | PCA in Machine Learning by Mahesh Huddar

Summary

TLDR: This video explains the concept and algorithm behind Principal Component Analysis (PCA), a technique for reducing the dimensionality of a dataset. It walks through PCA step by step: defining the dataset, computing the mean of each feature, calculating the covariance matrix, finding its eigenvalues and eigenvectors, and selecting the principal components onto which the data are projected. The tutorial shows how to reduce data complexity while keeping the most important structure in the data, which can make machine learning models easier to build. It is a useful starting point for anyone who wants to understand how PCA works and how to apply it in data analysis.
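In symbols, the steps listed above can be written compactly as follows. The notation is our own, not the video's: $\mathbf{x}_k \in \mathbb{R}^n$ is the $k$-th of the $N$ examples, $S$ is the covariance matrix, and $E_p$ collects the top $p$ unit eigenvectors. The sketch assumes the sample covariance with divisor $N-1$; some treatments divide by $N$ instead, which scales every eigenvalue by the same factor and leaves the eigenvectors unchanged.

```latex
\begin{aligned}
\bar{\mathbf{x}} &= \frac{1}{N}\sum_{k=1}^{N} \mathbf{x}_k \\
S &= \frac{1}{N-1}\sum_{k=1}^{N} (\mathbf{x}_k - \bar{\mathbf{x}})(\mathbf{x}_k - \bar{\mathbf{x}})^{\top} \in \mathbb{R}^{n \times n} \\
\det(S - \lambda I) &= 0, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_n \\
(S - \lambda_i I)\,\mathbf{u}_i &= \mathbf{0}, \qquad \mathbf{e}_i = \frac{\mathbf{u}_i}{\lVert \mathbf{u}_i \rVert} \\
\mathbf{y}_k &= E_p^{\top} (\mathbf{x}_k - \bar{\mathbf{x}}), \qquad E_p = [\,\mathbf{e}_1 \;\cdots\; \mathbf{e}_p\,] \in \mathbb{R}^{n \times p} \\
\text{fraction of variance retained} &= \frac{\sum_{i=1}^{p} \lambda_i}{\sum_{j=1}^{n} \lambda_j}
\end{aligned}
```

Each $\lambda_i$ equals the variance of the centered data along $\mathbf{e}_i$, which is why keeping the eigenvectors with the largest eigenvalues keeps the most variance for a given $p$.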

Takeaways

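- 😀 PCA is a dimensionality reduction technique that removes redundancy in a dataset: rather than picking a subset of the original features, it builds a smaller set of new, uncorrelated features (the principal components), each a linear combination of the originals, that keep the most relevant variation.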

- 😀 The first step in PCA involves defining the dataset, which consists of 'n' features (variables) and 'N' examples, represented in matrix format.

- 😀 Next, compute the mean of each feature across all examples; subtracting these means centers the data before the covariances are computed.

- 😀 The covariance matrix is calculated to measure how pairs of features vary together, providing insights into their relationships.

- 😀 PCA then computes the eigenvalues and eigenvectors of the covariance matrix, which capture the most significant directions of variance in the data.

- 😀 Eigenvectors represent the principal components, and eigenvalues represent the variance captured by these components.

- 😀 Each eigenvector is normalized to unit length before dimensionality reduction, so that projecting the data onto it does not rescale the result.

- 😀 To reduce dimensionality, you select the top 'p' eigenvectors corresponding to the largest eigenvalues, where 'p' is the desired number of principal components.

- 😀 The mean-centered dataset is then projected onto the selected eigenvectors, giving a new dataset with 'p' dimensions instead of 'n'.

- 😀 PCA reduces dimensionality while retaining as much of the original variance as possible for the chosen number of components, which is useful for data analysis and machine learning tasks.
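The takeaways above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the video's own code: it assumes one example per row (an N × n array), uses the N − 1 divisor for the covariance, and the function name `pca`, its return values, and the random demo data are hypothetical choices made for this example.

```python
import numpy as np


def pca(X, p):
    """Hypothetical helper: project rows of X (N examples x n features) onto the top-p components.

    Returns (Y, components, eigenvalues, mean):
      Y           -- N x p projected data
      components  -- n x p matrix whose columns are unit-length eigenvectors
      eigenvalues -- all n eigenvalues of the covariance matrix, largest first
      mean        -- length-n vector of feature means
    """
    X = np.asarray(X, dtype=float)
    N, n = X.shape
    if N < 2 or not 1 <= p <= n:
        raise ValueError("need at least 2 examples and 1 <= p <= number of features")

    # Step 1: mean of each feature across all N examples.
    mean = X.mean(axis=0)

    # Step 2: center the data and form the n x n sample covariance matrix (divisor N - 1, an assumption).
    Xc = X - mean
    S = Xc.T @ Xc / (N - 1)

    # Step 3: eigen-decomposition. S is symmetric, so eigh applies; it returns
    # eigenvalues in ascending order with orthonormal eigenvectors as columns.
    eigenvalues, eigenvectors = np.linalg.eigh(S)

    # Step 4: sort the eigenpairs so the largest variance comes first.
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]

    # Step 5: normalize each eigenvector to unit length
    # (eigh already does this; kept to mirror the written steps).
    eigenvectors = eigenvectors / np.linalg.norm(eigenvectors, axis=0)

    # Step 6: keep the top-p eigenvectors and project the centered data onto them.
    components = eigenvectors[:, :p]
    Y = Xc @ components
    return Y, components, eigenvalues, mean


# Usage with hypothetical data: 100 examples, 3 features, the third nearly a copy of the first.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
X[:, 2] = X[:, 0] + 0.1 * rng.normal(size=100)

Y, comps, lam, mu = pca(X, p=2)
print(Y.shape)          # (100, 2)
print(lam / lam.sum())  # fraction of the total variance along each component
```

Eigenvectors are only defined up to sign, so the projected coordinates may differ in sign from a hand calculation. Library implementations such as scikit-learn's `sklearn.decomposition.PCA` typically obtain the same components from a singular value decomposition of the centered data instead of forming the covariance matrix explicitly; the eigen-decomposition route here simply mirrors the steps as described.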


Upgrade NowBrowse More Related Video

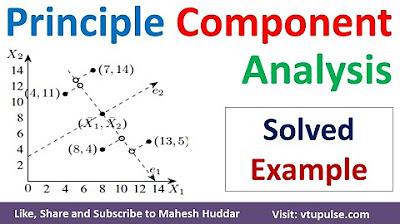

Lec-46: Principal Component Analysis (PCA) Explained | Machine Learning

StatQuest: PCA main ideas in only 5 minutes!!!

1 Principal Component Analysis | PCA | Dimensionality Reduction in Machine Learning by Mahesh Huddar

10.3 Probabilistic Principal Component Analysis (UvA - Machine Learning 1 - 2020)

Python Exercise on kNN and PCA

Week 3 Lecture 13 Principal Components Regression

5.0 / 5 (0 votes)