Learn Docker in 7 Easy Steps - Full Beginner's Tutorial

Summary

TLDR: This video script offers an in-depth Docker tutorial for developers, focusing on containerizing a Node.js application. It covers Docker's basics, including Dockerfiles, images, and containers, and addresses more advanced topics like port forwarding and volumes. The tutorial guides viewers through installation, writing Dockerfiles, building and running images, and managing multi-container setups with Docker Compose, aiming to demystify Docker and empower developers to package and deploy applications consistently across any environment.

Takeaways

- 📦 Docker is a tool for packaging software so it can run on any hardware, helping to standardize development environments.

- 🛠️ The core components of Docker are Dockerfiles, images, and containers, which work together to build, distribute, and run applications consistently.

- 💡 Docker solves the 'it works on my machine' problem by allowing developers to define and reproduce environments with Dockerfiles.

- 📝 A Dockerfile is a script with instructions to build a Docker image, which serves as a template for creating containers.

- 🚀 The process of Dockerizing an application involves creating a Dockerfile, installing dependencies, and defining how the application runs within a container.

- 🖥️ Docker Desktop is recommended for Mac or Windows users, providing both command-line access and a GUI for managing containers.

- 🔍 Docker extensions for IDEs like VS Code enhance development by providing language support for Dockerfiles and integration with registries.

- 🔑 The 'FROM' instruction in a Dockerfile starts with a base image, which can be an official image like 'node:12' to simplify setup.

- 🔄 Efficient layer caching in Dockerfiles is achieved by installing dependencies before copying the source code, minimizing rebuild times.

- 🔒 Dockerignore files help to exclude unnecessary files like 'node_modules' from being copied into the Docker image, keeping the image lean.

- 🔌 Port forwarding is essential for accessing applications running inside containers from the host machine, using the '-p' flag in 'docker run'.

- 🔄 Volumes in Docker allow data persistence and sharing across containers, useful for databases and other stateful services.

- 🔧 Docker Compose simplifies the management of multi-container applications by defining and running services, volumes, and networking in a YAML file.

- 🔄 Debugging Dockerized applications can be done through logs, direct CLI access within containers, or using Docker Desktop's GUI.
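
A few of the takeaways above (the dockerignore file, port forwarding, and volumes) can be sketched in shell. This is an illustration under stated assumptions, not the video's exact commands: the image tag `demo/app:1.0`, the volume name `shared-stuff`, the port numbers, and the `run_with_volume` helper are all hypothetical. The helper is only defined, not called, so nothing touches Docker until you invoke it.

```shell
# Create (or overwrite) a .dockerignore so locally installed modules
# are not copied into the image.
printf 'node_modules\n' > .dockerignore

# run_with_volume: start a container from a hypothetical image with
# port forwarding and a named volume attached.
run_with_volume() {
  # A named volume keeps its data after any single container is removed.
  docker volume create shared-stuff
  # -p HOST:CONTAINER forwards localhost:5000 to port 8080 in the container.
  # --mount attaches the volume at /stuff inside the container.
  docker run -p 5000:8080 \
    --mount source=shared-stuff,target=/stuff \
    demo/app:1.0
}
```

With Docker installed and an image tagged `demo/app:1.0` already built, calling `run_with_volume` would make the app reachable at `localhost:5000` on the host.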

Q & A

What is one of the leading causes of imposter syndrome among developers mentioned in the script?

-Not knowing Docker and feeling left out during discussions about advanced topics like Kubernetes, swarms, and sharding at parties.

What is the main goal of the video?

-To teach developers everything they need to know about Docker, including installation, Dockerfile instructions, and advanced concepts like port forwarding, volumes, and Docker Compose.

What are the three essential concepts in Docker that one must understand?

-The three essential concepts are Dockerfiles, images, and containers. A Dockerfile is a blueprint for building a Docker image, an image is a template for running containers, and a container is a running process started from that image.

Why is Docker useful for developers?

-Docker is useful for developers because it packages software so it can run on any hardware, ensuring that applications run consistently across different environments and avoiding issues like 'it works on my machine'.

What is the purpose of a Dockerfile?

-A Dockerfile is used as a blueprint to build a Docker image, which is then used to create containers. It contains a set of instructions that define the environment in which the application will run.

How does Docker solve the problem of different environments causing application failures?

-Docker solves this problem by allowing developers to define the environment in a Dockerfile, creating an immutable snapshot known as an image. This image can be used to spawn the same process in multiple places, ensuring consistency.

What command should one memorize after installing Docker?

-The 'docker ps' command should be memorized, as it lists all the running containers on the system.

Why is it important to install the Docker extension for VS Code or another IDE?

-The Docker extension provides language support when writing Dockerfiles, and it can also link up to remote registries, offering additional functionality and convenience.

What is the significance of the 'FROM' instruction in a Dockerfile?

-The 'FROM' instruction specifies the base image to start from when building the Docker image. It's typically a specific version of an operating system or a language runtime environment.

Why should dependencies be installed before the app source code in a Dockerfile?

-Installing dependencies first allows Docker to cache them, which means that they don't need to be reinstalled every time the app source code changes, making the build process more efficient.
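
The ordering described above can be sketched as a short shell snippet that writes a Dockerfile. It is an illustration rather than the video's exact file: the `docker-demo` directory, port 8080, and the `npm start` command are assumptions, and `node:12` is simply the base image named in the takeaways.

```shell
# Write a sketch Dockerfile into a scratch directory
# (docker-demo is a hypothetical name).
mkdir -p docker-demo
cat > docker-demo/Dockerfile <<'EOF'
# Base image: an official Node.js runtime
FROM node:12

# Directory inside the image where the app lives
WORKDIR /app

# Copy only the dependency manifests first, so this layer and the
# install below stay cached until package.json or its lockfile change
COPY package*.json ./
RUN npm install

# Copy the rest of the source; editing app code only invalidates
# layers from this point on
COPY . .

# Assumed port and start command for the hypothetical app
ENV PORT=8080
EXPOSE 8080
CMD ["npm", "start"]
EOF
```

With this ordering, a change to application code reuses the cached `npm install` layer, and only the final `COPY` and the steps after it are rebuilt.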

What is the purpose of the 'docker build' command?

-The 'docker build' command is used to build a Docker image from a Dockerfile. It processes each instruction in the Dockerfile and creates an image that can be used to run containers.
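
As a rough sketch, a build invocation might look like the following. The tag `demo/app:1.0` and the `build_image` wrapper are hypothetical; the wrapper exists only so that nothing runs until you call it.

```shell
# build_image [DIR]: build the Dockerfile in DIR (default: current directory).
build_image() {
  context_dir="${1:-.}"
  # -t tags the resulting image; demo/app:1.0 is a made-up name.
  # The last argument is the build context, the directory Docker reads from.
  docker build -t demo/app:1.0 "$context_dir"
}
```

Running `build_image .` from the project root would build and tag the image, assuming Docker is installed and a Dockerfile is present there.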

How can one access a running Docker container's logs and interact with its command line?

-One can access logs and interact with a Docker container's command line using Docker Desktop's GUI, which provides a dashboard for viewing logs and a CLI button for executing commands, or by using the 'docker exec' command from the command line.
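
From the command line, the same debugging steps could be sketched as below. The `inspect_container` helper is hypothetical, and it assumes the container's image ships an `sh` binary, which minimal images may not.

```shell
# inspect_container NAME_OR_ID: list containers, show recent logs,
# then open an interactive shell inside the container.
inspect_container() {
  container="$1"
  # List running containers along with their IDs and names.
  docker ps
  # Print the last 50 log lines from the container.
  docker logs --tail 50 "$container"
  # Start an interactive shell inside the running container.
  docker exec -it "$container" sh
}
```

The argument is a name or ID taken from the `docker ps` output, for example `inspect_container my-container`.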

What is Docker Compose and how does it help with running multiple containers?

-Docker Compose is a tool for defining and running multi-container Docker applications. It allows you to configure your application's services, networks, and volumes in a 'docker-compose.yaml' file and start all the containers together with a single command.
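
A minimal sketch of such a file, written from shell, is shown below. The service names `web` and `db`, the ports, the `mysql` image, the placeholder password, and the `compose-demo` directory are assumptions for illustration. The file also omits the top-level `version` key, which assumes a recent Compose release.

```shell
# Write a sketch Compose file into a scratch directory
# (compose-demo is a hypothetical name).
mkdir -p compose-demo
cat > compose-demo/docker-compose.yaml <<'EOF'
services:
  # The app, built from a Dockerfile assumed to sit next to this file
  web:
    build: .
    ports:
      - "8080:8080"
  # A database running in its own container
  db:
    image: "mysql"
    environment:
      # Placeholder value for illustration only
      MYSQL_ROOT_PASSWORD: password
    volumes:
      - db-data:/var/lib/mysql
# Named volume so database files persist across container restarts
volumes:
  db-data:
EOF
```

From that directory, `docker compose up` would start both services together and `docker compose down` would stop them; older installations use the separate `docker-compose` binary instead.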

Why should each container in a Dockerized application ideally run only one process?

-Running only one process per container helps keep the containers simple and maintainable. It also aligns with the microservices architecture, making it easier to manage and scale individual components of the application.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

Docker - Containerize a Django App

How to deploy your Streamlit Web App to Google Cloud Run using Docker

you need to learn Kubernetes RIGHT NOW!!

Docker Tutorial For Beginners | What Is Docker? | DevOps Tutorial | DevOps Tools | Simplilearn

Day-19 | Jenkins ZERO to HERO | 3 Projects Live |Docker Agent |Interview Questions | #k8s #gitops

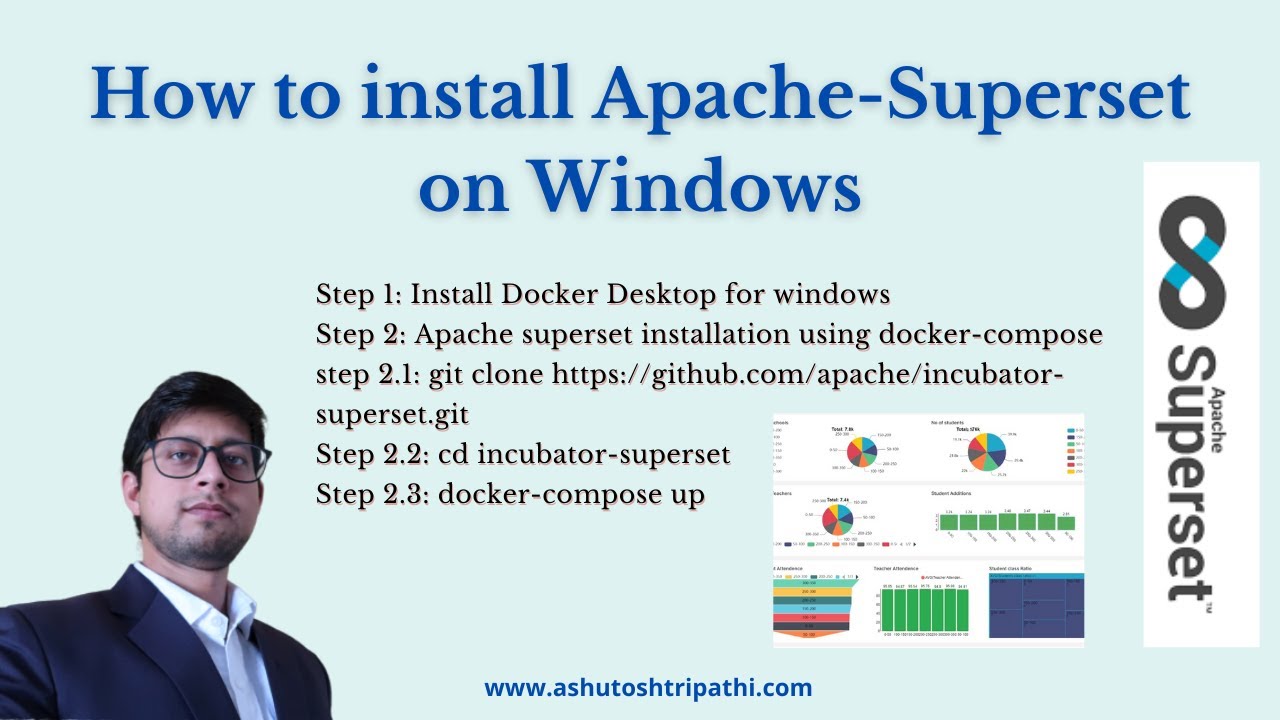

How to install apache-superset on windows | Dashboard building | Data Analytics | Ashutosh Tripathi

5.0 / 5 (0 votes)