Large Language Models explained briefly

Summary

TLDR本视频介绍了大型语言模型(LLM)如何通过预测文本中的下一个词来生成自然语言响应。视频深入解释了LLM的训练过程,包括如何通过处理海量文本数据并调整参数来优化模型预测。特别强调了使用“变换器”模型和注意力机制来并行处理文本,显著提升了效率。此外,还介绍了强化学习和人类反馈如何进一步优化模型性能。整体来说,视频为大众提供了一个易于理解且内容丰富的入门介绍,适合对AI和自然语言处理感兴趣的人群。

Takeaways

- 😀 大型语言模型是通过预测文本中的下一个单词来工作的,基于大量的文本数据训练。

- 😀 模型的输出看起来非常自然,因为它在生成每个单词时会选择随机的、不太可能的词语。

- 😀 语言模型的行为由数百亿个参数决定,这些参数通过不断调整来改进预测。

- 😀 训练语言模型所需的计算量巨大,甚至可能需要超过1亿年的时间,才能完成最大的语言模型的训练。

- 😀 训练过程中,模型会通过与大量文本的交互不断优化,以做出更准确的预测。

- 😀 训练包括两种主要阶段:预训练和通过人类反馈的强化学习。

- 😀 强化学习阶段涉及人类标注的反馈,帮助模型改进,以满足用户需求。

- 😀 语言模型的训练离不开特殊的计算机芯片(GPU),它们能够并行处理大量数据。

- 😀 Transformer模型使用并行处理技术,能够同时读取整个文本,而不是一词一词地处理。

- 😀 Transformer模型的独特之处在于‘注意力’机制,它使得模型能够在上下文中调整每个词的含义。

- 😀 语言模型的生成是一个复杂的过程,涉及多次迭代与多种操作,结果是生成流畅、富有洞察力且实用的文本。

Q & A

什么是大型语言模型,它是如何工作的?

-大型语言模型是一种复杂的数学函数,它通过预测给定文本的下一个单词来生成语言。当用户与聊天机器人互动时,模型通过预测下一个单词,并逐步生成回答。模型会根据概率为每个可能的下一个单词分配一个值,而不是确定性地选择一个单词。

如何训练大型语言模型?

-大型语言模型的训练过程包括两部分:预训练和强化学习。预训练阶段,模型通过处理大量文本来学习如何做出预测。强化学习阶段,通过人类反馈来优化模型,使其更能给出符合用户期望的答案。

为什么大型语言模型需要如此庞大的计算资源?

-训练大型语言模型涉及数以万亿计的运算,需要使用专门的计算芯片(如GPU)来处理这些运算。即使能够每秒进行十亿次加法和乘法运算,训练最大规模的语言模型也需要超过1亿年。

什么是变换器模型(Transformer),它如何改进了语言模型?

-变换器模型(Transformer)是Google团队在2017年提出的一种新型语言模型。与以往的模型按顺序处理文本不同,变换器能够并行处理整个文本,极大地提高了处理速度和效率。它通过注意力机制来强化上下文的理解,从而生成更准确的预测。

什么是‘注意力机制’(Attention Mechanism),它如何在语言模型中起作用?

-注意力机制是一种操作,可以使模型在处理文本时考虑所有词语之间的关系,从而更好地理解词语在上下文中的含义。例如,‘bank’这个词在不同上下文中可能指代‘银行’或‘河岸’,注意力机制有助于根据上下文来调整词语的意义。

为什么大型语言模型的参数如此重要?

-大型语言模型的行为完全由其参数(或称为权重)决定。这些参数决定了模型对下一个单词的预测概率。在训练过程中,通过不断调整这些参数,模型能够逐渐提高预测准确性,甚至可以生成对未见过的文本的合理预测。

在训练语言模型时,如何使用反向传播算法(Backpropagation)?

-反向传播算法通过比较模型预测的结果与实际的正确答案,逐步调整模型参数。每次调整都让模型在未来的预测中变得更准确。通过不断进行这种调整,模型能够提高其预测的准确性。

强化学习在人类反馈中的作用是什么?

-强化学习通过人类反馈来进一步优化模型。工作人员会标记不合适或有问题的预测,并提供修改意见,这些反馈会帮助模型在未来做出更符合用户期望的预测。

为何需要使用专用的GPU来训练大型语言模型?

-GPU(图形处理单元)能够并行处理大量运算,这对训练大型语言模型至关重要。由于训练过程中需要进行数以万亿次的计算,GPU的并行处理能力使得这些运算能够更高效地执行。

为什么大型语言模型在完成任务时每次的回答可能不同?

-虽然语言模型本身是确定性的,但每次生成的答案可能会有所不同,这是因为模型在预测下一个单词时会选择不同的可能性,部分基于随机性。这种随机性使得模型的回答更加自然和多样。

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

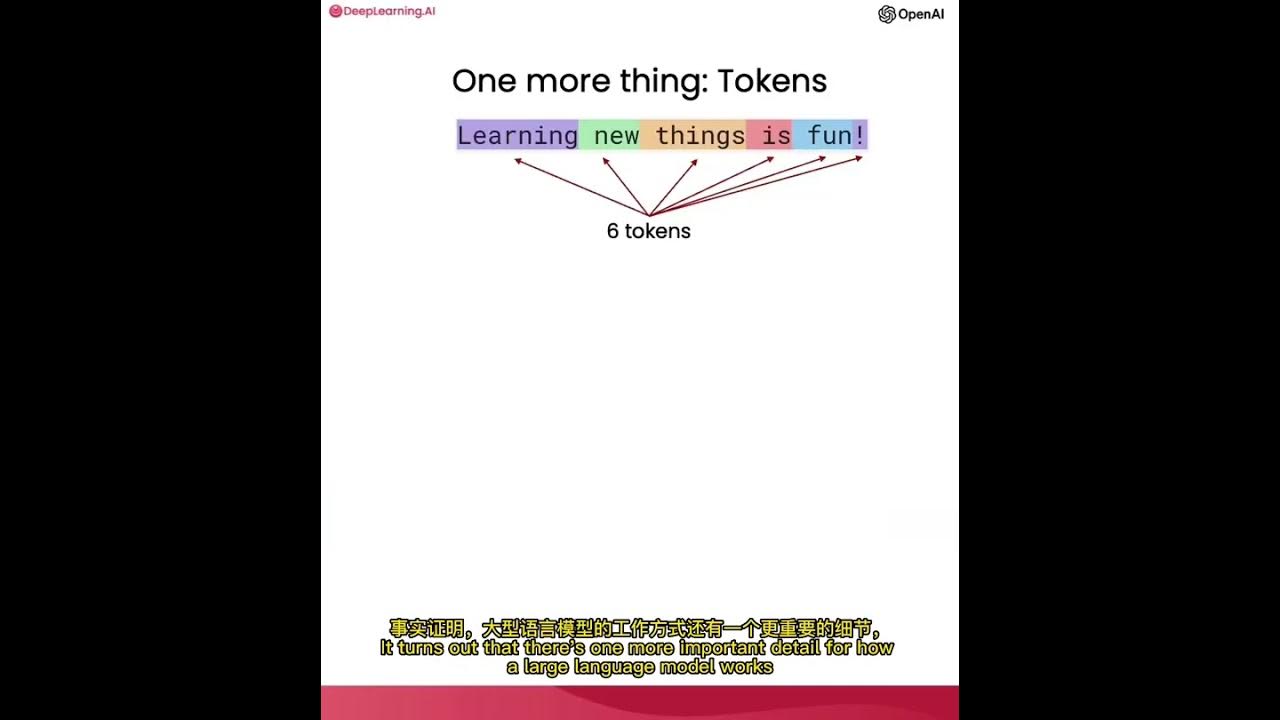

使用ChatGPT API构建系统1——大语言模型、API格式和Token

Understand DSPy: Programming AI Pipelines

[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)

"VoT" Gives LLMs Spacial Reasoning AND Open-Source "Large Action Model"

【人工智能】万字通俗讲解大语言模型内部运行原理 | LLM | 词向量 | Transformer | 注意力机制 | 前馈网络 | 反向传播 | 心智理论

【生成式AI】ChatGPT 可以自我反省!

Trying to make LLMs less stubborn in RAG (DSPy optimizer tested with knowledge graphs)

5.0 / 5 (0 votes)