"VoT" Gives LLMs Spacial Reasoning AND Open-Source "Large Action Model"

Summary

TLDR微软发布了一个开源的大型动作模型,名为Pi win助手,它利用自然语言控制Windows环境中的应用程序。该技术基于一篇名为“可视化思维激发空间推理和大型语言模型”的白皮书,该白皮书介绍了如何通过可视化思维提示(VOT)来增强大型语言模型的空间推理能力。空间推理是大型语言模型历史上表现不佳的一个领域,但这项研究表明,通过可视化思维提示,可以显著提高模型在空间推理任务中的表现。Pi win助手展示了如何通过自然语言指令执行复杂的任务,如在Twitter上发帖或在网页上导航,而无需任何视觉上下文。这项技术不仅展示了大型语言模型的新可能性,也揭示了它们在空间推理方面的潜力。

Takeaways

- 📄 微软发布了一篇研究论文和开源项目,展示了如何通过自然语言控制Windows环境中的应用程序。

- 🤖 该技术被称为“可视化思维”(Visualization of Thought),旨在提升大型语言模型的空间推理能力。

- 🧠 空间推理是指在三维或二维环境中想象不同对象之间的关系,这是大型语言模型历史上表现不佳的一个领域。

- 🚀 通过“可视化思维”提示技术,大型语言模型能够创建内部心理图像,从而增强其空间推理能力。

- 🔍 该技术通过零样本提示而非少量样本演示或图像到文本的可视化,来评估空间推理的有效性。

- 📈 通过可视化思维提示,大型语言模型在自然语言导航、视觉导航和视觉铺砖等任务中取得了显著的性能提升。

- 📊 实验结果显示,使用可视化思维提示的大型语言模型在多个任务中的表现优于未使用可视化的模型。

- 📝 该技术通过在每个推理步骤后可视化状态,生成推理路径和可视化,从而增强了模型的空间感知能力。

- 📚 论文中提到,尽管可视化思维提示技术有效,但它依赖于先进的大型语言模型的能力,可能在较不先进的模型或更具挑战性的任务中导致性能下降。

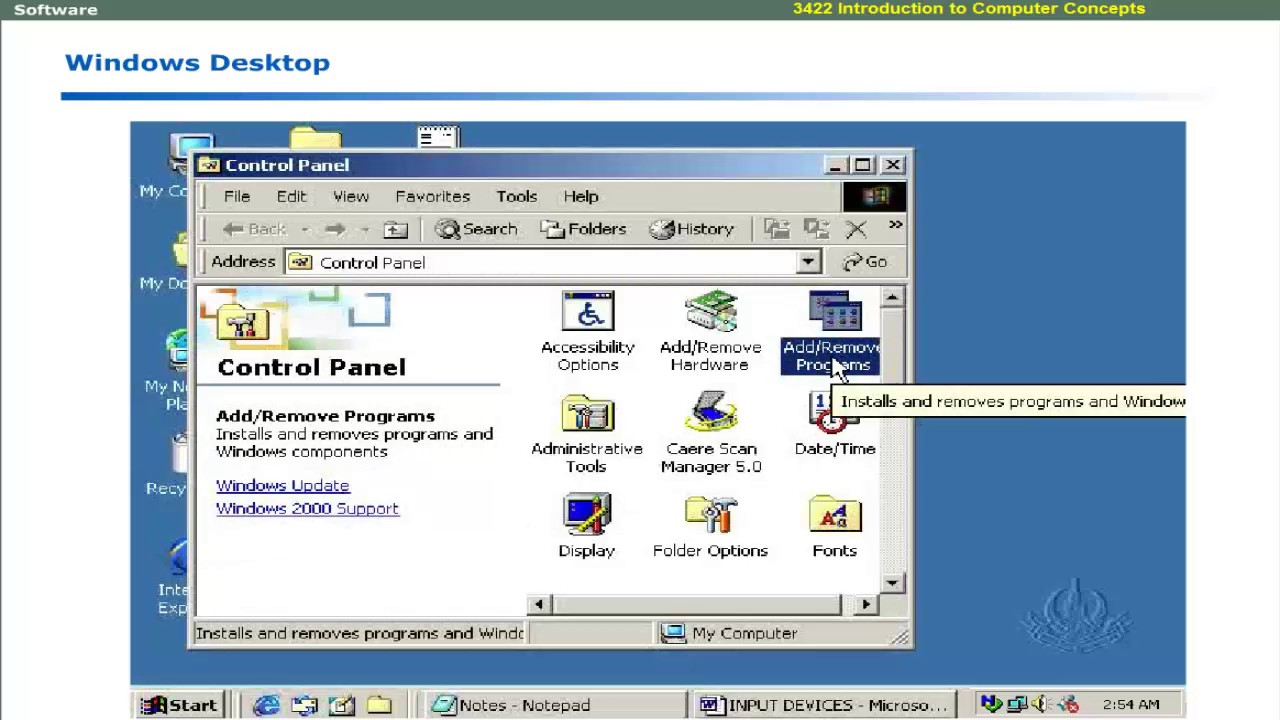

- 🌐 微软的开源项目名为Pi win assistant,是第一个开源的大型动作模型,能够仅通过自然语言控制完全的人类用户界面。

- 💡 Pi win assistant展示了如何将可视化思维提示技术应用于实际的Windows环境控制,如打开浏览器、导航到特定网站、输入文本等。

- 🔗 对于想要深入了解这项技术的人,可以通过阅读微软的研究论文或尝试使用Pi win assistant开源项目来获取更多信息。

Q & A

什么是空间推理?

-空间推理是指在三维或二维环境中,对不同对象之间的关系进行视觉化的能力。它是人类认知的一个重要方面,涉及到对物体、它们的动作和交互之间空间关系的理解和推理。

为什么空间推理对于大型语言模型来说是一个挑战?

-空间推理对于大型语言模型来说是一个挑战,因为这些模型传统上在处理需要视觉化或空间操作的任务时表现不佳。它们通常只能处理文本语言,而空间推理需要能够像人类一样在“心灵的眼睛”中创建和操作视觉图像。

微软发布的开源项目是什么,它如何与空间推理相关?

-微软发布的开源项目名为 Pi win Assistant,它是第一个开源的大型行动模型,专门用于通过自然语言控制完全由人类使用的界面。这个项目使用了论文中提到的“思维可视化”技术,通过在每一步中可视化推理过程,来增强模型的空间推理能力。

什么是“思维可视化”(Visualization of Thought, VOT)?

-“思维可视化”是一种提示技术,它要求大型语言模型在处理任务的每一步中都生成一个内部的视觉空间草图,以此来可视化其推理步骤,并指导后续步骤。这种方法可以帮助模型更好地进行空间推理。

在测试中使用了哪些任务来评估空间推理能力?

-在测试中使用了三种需要空间意识的任务来评估大型语言模型的空间推理能力,包括自然语言导航、视觉导航和视觉铺砌。这些任务通过设计二维网格世界和使用特殊字符作为输入格式,来挑战模型的空间推理能力。

Pi win Assistant 如何在 Windows 环境中控制应用程序?

-Pi win Assistant 通过自然语言处理用户的指令,然后自动执行一系列的操作来控制 Windows 环境中的应用程序。例如,它可以打开 Firefox 浏览器,导航到 YouTube,搜索特定的内容,或者在 Twitter 上发布新的帖子。

为什么 Pi win Assistant 需要在每一步中可视化?

-在每一步中可视化是为了提供推理过程的可追溯性,类似于“思维链”(Chain of Thought)的概念。这不仅可以帮助模型更准确地执行任务,还可以让开发者或用户理解模型是如何一步步到达最终结果的。

Pi win Assistant 的性能如何,它在哪些方面表现出色?

-根据测试结果,使用 VOT 提示技术(即在每一步中可视化)的 GPT-4 在所有任务中都表现出色,特别是在路线规划、下一步预测、视觉铺砌和自然语言导航等方面,其成功率和完成率都显著高于未使用可视化技术的模型。

Pi win Assistant 有什么局限性?

-Pi win Assistant 的局限性在于它依赖于先进的大型语言模型的能力,因此在性能较差的语言模型或者更复杂的任务上可能会导致性能下降。此外,它需要能够理解和处理自然语言描述的二维空间,而不是直接处理图形或图像。

如何获取并使用 Pi win Assistant?

-Pi win Assistant 是一个开源项目,用户可以从相关的开源社区或微软的官方网站获取源代码。根据提供的文档和指南,用户可以下载、安装并在本地环境中使用 Pi win Assistant。

除了控制 Windows 环境,Pi win Assistant 是否可以扩展到其他操作系统?

-虽然 Pi win Assistant 目前是为 Windows 环境设计的,但其基于自然语言控制接口的核心概念理论上可以扩展到其他操作系统。这将需要对不同操作系统的用户界面和控件进行适配和开发。

Pi win Assistant 的未来发展方向可能是什么?

-Pi win Assistant 的未来发展方向可能包括提高模型的空间推理能力,扩展到更多的操作系统和应用程序,以及增强用户交互体验。此外,它还可以被集成到更复杂的自动化系统中,或者用于辅助视觉障碍人士使用计算机。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Understand DSPy: Programming AI Pipelines

Introduction to windows | computer software language learning | Computer Education for All

I Forked Bolt.new and Made it WAY Better

Optimization of LLM Systems with DSPy and LangChain/LangSmith

OpenAI unveils new AI model and desktop version of ChatGPT

[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)

5.0 / 5 (0 votes)