Complete Azure Data Factory CI/CD Process (DEV/UAT/PROD) with Azure Pipelines

Summary

TL;DR: This video provides a comprehensive guide on deploying Azure Data Factory (ADF) using Azure Pipelines, covering the UAT and production environments. It explains the process of configuring pre-deployment and post-deployment steps, including the use of Azure Resource Manager (ARM) templates and PowerShell scripts. Key points include managing environment-specific parameters, setting up service connections, and performing incremental deployments. The video also emphasizes the approval process, resource cleanup, and the importance of securing sensitive data through encrypted variables, offering a streamlined method for deploying ADF code effectively.

Takeaways

- 😀 Pre-deployment steps stop the triggers and leave the cleanup option set to 'false', so no resources are deleted before the new code has been deployed.

- 😀 The Azure Resource Manager (ARM) deployment task is crucial for deploying code to UAT and production environments using Azure service connections and service principals.

- 😀 Conditional logic in the pipeline ensures that the correct subscription ID is used based on the environment (UAT or PROD) by referencing encrypted variables.

- 😀 ADF deployments are incremental: resources defined in the template are created or updated, and resources that are absent from the template are left in place rather than removed, preserving the current state of the data factory.

- 😀 After deployment, a cleanup phase ensures that outdated resources (like pipelines or triggers) are deleted from Azure Data Factory to maintain a clean state.

- 😀 Manual approvals are set in the pipeline to confirm deployment to UAT and production environments, ensuring controlled releases.

- 😀 The pipeline automatically downloads the necessary artifact (like ARM templates and PowerShell scripts) before starting the deployment process.

- 😀 ADF triggers are stopped during the deployment process to avoid any interference while the code is being deployed and resources are updated.

- 😀 Azure service principals with appropriate RBAC (Role-Based Access Control) permissions are required to deploy code successfully to both UAT and production environments.

- 😀 The deployment process uses encrypted secrets (e.g., subscription IDs and tenant information) to securely manage sensitive data during the pipeline execution.

- 😀 The pipeline script optionally uses template overrides for parameters instead of relying on external parameter files, offering flexibility in how deployment parameters are handled.

Q & A

What is the purpose of the pre-deployment phase in the pipeline?

- The pre-deployment phase ensures that no unnecessary resources are deleted before the actual code deployment. It stops triggers to prevent any interruptions during deployment and ensures that the environment is prepared correctly before pushing new code.
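The pre-deployment behaviour described above can be pictured with a small in-memory sketch. It does not call Azure; the `Trigger` class, the state strings, and the function name are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Trigger:
    """Hypothetical stand-in for an ADF trigger (not an Azure SDK type)."""
    name: str
    state: str  # "Started" or "Stopped"


def pre_deployment(triggers, delete_resources=False):
    """Stop every running trigger; delete nothing unless explicitly asked.

    Returns (names of triggers that were stopped, names of deleted resources).
    """
    if delete_resources:
        # In the process described above, cleanup is left to post-deployment.
        raise ValueError("cleanup belongs to the post-deployment step")

    stopped = []
    for trigger in triggers:
        if trigger.state == "Started":
            trigger.state = "Stopped"
            stopped.append(trigger.name)
    return stopped, []


triggers = [Trigger("tr_daily", "Started"), Trigger("tr_adhoc", "Stopped")]
stopped, deleted = pre_deployment(triggers)
print(stopped, deleted)
```

Returning the list of stopped triggers makes it easy for a later step to know which ones were running before the release.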

How does the Azure Resource Manager (ARM) deployment task function in the pipeline?

- The ARM deployment task is used to deploy resources, like Azure Data Factory and Key Vault, to specified resource groups (e.g., UAT and production). It uses a service connection with a service principal that has permission to deploy to the resource groups. The deployment process uses ARM templates and parameter files to ensure consistency.
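Because the deployment runs in incremental mode, it helps to see what that means for resources that already exist. The following is a toy model, not the ARM engine: resources are represented as a plain dictionary of name to version, and both function names are hypothetical. Complete mode is shown only for contrast.

```python
def deploy_incremental(existing, template):
    """Incremental mode: add or update what the template defines,
    leave everything else in the resource group untouched."""
    result = dict(existing)
    result.update(template)
    return result


def deploy_complete(existing, template):
    """Complete mode, for contrast: anything not in the template is removed."""
    return dict(template)


existing = {"pl_old": "v1", "pl_load": "v1"}
template = {"pl_load": "v2", "pl_new": "v1"}

after = deploy_incremental(existing, template)
print(after)  # pl_old is still present, pl_load is updated, pl_new is added
```

This is why a separate cleanup step is needed after an incremental deployment: `pl_old` survives even though it is no longer part of the code.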

What is the role of encrypted variables in the pipeline?

- Encrypted variables store sensitive information, such as subscription IDs and keys, in a secure way. These variables are used in the pipeline to ensure that sensitive data is not exposed in logs or code while maintaining the security of the deployment process.
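As a rough illustration of keeping secrets out of logs, the sketch below reads a value from an environment-style mapping and masks it before printing. It assumes the secret has been mapped into a variable named `UAT_SUBSCRIPTION_ID`; that name, the sample value, and both helper functions are hypothetical and only mimic the masking a pipeline applies.

```python
import os


def read_secret(name, env=None):
    """Fetch a secret from the environment (or a supplied mapping)."""
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        raise KeyError(f"secret variable {name!r} is not set")
    return value


def mask(message, secrets):
    """Replace every occurrence of a secret value with '***'."""
    for secret in secrets:
        if secret:
            message = message.replace(secret, "***")
    return message


# Hypothetical value, passed in explicitly so the sketch runs anywhere.
fake_env = {"UAT_SUBSCRIPTION_ID": "0000-1111-2222"}
subscription_id = read_secret("UAT_SUBSCRIPTION_ID", fake_env)
print(mask(f"Deploying to subscription {subscription_id}", [subscription_id]))
```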

Why are approval gates used in the pipeline for UAT and production deployments?

- Approval gates are added to ensure that a manual approval step occurs before deploying to UAT or production environments. This helps control the deployment process and prevent accidental or unauthorized deployments, ensuring that code changes are thoroughly reviewed before going live.

What is the purpose of the 'if' condition when selecting the subscription ID?

- The 'if' condition determines which environment (UAT or production) the pipeline is targeting. It checks the environment parameter passed to the pipeline, then selects the appropriate subscription ID and template parameters file, ensuring that the correct configuration is used for each environment.
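The selection logic can be sketched as a plain function. This is an illustration of the branching only, not the pipeline's actual syntax; the variable names and parameter file names below are hypothetical.

```python
def select_target(environment, secrets):
    """Pick the subscription ID and parameters file for one environment.

    `secrets` stands in for the pipeline's encrypted variables.
    """
    env = environment.upper()
    if env == "UAT":
        return {
            "subscription_id": secrets["UAT_SUBSCRIPTION_ID"],  # hypothetical name
            "parameters_file": "uat.parameters.json",           # hypothetical name
        }
    if env == "PROD":
        return {
            "subscription_id": secrets["PROD_SUBSCRIPTION_ID"],  # hypothetical name
            "parameters_file": "prod.parameters.json",           # hypothetical name
        }
    # Fail loudly rather than deploy to a default subscription by accident.
    raise ValueError(f"unknown environment: {environment!r}")


secrets = {"UAT_SUBSCRIPTION_ID": "uat-sub", "PROD_SUBSCRIPTION_ID": "prod-sub"}
print(select_target("uat", secrets))
```

Raising an error for an unrecognised environment is a design choice of this sketch: it avoids silently falling back to one subscription when the parameter is mistyped.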

How does the pipeline handle deleting resources that no longer exist in the new code?

- After deployment, the pipeline checks for any resources that are no longer present in the updated code. If resources (like pipelines or triggers) that were previously deployed no longer exist in the new code, they are automatically deleted to keep the environment clean and avoid orphaned resources.
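At its core, this check is a set difference between what is deployed and what the new code defines. A minimal sketch, with hypothetical resource names and a hypothetical function name:

```python
def find_orphans(deployed_names, template_names):
    """Return resources that exist in the factory but not in the new code."""
    return sorted(set(deployed_names) - set(template_names))


deployed = ["pl_load", "pl_legacy", "tr_daily", "tr_legacy"]
in_new_code = ["pl_load", "tr_daily"]

for name in find_orphans(deployed, in_new_code):
    print(f"would delete {name}")
```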

What happens after the Azure Data Factory resources are deployed?

- Once the resources are deployed, the pipeline runs a post-deployment cleanup phase. It restarts ADF triggers and deletes any resources that shouldn't be there anymore, ensuring the system is only using the latest configuration and removing any outdated or unnecessary items.

What is the advantage of using ARM templates over inline code overrides?

- ARM templates provide a more structured and consistent way to deploy resources across environments. They are easier to maintain, version, and manage, especially in large projects. Inline overrides can be used in specific cases for quick adjustments, but templates ensure greater scalability and repeatability in deployments.
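To make the relationship between parameter files and inline overrides concrete, the sketch below reads a document in the ARM parameters-file layout (a top-level `parameters` object whose entries each carry a `value`) and applies overrides on top. The parameter names, values, and function name are made up for illustration.

```python
import json

# Hypothetical parameters file content in the ARM parameters-file layout.
PARAMETERS_FILE = """
{
  "contentVersion": "1.0.0.0",
  "parameters": {
    "factoryName": {"value": "adf-dev"},
    "keyVaultUrl": {"value": "https://kv-dev.example"}
  }
}
"""


def effective_parameters(parameters_file_text, overrides):
    """Combine file values with inline overrides; overrides win."""
    document = json.loads(parameters_file_text)
    values = {
        name: entry["value"]
        for name, entry in document.get("parameters", {}).items()
    }
    values.update(overrides)
    return values


print(effective_parameters(PARAMETERS_FILE, {"factoryName": "adf-uat"}))
```

The file remains the versioned baseline, while the override changes a single value for one run.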

How is the service principal used in the pipeline?

- A service principal is used in the pipeline as part of an Azure Resource Manager (ARM) connection. It is configured with the necessary permissions, typically the Contributor role, to deploy resources to the UAT and production environments. This ensures that the pipeline can authenticate and deploy resources securely without requiring user credentials.

What is the significance of the post-deployment phase?

- The post-deployment phase is crucial for cleaning up any unnecessary resources that were part of the previous deployment but are not part of the new code. It also restarts ADF triggers, ensuring that the system is in a stable state after deployment and only the relevant resources remain active.
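Putting the phases in order, a whole release can be simulated in a few lines. This is a sketch under simplifying assumptions: resources are a dictionary of name to version, trigger states are a separate dictionary, and only triggers that were running before the release and still exist afterwards are restarted. The function and resource names are hypothetical.

```python
def run_release(resources, trigger_states, template):
    """Simulate stop triggers -> incremental deploy -> cleanup -> restart."""
    log = []
    states = dict(trigger_states)

    # 1. Pre-deployment: stop running triggers, delete nothing.
    running = [name for name, state in states.items() if state == "Started"]
    for name in running:
        states[name] = "Stopped"
    log.append(f"stopped {len(running)} trigger(s)")

    # 2. Incremental deployment: update or add, never remove.
    merged = {**resources, **template}
    log.append(f"deployed {len(template)} resource(s)")

    # 3. Post-deployment cleanup: drop what the new code no longer defines.
    orphans = sorted(set(merged) - set(template))
    for name in orphans:
        del merged[name]
        states.pop(name, None)
    log.append(f"deleted {len(orphans)} orphan(s)")

    # 4. Post-deployment: restart surviving triggers that were running.
    restarted = [name for name in running if name in merged]
    for name in restarted:
        states[name] = "Started"
    log.append(f"restarted {len(restarted)} trigger(s)")

    return merged, states, log


resources = {"pl_load": "v1", "pl_old": "v1", "tr_daily": "v1", "tr_old": "v1"}
trigger_states = {"tr_daily": "Started", "tr_old": "Started"}
template = {"pl_load": "v2", "tr_daily": "v1"}

merged, states, log = run_release(resources, trigger_states, template)
print("\n".join(log))
```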

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

DP-203: 11 - Dynamic Azure Data Factory

#16. Different Activity modes - Success , Failure, Completion, Skipped |AzureDataFactory Tutorial |

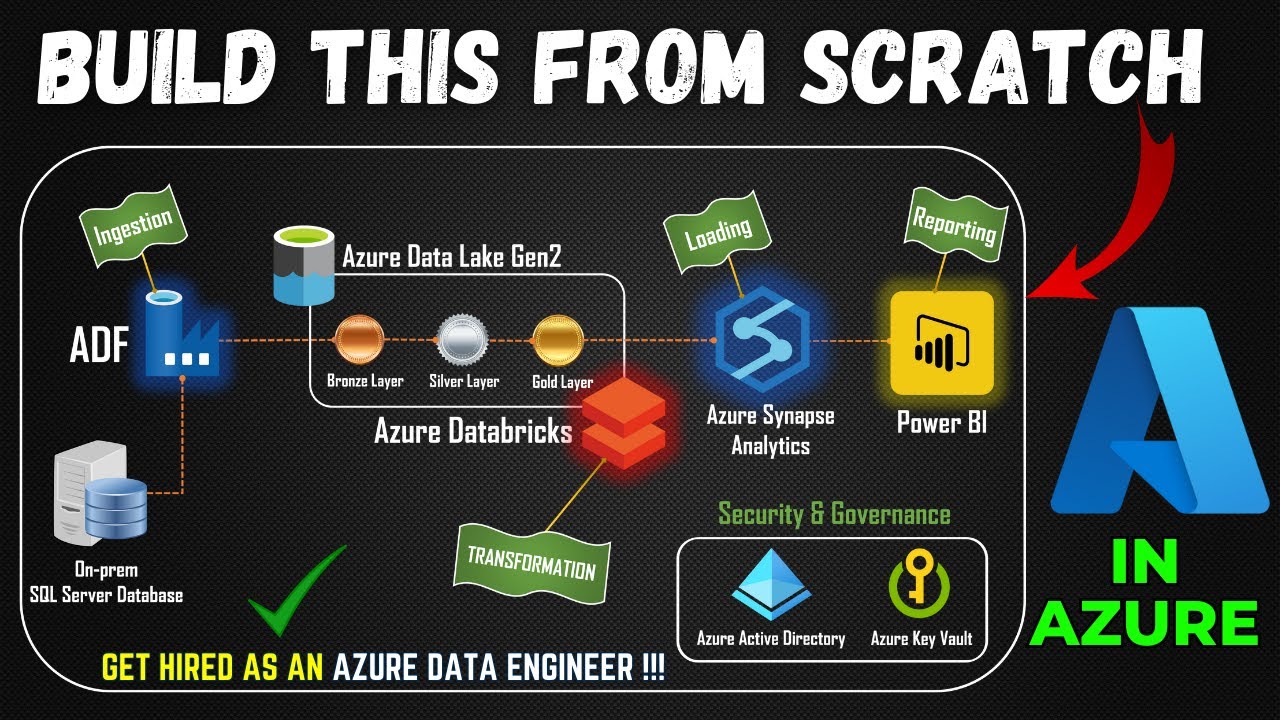

Part 1- End to End Azure Data Engineering Project | Project Overview

Big Data Engineer Mock Interview | Real-time Project Questions | Amount of Data | Cluster Size

How to refresh Power BI Dataset from a ADF pipeline? #powerbi #azure #adf #biconsultingpro

Azure Data Factory Part 3 - Creating first ADF Pipeline

5.0 / 5 (0 votes)