HTTP Request Chunking

Summary

TLDR: The video discusses how to work around HTTP request size limits using request chunking. It explains how splitting a large file into chunks for upload avoids the 413 Content Too Large error. The server groups chunks by an upload ID, stores each one, and completes the upload only when every chunk has been received. Chunks can be uploaded in parallel, and the scheme still works when uploads are spread across multiple servers. Chunking helps overcome size limits, keeps server resource usage predictable, and makes large file uploads more efficient.

Takeaways

- 🚫 HTTP servers like Nginx limit request sizes (e.g., 1MB), resulting in a 413 Content Too Large error if exceeded.

- 🛠️ To stay within size limits, a file upload can be split into smaller chunks, each sent as a separate request below the limit.

- 🔢 A unique uploadId is necessary to group all file chunks together on the server.

- 📏 Information like chunkId, chunkSize, and total fileSize must be included with each chunk for accurate tracking.

- 📥 Each chunk is stored separately on the server, which responds with an 'accepted' status until the full file has been uploaded.

- 📦 Once all chunks are received, the server combines them and returns a blobId, indicating successful upload completion.

- 🚀 Chunks can be uploaded in parallel, but the server must handle potential out-of-order arrivals efficiently.

- 🗂️ With multiple servers, a shared state store and shared chunk storage let any instance accept any chunk of an upload.

- 🗺️ The state store tracks chunk completion via a bitmap, ensuring the entire file is processed once all chunks are received.

- ⚡ Chunking keeps each request under server size limits, enables parallel uploads, and lets the work be spread across multiple servers, which improves performance.
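
Before any chunk is sent, the client has to decide how the file will be split. The following Python sketch shows one way to do that, assuming a fixed nominal chunk size where only the last chunk may be shorter. The names UploadPlan and plan_upload are hypothetical, and generating the uploadId on the client with uuid4 is an assumption (the server could just as well issue it).

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class UploadPlan:
    upload_id: str                        # groups every chunk of one file on the server
    file_size: int                        # total size of the file in bytes
    chunk_size: int                       # nominal chunk size; only the last chunk may be smaller
    ranges: tuple[tuple[int, int], ...]   # (start, end) byte offsets, end exclusive


def plan_upload(file_size: int, chunk_size: int) -> UploadPlan:
    """Decide how a file of file_size bytes is split before any chunk is sent."""
    if chunk_size <= 0 or file_size < 0:
        raise ValueError("chunk_size must be positive and file_size non-negative")
    total_chunks = -(-file_size // chunk_size)  # integer ceiling division
    ranges = tuple(
        (i * chunk_size, min((i + 1) * chunk_size, file_size))
        for i in range(total_chunks)
    )
    # Assumption: the client generates the uploadId itself.
    return UploadPlan(str(uuid.uuid4()), file_size, chunk_size, ranges)


plan = plan_upload(2_500_000, 1_048_576)   # a ~2.5 MB file split into 1 MiB chunks
print(len(plan.ranges), plan.ranges[-1])   # 3 (2097152, 2500000)
```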

Q & A

What happens if the body size of an HTTP request exceeds the server's limit?

-If the body size of an HTTP request exceeds the server's limit, the server will return a 413 Content Too Large response.
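
The rule is a plain size comparison. Here is a minimal sketch, assuming the server trusts the declared Content-Length and uses the 1 MiB limit mentioned in the takeaways. status_for_body is a hypothetical helper, not an Nginx API.

```python
MAX_BODY_BYTES = 1024 * 1024  # 1 MiB, the Nginx default mentioned in this summary


def status_for_body(content_length: int, limit: int = MAX_BODY_BYTES) -> int:
    """Return the status a size-limited server would send for a declared body size."""
    if content_length > limit:
        return 413  # Content Too Large: the body is rejected
    return 200      # within the limit: the request is processed normally


print(status_for_body(512 * 1024))        # 200
print(status_for_body(5 * 1024 * 1024))   # 413
```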

What is the default body size limit for Nginx, and can it be changed?

-The default body size limit for Nginx is 1MB, set by the client_max_body_size directive. The limit can be raised, but doing so may negatively affect server performance.

How can request chunking help in handling large file uploads?

-Request chunking helps by splitting large files into smaller chunks, allowing the server to process them incrementally without exceeding the size limit, improving overall performance.
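
On the client side, splitting does not require loading the whole file into memory. The sketch below uses a hypothetical iter_chunks helper that reads a binary stream one chunk at a time and numbers each piece:

```python
import io
from typing import BinaryIO, Iterator


def iter_chunks(stream: BinaryIO, chunk_size: int) -> Iterator[tuple[int, bytes]]:
    """Yield (chunk_id, data) pairs, reading at most chunk_size bytes at a time."""
    chunk_id = 0
    while True:
        data = stream.read(chunk_size)  # buffered binary streams return chunk_size bytes until EOF
        if not data:                    # b"" signals end of stream
            return
        yield chunk_id, data
        chunk_id += 1


# A real client would pass open(path, "rb"); BytesIO keeps the example self-contained.
for chunk_id, data in iter_chunks(io.BytesIO(b"x" * 10), 4):
    print(chunk_id, len(data))  # 0 4, then 1 4, then 2 2
```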

What additional information must be sent along with each chunk during a chunked file upload?

-Along with each chunk, information such as an uploadId to group the chunks, a chunkId, chunkSize, and the total file size must be sent so the server can identify and assemble the chunks.
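
The video does not specify how this metadata travels with each chunk. One option, sketched here as an assumption, is to put it in request headers. The header names (Upload-Id, Chunk-Id, Chunk-Size, File-Size), the PUT method, and build_chunk_request are all hypothetical. urllib.request.Request is the standard-library class for building, but not yet sending, an HTTP request.

```python
import urllib.request


def build_chunk_request(url: str, upload_id: str, chunk_id: int, chunk_size: int,
                        file_size: int, data: bytes) -> urllib.request.Request:
    """Build (without sending) a PUT request that carries one chunk and its metadata."""
    headers = {
        # Hypothetical header names: the video does not define a wire format.
        "Upload-Id": upload_id,          # groups all chunks of one file
        "Chunk-Id": str(chunk_id),       # position of this chunk within the file
        "Chunk-Size": str(chunk_size),   # nominal chunk size; the last chunk may be shorter
        "File-Size": str(file_size),     # total bytes across all chunks
        "Content-Type": "application/octet-stream",
    }
    return urllib.request.Request(url, data=data, headers=headers, method="PUT")


req = build_chunk_request("https://example.com/uploads", "upload-123", 0, 4, 10, b"abcd")
# Sending would be urllib.request.urlopen(req); omitted so the sketch runs offline.
```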

How does the server handle a file upload when chunks are received out of order?

-The server stores each chunk as it arrives, regardless of order, and tracks which chunks have been received. Once all chunks are received, the server assembles them and returns a blobId.
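
Out-of-order arrival is harmless when each stored chunk is keyed by its chunkId instead of being appended in arrival order. The following is a minimal in-memory sketch. It assumes dictionaries stand in for real chunk storage and that the 'accepted' response is HTTP 202 Accepted. UploadState and ChunkStore are hypothetical names, and assembly is left out.

```python
from dataclasses import dataclass, field


@dataclass
class UploadState:
    file_size: int
    chunk_size: int  # nominal size; only the last chunk may be smaller
    chunks: dict[int, bytes] = field(default_factory=dict)  # chunk_id -> bytes

    @property
    def total_chunks(self) -> int:
        return -(-self.file_size // self.chunk_size)  # integer ceiling division

    def is_complete(self) -> bool:
        return len(self.chunks) == self.total_chunks


class ChunkStore:
    """In-memory stand-in for server-side chunk storage (illustrative only)."""

    def __init__(self) -> None:
        self.uploads: dict[str, UploadState] = {}

    def receive(self, upload_id: str, chunk_id: int, chunk_size: int,
                file_size: int, data: bytes) -> int:
        state = self.uploads.get(upload_id)
        if state is None:  # first chunk seen for this upload, whichever chunk it is
            state = self.uploads[upload_id] = UploadState(file_size, chunk_size)
        state.chunks[chunk_id] = data  # keyed by chunk_id, so arrival order is irrelevant
        return 201 if state.is_complete() else 202  # 202 Accepted until every chunk is in


store = ChunkStore()
print(store.receive("u1", 2, 4, 10, b"ij"))    # 202
print(store.receive("u1", 0, 4, 10, b"abcd"))  # 202
print(store.receive("u1", 1, 4, 10, b"efgh"))  # 201
```

Because chunks are stored by chunkId, a retried chunk overwrites its earlier copy and is not counted twice.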

What happens when the final chunk of a file is uploaded to the server?

-When the final chunk is uploaded, the server assembles the file, creates a blobId, and returns a 200 OK or 201 Created response, indicating the upload is complete.
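
Assembly concatenates the stored chunks in chunkId order. The video does not say how the blobId is generated. This sketch assumes it is a SHA-256 hash of the assembled content, which is only one possibility (a random identifier would also work). The assemble function is hypothetical.

```python
import hashlib


def assemble(chunks: dict[int, bytes], total_chunks: int) -> tuple[str, bytes]:
    """Join stored chunks in chunk_id order and derive a blobId for the result."""
    missing = [i for i in range(total_chunks) if i not in chunks]
    if missing:
        raise ValueError(f"upload incomplete, missing chunks: {missing}")
    blob = b"".join(chunks[i] for i in range(total_chunks))
    # Assumption: blobId = SHA-256 of the content; the video does not specify this.
    blob_id = hashlib.sha256(blob).hexdigest()
    return blob_id, blob


blob_id, blob = assemble({2: b"ij", 0: b"abcd", 1: b"efgh"}, total_chunks=3)
print(blob)  # b'abcdefghij'
```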

Can file chunks be uploaded in parallel, and what challenges does this present?

-Yes, file chunks can be uploaded in parallel. However, the server needs to handle out-of-order chunk arrivals and ensure all chunks are correctly tracked and stored.
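
On the server, the main difficulty with parallel uploads is that concurrent requests update the same tracking state. This sketch simulates parallel uploads using Python's concurrent.futures.ThreadPoolExecutor against a hypothetical in-process FakeServer that does no network I/O. A lock makes each "store chunk and check completeness" step atomic, so exactly one request observes completion.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeServer:
    """Hypothetical in-process stand-in for the upload endpoint; no network I/O."""

    def __init__(self, total_chunks: int) -> None:
        self.total_chunks = total_chunks
        self.received: dict[int, bytes] = {}
        self.arrival_order: list[int] = []  # may differ from the order chunks were submitted
        self._lock = threading.Lock()

    def put_chunk(self, chunk_id: int, data: bytes) -> int:
        with self._lock:  # store-and-check must be atomic under concurrent requests
            self.received[chunk_id] = data
            self.arrival_order.append(chunk_id)
            complete = len(self.received) == self.total_chunks
        return 201 if complete else 202


def upload_parallel(server: FakeServer, chunks: list[bytes], workers: int = 4) -> list[int]:
    """Send every chunk concurrently and return the statuses in chunk_id order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(server.put_chunk, i, c) for i, c in enumerate(chunks)]
        return [f.result() for f in futures]


data = bytes(range(10)) * 100  # 1,000 bytes of sample data
chunks = [data[i:i + 128] for i in range(0, len(data), 128)]
server = FakeServer(total_chunks=len(chunks))
statuses = upload_parallel(server, chunks)
print(statuses.count(201))  # 1: only the request that completes the set sees 201
```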

What is the purpose of a state store in a distributed system with multiple machines handling uploads?

-In a distributed system, a state store tracks each upload's progress. For every uploadId it keeps a bitmap of the chunks received so far and is used to validate incoming requests, so any machine can tell when the file is complete.
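
A bitmap suits this job because marking a chunk is a single bit operation and checking completeness is a single comparison. Below is a minimal single-process sketch that uses a Python integer as the bitmap. BitmapState is a hypothetical name. In a multi-server deployment the same record would have to live in a shared store so every instance sees it. Redis, for example, provides SETBIT and BITCOUNT for this kind of bitmap, though the video does not name a specific store.

```python
class BitmapState:
    """Hypothetical per-upload record a shared state store could hold."""

    def __init__(self, total_chunks: int) -> None:
        self.total_chunks = total_chunks
        self.bitmap = 0  # bit i is set once chunk i has been stored

    def mark_received(self, chunk_id: int) -> None:
        if not 0 <= chunk_id < self.total_chunks:  # validate before touching state
            raise ValueError(f"chunk_id {chunk_id} out of range")
        self.bitmap |= 1 << chunk_id  # idempotent: re-sending a chunk sets the same bit

    def is_complete(self) -> bool:
        return self.bitmap == (1 << self.total_chunks) - 1  # all low bits set

    def missing(self) -> list[int]:
        return [i for i in range(self.total_chunks) if not (self.bitmap >> i) & 1]


state = BitmapState(total_chunks=4)
for chunk_id in (3, 0, 2):  # chunks arriving out of order
    state.mark_received(chunk_id)
print(state.missing(), state.is_complete())  # [1] False
```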

How does chunking improve server performance aside from overcoming size limitations?

-Chunking improves server performance by allowing uploads to be processed in parallel, distributing the load across multiple machines, and ensuring requests have a consistent size, which optimizes resource usage.
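
The consistent-size point can be made concrete. However large the file, no single request body exceeds the chunk size, and the chunks can be spread across several machines. This sketch assumes a simple round-robin assignment of chunks to servers. That is a hypothetical policy, since a real load balancer may distribute requests differently. chunks_per_server is a hypothetical helper.

```python
from collections import Counter


def chunks_per_server(file_size: int, chunk_size: int, servers: int) -> dict[int, int]:
    """Count chunks per server under a round-robin assignment (hypothetical policy)."""
    total_chunks = -(-file_size // chunk_size)  # integer ceiling division
    return dict(Counter(i % servers for i in range(total_chunks)))


MiB = 1024 * 1024
# A 10 MiB file in 1 MiB chunks: every request body is at most 1 MiB.
print(chunks_per_server(10 * MiB, 1 * MiB, 3))  # {0: 4, 1: 3, 2: 3}
```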

What key information must be tracked to ensure a chunked file upload is successful?

-The server must track the uploadId, chunkId, chunkSize, and the total file size, as well as which chunks have been received to ensure the file can be correctly reassembled.
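
With that metadata, the server can reject inconsistent chunks before storing them. The following is a minimal validation sketch. It assumes chunkSize is the nominal size shared by every chunk except a possibly shorter last one. check_chunk is a hypothetical name.

```python
from typing import Optional


def check_chunk(chunk_id: int, chunk_size: int, file_size: int,
                body_len: int) -> Optional[str]:
    """Return an error message if the chunk metadata is inconsistent, else None."""
    if chunk_size <= 0 or file_size < 0:
        return "chunk_size must be positive and file_size non-negative"
    total_chunks = -(-file_size // chunk_size)  # integer ceiling division
    if not 0 <= chunk_id < total_chunks:
        return f"chunk_id {chunk_id} outside 0..{total_chunks - 1}"
    expected = min(chunk_size, file_size - chunk_id * chunk_size)  # last chunk may be short
    if body_len != expected:
        return f"chunk {chunk_id}: expected {expected} bytes, got {body_len}"
    return None


print(check_chunk(2, 4, 10, 2))  # None: the short final chunk is valid
print(check_chunk(1, 4, 10, 3))  # chunk 1: expected 4 bytes, got 3
```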

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

What are web servers and how do they work (with examples httpd and nodejs)

Basic concepts of web applications, how they work and the HTTP protocol

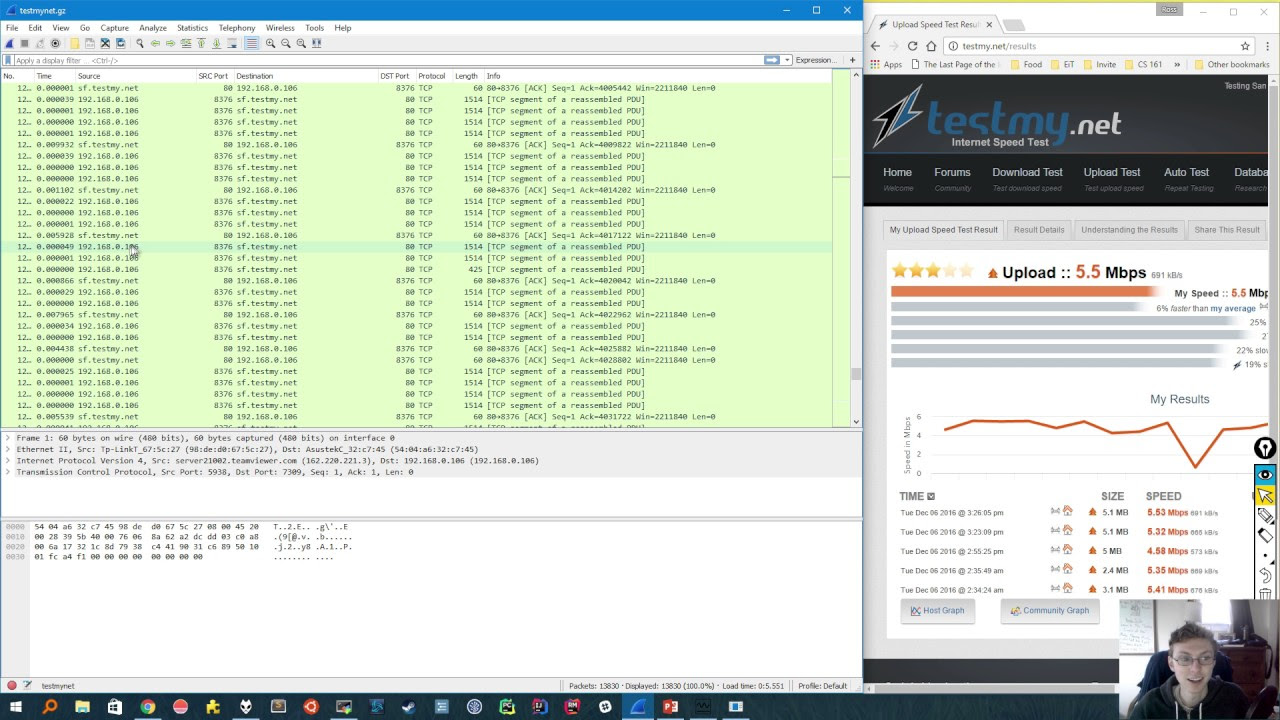

Intro to Wireshark: Basics + Packet Analysis!

5- شرح HTTP Protocol بالتفصيل | دورة اختبار اختراق تطبيقات الويب

#25 How to get selected endpoint | Understanding Routing | ASP.NET Core MVC Course

Polling vs WebSockets vs Socket.IO (Simple Explanation) - Chat App Part11

5.0 / 5 (0 votes)