The New Stack and Ops for AI

Summary

TLDR本次演讲介绍了如何将基于人工智能的应用程序从原型阶段过渡到生产阶段。演讲者Sherwin和Shyamal分别来自OpenAI的工程团队和应用团队,他们分享了构建用户友好体验、处理模型不一致性、通过评估迭代应用以及管理应用规模的策略。提出了包括控制不确定性、构建可信赖的用户体验、利用知识库和工具锚定模型、实施评估以及通过编排管理成本和延迟等概念。演讲强调了构建和维护大型语言模型(LLM)运营的新学科——LLM Ops的重要性。

Takeaways

- 😀 大型语言模型(LLM)从原型到生产的过程需要一个指导框架,帮助开发者和企业构建和维护基于模型的产品。

- 🚀 ChatGPT自2022年11月发布以来,已经从社交媒体上的玩具转变为企业和开发者试图集成到自己产品中的能力。

- 🔒 构建原型相对简单,但要将应用从原型阶段带入生产阶段,需要解决模型的非确定性本质带来的挑战。

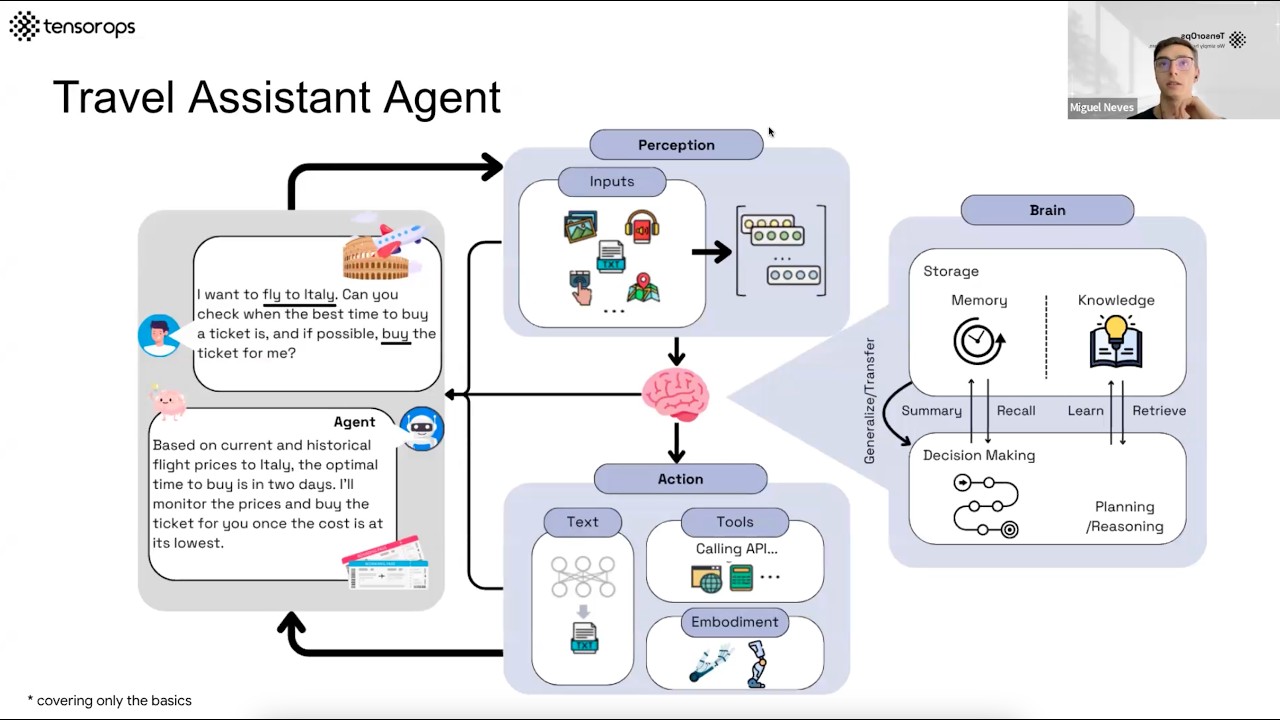

- 🛠️ 提供了一个由栈图组成的框架,包括构建用户体验、处理模型不一致性、迭代应用以及管理规模等方面。

- 👥 构建以人为中心的用户体验,通过控制不确定性和构建可操作性和安全性的护栏,来提高用户交互的质量。

- 🔄 通过模型级功能和知识库工具,如JSON模式和可复现输出,来解决模型的不一致性问题。

- 📝 使用评估来测试和监控模型性能,确保应用在部署过程中不会发生退化。

- 💡 通过语义缓存和路由到成本更低的模型等策略,来管理规模,减少延迟和成本。

- 🌐 介绍了LLM Ops(大型语言模型操作)的概念,作为应对使用LLM构建应用时所面临的独特挑战的新学科。

- 🛑 强调了在构建基于LLM的产品时,需要考虑的长期平台和专业知识,而不是一次性工具。

- 🌟 鼓励开发者和企业共同构建下一代助手和生态系统,探索和发现新的可能性。

Q & A

Stack and Ops for AI 是什么?

-Stack and Ops for AI 是一个关于如何将人工智能应用从原型阶段带入生产阶段的讨论,由 Sherwin 和 Shyamal 主持,他们分别来自 OpenAI Developer Platform 的工程团队和应用团队。

为什么从原型到生产阶段的转变很重要?

-从原型到生产阶段的转变很重要,因为原型阶段虽然可以快速展示创意,但在生产环境中需要考虑模型的一致性、用户体验、成本和扩展性等多种因素。

ChatGPT 是什么时候推出的?

-ChatGPT 是在2022年11月底推出的,至今尚未满一年。

GPT-4 的推出时间和它的特点是什么?

-GPT-4 是在2023年3月推出的,它是一个旗舰模型,推出至今不到八个月,它代表了人工智能模型的最新进展。

为什么说非确定性模型的扩展会感到困难?

-非确定性模型的扩展会感到困难,因为这些模型的输出具有随机性,难以预测,这使得在生产环境中保持一致性和可靠性成为一个挑战。

什么是知识库和工具(Knowledge Store and Tools)?

-知识库和工具是一种方法,通过向模型提供额外的事实信息来减少模型的不一致性,帮助模型在回答问题时有更可靠的信息来源。

模型级特性(Model-level features)有哪些?

-模型级特性包括 JSON 模式和可复现输出等,这些特性可以帮助开发者更好地控制模型的行为,提高输出的一致性。

什么是语义缓存(Semantic Caching)?

-语义缓存是一种技术,通过存储先前查询的响应来减少对 API 的调用次数,从而降低延迟和成本。

如何评价和测试 AI 应用的性能?

-可以通过创建针对特定用例的评估套件(Evals)来评价和测试 AI 应用的性能,这包括手动和自动评估方法,以及使用 AI 模型自身进行评估。

什么是 LLM Ops?

-LLM Ops,即 Large Language Model Operations,是一种新兴的实践,涉及工具和基础设施,用于端到端地管理大型语言模型的运维。

如何使用 GPT-4 创建训练数据集来微调 3.5 Turbo?

-可以使用 GPT-4 生成一系列提示和相应的输出,这些输出可以作为训练数据集,用于微调 3.5 Turbo,使其在特定领域的表现接近 GPT-4。

为什么需要在 AI 应用中建立用户信任?

-建立用户信任对于 AI 应用至关重要,因为它可以帮助用户理解 AI 的能力和局限性,确保用户在使用过程中获得安全、可靠的体验。

如何通过设计来控制 AI 应用中的不确定性?

-通过设计可以控制不确定性,例如通过保留人为干预的选项、提供反馈机制、透明地沟通系统的能力与限制,以及设计引导性的用户界面。

为什么说建立 guardrails 对于 AI 应用很重要?

-建立 guardrails 可以作为用户界面和模型之间的约束或预防性控制,目的是防止有害和不想要的内容到达应用,同时增加模型在生产中的可引导性。

如何使用检索系统(RAG 或向量数据库)来增强 AI 应用?

-通过使用检索系统,可以在用户查询到来时,先向检索服务发送请求,检索服务返回相关的信息片段,然后将这些信息与原始查询一起传递给 API,以生成更准确的响应。

自动化评估(Automated Evals)有哪些优势?

-自动化评估可以减少人为参与,快速监控进度和测试回归,使开发人员能够更专注于处理复杂的边缘情况,从而优化评估方法。

为什么说模型级特性和知识库的结合对于保持一致性很重要?

-模型级特性可以帮助约束模型行为,而知识库提供了额外的事实信息,两者结合可以显著减少模型的随机性和不确定性,提高应用在生产环境中的一致性。

如何通过微调来降低成本和提高性能?

-通过微调 GPT-3.5 Turbo,可以创建一个针对特定用例优化的模型版本,这不仅可以降低成本,还可以提高模型在特定任务上的性能。

什么是观察性(Observability)和追踪(Tracing)?

-观察性和追踪是 LLM Ops 的重要组成部分,它们帮助识别和调试提示链和助手的失败,加快生产环境中问题的处理速度,并促进不同团队之间的协作。

为什么说 LLM Ops 是应对构建 LLM 应用挑战的新兴领域?

-LLM Ops 作为一门新兴学科,提供了应对构建大型语言模型应用时所面临的独特挑战的实践、工具和基础设施,它正在成为许多企业架构和堆栈的核心组件。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

5.0 / 5 (0 votes)