Lecture 5.4 Advanced ERP Topics

Summary

TLDR

The video script covers extensions of basic linear discriminant analysis (LDA) for event-related potential (ERP) classification. It explains that LDA gives the same result on linearly transformed data, such as principal components or independent components, as on the raw data, provided the transformation loses no information. The script then explores applying LDA to ICA source time courses, which yields interpretable classifiers and makes it possible to add brain-informed constraints. It touches on alternative linear features, such as wavelet decompositions for pattern matching, and on sparsity in feature selection. The discussion extends to non-linear features and stresses that spatial filtering must come before non-linear feature extraction. It concludes with class imbalance, the area under the ROC curve as a performance measure, and classifiers optimized directly under more sophisticated cost functions.

Takeaways

- 📚 The script discusses extensions and tweaks to basic machine learning techniques to enhance their performance.

- 🔍 Linear Discriminant Analysis (LDA) provides the same result whether applied to raw data or its linear transformations, as long as no information is lost.

- 🌐 The script notes that Independent Component Analysis (ICA) is itself a linear transformation, so applying LDA to ICA components of EEG data leaves performance unchanged while changing what the weights refer to.

- 🔄 When LDA is applied to ICA components, it assigns weights to components rather than channels, which makes the classifier more interpretable.

- 🧠 The script suggests relating ICA components to source locations in the brain, which can inform constraints on which components the classifier may use.

- 📊 The speaker mentions the use of linear features like averages and wavelet decompositions for pattern matching in EEG data.

- 🔑 The concept of sparsity is introduced, where only a small number of coefficients contain the relevant information, which can be leveraged for classifier design.

- 💡 Non-linear features are also discussed, with a focus on the importance of applying non-linear transformations after linear spatial filtering.

- 📉 The script explains why non-linear features are hard to use on raw EEG channels: volume conduction mixes many sources into each channel, so a non-linear feature computed per channel blends their contributions.

- ⚖️ The importance of considering class imbalance and cost-sensitive classification is highlighted, with suggestions to use measures like the area under the ROC curve.

- 🛠️ The speaker recommends using classifiers that can be directly optimized for performance metrics such as the area under the curve, mentioning the support vector framework as an example.
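As a rough illustration of optimizing a classifier directly for AUC, the Python sketch below trains a linear scorer with a pairwise hinge loss, the idea behind ranking SVMs: every positive-negative pair that is not ranked with a margin of 1 is penalized, and AUC is exactly the fraction of correctly ranked pairs. The function names, the plain subgradient descent, the hyperparameters, and the synthetic data are assumptions for illustration, not the method from the lecture.

```python
import numpy as np

def auc(scores, labels):
    """Fraction of positive-negative pairs ranked correctly (ties count 1/2)."""
    pos, neg = scores[labels == 1], scores[labels == 0]
    diff = pos[:, None] - neg[None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size

def fit_pairwise_hinge(X, y, lam=0.01, lr=0.1, n_iter=500):
    """Linear scorer w trained with a RankSVM-style pairwise hinge loss (a convex surrogate for 1 - AUC)."""
    pos, neg = X[y == 1], X[y == 0]
    # One row per positive-negative pair: x_pos - x_neg
    D = (pos[:, None, :] - neg[None, :, :]).reshape(-1, X.shape[1])
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        active = D @ w < 1.0                          # pairs violating the ranking margin
        # Subgradient of lam/2 * ||w||^2 + mean(max(0, 1 - D @ w))
        grad = lam * w - D[active].sum(axis=0) / len(D)
        w -= lr * grad
    return w

rng = np.random.default_rng(0)
y = np.r_[np.zeros(40, dtype=int), np.ones(40, dtype=int)]
X = rng.normal(size=(80, 5))
X[y == 1, :2] += 2.0                                  # positives shifted in the first two features

w = fit_pairwise_hinge(X, y)
print(f"training AUC: {auc(X @ w, y):.3f}")
```

The number of pairs grows as (positives × negatives), so for large datasets one would sample pairs rather than enumerate them all.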

Q & A

What is the basic technique discussed in the script that is easy to comprehend but can be extended with tweaks and twists?

-The basic technique discussed is Linear Discriminant Analysis (LDA), which is a simple yet effective method for classification that can be enhanced with various modifications to improve its performance.

Why does applying LDA to linearly transformed data yield the same result as applying it to the original data?

-Because LDA is a linear method, an invertible linear transformation of the features can be absorbed into its weight vector: trained on the transformed data, LDA simply learns different weights and reaches the same performance, as long as no information is lost during the transformation.
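The invariance can be checked numerically. The Python sketch below assumes a textbook two-class LDA (pooled covariance, decision boundary at the midpoint of the class means) and synthetic Gaussian data. It trains LDA on features x and on transformed features z = A x, where a random invertible matrix A stands in for a PCA or ICA transform. The decision scores match, and the two weight vectors are related by w_x = A^T w_z.

```python
import numpy as np

def fit_lda(X, y):
    """Two-class LDA: w = Sigma^-1 (mu1 - mu0), boundary at the midpoint of the class means."""
    mu0, mu1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    sigma = centered.T @ centered / (len(X) - 2)      # pooled within-class covariance
    w = np.linalg.solve(sigma, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2
    return w, b

rng = np.random.default_rng(0)
n, d = 200, 8
y = np.repeat([0, 1], n)
X = rng.normal(size=(2 * n, d)) + 0.5 * y[:, None]   # class 1 has a shifted mean

A = rng.normal(size=(d, d))       # random linear transform, invertible with probability 1
Z = X @ A.T                       # each row is z = A x

w_x, b_x = fit_lda(X, y)
w_z, b_z = fit_lda(Z, y)

print(np.allclose(X @ w_x + b_x, Z @ w_z + b_z))   # True: identical decision scores
print(np.allclose(A.T @ w_z, w_x))                  # True: the weights are only re-expressed
```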

What is the difference between applying LDA after a linear versus a non-linear transformation of the data?

-Linear transformations, such as scaling or swapping channels, do not affect LDA's performance. Non-linear transformations, such as extracting the power of an oscillation, change the features themselves, so LDA applied to the raw data and LDA applied to the transformed data can give different results. This is why appropriate pre-processing and feature extraction matter.
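To see why a non-linear transformation is different, the Python sketch below simulates zero-mean epochs whose two classes differ only in amplitude, as an oscillation with stronger power would. LDA on the raw samples stays near chance because the class means are equal, while LDA on a single non-linear feature (mean squared amplitude, i.e. power) separates the classes well. The data, the epoch length, and the 50/50 train/test split are assumptions chosen for illustration.

```python
import numpy as np

def fit_lda(X, y):
    """Two-class LDA: w = Sigma^-1 (mu1 - mu0), boundary at the midpoint of the class means."""
    mu0, mu1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    sigma = centered.T @ centered / (len(X) - 2)
    w = np.linalg.solve(sigma, mu1 - mu0)
    return w, -w @ (mu0 + mu1) / 2

def accuracy(w, b, X, y):
    return np.mean((X @ w + b > 0) == (y == 1))

rng = np.random.default_rng(1)
n_per_class, n_samples = 500, 20
y = np.repeat([0, 1], n_per_class)
# Zero-mean epochs; class 1 has twice the amplitude (four times the power) of class 0
epochs = rng.normal(size=(2 * n_per_class, n_samples)) * np.where(y == 1, 2.0, 1.0)[:, None]

raw = epochs                                          # linear features: the samples themselves
power = np.mean(epochs ** 2, axis=1, keepdims=True)   # non-linear feature: mean power per epoch

idx = rng.permutation(len(y))
train, test = idx[: len(y) // 2], idx[len(y) // 2 :]
acc_raw = accuracy(*fit_lda(raw[train], y[train]), raw[test], y[test])
acc_power = accuracy(*fit_lda(power[train], y[train]), power[test], y[test])
print(f"LDA on raw samples: {acc_raw:.2f}, LDA on power: {acc_power:.2f}")
```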

How does applying LDA to Independent Component Analysis (ICA) components change the way weights are assigned?

-When LDA is trained on ICA components, it assigns a weight to every component of the signal rather than to every channel. This makes the classifier more interpretable, because each component can be associated with a specific signal source, such as blinks or muscle activity.
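A minimal Python sketch of reading a classifier in component space follows. It assumes an unmixing matrix is already available (here a random stand-in called W_unmix; in practice it would come from an ICA decomposition) and uses synthetic per-channel amplitude features. LDA on the component activations yields one weight per component, and the same classifier can be mapped back to channel weights as W_unmix^T v.

```python
import numpy as np

def fit_lda(X, y):
    """Two-class LDA: w = Sigma^-1 (mu1 - mu0), boundary at the midpoint of the class means."""
    mu0, mu1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    sigma = centered.T @ centered / (len(X) - 2)
    w = np.linalg.solve(sigma, mu1 - mu0)
    return w, -w @ (mu0 + mu1) / 2

rng = np.random.default_rng(2)
n_trials, n_ch = 300, 6
y = np.repeat([0, 1], n_trials // 2)
X = rng.normal(size=(n_trials, n_ch)) + 0.4 * y[:, None]   # synthetic per-channel ERP amplitudes

# Hypothetical stand-in for an ICA unmixing matrix (one row per component)
W_unmix = rng.normal(size=(n_ch, n_ch))
S = X @ W_unmix.T                     # component activations, one column per component

v, b = fit_lda(S, y)                  # one LDA weight per component
for k, weight in enumerate(v):
    print(f"component {k}: weight {weight:+.3f}")

w_channels = W_unmix.T @ v            # the same linear classifier expressed per channel
print(np.allclose(S @ v, X @ w_channels))   # True
```

Because the unmixing matrix is invertible here, the channel-space weights coincide with those of LDA trained directly on channels; what changes is only which quantities the weights are attached to.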

What is the advantage of using ICA in conjunction with LDA for EEG signal classification?

-ICA can provide source time courses that relate to the brain's activity, which can be used to inform constraints for LDA, such as excluding components from non-relevant brain regions. This enhances interpretability and allows for more targeted feature selection.
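One way to express such a brain-informed constraint is to train LDA only on components flagged as brain sources and give every other component a weight of exactly zero. In the Python sketch below, the component activations are synthetic, component 3 plays the role of a blink component that happens to correlate with the class, and the boolean mask is_brain is a hypothetical label set (in practice it might come from relating each component to a source location).

```python
import numpy as np

def fit_lda(X, y):
    """Two-class LDA: w = Sigma^-1 (mu1 - mu0), boundary at the midpoint of the class means."""
    mu0, mu1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - mu0, X[y == 1] - mu1])
    sigma = centered.T @ centered / (len(X) - 2)
    w = np.linalg.solve(sigma, mu1 - mu0)
    return w, -w @ (mu0 + mu1) / 2

rng = np.random.default_rng(3)
n_trials, n_comp = 300, 6
y = np.repeat([0, 1], n_trials // 2)
S = rng.normal(size=(n_trials, n_comp))     # synthetic ICA component activations
S[:, 0] += 0.8 * y                          # component 0: task-related brain source
S[:, 3] += 3.0 * y                          # component 3: artifact (e.g. blinks) confounded with the class

is_brain = np.array([True, True, True, False, True, True])   # hypothetical component labels

v_full, _ = fit_lda(S, y)                   # unconstrained: leans heavily on the artifact
v_brain = np.zeros(n_comp)
v_sub, b_brain = fit_lda(S[:, is_brain], y)
v_brain[is_brain] = v_sub                   # constrained: non-brain components get weight 0

print("unconstrained:", np.round(v_full, 2))
print("brain-only:   ", np.round(v_brain, 2))
```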

Why might one choose to use wavelet decomposition instead of averages for feature extraction in EEG signals?

-Wavelet decomposition can be more suitable when the underlying ERP (Event-Related Potential) has specific forms like ripples. It allows for pattern matching with the time course of the signal, which can capture more nuanced features than simple averages.
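The Python sketch below uses the Haar wavelet, the simplest choice and an assumption here (the lecture does not name a wavelet family), to decompose a toy ripple-shaped template. Because the orthonormal Haar transform is invertible and preserves energy, no information is lost, and a pattern that matches the wavelet's shape is captured by very few coefficients.

```python
import numpy as np

def haar_dwt(x):
    """Multi-level orthonormal Haar transform of a length-2^J signal.
    Output order: [approximation, coarsest details, ..., finest details]."""
    approx = np.asarray(x, dtype=float)
    details = []
    while len(approx) > 1:
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2))   # local differences at this scale
        approx = (even + odd) / np.sqrt(2)          # local averages passed to the next scale
    return np.concatenate([approx] + details[::-1])

def haar_idwt(coeffs):
    """Inverse of haar_dwt."""
    coeffs = np.asarray(coeffs, dtype=float)
    approx, pos = coeffs[:1], 1
    while pos < len(coeffs):
        d = coeffs[pos:pos + len(approx)]
        pos += len(approx)
        out = np.empty(2 * len(approx))
        out[0::2] = (approx + d) / np.sqrt(2)
        out[1::2] = (approx - d) / np.sqrt(2)
        approx = out
    return approx

t = np.arange(64)
ripple = ((t >= 16) & (t < 24)).astype(float) - ((t >= 24) & (t < 32)).astype(float)
c = haar_dwt(ripple)
print(np.count_nonzero(np.abs(c) > 1e-9))                # 1: a single coefficient describes the ripple
print(np.allclose(haar_idwt(c), ripple))                 # True: perfect reconstruction
print(np.isclose(np.sum(c ** 2), np.sum(ripple ** 2)))   # True: energy preserved
```

A real ERP is smoother than this toy template and would spread over more coefficients, which is why the choice of wavelet family matters in practice.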

How does dimensionality reduction relate to the use of wavelet features in EEG signal classification?

-Dimensionality reduction matters when using wavelet features because the full wavelet transform is itself an invertible linear transformation, so using all coefficients would be essentially the same as using the raw data. By selecting a small number of wavelet coefficients that contain the relevant information, the model becomes more efficient and focused on the signal of interest.
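A minimal sketch of such a selection is shown below. It assumes an orthonormal Haar transform as the wavelet and a simple per-coefficient Fisher score (squared mean difference over summed class variances) as the selection criterion; both are illustrative choices, and the data, the ripple position, and k are hypothetical. Since the full transform only rotates the data, the reduction comes entirely from keeping the k best-scoring coefficients.

```python
import numpy as np

def haar_dwt(x):
    """Multi-level orthonormal Haar transform along the last axis (length 2^J)."""
    approx = np.asarray(x, dtype=float)
    details = []
    while approx.shape[-1] > 1:
        even, odd = approx[..., 0::2], approx[..., 1::2]
        details.append((even - odd) / np.sqrt(2))
        approx = (even + odd) / np.sqrt(2)
    return np.concatenate([approx] + details[::-1], axis=-1)

def select_coefficients(C, y, k):
    """Indices of the k wavelet coefficients with the largest Fisher score."""
    m0, m1 = C[y == 0].mean(axis=0), C[y == 1].mean(axis=0)
    v0, v1 = C[y == 0].var(axis=0), C[y == 1].var(axis=0)
    score = (m1 - m0) ** 2 / (v0 + v1 + 1e-12)
    return np.argsort(score)[::-1][:k]

rng = np.random.default_rng(4)
t = np.arange(64)
template = ((t >= 16) & (t < 24)).astype(float) - ((t >= 24) & (t < 32)).astype(float)  # toy ripple ERP
y = np.repeat([0, 1], 100)
epochs = rng.normal(size=(200, 64)) + 0.5 * y[:, None] * template   # class 1 contains the ripple

C = haar_dwt(epochs)                  # 64 coefficients per epoch: as informative as the raw samples
selected = select_coefficients(C, y, k=4)
print(sorted(selected))               # a handful of coefficients to feed into LDA
```

The selection should be done on training data only; choosing coefficients on the test set would bias the performance estimate.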

What is the significance of sparsity in the context of feature selection for EEG signal classification?

-Sparsity refers to the idea of using only a small number of non-zero coefficients in the model. This can improve the classifier's performance by focusing on the most informative features and reducing the impact of noise or irrelevant information.
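One common way to obtain sparsity is an L1 penalty on the weights. The Python sketch below uses L1-regularized logistic regression fitted by proximal gradient descent (ISTA), a swapped-in illustration rather than a specific method from the lecture, and shows the soft-thresholding step driving the weights of uninformative features exactly to zero. The penalty strength lam, the step size, and the synthetic data are assumptions.

```python
import numpy as np

def sparse_logreg(X, y, lam=0.1, step=0.5, n_iter=2000):
    """L1-regularized logistic regression via proximal gradient descent (ISTA)."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))       # predicted P(y = 1 | x)
        w = w - step * (X.T @ (p - y) / n)           # gradient step on the mean log-loss
        b = b - step * np.mean(p - y)                # the bias is not penalized
        # Soft-thresholding (proximal step of lam * ||w||_1): small weights become exactly 0
        w = np.sign(w) * np.maximum(np.abs(w) - step * lam, 0.0)
    return w, b

rng = np.random.default_rng(5)
n, d = 400, 30
y = np.repeat([0, 1], n // 2)
X = rng.normal(size=(n, d))
X[y == 1, :3] += 1.0                                 # only the first 3 features carry information

w, b = sparse_logreg(X, y)
print("non-zero weights at indices:", np.flatnonzero(w))
```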

Why is it important to consider the order of operations when extracting non-linear features from EEG data?

-The correct order is to first apply a spatial filter to isolate the source signal, then perform non-linear feature extraction, and finally apply a classifier. This sequence ensures that the non-linear properties of the source signal are captured accurately, rather than being computed on channel signals in which volume conduction has mixed many sources together.
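The order of operations can be made concrete with a small Python sketch. It assumes two synthetic oscillatory sources mixed into two channels by a hypothetical mixing matrix A (a crude model of volume conduction) and an idealized spatial filter W = A^-1; in practice W would be estimated, for example with ICA, and would not unmix perfectly. Log-variance, the non-linear feature here, recovers each source's power only when the spatial filter is applied first.

```python
import numpy as np

def log_power_features(epoch, W=None):
    """Log-variance of each signal in an epoch of shape (n_channels, n_samples).
    If a spatial filter W (one filter per row) is given, it is applied first."""
    signals = epoch if W is None else W @ epoch
    return np.log(np.var(signals, axis=1))

fs = 200                                                 # hypothetical sampling rate in Hz
t = np.arange(fs) / fs                                   # one second of data
sources = np.vstack([3.0 * np.sin(2 * np.pi * 10 * t),   # source 1: 10 Hz, amplitude 3
                     1.0 * np.cos(2 * np.pi * 22 * t)])  # source 2: 22 Hz, amplitude 1
A = np.array([[1.0, 0.8],
              [0.6, 1.0]])                               # hypothetical mixing: channels = A @ sources
channels = A @ sources
W = np.linalg.inv(A)                                     # idealized spatial filter (perfect unmixing)

print(log_power_features(channels))      # ≈ log([4.82, 2.12]): each channel blends both sources
print(log_power_features(channels, W))   # ≈ log([4.5, 0.5]): the power of each source on its own
```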

What is the area under the curve (AUC) and how is it used to evaluate classifier performance in imbalanced datasets?

-The AUC represents the area under the Receiver Operating Characteristic (ROC) curve, which plots the true positive rate against the false positive rate for different threshold settings. It provides a measure of a classifier's ability to distinguish between classes, especially in situations with imbalanced datasets or when different types of errors have different costs.
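Read as a ranking measure, the AUC equals the probability that a randomly chosen positive trial receives a higher classifier score than a randomly chosen negative one (the Mann-Whitney statistic). The short Python sketch below computes it that way, with ties counted as one half; the function name and example scores are illustrative. The second call shows why AUC suits imbalanced data: a constant classifier that always predicts the majority class reaches 95% accuracy on this 95/5 split, yet its AUC is only 0.5.

```python
import numpy as np

def auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative), ties counted as 1/2."""
    scores, labels = np.asarray(scores, dtype=float), np.asarray(labels)
    pos, neg = scores[labels == 1], scores[labels == 0]
    diff = pos[:, None] - neg[None, :]          # all positive-negative pairs
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size

print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))              # 0.75
print(auc(np.zeros(100), np.r_[np.zeros(95), np.ones(5)]))   # 0.5 for a constant classifier
```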

How can classifiers be optimized for imbalanced classes or when certain errors are more costly?

-Classifiers can be optimized by incorporating cost-sensitive learning, where the misclassification costs are taken into account during training. Additionally, using performance metrics like AUC or F-scores, which are more informative than simple misclassification rates, can help in such scenarios.
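As a simple complement to cost-sensitive training, misclassification costs can also be applied after training by choosing the decision threshold that minimizes total cost on held-out scores. This post-hoc thresholding is a swapped-in illustration, not the training-time approach described above; the function name, costs, and scores below are hypothetical.

```python
import numpy as np

def min_cost_threshold(scores, labels, cost_fp, cost_fn):
    """Threshold t (predict positive if score > t) that minimizes total misclassification cost."""
    scores, labels = np.asarray(scores, dtype=float), np.asarray(labels)
    candidates = np.concatenate([[-np.inf], np.sort(scores)])   # covers every possible split
    best_t, best_cost = None, np.inf
    for t in candidates:
        pred = scores > t
        cost = cost_fp * np.sum(pred & (labels == 0)) + cost_fn * np.sum(~pred & (labels == 1))
        if cost < best_cost:
            best_t, best_cost = t, cost
    return best_t, best_cost

scores = [0.1, 0.3, 0.35, 0.6, 0.8]     # hypothetical held-out classifier outputs
labels = [0, 1, 0, 0, 1]

t, cost = min_cost_threshold(scores, labels, cost_fp=1, cost_fn=1)
print(f"threshold {t}, cost {cost}")     # threshold 0.6, cost 1
t, cost = min_cost_threshold(scores, labels, cost_fp=1, cost_fn=10)
print(f"threshold {t}, cost {cost}")     # threshold 0.1, cost 2: misses now cost more, so it drops
```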

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

Perceptron Training

Lec-46: Principal Component Analysis (PCA) Explained | Machine Learning

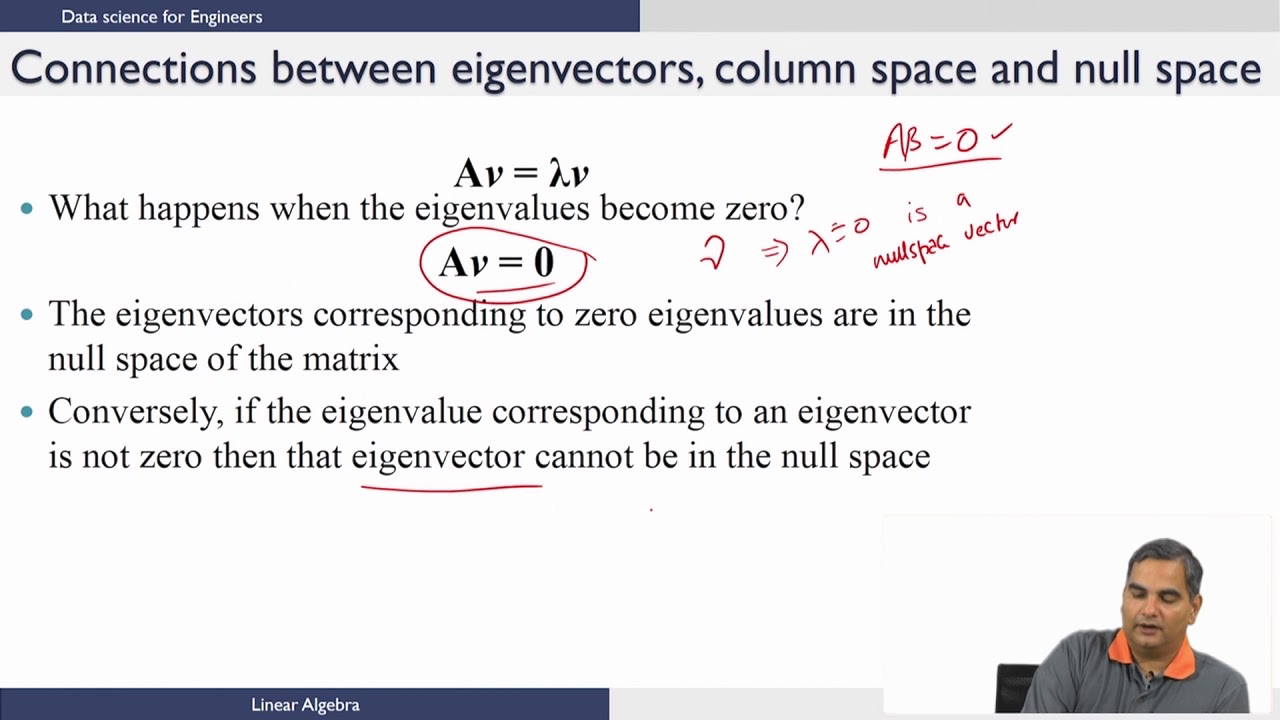

Linear Algebra - Distance,Hyperplanes and Halfspaces,Eigenvalues,Eigenvectors ( Continued 3 )

Week 3 Lecture 19 Linear Discriminant Analysis 3

What Linear Algebra Is — Topic 1 of Machine Learning Foundations

#10 Machine Learning Specialization [Course 1, Week 1, Lesson 3]

5.0 / 5 (0 votes)