Azure Data Factory Part 5 - Types of Data Pipeline Activities

Summary

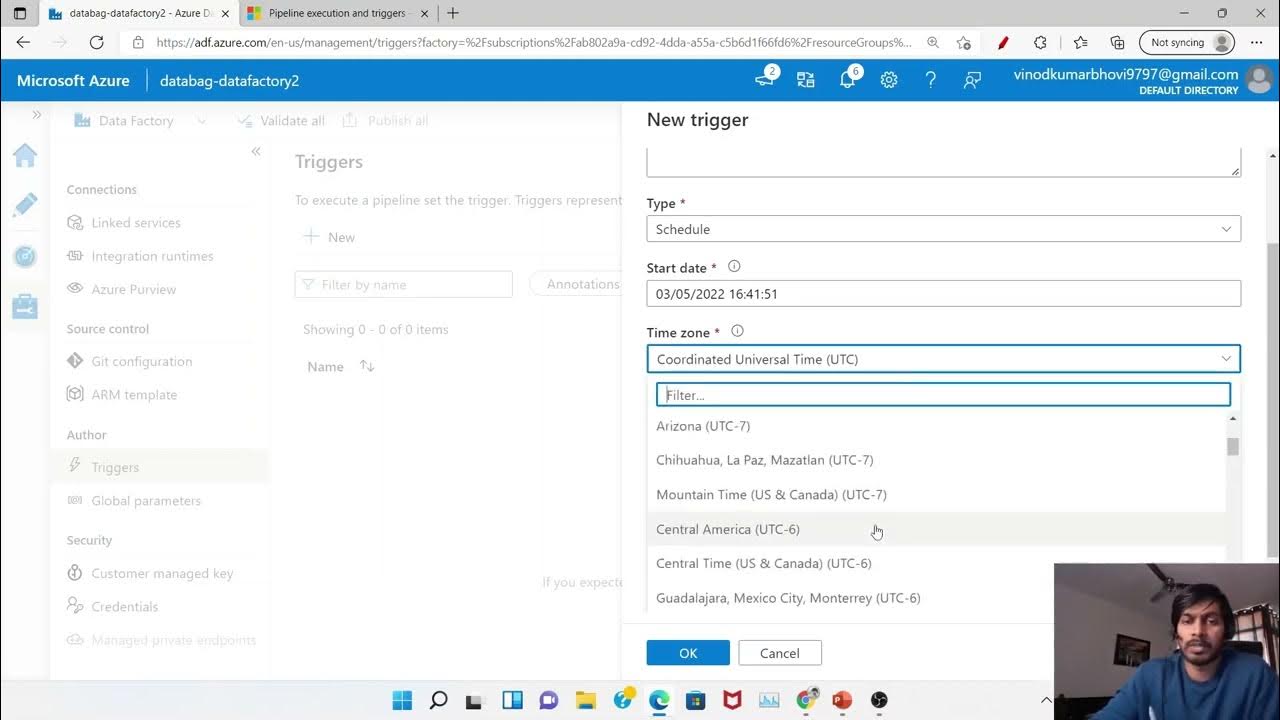

TLDR: This video from the Azure Data Factory series covers pipelines and activities and explains their roles in data processing. It distinguishes a pipeline, which is a logical grouping of activities, from an activity, which is a single processing step within a pipeline. The video outlines three main types of activities (data movement, data transformation, and control flow), gives examples of each, and points viewers to Microsoft's detailed documentation for further reading.

Takeaways

- 📚 The video is part of a series on Azure Data Factory, focusing on pipelines and activities, and their types.

- 🔍 Pipelines are logical groupings of activities that perform a unit of work in Azure Data Factory.

- 🔄 Activities represent individual processing steps within a pipeline, such as copying data or performing transformations.

- 🔑 Understanding different types of activities is crucial for determining which to use based on specific requirements.

- 🔗 The video revisits the concepts of pipelines and activities, emphasizing their roles in data movement and transformation.

- 🌐 The video mentions Azure Data Factory's integration runtimes, which are essential for data movement activities.

- 📈 Data movement activities in Azure Data Factory primarily involve the Copy Activity, which supports various data stores.

- 🔧 Data transformation activities include Data Flows, Azure Functions, Hive, Pig, and MapReduce for big data processing.

- 📝 Control flow activities are used for managing the flow of execution in a pipeline, such as conditionals and iterations.

- 📚 The video references Microsoft's detailed documentation on pipelines and activities in Azure Data Factory and Azure Synapse Analytics.

- 👍 The presenter encourages viewers to subscribe to the channel for more educational content, emphasizing continuous learning and sharing.

Q & A

What is the main focus of the fifth part of the Azure Data Factory video series?

-The main focus of the fifth part is to explore the concept of pipelines and activities, including the different types of activities available in Azure Data Factory.

What are the top-level concepts discussed in section one of the video series?

-In section one, the top-level concepts discussed include pipelines, activities, datasets, linked services, integration runtimes, and triggers.

What did the audience learn about in section three of the video series?

-In section three, the audience learned about creating their first pipeline and got an introduction to different types of activities.

What is a pipeline in Azure Data Factory?

-A pipeline in Azure Data Factory is a logical grouping of activities that perform a unit of work, such as copying data from one location to another or performing data transformations.
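
To make the "logical grouping of activities" idea concrete, here is a minimal sketch of a pipeline definition, written as a Python dictionary that mirrors the general shape of the JSON Azure Data Factory uses for pipelines. The pipeline, activity, and dataset names are hypothetical, and the source and sink types are only one possible combination; treat this as an illustration rather than a definition taken from the video.

```python
import json

# Hypothetical names throughout: "CopyBlobToSql", "SourceBlobDataset",
# "SinkSqlDataset", and "DailyCopyPipeline" are placeholders for illustration.
copy_activity = {
    "name": "CopyBlobToSql",
    "type": "Copy",  # a data movement activity
    "inputs": [{"referenceName": "SourceBlobDataset", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "SinkSqlDataset", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "BlobSource"},
        "sink": {"type": "SqlSink"},
    },
}

# The pipeline is just a named container holding a list of activities.
pipeline = {
    "name": "DailyCopyPipeline",
    "properties": {
        "activities": [copy_activity],
    },
}

print(json.dumps(pipeline, indent=2))
```

The pipeline itself does no work; each entry in `activities` is one processing step, and the pipeline groups those steps into a unit that can be run and monitored together.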

What is an activity in the context of Azure Data Factory?

-An activity in Azure Data Factory represents a processing step within a pipeline, such as a copy activity that moves data from one data store to another.

What is the difference between a dataset and a linked service in Azure Data Factory?

-A dataset in Azure Data Factory refers to a table or file, whereas a linked service defines the connection to a data source or a cloud service.
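
The relationship between the two can be sketched as follows: the linked service holds the connection details, and the dataset points at a specific file through a reference to that linked service. This is an assumed, simplified shape modeled on Data Factory's JSON definitions; the names, container, file, and the `resolve_linked_service` helper are hypothetical.

```python
# Hypothetical linked service: describes HOW to connect to a store.
linked_service = {
    "name": "MyBlobStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection string placeholder>"},
    },
}

# Hypothetical dataset: describes WHICH data inside that store to use.
dataset = {
    "name": "InputCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "MyBlobStorage",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "input",
                "fileName": "data.csv",
            }
        },
    },
}


def resolve_linked_service(ds: dict, services: list[dict]) -> dict:
    """Illustrative helper: find the linked service a dataset refers to."""
    wanted = ds["properties"]["linkedServiceName"]["referenceName"]
    for service in services:
        if service["name"] == wanted:
            return service
    raise KeyError(f"No linked service named {wanted!r}")


print(resolve_linked_service(dataset, [linked_service])["properties"]["type"])
```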

What are the three main types of activities in Azure Data Factory?

-The three main types of activities in Azure Data Factory are data movement activities, data transformation activities, and control flow activities.
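
As a rough illustration of this three-way split, the sketch below maps activity `type` strings to the category they fall under. The type strings are the identifiers I believe Data Factory uses in pipeline JSON for the activities named in this summary; the mapping is deliberately incomplete, and the `categorize` helper is hypothetical.

```python
# Partial mapping of activity type identifiers to the three categories
# described above. Only activities mentioned in this summary are listed.
ACTIVITY_CATEGORIES = {
    # Data movement
    "Copy": "data movement",
    # Data transformation
    "ExecuteDataFlow": "data transformation",
    "AzureFunctionActivity": "data transformation",
    "HDInsightHive": "data transformation",
    "HDInsightPig": "data transformation",
    "HDInsightMapReduce": "data transformation",
    # Control flow
    "ForEach": "control flow",
    "IfCondition": "control flow",
    "ExecutePipeline": "control flow",
    "Lookup": "control flow",
    "AppendVariable": "control flow",
    "Switch": "control flow",
    "Until": "control flow",
    "Validation": "control flow",
}


def categorize(activity_type: str) -> str:
    """Return the category for an activity type, or 'unknown' if unlisted."""
    return ACTIVITY_CATEGORIES.get(activity_type, "unknown")


for name in ("Copy", "HDInsightHive", "ForEach", "SomethingElse"):
    print(f"{name}: {categorize(name)}")
```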

What is a data movement activity in Azure Data Factory?

-A data movement activity, such as the copy activity, moves data from a source data store to a destination data store.

What is a data transformation activity in Azure Data Factory?

-Data transformation activities in Azure Data Factory, such as data flows, Azure Functions, Hive, Pig, and MapReduce, are used to transform data based on specific requirements.

What are control flow activities in Azure Data Factory?

-Control flow activities in Azure Data Factory include ForEach, If Condition, Execute Pipeline, Lookup, Append Variable, Switch, Until, and Validation activities, which control the order in which, and the conditions under which, the other activities in a pipeline run.
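
A control flow activity typically wraps other activities rather than touching data itself. The sketch below models a ForEach activity that runs a copy step once per item in a pipeline parameter. The shape follows Data Factory's JSON conventions as I understand them; the activity names, the `tableNames` parameter, and the `nested_activity_names` helper are hypothetical.

```python
# Hypothetical ForEach activity: iterates over a pipeline parameter and
# runs the inner Copy activity once per item.
for_each = {
    "name": "CopyEachTable",
    "type": "ForEach",
    "typeProperties": {
        "items": {
            "value": "@pipeline().parameters.tableNames",
            "type": "Expression",
        },
        "isSequential": False,  # allow iterations to run in parallel
        "activities": [
            {
                "name": "CopyOneTable",
                "type": "Copy",
                "typeProperties": {
                    "source": {"type": "BlobSource"},
                    "sink": {"type": "SqlSink"},
                },
            }
        ],
    },
}


def nested_activity_names(activity: dict) -> list[str]:
    """Illustrative helper: names of activities nested directly inside a container."""
    inner = activity.get("typeProperties", {}).get("activities", [])
    return [a["name"] for a in inner]


print(nested_activity_names(for_each))
```

The outer activity decides how often and in what order the work happens, while the inner Copy activity does the actual data movement.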

Where can one find detailed documentation on pipelines and activities in Azure Data Factory?

-One can find detailed documentation on pipelines and activities in Azure Data Factory on the Microsoft documentation website, specifically in the section about Azure Data Factory and Azure Synapse Analytics.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

#16. Different Activity modes - Success , Failure, Completion, Skipped |AzureDataFactory Tutorial |

Azure Data Factory Part 6 - Triggers and Types of Triggers

Azure Data Factory Part 4 - Integration Run Time and Different types of IR

DP-203: 11 - Dynamic Azure Data Factory

DP-900 Exam EP 03: Data Job Roles and Responsibilities

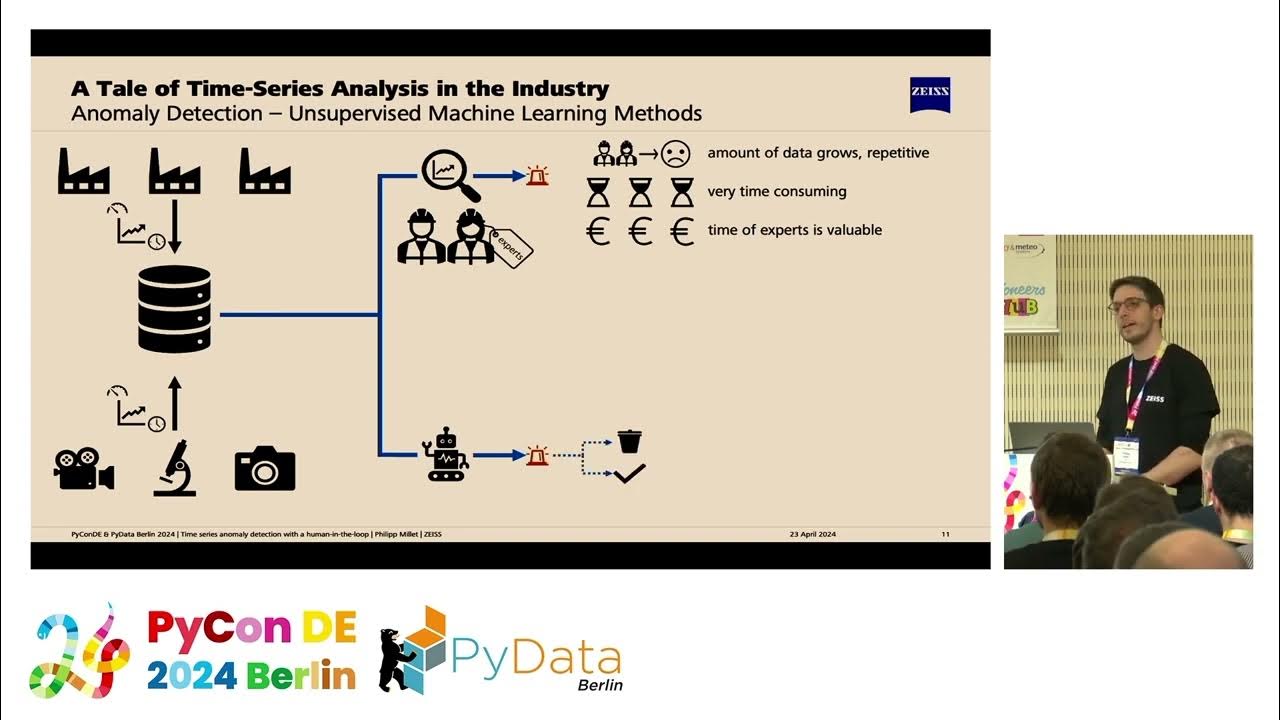

Time series anomaly detection with a human-in-the-loop [PyCon DE & PyData Berlin 2024]

5.0 / 5 (0 votes)