Azure Data Factory Part 6 - Triggers and Types of Triggers

Summary

TLDR: This video from the Azure Data Factory series introduces triggers, which automate pipeline execution. It explains three types: scheduled, tumbling window, and event-based triggers. The presenter demonstrates how to create and configure each type in Azure Data Factory, emphasizing their role in batch and real-time processing. The tutorial also covers manual triggering ('Trigger now') and monitoring trigger runs, providing practical insights for automating data workflows.

Takeaways

- 🔧 Triggers in Azure Data Factory are mechanisms to execute a pipeline run automatically.

- 📅 There are three main types of triggers: scheduled, tumbling window, and event-based triggers.

- ⏰ Scheduled triggers run pipelines based on a fixed time schedule, like every day at 9 a.m.

- 🔄 Tumbling window triggers fire at a periodic interval over fixed-size, non-overlapping time windows; in the video they differ from scheduled triggers in granularity, offering only minute and hour recurrences.

- 📂 Event-based triggers initiate pipeline execution in response to specific events, such as the creation of a file in a storage account.

- 🛠️ Creating a trigger involves setting a name, description, start time, time zone, and recurrence pattern.

- 🔄 Recurrence settings for scheduled triggers allow execution frequencies from minutes to months.

- 🔗 Tumbling window triggers can depend on other tumbling window triggers, so a window runs only after the windows it depends on have succeeded.

- 🚫 Tumbling window triggers offer advanced options to set a delay, limit concurrency, and define a retry policy for failed pipeline runs.

- 📝 Annotations can be added to triggers for additional information or notes.

- 📊 Monitoring is covered through a dashboard that shows statistics and status for each trigger type.

- 🔄 The video script demonstrates the creation and management of triggers within Azure Data Factory, emphasizing automation and customization.

Q & A

What is the primary purpose of triggers in Azure Data Factory?

-Triggers in Azure Data Factory are used to automate the execution of a pipeline run. They determine when a pipeline execution should be initiated, allowing for the automation of batch processing and real-time processing tasks.

How many types of triggers are discussed in the video?

-The video discusses three main types of triggers: scheduled trigger, tumbling window trigger, and event-based trigger.

What is a scheduled trigger and how does it work?

-A scheduled trigger invokes a pipeline on a wall-clock schedule. It can be set to run a pipeline at specific times, such as every day at 9 a.m., according to a predefined schedule.
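A wall-clock schedule means the trigger fires at set calendar times in a chosen time zone. As an illustration only, the Python sketch below lists the next few firing times of a daily 9 a.m. schedule; `next_daily_runs` is a hypothetical helper, not part of any Azure SDK, and it does not reproduce how Data Factory's scheduler works internally.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def next_daily_runs(after: datetime, hour: int, minute: int, tz: tzinfo, count: int) -> list:
    """Return the next `count` times at hour:minute local time in `tz`, strictly after `after`.

    Hypothetical helper for illustration; `after` must be timezone-aware.
    """
    local_after = after.astimezone(tz)
    # Today's slot in local wall-clock time.
    candidate = local_after.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= local_after:
        candidate += timedelta(days=1)  # today's slot has passed; start tomorrow
    runs = []
    for _ in range(count):
        runs.append(candidate)
        # Same local time on the next calendar day.
        candidate += timedelta(days=1)
    return runs


# Next three 9 a.m. (UTC) runs from now.
print(next_daily_runs(datetime.now(timezone.utc), 9, 0, timezone.utc, 3))
```

Passing a `zoneinfo.ZoneInfo(...)` object for a region that observes daylight saving keeps each result at 9:00 local time, because Python's aware-datetime arithmetic adds days on the wall clock.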

Can you explain the concept of a tumbling window trigger?

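-A tumbling window trigger fires at a periodic interval from a specified start time, splitting time into a series of fixed-size, non-overlapping, contiguous windows; each window is tracked individually, so it can be retried or backfilled. As presented in the video, it also differs from a scheduled trigger in granularity: its recurrence can be set only in minutes or hours, without the month, day, and week options.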
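A tumbling window is easiest to picture as a run of back-to-back intervals cut from the trigger's start time. The Python sketch below enumerates those windows for a minute- or hour-based frequency. The helper name `tumbling_windows` is hypothetical; Data Factory computes and tracks windows itself and exposes each one to the pipeline as `trigger().outputs.windowStartTime` and `trigger().outputs.windowEndTime`.

```python
from datetime import datetime, timedelta, timezone

# The video shows only minute and hour frequencies for tumbling windows.
FREQUENCY_UNITS = {"Minute": timedelta(minutes=1), "Hour": timedelta(hours=1)}


def tumbling_windows(start, end, frequency, interval):
    """Return (window_start, window_end) pairs covering [start, end) with no gaps or overlaps.

    Only whole windows that have closed by `end` are returned, since a window
    is processed after it ends.
    """
    size = FREQUENCY_UNITS[frequency] * interval
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size  # the next window begins exactly where this one ends
    return windows


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
for window_start, window_end in tumbling_windows(start, start + timedelta(hours=1), "Minute", 15):
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Because the windows are derived from the start time, a start time in the past yields a backlog of past windows to process, which is how a backfill arises.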

What is an event-based trigger and how does it differ from other triggers?

-An event-based trigger responds to a specific event, such as the creation or deletion of a file in a storage account. It differs from other triggers as it does not rely on a time-based schedule but rather on the occurrence of an event.
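The key difference is that nothing fires until an event arrives. The Python sketch below models that: each incoming storage event is checked against the trigger's event types and its path prefix and suffix filters, and only a matching event would start a run. `BlobEvent` and `should_fire` are hypothetical stand-ins; in Azure, storage events reach Data Factory through Azure Event Grid.

```python
from dataclasses import dataclass


@dataclass
class BlobEvent:
    """Hypothetical, simplified storage event."""
    event_type: str  # e.g. "Microsoft.Storage.BlobCreated" or "Microsoft.Storage.BlobDeleted"
    container: str
    blob_name: str   # path inside the container, e.g. "sales/2024/jan.csv"


def should_fire(event, events, begins_with="", ends_with=""):
    """Return True if `event` matches the trigger's event types and path filters."""
    if event.event_type not in events:
        return False
    # Filters compare against "/<container>/blobs/<blob path>" with plain prefix/suffix checks.
    path = f"/{event.container}/blobs/{event.blob_name}"
    return path.startswith(begins_with) and path.endswith(ends_with)


# A new CSV landing in the "sales" folder fires; deleting the same file does not.
created = BlobEvent("Microsoft.Storage.BlobCreated", "landing", "sales/2024/jan.csv")
deleted = BlobEvent("Microsoft.Storage.BlobDeleted", "landing", "sales/2024/jan.csv")
for e in (created, deleted):
    print(e.event_type, should_fire(e, ["Microsoft.Storage.BlobCreated"], "/landing/blobs/sales/", ".csv"))
```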

What is the difference between 'trigger now' and creating a new trigger in Azure Data Factory?

-'Trigger now' is a manual trigger that initiates a pipeline run immediately, whereas creating a new trigger sets up an automated process that will run the pipeline at specified intervals or in response to an event.

How can you set up a pipeline to run every 15 minutes in Azure Data Factory?

-You can set up a pipeline to run every 15 minutes by creating a scheduled trigger, setting the recurrence to every 15 minutes, and specifying the appropriate time zone.
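Behind the authoring UI, a trigger is saved as a JSON definition. The Python sketch below builds a 15-minute schedule trigger as a plain dictionary and prints it as JSON. The property names follow the `ScheduleTrigger` JSON layout in the Data Factory documentation; the trigger name, pipeline name, and start time are placeholders, and `schedule_trigger` is a hypothetical helper.

```python
import json


def schedule_trigger(name, pipeline_name, interval_minutes, start_time, time_zone="UTC"):
    """Build a ScheduleTrigger definition that runs `pipeline_name` every `interval_minutes` minutes."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Minute",
                    "interval": interval_minutes,
                    "startTime": start_time,
                    "timeZone": time_zone,
                }
            },
            # A schedule trigger can start several pipelines, hence a list.
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    },
                    "parameters": {},
                }
            ],
        },
    }


definition = schedule_trigger("Every15Minutes", "CopySalesPipeline", 15, "2024-01-01T00:00:00Z")
print(json.dumps(definition, indent=2))
```

A daily 9 a.m. run would instead use a `Day` frequency with an interval of 1 and a `schedule` block inside `recurrence` listing `hours: [9]` and `minutes: [0]`.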

What is the role of dependencies in tumbling window triggers?

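-Dependencies let a tumbling window trigger depend on other tumbling window triggers, or on its own earlier windows. A window's pipeline run starts only after the corresponding windows of the trigger it depends on have succeeded, which keeps pipeline executions in the right order. Offset and size settings can shift or resize the period being checked.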
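The dependency rule can be sketched as a coverage check: the period [window start + offset, window start + offset + size) must be fully covered by upstream windows that have succeeded. The Python below models that check, loosely mirroring the `offset` and `size` settings of a tumbling window dependency; the function `dependency_satisfied` and its data structures are hypothetical, and Data Factory does this bookkeeping itself.

```python
from datetime import datetime, timedelta


def dependency_satisfied(window_start, window_size, upstream_runs, upstream_size,
                         offset=timedelta(0), size=None):
    """Hypothetical check: may the dependent window starting at `window_start` run yet?

    `upstream_runs` maps each upstream window start to a status string such as
    "Succeeded" or "Failed". `size` defaults to the dependent window's own size.
    """
    if size is None:
        size = window_size
    period_start = window_start + offset
    period_end = period_start + size
    covered_until = period_start
    # Walk upstream windows in time order, keeping only those that overlap the period.
    for start in sorted(upstream_runs):
        end = start + upstream_size
        if end <= period_start or start >= period_end:
            continue
        if start > covered_until or upstream_runs[start] != "Succeeded":
            return False  # a gap in coverage, or a window that has not succeeded
        covered_until = max(covered_until, end)
    # Every part of the period must be covered by a succeeded upstream window.
    return covered_until >= period_end


# An hourly window that depends on four 15-minute upstream windows.
t0 = datetime(2024, 1, 1)
quarter = timedelta(minutes=15)
upstream = {t0 + i * quarter: "Succeeded" for i in range(4)}
print(dependency_satisfied(t0, timedelta(hours=1), upstream, quarter))  # prints True
```

Running the same check against the trigger's own runs with a negative offset models a self-dependency, where each window waits for the previous one.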

What options are available for configuring an event-based trigger in Azure Data Factory?

-For an event-based (storage event) trigger, you configure the Azure subscription, storage account, and container name; optional 'Blob path begins with' and 'Blob path ends with' filters, which are plain prefix and suffix matches rather than regular expressions; and the event type, blob created or blob deleted, that starts the pipeline run.
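The settings above map onto a storage event trigger's JSON definition. The Python sketch below assembles one as a dictionary. The property names (`scope`, `events`, `blobPathBeginsWith`, `blobPathEndsWith`, `ignoreEmptyBlobs`) follow the `BlobEventsTrigger` format described in the Data Factory documentation, while the subscription ID, resource group, account, container, folder, and pipeline names are placeholders and `blob_events_trigger` is a hypothetical helper.

```python
import json


def blob_events_trigger(name, pipeline_name, subscription_id, resource_group, storage_account,
                        container, folder, suffix, events=("Microsoft.Storage.BlobCreated",)):
    """Build a BlobEventsTrigger definition; all identifiers are caller-supplied placeholders."""
    # The subscription and storage account together form the trigger's scope.
    scope = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{storage_account}"
    )
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                "scope": scope,
                "events": list(events),
                # Prefix filter in the "/<container>/blobs/<folder>/" form, plus a suffix filter.
                "blobPathBeginsWith": f"/{container}/blobs/{folder}/",
                "blobPathEndsWith": suffix,
                "ignoreEmptyBlobs": True,
            },
            "pipelines": [
                {"pipelineReference": {"type": "PipelineReference", "referenceName": pipeline_name}}
            ],
        },
    }


definition = blob_events_trigger("OnNewSalesCsv", "CopySalesPipeline", "<subscription-id>",
                                 "rg-demo", "stdemo", "landing", "sales", ".csv")
print(json.dumps(definition, indent=2))
```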

How can you monitor the performance and status of triggers in Azure Data Factory?

-You can monitor the performance and status of triggers in Azure Data Factory using the monitoring dashboard, which provides statistics for different types of triggers, including scheduled, tumbling window, storage events, and custom events.

What are some advanced options available for configuring triggers in Azure Data Factory?

-Tumbling window triggers offer several advanced options: a delay before each window's pipeline run starts, a maximum concurrency that limits how many windows' pipeline runs execute simultaneously, and a retry policy that sets how many times a failed pipeline run is retried and the interval between retries.
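In a tumbling window trigger's JSON these options appear as `delay`, `maxConcurrency`, and `retryPolicy` (with `count` and `intervalInSeconds`). The Python sketch below shows what a retry policy means for a single window: one attempt, then up to `count` retries with a pause between them. The values in `advanced_options` are illustrative placeholders, and `run_window` with its injectable `sleep` is hypothetical; Data Factory applies the policy itself.

```python
import time

# Advanced options as they might appear in a tumbling window trigger's typeProperties.
advanced_options = {
    "delay": "00:05:00",    # start each window's run 5 minutes after the window ends
    "maxConcurrency": 2,    # at most 2 windows' pipeline runs at the same time
    "retryPolicy": {"count": 3, "intervalInSeconds": 60},
}


def run_window(run_pipeline, retry_count, retry_interval_seconds, sleep=time.sleep):
    """Run one window's pipeline, retrying up to `retry_count` times after failures.

    `run_pipeline` is any callable returning True on success. Returns (succeeded, attempts).
    """
    attempts = 0
    while True:
        attempts += 1
        if run_pipeline():
            return True, attempts
        if attempts > retry_count:
            return False, attempts  # retries exhausted; the run is marked as failed
        sleep(retry_interval_seconds)


policy = advanced_options["retryPolicy"]
outcomes = iter([False, True])  # hypothetical: fails once, then succeeds
print(run_window(lambda: next(outcomes), policy["count"], policy["intervalInSeconds"],
                 sleep=lambda s: None))  # prints (True, 2)
```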

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

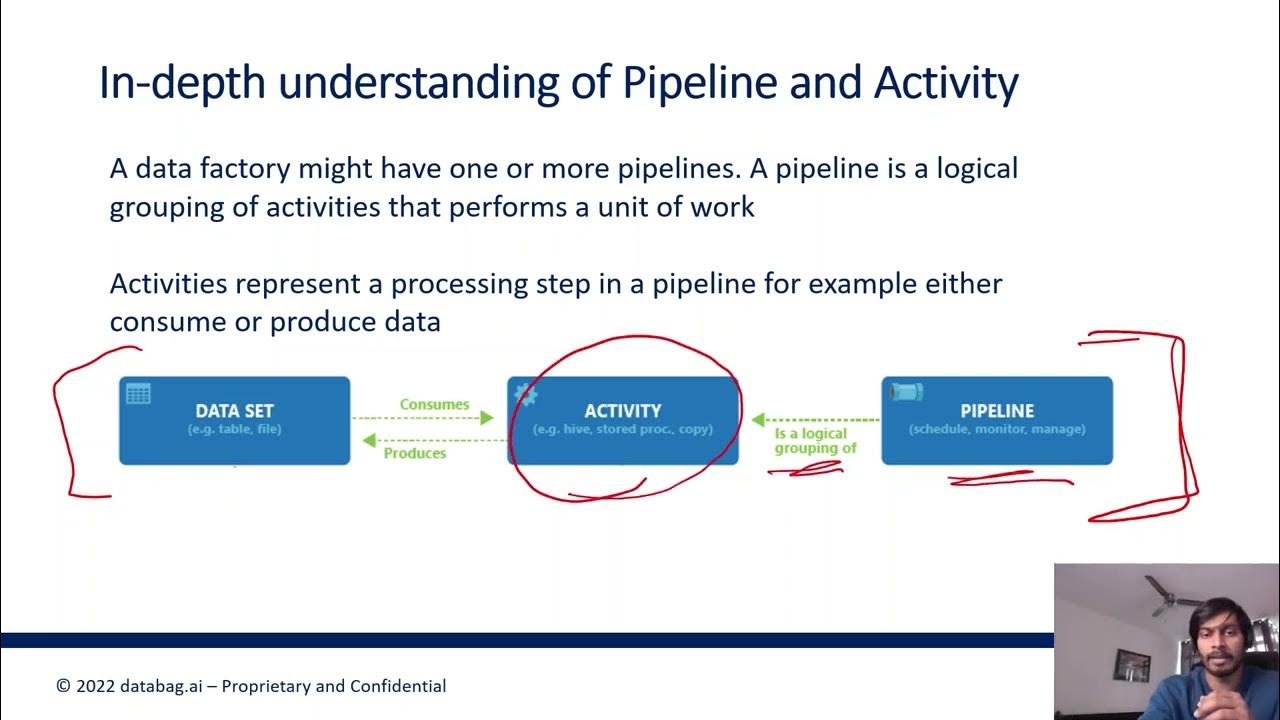

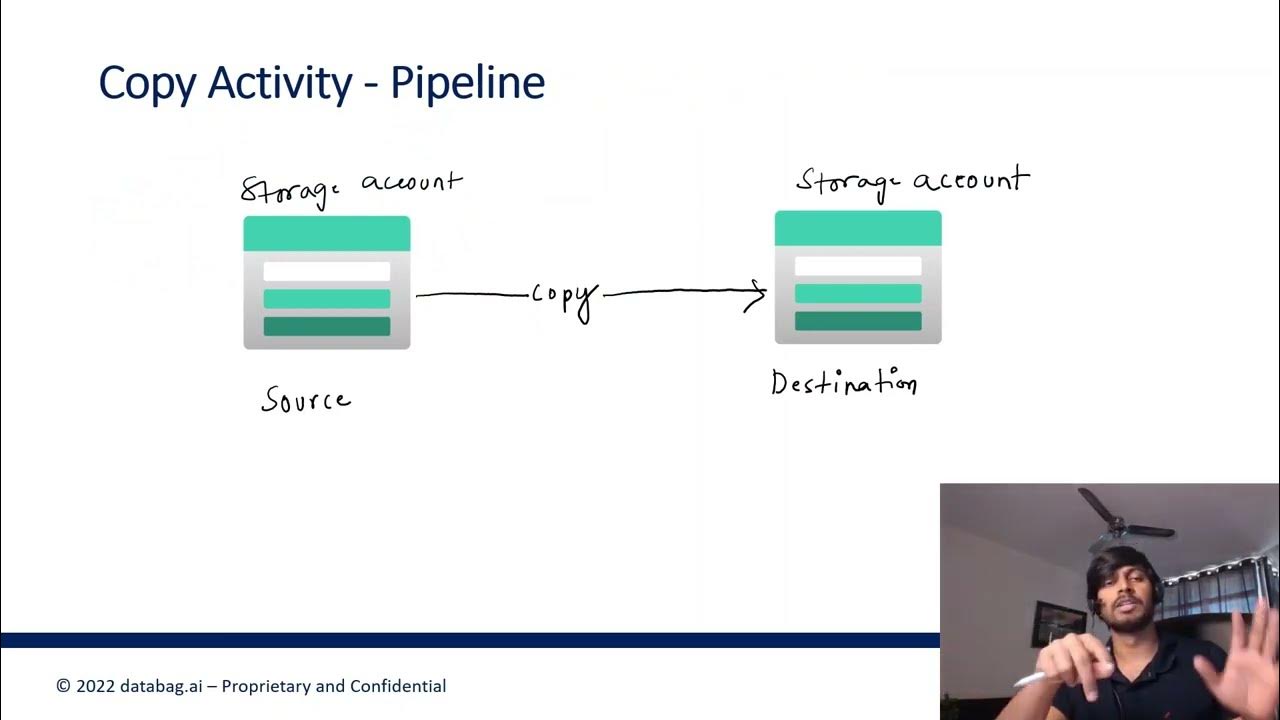

Azure Data Factory Part 5 - Types of Data Pipeline Activities

#16. Different Activity modes - Success , Failure, Completion, Skipped |AzureDataFactory Tutorial |

Azure Data Factory Part 3 - Creating first ADF Pipeline

How to refresh Power BI Dataset from a ADF pipeline? #powerbi #azure #adf #biconsultingpro

Part 8 - Data Loading (Azure Synapse Analytics) | End to End Azure Data Engineering Project

Big Data Engineer Mock Interview | Real-time Project Questions | Amount of Data | Cluster Size

5.0 / 5 (0 votes)