AWS DevDays 2020 - Deep Dive on Amazon SageMaker Debugger & Amazon SageMaker Model Monitor

Summary

TLDRThis session delves into Amazon SageMaker's advanced capabilities, focusing on SageMaker Debugger for monitoring model training and Model Monitor for detecting data and prediction quality issues post-deployment. The speaker demonstrates using these tools with a classification model, highlighting features like data capture, real-time analytics, and automated rule-based checks to ensure model reliability and efficiency. Additionally, cost optimization strategies for SageMaker are discussed, including spot instance training and model tuning for efficient resource utilization.

Takeaways

- 📘 The session focuses on Amazon SageMaker's capabilities, specifically the Debugger and Model Monitor features, which assist in inspecting model training and identifying data quality issues post-deployment.

- 🛠️ Amazon SageMaker Debugger allows users to save model states, such as tensors representing the model's parameters, gradients, and weights, periodically during training to S3 for later inspection.

- 🔍 Debugger's rules can be configured to monitor for undesirable conditions during training, such as class imbalance or vanishing gradients, providing real-time feedback and potentially stopping the training job if issues arise.

- 📊 SageMaker Debugger can visualize feature importance, helping to understand which dataset features contribute most to the model's predictions, enhancing model explainability.

- 🔄 The session demonstrates using spot instances for training models on SageMaker to save costs, with significant savings shown in the example.

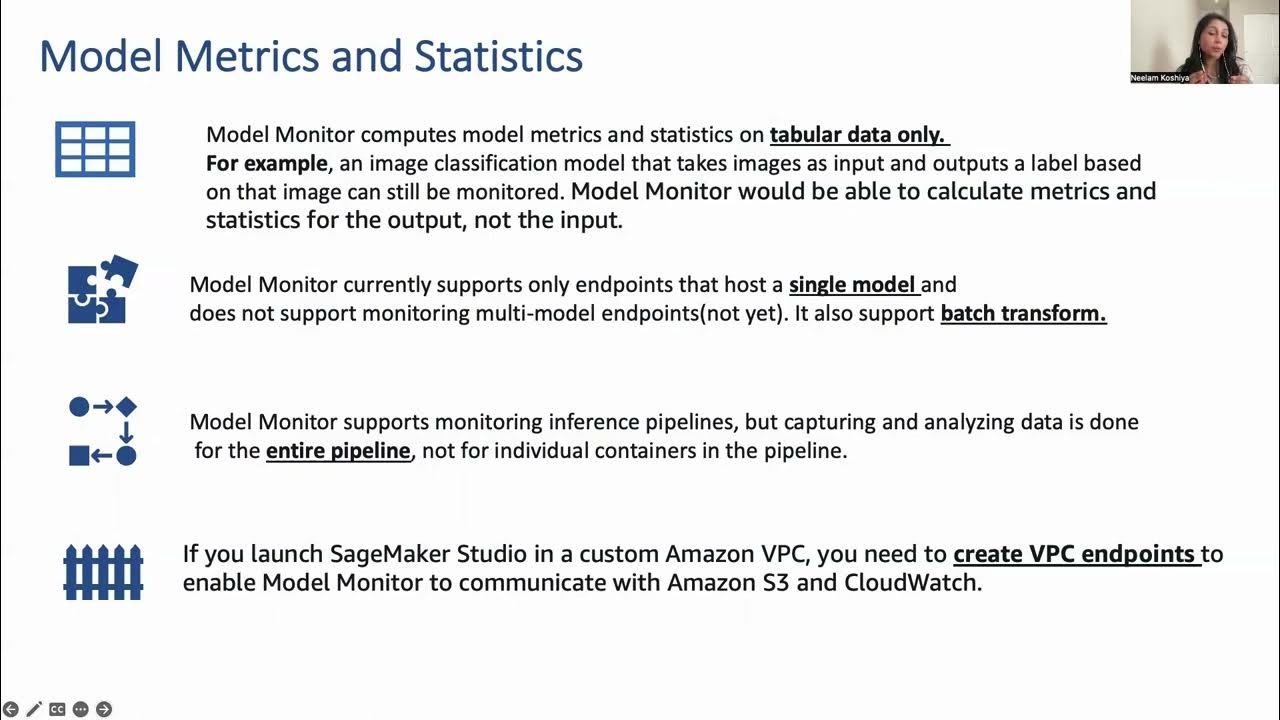

- 📈 Model Monitor captures data sent to a model in production, including requests and predictions, storing this information in S3 for analysis and monitoring data quality over time.

- 📉 Model Monitor can detect data quality issues such as missing features, mistyped features, or drifting features, which may degrade prediction quality if not addressed.

- 📝 The speaker emphasizes the importance of cost optimization in using SageMaker, covering topics like using managed services, spot training, and elastic inference to reduce expenses.

- 📚 The session mentions various resources for learning more about SageMaker, including documentation, AWS blog posts, YouTube videos, and podcasts, encouraging attendees to explore these for further insights.

- 🚀 The speaker highlights the productivity improvements offered by SageMaker Debugger and Model Monitor, suggesting they can save users significant time and frustration in model development and monitoring.

- 🗓️ The session ends with an invitation for attendees to ask questions, indicating the speaker's availability for further discussion and assistance on Twitter.

Q & A

What is the main focus of the session on Amazon SageMaker?

-The session focuses on Amazon SageMaker Debugger and Model Monitor, explaining how they help in inspecting model training and finding data quality and prediction quality issues once models are deployed.

Where can the slides and recording of the session be found?

-The slides can be found in the handout tab on the control panel, and the recording will be sent in a follow-up email after the event.

What is the dataset used in the notebook for training the model?

-The dataset used is a direct marketing dataset, which is a supervised learning problem classifying customers into two classes based on whether they accept an offer or not.

What is the purpose of using spot instances in SageMaker?

-Spot instances are used to save money on training costs. They allow users to specify a maximum training time and a total time, including waiting for spot instances, to control how long they are willing to wait for spot instances.

How does SageMaker Debugger save model information during training?

-SageMaker Debugger saves tensors, which are high-dimensional arrays representing the state of the model, periodically during the training job. This model state is saved to S3.

What is the purpose of defining rules in SageMaker Debugger?

-Rules in SageMaker Debugger are used to check for unwanted conditions during the training job. They can be built-in or custom Python code to inspect tensors and ensure the training job is not suffering from issues like class imbalance, vanishing gradients, or exploding tensors.

How can feature importance be visualized using SageMaker Debugger?

-Feature importance can be visualized by accessing the specific tensor by name, getting all the steps, and then plotting the values for each step using a tool like matplotlib.

What is the role of Amazon SageMaker Model Monitor in the session?

-Amazon SageMaker Model Monitor helps in capturing data sent to the model in production, saving incoming and outgoing data (request and response) to S3, and running analytics to detect data quality and prediction quality issues.

How can baseline statistics be generated for Model Monitor?

-Baseline statistics are generated by uploading the training set to S3 and creating a baseline using SageMaker Processing. This process computes statistics on the training set, such as feature types, ranges, distributions, and constraints.

What is the significance of creating a monitoring schedule in Model Monitor?

-A monitoring schedule in Model Monitor is used to periodically analyze the captured data and compare it with the baseline statistics. It helps in detecting discrepancies and alerting to problems like missing features, mistyped features, or drifting features.

What are some cost optimization strategies mentioned in the session?

-Cost optimization strategies include using managed services like EMR or Glue, stopping and sizing notebook instances appropriately, using local mode, managing spot training, optimizing models with Model Tuning Autopilot, using Elastic Inference, and leveraging inference with custom chips for high-throughput prediction.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级5.0 / 5 (0 votes)