AWS re:Invent 2020: Detect machine learning (ML) model drift in production

Summary

TLDR: This AWS re:Invent session, led by Principal Solutions Architect Sireesha Muppala, covers how to detect machine learning model drift in production using Amazon SageMaker. It explains why model monitoring matters, introduces SageMaker's Model Monitoring capability, and outlines the end-to-end deployment and monitoring process. It also discusses strategies for addressing model drift, including retraining, and walks through a functional notebook showing the code and APIs behind SageMaker's monitoring steps, so that models remain accurate and reliable over time.

Takeaways

- 🌟 Amazon SageMaker is a fully managed service that streamlines the machine learning process, including data collection, model training, deployment, and monitoring.

- 🔍 Model Monitoring in SageMaker is crucial for detecting model drift in production, ensuring models remain accurate and reliable over time.

- 🛠️ SageMaker's Model Monitor capability automates the monitoring of machine learning models in production, detecting errors and triggering corrective actions.

- 📈 Model drift, both in data and performance, can significantly impact prediction quality, making continuous monitoring essential for maintaining model accuracy.

- 🔄 SageMaker lets you set up alarms in Amazon CloudWatch based on model monitoring metrics, enabling proactive management of model performance.

- 📊 Data drift and accuracy drift metrics are persisted in S3 buckets and can be visualized in SageMaker Studio, providing insights into model behavior.

- 🚀 The end-to-end flow for deploying and monitoring models in production includes deploying the trained model, capturing inference requests, baselining, and reacting to drift detection.

- 📚 The demo in the session showcased how to use SageMaker to host trained models, capture inference data, generate baseline statistics, and monitor for data quality drift.

- 🔧 SageMaker processing jobs can be used to automate the analysis of captured inference data against baseline constraints, identifying data drift violations.

- 💡 CloudWatch alerts can be configured based on threshold values for drift metrics, triggering actions such as retraining when model performance degrades.

- 👨‍🏫 The session emphasized the importance of understanding and managing model drift to ensure business outcomes are not negatively impacted by outdated or degraded models.

Q & A

What is the main focus of the re:Invent session presented by Sireesha Muppala?

- The session focuses on detecting machine learning model drift in production using Amazon SageMaker, discussing the importance of monitoring models, introducing model monitoring capabilities, and demonstrating an end-to-end model deployment and monitoring flow.

What is Amazon SageMaker and what does it offer?

- Amazon SageMaker is a fully managed service that simplifies each step of the machine learning process by providing decoupled modules for data collection, model training and tuning, model deployment, and model monitoring in production.

Why is it important to monitor machine learning models in production?

- Monitoring is crucial because real-world data may differ from the training data, leading to data drift or model drift over time. Drift can degrade model performance and hurt business outcomes, so continuous monitoring is essential for identifying when to retrain models.

What is model drift and how can it affect model performance?

- Model drift refers to the gradual misalignment of a model with real-world data as it ages, due to changes in data distributions. This can significantly impact prediction quality and model accuracy, necessitating proactive monitoring and corrective actions.

How does Amazon SageMaker's Model Monitoring capability help in detecting model drift?

- Amazon SageMaker Model Monitor continuously monitors machine learning models in production, detects errors, and triggers alerts so that remedial action can be taken. It analyzes captured data against built-in or customer-provided rules to identify rule violations and emits metrics to Amazon CloudWatch for further action.

What are the steps involved in the end-to-end model deployment and monitoring flow?

- The flow starts with deploying the trained model with data capture enabled, then capturing real-time inference requests and responses, generating baseline statistics and constraints, creating a data drift monitoring job, and finally taking corrective action once drift is detected.

How does SageMaker handle data capture for model monitoring?

- When deploying a SageMaker endpoint, data capture can be enabled so that request and response data are written to a specified S3 location. The captured data is then compared against the baseline to identify data drift.
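As a rough illustration of what enabling data capture looks like in code, the sketch below builds a capture configuration in the dictionary shape that the boto3 SageMaker client's `create_endpoint_config` call accepts as its `DataCaptureConfig` argument. The helper name `build_data_capture_config` and the bucket path are hypothetical, and nothing here calls AWS; it only assembles and validates the settings.

```python
def build_data_capture_config(s3_uri, sampling_percentage=100):
    """Assemble a DataCaptureConfig dict (hypothetical helper, no AWS calls)."""
    if not s3_uri.startswith("s3://"):
        raise ValueError("capture destination must be an s3:// URI")
    if not 0 <= sampling_percentage <= 100:
        raise ValueError("sampling percentage must be between 0 and 100")
    return {
        "EnableCapture": True,
        # Share of requests to capture; 100 captures every request.
        "InitialSamplingPercentage": sampling_percentage,
        "DestinationS3Uri": s3_uri,
        # Capture both the request payload and the model's response.
        "CaptureOptions": [{"CaptureMode": "Input"}, {"CaptureMode": "Output"}],
    }


# Hypothetical bucket and prefix, used only for illustration.
capture_config = build_data_capture_config("s3://example-bucket/datacapture")
```

The resulting dictionary would be passed alongside the production variants when the endpoint configuration is created; lowering the sampling percentage trades monitoring coverage for storage cost.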

What is the purpose of generating baseline statistics and constraints in model monitoring?

- Baseline statistics and constraints establish a reference point for monitoring. They consist of statistics computed from the training dataset and thresholds (constraints) derived from them, against which real-world data is compared to detect deviations.
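To make the idea of a baseline concrete, here is a minimal, self-contained sketch. It is a simplified stand-in and not how SageMaker Model Monitor computes its statistics: it assumes numeric features, uses a mean plus or minus three standard deviations band as the constraint, and the violation names are invented for illustration.

```python
import statistics


def build_baseline(rows, tolerance=3.0):
    """Compute per-feature statistics and a simple allowed range from training rows."""
    baseline = {}
    for name in rows[0].keys():
        values = [r[name] for r in rows if r[name] is not None]
        mean = statistics.mean(values)
        std = statistics.pstdev(values)
        baseline[name] = {
            "mean": mean,
            "std": std,
            "completeness": len(values) / len(rows),  # fraction of non-missing values
            "lower": mean - tolerance * std,
            "upper": mean + tolerance * std,
        }
    return baseline


def find_violations(baseline, rows):
    """Compare live rows to the baseline and list (feature, violation) pairs."""
    violations = []
    for name, stats in baseline.items():
        present = [r.get(name) for r in rows if r.get(name) is not None]
        if len(present) / len(rows) < stats["completeness"]:
            violations.append((name, "completeness_check"))
        if present and not stats["lower"] <= statistics.mean(present) <= stats["upper"]:
            violations.append((name, "mean_out_of_range"))
    return violations


# Made-up training and live data, for illustration only.
training = [
    {"age": 30.0, "balance": 100.0},
    {"age": 40.0, "balance": 120.0},
    {"age": 50.0, "balance": 110.0},
    {"age": 35.0, "balance": 130.0},
]
live = [
    {"age": 72.0, "balance": 115.0},
    {"age": 68.0, "balance": None},
    {"age": 75.0, "balance": 118.0},
]

baseline = build_baseline(training)
violations = find_violations(baseline, live)
```

With this toy data, the live `age` values sit well above the training range and one `balance` value is missing, so both features are flagged.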

How can businesses react to model drift detection?

- Once model drift is detected, businesses can take corrective actions such as retraining the model, updating training data, or updating the model itself. They can also set up CloudWatch alarms to trigger these actions when certain thresholds are violated.
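A threshold-based alarm of this kind might be defined roughly as follows. The sketch only assembles keyword arguments in the shape that boto3's CloudWatch `put_metric_alarm` call accepts and never contacts AWS. The helper name, endpoint, schedule, and feature names are hypothetical, and the namespace, metric-name pattern, and dimension names are assumptions that should be checked against the Model Monitor documentation before use.

```python
def build_drift_alarm(endpoint_name, schedule_name, feature, threshold, sns_topic_arn=None):
    """Assemble put_metric_alarm keyword arguments (hypothetical helper, no AWS calls)."""
    params = {
        "AlarmName": f"{endpoint_name}-{feature}-drift",
        "AlarmDescription": f"Baseline drift on feature '{feature}' exceeded {threshold}",
        # Assumed namespace and metric naming for data-quality drift metrics.
        "Namespace": "aws/sagemaker/Endpoints/data-metrics",
        "MetricName": f"feature_baseline_drift_{feature}",
        "Dimensions": [
            {"Name": "Endpoint", "Value": endpoint_name},
            {"Name": "MonitoringSchedule", "Value": schedule_name},
        ],
        "Statistic": "Average",
        "Period": 3600,            # evaluate hourly, in seconds
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }
    if sns_topic_arn:
        # Notification target, for example a topic that starts a retraining workflow.
        params["AlarmActions"] = [sns_topic_arn]
    return params


# Hypothetical names, used only for illustration.
alarm = build_drift_alarm("churn-endpoint", "churn-monitor-schedule", "age", 0.1)
```

In practice the dictionary would be unpacked into the CloudWatch client call, and the alarm action would be wired to whatever corrective step the team has chosen, such as kicking off retraining.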

What is the role of SageMaker Studio in visualizing model monitoring results?

- SageMaker Studio can visualize data drift and accuracy drift metrics that are persisted in S3 buckets. It allows users to chart metrics against baselines for better analysis and understanding of model performance over time.

Can you provide an example of how to use SageMaker Model Monitor in a real-world scenario?

- In the demo, a Jupyter notebook is used to demonstrate hosting trained machine learning models on Amazon SageMaker, capturing inference requests and results, analyzing a training dataset to generate baseline statistics and constraints, and monitoring a live endpoint for violations against these baseline constraints.
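For a sense of what working with captured inference data involves, the sketch below parses one capture record. It assumes the JSON Lines layout commonly seen in SageMaker data capture files (a `captureData` object holding `endpointInput` and `endpointOutput`, plus `eventMetadata`) with CSV-encoded payloads; the sample record and the helper name are made up for illustration and are not taken from the session's notebook.

```python
import json

# A made-up capture record in the assumed JSON Lines layout.
SAMPLE_LINE = json.dumps({
    "captureData": {
        "endpointInput": {
            "observedContentType": "text/csv",
            "mode": "INPUT",
            "data": "132,25,113.2",
            "encoding": "CSV",
        },
        "endpointOutput": {
            "observedContentType": "text/csv; charset=utf-8",
            "mode": "OUTPUT",
            "data": "0.0254",
            "encoding": "CSV",
        },
    },
    "eventMetadata": {"eventId": "example-id", "inferenceTime": "2020-12-01T10:00:00Z"},
    "eventVersion": "0",
})


def parse_capture_line(line):
    """Extract features, prediction, and timestamp from one capture record."""
    record = json.loads(line)
    capture = record["captureData"]
    features = [float(x) for x in capture["endpointInput"]["data"].split(",")]
    prediction = float(capture["endpointOutput"]["data"])
    return {
        "features": features,
        "prediction": prediction,
        "inference_time": record["eventMetadata"]["inferenceTime"],
    }


parsed = parse_capture_line(SAMPLE_LINE)
```

Records parsed this way could then be compared against the baseline statistics and constraints to look for violations.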

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Model Monitoring with Sagemaker

AWS re:Invent 2020: Understand ML model predictions & biases with Amazon SageMaker Clarify

What is Amazon SageMaker?

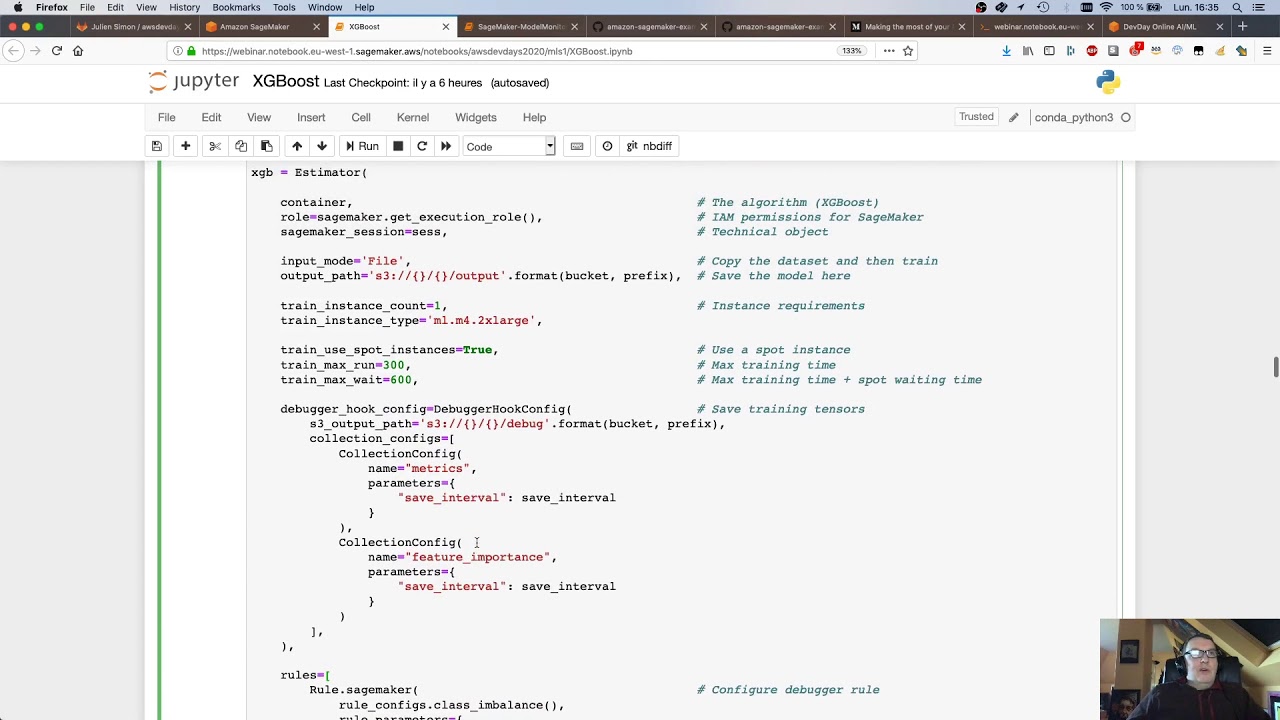

AWS DevDays 2020 - Deep Dive on Amazon SageMaker Debugger & Amazon SageMaker Model Monitor

Estimating Healthcare Receivables using Amazon SageMaker Canvas | Amazon Web Services

24 MOST Popular AWS Services - Explained in 13 mins (2024)

5.0 / 5 (0 votes)