Web Scraping with Linux Terminal feat. pup

Summary

TLDR: This video tutorial introduces web scraping techniques for automating data extraction from websites with a single command in the Linux terminal. Drawing on the host's experience in a digital marketing internship, it demonstrates scraping Amazon's website for price changes with tools like 'curl' and 'pup'. The video also encourages viewers to write their own web scraping scripts for fun projects, such as a quotation finder.

Takeaways

- 😀 The video teaches web scraping techniques for automating data retrieval from websites with Linux terminal commands.

- 🛠 It emphasizes the efficiency of web scraping: the creator claims it saved them 50% of their time during a digital marketing internship.

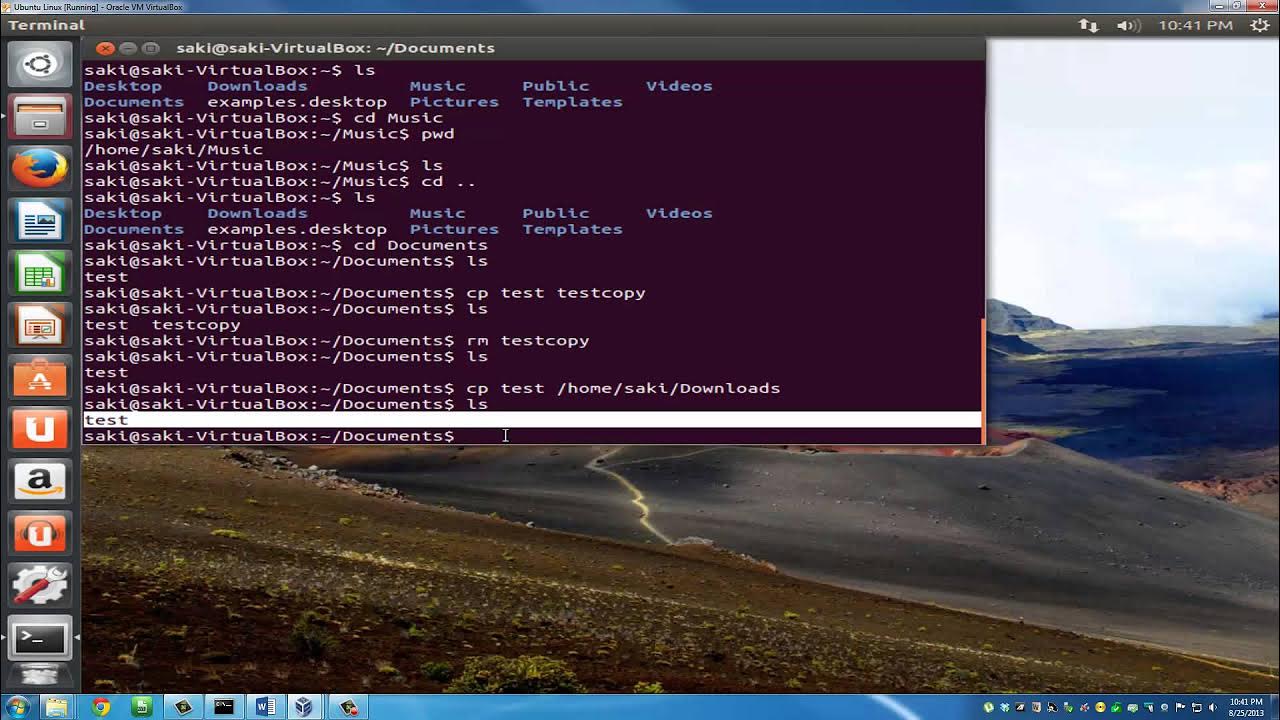

- 🔍 The creator demonstrates fetching a webpage's HTML with the 'curl' command, using Amazon.in as the target.

- 👀 The video highlights the Network tab in the browser's developer tools as the way to identify the correct URL to scrape.

- 📝 A step-by-step guide is provided on how to filter out unnecessary data and keep only the required HTML content.

- 🌐 The video explains how to make 'curl' mimic a browser's request so that the terminal fetches the same content the browser does.

- 📑 The video introduces 'pup', a command-line tool that simplifies scraping by extracting specific elements from a webpage's HTML using CSS selectors.

- 📈 The creator uses 'pup' to extract a product's price, then refines the output down to the numerical price value.

- 💡 As a practical example, the video builds a bash script that monitors price changes and sends a notification when the price drops below a certain threshold.

- 📝 The script provided can be adapted to other websites and used as a template for similar web scraping tasks.

- 💡 The video concludes with a challenge for viewers to create a 'quotation finder' program using the demonstrated web scraping techniques.

Q & A

What is the main topic of the video?

-The video demonstrates web scraping from a Linux terminal, using the example of monitoring price changes on Amazon India.

Why is web scraping considered a must-learn skill according to the video?

-Web scraping can save a significant amount of time whenever information has to be gathered from websites, as the creator found during a digital marketing internship.

What is the purpose of the example given in the video?

-The purpose of the example is to show how to scrape the price of a 'Moyu 3x3 Speed Cube' from Amazon India and set up a script to get notified if the price drops.

Why is it necessary to use the Network tab of Inspect Element to get the HTML document?

-Modern websites like Amazon are bloated, and simply copying the URL from the address bar won't necessarily fetch the HTML document needed for scraping. The Network tab shows the actual request the browser made for that document, so the terminal can reproduce it.
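A minimal sketch of confirming that the terminal received the same document the browser rendered: save the response to a file, then search it for an `id` seen in the browser's Elements panel. The function names are hypothetical; in practice the URL would come from the document request in the Network tab (Chromium- and Firefox-based browsers offer a "Copy as cURL" option there).

```shell
# Download a page to a file. --compressed requests a compressed response and
# decodes it, as a browser does; --fail returns non-zero on HTTP errors (4xx/5xx).
fetch_to_file() {
  local url=$1 out=$2
  curl --silent --compressed --fail --location --max-time 30 --output "$out" "$url"
}

# Succeed if the saved HTML contains an element with the given id.
# This is a plain text search that assumes double-quoted attributes:
# a sanity check, not an HTML parser.
has_element_id() {
  local file=$1 element_id=$2
  grep -q "id=\"$element_id\"" "$file"
}
```

For example, `fetch_to_file 'https://www.amazon.in/dp/EXAMPLE' page.html && has_element_id page.html productTitle` (URL and id are placeholders) succeeds only if the downloaded page contains the element inspected in the browser.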

What is the significance of the user agent in the HTTP request?

-The user agent identifies the client making the request, and servers tailor their response to it. Sending a browser's user agent from the terminal makes the server return the same content it would send to a browser.
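A sketch of sending a browser-like user agent with curl's `--user-agent` (short form `-A`) option. The user-agent string is an illustrative desktop Firefox value, not the one used in the video; the exact value a browser sends is visible in the request headers in the Network tab.

```shell
# Example desktop-browser user agent, for illustration only; copy the exact
# value your browser sends from the request headers in the Network tab.
BROWSER_UA='Mozilla/5.0 (X11; Linux x86_64; rv:126.0) Gecko/20100101 Firefox/126.0'

# Fetch a URL to stdout, identifying as a browser instead of curl's
# default "curl/<version>" user agent.
fetch_as_browser() {
  curl --silent --compressed --location --max-time 30 \
    --user-agent "$BROWSER_UA" "$1"
}
```

Running `fetch_as_browser https://httpbin.org/user-agent` should echo the browser string back as JSON, a quick way to check that the header is actually being sent.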

Why is it recommended to prioritize the use of an ID over a class name when scraping web elements?

-It is recommended to prioritize the use of an ID over a class name because an ID is unique to a single element on a page, whereas a class name can be shared by multiple elements, which can lead to incorrect data being scraped.
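A sketch of the difference using pup's CSS selectors and its `text{}` display function, which prints the text inside each matched element. The helper names and the sample markup in the comments are hypothetical; on a real page, the id or class comes from Inspect Element.

```shell
# Print the text of the element with the given id. Ids are meant to be
# unique, so this should match at most one element.
text_by_id() {
  pup "#$1 text{}"
}

# Print the text of every element carrying the given class. Unrelated
# elements can share a class, so this may print several lines.
text_by_class() {
  pup ".$1 text{}"
}

# Example (hypothetical markup):
#   html='<span id="deal" class="price">₹899.00</span><span class="price">₹1,499.00</span>'
#   printf '%s\n' "$html" | text_by_id deal      # only the deal price
#   printf '%s\n' "$html" | text_by_class price  # both prices
```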

What is the tool 'pup' used for in the video?

-The tool 'pup' lets the user select specific HTML elements with CSS selectors, making it easy to extract the desired data from a webpage.

How does the 'pup' tool help avoid regular expressions in web scraping?

-The 'pup' tool selects and extracts HTML elements with CSS selectors, which is more straightforward and reliable than matching raw HTML with regular expressions and is considered better practice for web scraping.
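A sketch contrasting the two approaches on the same fragment. The regex version depends on the exact markup (attribute order, quoting, spacing); the pup version depends only on the element and class. The function names and the `price` class are illustrative assumptions, not taken from the video.

```shell
# Regex approach: pull the text out of <span class="price">...</span> with sed.
# It stops matching as soon as another attribute appears before class="price"
# or the quoting or spacing changes.
price_with_regex() {
  sed -n 's/.*<span class="price">\([^<]*\)<\/span>.*/\1/p'
}

# pup approach: select span elements with class "price" and print their text,
# regardless of attribute order or formatting.
price_with_pup() {
  pup 'span.price text{}'
}
```

Adding an `id` attribute in front of `class="price"` is enough to make the regex return nothing while the pup selector still finds the element.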

What command is used to remove the rupee symbol and any floating point from the price in the script?

-The 'sed' command deletes the first two characters (presumably the rupee symbol), and then 'cut', splitting on the decimal point, discards the fractional part of the price.
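A sketch of the clean-up step. The video's version deletes the first two characters; this variant swaps that for deleting every character that is not a digit or a decimal point, which removes the rupee sign, spaces, and thousands separators regardless of locale. `cut -d. -f1` then keeps only the part before the decimal point. The function name is hypothetical.

```shell
# Convert a scraped price string such as "₹1,299.00" to a whole number (1299).
parse_price() {
  # sed: drop everything except digits and '.', e.g. the rupee sign and commas.
  # cut: split on '.' and keep field 1, discarding the paise.
  printf '%s\n' "$1" | sed 's/[^0-9.]//g' | cut -d. -f1
}
```

Stripping by character class rather than by position also keeps working if the page starts formatting prices with a space after the symbol or with thousands separators.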

How can the script be made more efficient and cleaner?

-The script can be made more efficient and cleaner by breaking down the process into multiple commands, storing intermediate results in variables, and using conditional statements for decision-making.
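A sketch of the decision step with intermediate results held in variables and an `if`/`elif` chain. The function name, messages, and exit codes (0 dropped, 1 not dropped, 2 no price found) are hypothetical choices that let a caller branch on the result.

```shell
# Decide whether a scraped price string is below a threshold.
# Intermediate results live in variables so each step can be inspected.
check_price() {
  local raw=$1 threshold=$2 price
  # Keep digits and '.', then drop everything after the decimal point.
  price=$(printf '%s\n' "$raw" | sed 's/[^0-9.]//g' | cut -d. -f1)

  if [ -z "$price" ]; then
    echo "no price found"
    return 2
  elif [ "$price" -lt "$threshold" ]; then
    echo "price dropped to $price"
  else
    echo "price is still $price"
    return 1
  fi
}
```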

What is the final application of the script as demonstrated in the video?

-The final application of the script is to run it as a cron job that monitors the price of the 'Moyu 3x3 Speed Cube' on Amazon India and sends a notification if the price drops below a certain threshold.
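A sketch of the whole monitor as one script suitable for cron, assuming `curl`, `pup`, and `notify-send` (from libnotify) are installed. The script name, user-agent string, selector, and threshold are hypothetical placeholders; `head -n 1` keeps only the first match in case the selector matches more than one element.

```shell
#!/usr/bin/env bash
# price-watch.sh (hypothetical name): fetch a product page, extract the price
# with pup, and send a desktop notification when it falls below a threshold.
# Usage: price-watch.sh URL CSS_SELECTOR THRESHOLD

watch_price() {
  local url=$1 selector=$2 threshold=$3 raw price
  raw=$(curl --silent --compressed --location --max-time 30 \
          --user-agent 'Mozilla/5.0 (X11; Linux x86_64; rv:126.0) Gecko/20100101 Firefox/126.0' \
          "$url" | pup "$selector text{}" | head -n 1)
  # Keep digits and '.', then drop the decimals.
  price=$(printf '%s\n' "$raw" | sed 's/[^0-9.]//g' | cut -d. -f1)

  if [ -z "$price" ]; then
    echo "price-watch: no price found for $url" >&2
    return 2
  fi
  if [ "$price" -lt "$threshold" ]; then
    notify-send "Price drop" "Now ₹$price (threshold ₹$threshold)"
  fi
}

# Run only when called with all three arguments, e.g. from cron.
if [ "$#" -eq 3 ]; then
  watch_price "$1" "$2" "$3"
fi
```

A crontab entry such as `*/30 * * * * /home/you/bin/price-watch.sh 'https://www.amazon.in/dp/EXAMPLE' '#price-id' 800` (all values hypothetical) would run the check every 30 minutes. Cron does not inherit the desktop session's environment, so `notify-send` may also need `DISPLAY` and `DBUS_SESSION_BUS_ADDRESS` set before a notification can appear.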

What additional project is suggested for the viewers to try out web scraping?

-The viewers are suggested to try out a project where they create a 'quotation finder' program that scrapes famous quotes from a website like Google, using the techniques demonstrated in the video.
