Paper deep dive: Evolutionary Optimization of Model Merging Recipes

Summary

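TL;DR: This video introduces work by researchers at Sakana Lab who used evolutionary algorithms to automate model merging. They combine several models, each specialized for a different task, into a new foundation model, with a particular focus on improving the ability to answer math problems posed in Japanese. The evolutionary optimization works in both the parameter space and the data flow space to find effective merges. As a result, accuracy on Japanese math problems improves substantially, and merging a language model with a vision model also improves the ability to answer questions about images.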

Takeaways

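- 🌟 Model merging combines multiple models, each specialized for a different task, into a more capable foundation model.

- 🔍 Sakana Lab in Japan researches model merging using methods inspired by swarm intelligence and biology.

- 🧬 The work applies evolutionary algorithms to automate model merging in both the parameter space and the data flow space.

- 🔗 The goal of model merging is to integrate knowledge from different domains and create models with new capabilities and skills.

- 🤖 Evolutionary algorithms replace "black art" manual tuning with an automated search for good merge configurations.

- 📈 Results include higher accuracy on Japanese math problems and a successful merge of an image-understanding model with a Japanese language model.

- 🌐 Model merging is still a young field, and much more research is expected.

- 🚀 Sakana Lab's work offers an innovative approach to foundation model development.

- 📚 Evolutionary algorithms help explore very large parameter spaces to find the best combination of models.

- 🎯 The key is to pick the best model according to an evaluation metric and to let the evolutionary algorithm search for the best parameters.

- 🌈 Multi-objective genetic algorithms make it possible to optimize several different objectives at the same time.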
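Multi-objective genetic algorithms such as NSGA-II rank candidates by Pareto dominance: a candidate survives if no other candidate is at least as good on every objective and strictly better on at least one. The following is a minimal Python sketch of extracting the first Pareto front only. The two objectives (math accuracy, Japanese fluency) and all scores are hypothetical, and a full NSGA-II would add further fronts, crowding distance, and crossover/mutation.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better
    on at least one (all objectives are maximized)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(scores: List[Sequence[float]]) -> List[int]:
    """Indices of candidates that no other candidate dominates."""
    return [
        i for i, s in enumerate(scores)
        if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)
    ]


# Hypothetical (math accuracy, Japanese fluency) scores for four merged models.
candidates = [(0.52, 0.70), (0.48, 0.80), (0.45, 0.65), (0.55, 0.60)]
print(pareto_front(candidates))  # [0, 1, 3]
```

Candidate 2 is dropped because candidate 0 beats it on both objectives; the other three each trade one objective against the other, so none is strictly better overall.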

Q & A

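What kind of research does Sakana Lab do?

- Sakana Lab is an AI research lab working on a range of interesting topics, including swarm intelligence, biologically inspired methods, evolutionary algorithms, and artificial life.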

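What is model merging?

- Model merging builds a stronger foundation model by combining existing models. In practice it has been something of a black art that relies on human intuition and domain knowledge, and this work aims to automate it with an evolutionary approach.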
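A minimal sketch of the simplest kind of weight-space merge, assuming every model was fine-tuned from the same base and shares its parameter names and shapes. It uses task arithmetic (adding weighted "task vectors", i.e. fine-tuned minus base weights, back onto the base), which is a swapped-in illustration rather than the paper's full recipe. The per-model `weights` are exactly the kind of knobs that are otherwise tuned by hand; parameters are plain NumPy arrays in a dict standing in for a real checkpoint.

```python
import numpy as np


def task_arithmetic_merge(base, finetuned, weights):
    """Merge fine-tuned models by adding weighted task vectors to the shared base.

    base: dict of parameter name -> np.ndarray (the common pre-trained model).
    finetuned: list of dicts with the same keys and shapes as `base`.
    weights: one scalar per fine-tuned model (hypothetical, hand-picked here).
    """
    merged = {}
    for name, theta0 in base.items():
        # Task vector = fine-tuned weights minus base weights, scaled per model.
        delta = sum(w * (ft[name] - theta0) for w, ft in zip(weights, finetuned))
        merged[name] = theta0 + delta
    return merged


# Toy example: two hypothetical "models" with a single 3-element parameter.
base = {"w": np.zeros(3)}
math_model = {"w": np.array([1.0, 0.0, 0.0])}
japanese_model = {"w": np.array([0.0, 2.0, 0.0])}
merged = task_arithmetic_merge(base, [math_model, japanese_model], [0.5, 0.5])
print(merged["w"])
```

Choosing these weights by hand is where the "black art" comes in; an evolutionary search can treat them as the variables to optimize.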

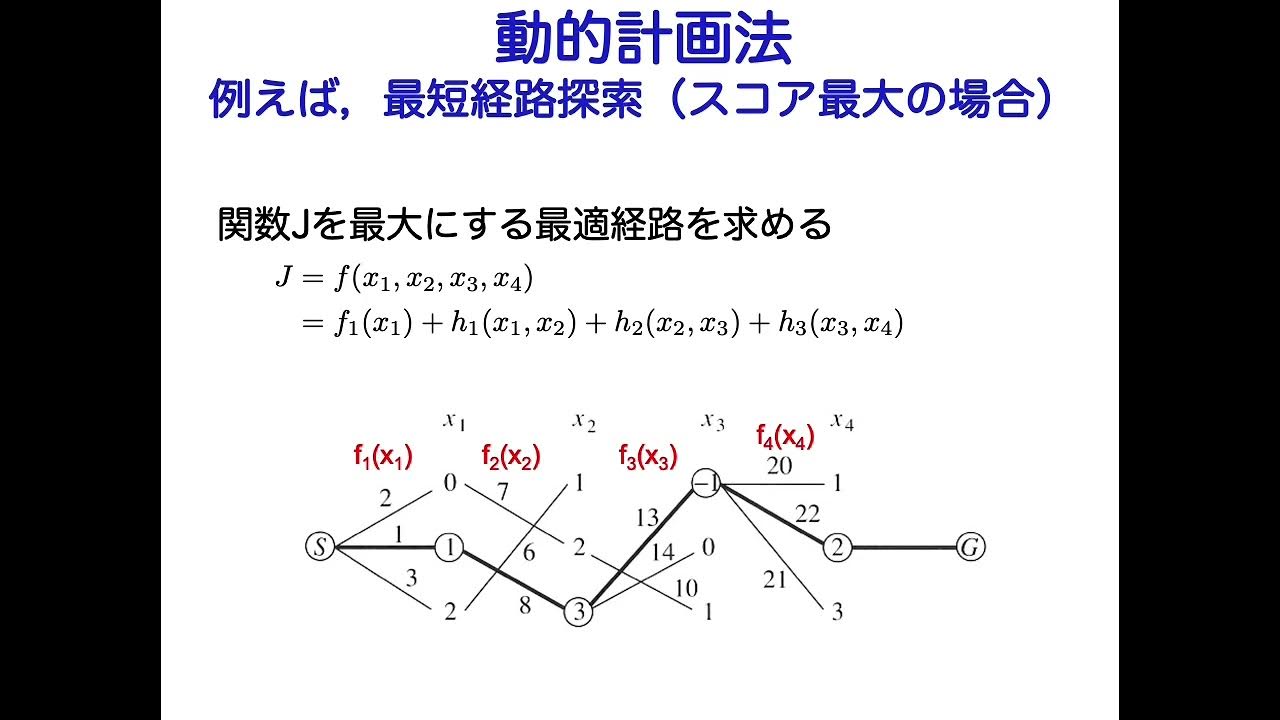

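How are evolutionary algorithms applied?

- Evolutionary algorithms are applied to model merging in both the parameter space and the data flow space. This makes it possible, for example, to combine models from different domains or to build a culturally aware vision-language model.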

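What are TIES-Merging and DARE?

- TIES-Merging reduces interference between the models' parameter updates: it trims redundant (small-magnitude) updates and resolves sign conflicts between models before averaging. DARE randomly drops a fraction of the fine-tuned parameter updates and rescales the remaining ones. Both reduce conflicts between the merged models and lead to better results.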
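A minimal NumPy sketch of both techniques on flat task vectors (fine-tuned weights minus base weights), assuming a shared base model. The names `dare`, `ties`, `drop_rate`, and `density` are illustrative, not a library API; real merging tools apply these per tensor and usually add per-model weights. Running DARE first and TIES afterwards mirrors the combination often called DARE-TIES.

```python
import numpy as np


def dare(delta, drop_rate, rng):
    """DARE: randomly zero a fraction `drop_rate` of a task vector and rescale
    the survivors by 1 / (1 - drop_rate), so the expected value is unchanged."""
    keep = rng.random(delta.shape) >= drop_rate
    return np.where(keep, delta / (1.0 - drop_rate), 0.0)


def ties(deltas, density):
    """TIES-Merging on flat task vectors: trim, elect sign, disjoint mean."""
    trimmed = []
    for d in deltas:
        # Trim: keep roughly the top `density` fraction of entries by magnitude.
        k = max(1, int(round(density * d.size)))
        thresh = np.sort(np.abs(d).ravel())[-k]
        trimmed.append(np.where(np.abs(d) >= thresh, d, 0.0))
    stacked = np.stack(trimmed)
    # Elect sign: per entry, the sign of the summed trimmed updates.
    elected = np.sign(stacked.sum(axis=0))
    # Disjoint merge: average only the nonzero updates that agree with that sign.
    agree = (np.sign(stacked) == elected) & (stacked != 0)
    counts = agree.sum(axis=0)
    summed = np.where(agree, stacked, 0.0).sum(axis=0)
    return np.divide(summed, counts, out=np.zeros_like(summed), where=counts > 0)


# DARE-TIES style combination on hypothetical task vectors.
rng = np.random.default_rng(0)
base = np.zeros(4)
task_vectors = [np.array([0.5, -0.2, 0.1, 0.0]), np.array([0.3, 0.4, -0.05, 0.0])]
merged = base + ties([dare(d, drop_rate=0.5, rng=rng) for d in task_vectors], density=0.5)
print(merged)
```

In the sign-election step, the second entry keeps the positive update from the second model and discards the conflicting negative one from the first, which is the kind of conflict the Q&A answer above refers to.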

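What is a Frankenmerge?

- A Frankenmerge builds a new model by stacking layers taken from different models whose layers have the same shape, instead of averaging their weights. It relies on the intuition that each layer of a Transformer changes the data passing through it only slightly, so a layer can still work when it receives the output of a layer from another model.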
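A toy NumPy sketch of layer stacking, assuming each "layer" is a residual block x -> x + tanh(Wx) standing in for a Transformer block. The residual form is what makes the intuition above plausible: every layer only nudges the hidden state, so layers from two models with the same hidden size can be chained. The recipe format (a list of `(model_name, layer_index)` pairs) and all weights are hypothetical.

```python
import numpy as np


def make_layer(weight):
    """Toy residual block x -> x + tanh(W x), standing in for a Transformer layer."""
    return lambda x: x + np.tanh(weight @ x)


def frankenmerge(models, recipe):
    """Stack layers from several same-width models according to a recipe.

    models: dict of name -> list of layers; recipe: list of (name, layer_index).
    """
    return [models[name][idx] for name, idx in recipe]


def forward(layers, x):
    """Run the hidden state through the stacked layers in order."""
    for layer in layers:
        x = layer(x)
    return x


rng = np.random.default_rng(0)
dim = 4
models = {
    "a": [make_layer(0.1 * rng.standard_normal((dim, dim))) for _ in range(3)],
    "b": [make_layer(0.1 * rng.standard_normal((dim, dim))) for _ in range(3)],
}
# Hypothetical recipe: interleave layers of both models (5 layers in total).
merged = frankenmerge(models, [("a", 0), ("b", 0), ("a", 1), ("b", 1), ("a", 2)])
x = rng.standard_normal(dim)
y = forward(merged, x)
print(len(merged), y.shape)
```

No weights are averaged here; the merged model simply reuses existing layers in a new order, which is why the layer shapes must match.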

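What is the CMA strategy in evolutionary algorithms?

- CMA-ES (Covariance Matrix Adaptation Evolution Strategy) is an evolutionary method for continuous optimization over many variables. It explores the parameter space by sampling candidates from a multivariate normal distribution, selecting the best-performing ones, and updating the distribution's mean and covariance, so the search moves step by step toward an optimum.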
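The sample, select, update loop can be sketched in NumPy as follows. This is a deliberately simplified variant: it keeps CMA-ES's weighted recombination and rank-μ covariance update but omits evolution paths and step-size adaptation, so it is not full CMA-ES, and `c_mu` and the other defaults are hypothetical choices. For real use, an established implementation such as the `cma` package (`CMAEvolutionStrategy` with its ask/tell interface) is preferable.

```python
import numpy as np


def simple_cma_like_es(f, x0, sigma=0.5, popsize=16, iters=60, seed=0):
    """Minimize f with a stripped-down CMA-style evolution strategy.

    Keeps weighted recombination and the rank-mu covariance update;
    omits evolution paths and step-size control (so not full CMA-ES).
    """
    rng = np.random.default_rng(seed)
    mean = np.asarray(x0, dtype=float)
    n = mean.size
    cov = np.eye(n)
    mu = popsize // 2
    # Log-decreasing recombination weights for the mu best samples.
    w = np.log(mu + 0.5) - np.log(np.arange(1, mu + 1))
    w /= w.sum()
    c_mu = 0.3  # covariance learning rate (hypothetical choice)
    for _ in range(iters):
        # 1) Sample candidate solutions from N(mean, sigma^2 * cov).
        z = rng.multivariate_normal(np.zeros(n), cov, size=popsize)
        candidates = mean + sigma * z
        # 2) Select the mu best candidates (lower fitness is better).
        best = np.argsort([f(x) for x in candidates])[:mu]
        z_best = z[best]
        # 3) Move the mean toward the weighted average of the selected steps.
        mean = mean + sigma * (w @ z_best)
        # 4) Rank-mu update: shift the covariance toward the successful steps.
        cov = (1 - c_mu) * cov + c_mu * (z_best.T * w) @ z_best
        cov = (cov + cov.T) / 2  # keep it numerically symmetric
    return mean


# Usage: a shifted sphere stands in for "negative accuracy of a merge recipe".
target = np.array([1.0, -2.0])
best = simple_cma_like_es(lambda x: float(np.sum((x - target) ** 2)), [0.0, 0.0], iters=100)
print(best)
```

In a merging setting, `x` would hold the merge hyperparameters (for example per-model weights and densities) and `f` would evaluate the merged model on a development set.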

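What is the challenge of merging in the data flow space?

- The search space in the data flow space is enormous, which makes the search computationally intractable. To address this, the paper fixes the order of the layers, stacks that sequence repeatedly, and learns which layers to include.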
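A minimal Python sketch of that reduced search space, assuming the layers of all source models are laid out in one fixed order and the whole sequence is repeated `repeats` times. The evolutionary search would then only tune the real-valued `indicator` array, one include/skip decision per slot (so 2^T possible paths for T slots). All names are hypothetical, and any extra scaling of the inputs passed between layers is omitted.

```python
from typing import List, Tuple


def build_inference_path(layers_per_model: List[int], repeats: int,
                         indicator: List[float]) -> List[Tuple[int, int]]:
    """Return the (model, layer) sequence selected by an indicator array.

    All layers of all models are laid out in a fixed order, the sequence is
    repeated `repeats` times, and slot t is kept only if indicator[t] > 0.
    """
    order = [(m, i) for m, n_layers in enumerate(layers_per_model) for i in range(n_layers)]
    slots = order * repeats
    if len(indicator) != len(slots):
        raise ValueError(f"indicator needs {len(slots)} entries, got {len(indicator)}")
    return [slot for slot, score in zip(slots, indicator) if score > 0]


# Two hypothetical 3-layer models, sequence repeated twice -> 12 slots.
indicator = [1, 1, -1, -1, 1, 1, 1, -1, -1, -1, -1, 1]
print(build_inference_path([3, 3], repeats=2, indicator=indicator))
# -> [(0, 0), (0, 1), (1, 1), (1, 2), (0, 0), (1, 2)]
```

Because the order is fixed, the search never has to consider arbitrary permutations of layers, only which slots to keep.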

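How were the merged models evaluated?

- The merged models were evaluated on a test dataset separate from the dataset used during optimization. Evolutionary search found the best weights and scaling factors, and the held-out results confirmed that the merged models performed as expected.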

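What did Sakana Lab's research achieve?

- The research produced a Japanese language model that answers Japanese math problems accurately, as well as a culturally aware Japanese vision-language model that answers questions about images. This showed that knowledge from different domains can be integrated into more capable AI models.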

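What is the significance of this research?

- The research shows that evolutionary algorithms can automate model merging and integrate knowledge from different domains to produce more advanced AI models. The technique also applies to building general-purpose language models and vision-language models, which broadens the range of AI applications.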
