Stanford CS224W: Machine Learning with Graphs | 2021 | Lecture 1.1 - Why Graphs

Summary

TLDR: CS224W, Machine Learning with Graphs, is an introductory course taught by Jure Leskovec, Associate Professor at Stanford University. The course emphasizes the importance of graph-structured data in domains such as social networks, biomedicine, and computer science, and explores how to apply novel machine learning methods to model and analyze these complex relational structures. The curriculum covers traditional machine learning methods, node embeddings, graph neural networks, and how to scale them. It also covers heterogeneous graphs, knowledge graphs, and their applications in logical reasoning, biomedicine, and industry, with the aim of equipping students to use graph data for accurate predictions and insights.

Takeaways

- 🌟 Introduction to CS224W, a course on Machine Learning with Graphs, taught by Jure Leskovec, an Associate Professor at Stanford University.

- 📈 Graphs are a fundamental data structure for representing entities, their relations, and interactions, moving beyond isolated data points to a network perspective.

- 🔍 Graphs can model various domains effectively, including computer networks, disease pathways, social networks, economic transactions, and more, capturing the relational structure of these domains.

- 🧠 Graph representations are especially valuable for capturing complex relationships, such as the connections between neurons in the brain or the bonds between atoms in a molecule.

- 📊 The course aims to explore how machine learning, particularly deep learning, can be applied to graph-structured data to improve predictions and model accuracy.

- 🌐 Graphs are harder for deep learning to process than sequences and grids: they have arbitrary size and complex topology, with no spatial locality.

- 🤖 The development of neural networks for graph data is a new frontier in deep learning and representation learning research, focusing on end-to-end learning without manual feature engineering.

- 📈 The course introduces representation learning, whose goal is to map each node of a graph to a d-dimensional embedding so that similar nodes in the network end up close together, making the embeddings useful inputs for downstream machine learning.

- 🎓 The course will cover a range of topics, from traditional machine learning methods for graphs to advanced deep learning approaches like graph neural networks.

- 🔗 Special attention will be given to graph neural network architectures, including Graph Convolutional Networks (GCN), GraphSAGE, and Graph Attention Networks (GAT).

- 📚 The course will also delve into heterogeneous graphs, knowledge graphs, logical reasoning, and applications in biomedicine, scientific research, and industry.

Q & A

What is the primary focus of CS224W, Machine Learning with Graphs course?

-The primary focus of the CS224W course is to explore graph-structured data and teach students how to apply novel machine learning methods to it, with an emphasis on understanding and utilizing the relational structure of data represented as graphs.

Why are graphs considered a powerful language for describing and analyzing entities and their interactions?

-Graphs are a powerful language because they allow us to represent the world or a given domain not as isolated data points but as networks with relations between entities. This representation enables the construction of more faithful and accurate models of the underlying phenomena in various domains.

Provide examples of different types of data that can be naturally represented as graphs.

-Examples of data that can be represented as graphs include computer networks, disease pathways, networks of particles in physics, food webs, social networks, economic networks, communication networks, scene graphs, computer code, and molecules.

What are natural graphs, or networks? Give an example.

-Natural graphs, or networks, are domains whose underlying structure is inherently a graph, as opposed to data that we choose to represent as a graph. An example is a social network: society is a collection of billions of individuals and the connections between them. Similar natural networks arise from communication between electronic devices and from financial transactions.
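To make the network view concrete, here is a minimal Python sketch, assuming a social network given as a list of friendship pairs (the names are hypothetical). It stores the graph as an adjacency list and answers a relational query, friends of friends, that has no counterpart when each person is treated as an isolated data point.

```python
from collections import defaultdict

def build_graph(edges):
    """Build an undirected adjacency list (node -> set of neighbors)."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)  # friendship is mutual, so store both directions
    return dict(adj)

def friends_of_friends(adj, person):
    """People exactly two hops away who are not already direct friends."""
    direct = adj.get(person, set())
    two_hop = set()
    for friend in direct:
        two_hop |= adj[friend]
    return two_hop - direct - {person}

# Hypothetical friendships
edges = [("alice", "bob"), ("bob", "carol"), ("carol", "dave"), ("alice", "erin")]
social = build_graph(edges)
print(sorted(social["bob"]))                # ['alice', 'carol']
print(friends_of_friends(social, "alice"))  # {'carol'}
```

The adjacency-list layout keeps each neighbor lookup cheap, which is why it is a common default for sparse real-world networks.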

How does the course address the challenges of processing graphs in deep learning?

-The course discusses the challenges of processing graphs in deep learning, such as their arbitrary size, complex topology, and lack of spatial locality. It then explores how to develop neural networks that are more broadly applicable to complex data types like graphs and delves into the latest deep learning approaches for relational data.
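One way to see why graphs lack the fixed layout of a grid or sequence: the same graph has many adjacency matrices, one per node ordering. The sketch below (node names hypothetical) builds two for a three-node path. A model that read the flattened matrix as a fixed-length input vector would see two different inputs for the same graph, while an order-independent quantity such as the sorted degree sequence stays the same.

```python
def adjacency_matrix(edges, order):
    """Adjacency matrix of an undirected graph under a chosen node order."""
    index = {v: i for i, v in enumerate(order)}
    n = len(order)
    m = [[0] * n for _ in range(n)]
    for u, v in edges:
        m[index[u]][index[v]] = 1
        m[index[v]][index[u]] = 1
    return m

edges = [("a", "b"), ("b", "c")]  # the path a-b-c
m1 = adjacency_matrix(edges, ["a", "b", "c"])
m2 = adjacency_matrix(edges, ["b", "a", "c"])  # same graph, different order

flat1 = [x for row in m1 for x in row]
flat2 = [x for row in m2 for x in row]
print(flat1)  # [0, 1, 0, 1, 0, 1, 0, 1, 0]
print(flat2)  # [0, 1, 1, 1, 0, 0, 1, 0, 0]

# An order-independent summary (sorted degrees) agrees for both orderings
print(sorted(sum(row) for row in m1) == sorted(sum(row) for row in m2))  # True
```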

What is representation learning in the context of graph-structured data?

-Representation learning for graph-structured data means learning a good representation of the graph automatically, so that it can be fed to downstream machine learning algorithms. It maps each node to a d-dimensional embedding such that similar nodes in the network are embedded close together, capturing the structure and relationships in the data without manual feature engineering.
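The methods in the course learn node embeddings from data. As a training-free stand-in, the sketch below computes a classical spectral embedding with d = 1: each node's coordinate is its entry in the Fiedler vector (the eigenvector of the graph Laplacian L = D - A with the smallest nonzero eigenvalue), found by power iteration. This technique is swapped in for illustration and is not one of the course's learned methods; it assumes a small, connected, undirected graph, and the two-triangle example graph is hypothetical. It still shows the property embeddings aim for: nodes in the same tightly connected group land close together.

```python
from math import sqrt

def fiedler_embedding(adj, iters=200):
    """1-D spectral embedding of a connected undirected graph via power iteration."""
    nodes = sorted(adj)
    n = len(nodes)
    idx = {v: i for i, v in enumerate(nodes)}
    # c exceeds the largest eigenvalue of L (which is at most 2 * max degree),
    # so the top eigenvectors of M = cI - L are the bottom eigenvectors of L
    c = 2 * max(len(adj[v]) for v in nodes) + 1
    x = [float(i) for i in range(n)]  # deterministic, non-constant start vector
    for _ in range(iters):
        mean = sum(x) / n
        x = [xi - mean for xi in x]  # project out the trivial constant eigenvector
        y = []
        for v in nodes:
            i = idx[v]
            lap = len(adj[v]) * x[i] - sum(x[idx[u]] for u in adj[v])  # (Lx)_v
            y.append(c * x[i] - lap)  # (Mx)_v
        norm = sqrt(sum(yi * yi for yi in y))
        x = [yi / norm for yi in y]
    return {v: x[idx[v]] for v in nodes}

# Hypothetical graph: triangles {a, b, c} and {d, e, f} joined by the edge c-d
adj = {
    "a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"},
    "d": {"c", "e", "f"}, "e": {"d", "f"}, "f": {"d", "e"},
}
emb = fiedler_embedding(adj)
for v in sorted(emb):
    print(v, round(emb[v], 3))
```

The sign of the vector is arbitrary; what matters is that a, b, c share one sign and d, e, f the other, so each triangle forms its own cluster on the line.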

Name some of the graph neural network architectures that will be covered in the course.

-The course will cover graph neural network architectures such as Graph Convolutional Networks (GCN), GraphSAGE, and Graph Attention Networks (GAT), among others.
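As a rough illustration of what one graph neural network layer computes, here is a minimal pure-Python sketch of a single GCN-style layer, following the commonly cited update H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), where A + I adds self-loops and D is the degree matrix of A + I. Each node combines its own and its neighbors' features with degree-based weights, then applies a shared linear map and a ReLU; architectures such as GraphSAGE and GAT change how neighbor information is weighted and combined. The weights and features below are hypothetical placeholders, not trained values, and practical implementations use sparse tensor libraries rather than dictionaries.

```python
from math import sqrt

def gcn_layer(adj, h, weight):
    """One GCN-style layer on an undirected graph without existing self-loops.

    adj:    node -> set of neighbors
    h:      node -> list of input features (length d_in)
    weight: d_in x d_out matrix as a list of rows
    """
    deg = {v: len(adj[v]) + 1 for v in adj}  # degree including the self-loop
    d_out = len(weight[0])
    out = {}
    for v in adj:
        # normalized sum over neighbors plus v itself (the self-loop)
        agg = [0.0] * len(h[v])
        for u in list(adj[v]) + [v]:
            coef = 1.0 / sqrt(deg[u] * deg[v])
            for k, x in enumerate(h[u]):
                agg[k] += coef * x
        # shared linear transform: (1 x d_in) @ (d_in x d_out)
        z = [sum(agg[i] * weight[i][j] for i in range(len(agg))) for j in range(d_out)]
        out[v] = [max(0.0, zj) for zj in z]  # ReLU
    return out

# Hypothetical path a-b-c with one-dimensional features
adj = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
h = {"a": [1.0], "b": [0.0], "c": [0.0]}
out = gcn_layer(adj, h, [[1.0]])
print(out)
```

After one layer, node b picks up part of a's signal, and stacking layers lets information travel further across the graph.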

What are the main differences between traditional machine learning approaches and representation learning?

-Traditional machine learning approaches require significant effort in designing proper features and ways to capture the structure of the data. In contrast, representation learning aims to automatically extract or learn features in the graph, eliminating the need for manual feature engineering and allowing the model to learn from the graph data directly.
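For contrast, the traditional pipeline starts from features designed by hand. A minimal sketch, assuming an undirected graph stored as an adjacency dict and using two classic hand-designed node features, degree and local clustering coefficient; the example graph is hypothetical. Representation learning aims to replace this manual step with features learned from the graph itself.

```python
from itertools import combinations

def handcrafted_features(adj):
    """Return {node: (degree, local clustering coefficient)}."""
    feats = {}
    for v, nbrs in adj.items():
        k = len(nbrs)
        # count edges that exist between pairs of v's neighbors
        links = sum(1 for u, w in combinations(sorted(nbrs), 2) if w in adj[u])
        # fraction of possible neighbor pairs that are actually connected
        clustering = 2 * links / (k * (k - 1)) if k > 1 else 0.0
        feats[v] = (k, clustering)
    return feats

# Hypothetical graph: triangle a-b-c plus a pendant node d attached to c
adj = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}
for node, (degree, cc) in handcrafted_features(adj).items():
    print(node, degree, round(cc, 3))
```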

How will the course structure its content over the 10-week period?

-The course proceeds week by week through traditional machine learning methods for graphs, generic node embeddings, graph neural networks, the expressive power and scaling of GNNs, heterogeneous graphs, knowledge graphs and logical reasoning, deep generative models for graphs, and applications in biomedicine, science, and industry. Graph neural networks and representation learning are the main focus.

What are some of the applications of graph-structured data and machine learning in biomedicine?

-In biomedicine, graph-structured data and machine learning can be applied to model genes and proteins regulating biological processes, analyze connections between neurons in the brain, and understand complex disease pathways, among other applications.

Can you explain the concept of a scene graph as mentioned in the lecture?

-A scene graph is a representation of relationships between objects in a real-world scene. It captures the interactions and spatial or functional relationships among various elements within the scene, organizing them into a graph structure where nodes represent objects and edges represent the relationships between those objects.
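A scene graph is naturally stored as directed, labeled edges. The minimal sketch below assumes each relationship is a (subject, relation, object) triple; the objects and relations are made up for illustration and do not come from any particular dataset.

```python
from collections import defaultdict

# Hypothetical scene: each edge is (subject, relation, object)
triples = [
    ("person", "riding", "horse"),
    ("person", "wearing", "hat"),
    ("horse", "on", "grass"),
]

def build_scene_graph(triples):
    """Map each object to its outgoing (relation, object) edges."""
    out_edges = defaultdict(list)
    for subj, rel, obj in triples:
        out_edges[subj].append((rel, obj))  # directed, labeled edge
    return out_edges

graph = build_scene_graph(triples)
objects = {s for s, _, _ in triples} | {o for _, _, o in triples}
print(sorted(objects))   # ['grass', 'hat', 'horse', 'person']
print(graph["person"])   # [('riding', 'horse'), ('wearing', 'hat')]
```

Keeping the relation label on each edge matters: "person riding horse" and "person next to horse" involve the same two objects but describe different scenes.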

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Shane Hutson, Introductory Physics

Lexicology as a linguistic subject | Z.T.Tukhtakhodjaeva (PhD) Associate Professor

Orientasi dan Kontrak Perkuliahan (Makul Tahfizh)

Introduction to Data Visualization - Intro

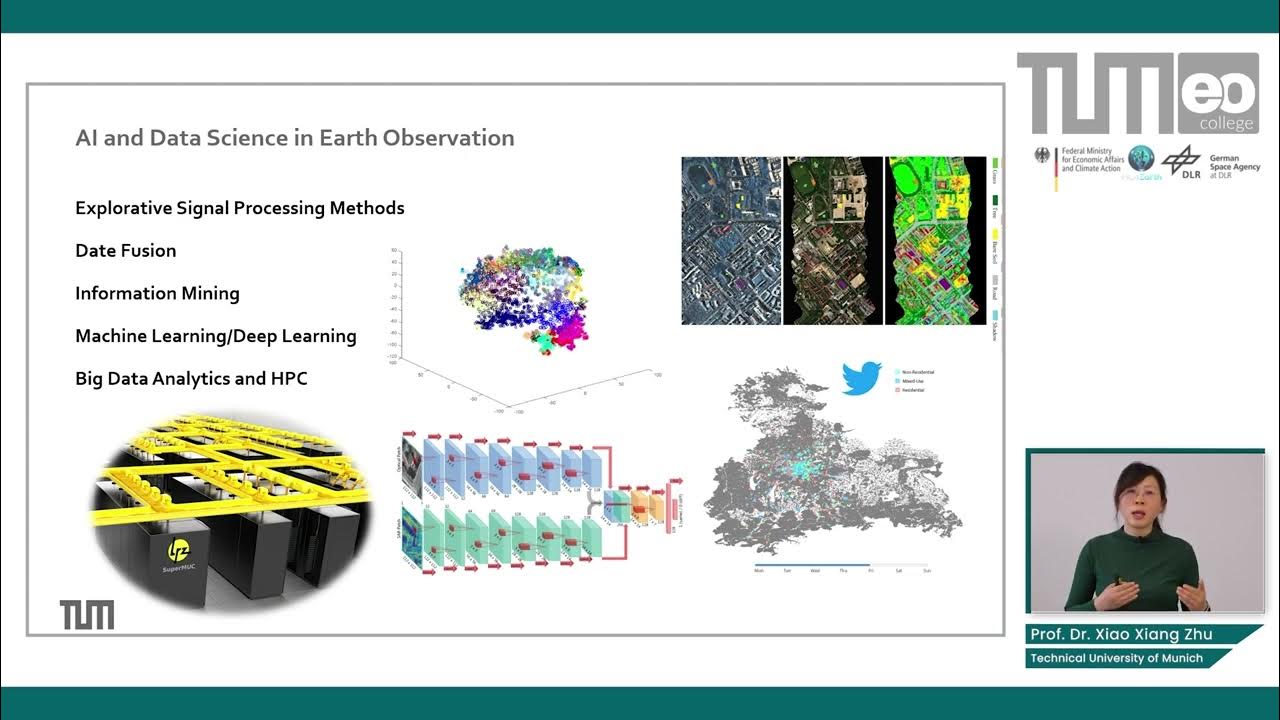

AI and Data Science in Earth Observation - Introduction

FREE guided hypnotherapy, with Stanford psychiatrist Dr Spiegel

5.0 / 5 (0 votes)