Elons NEW Prediction For AGI, METAs New Agents, New SORA Demo, China Surpasses GPT4, and more

Summary

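TLDR: This article surveys recent developments in AI, including Meta's plans to build a paid AI assistant and the future of AI agents. It notes that Meta may not openly release its 400-billion-parameter large language model, and covers Elon Musk's prediction that artificial general intelligence (AGI) will arrive in 2025. It also discusses AI commercialization, regulation, and competition between models, such as 01.AI's Yi-Large. Finally, it introduces interpretability research into how AI models work internally, such as the Golden Gate Claude study, which shows how a model ties a specific concept to its answers.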

Takeaways

- 🤖 Meta, Facebook's parent company, is developing a paid version of its AI assistant, possibly similar to the chatbot services offered by Google, OpenAI, and Anthropic.

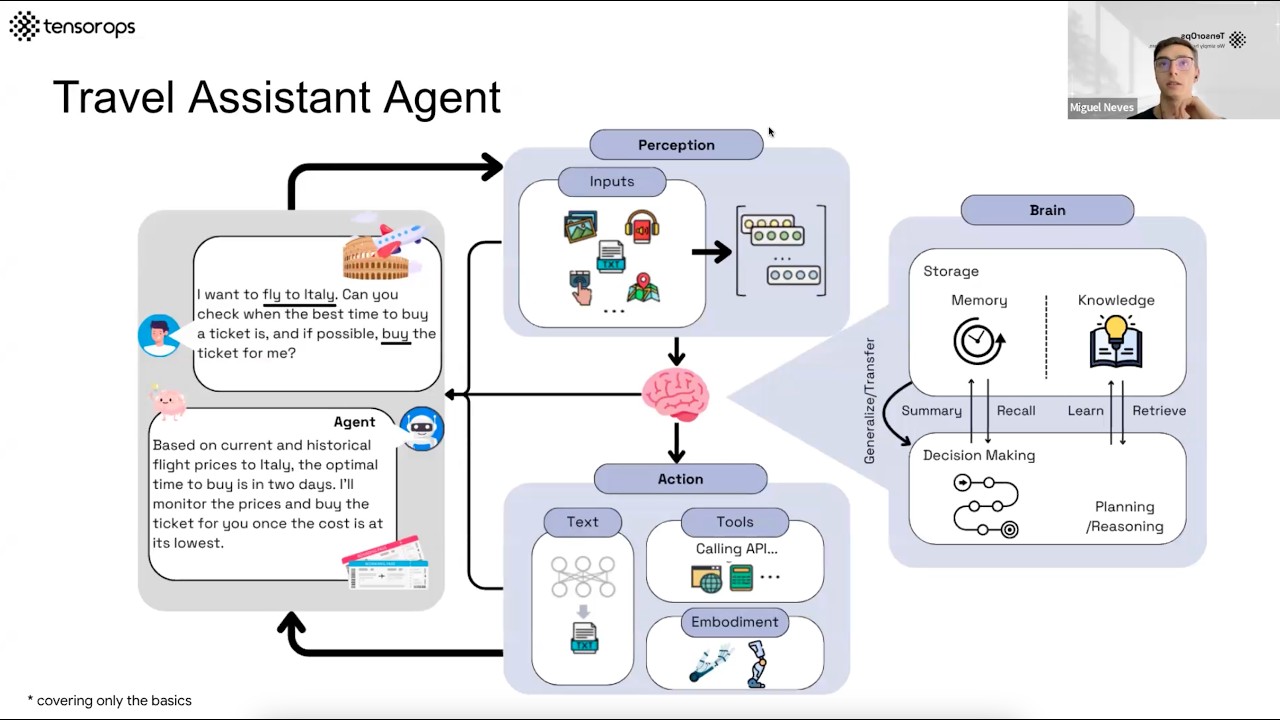

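- 🔍 Meta is also developing AI agents that can complete tasks without human supervision, a sign that it is investing in the next stage of AI technology.

- 👨‍💻 Meta is considering an engineering agent to assist with coding and software development, similar to GitHub Copilot.

- 💰 On commercialization, Meta employees say these agents will help businesses advertise on Meta's apps and may be used both internally and by customers.

- 🗣️ Rumor has it that Meta's 400-billion-parameter model may not be released openly, which is consistent with reports that Meta plans to charge for its future models.

- 🚀 Elon Musk predicts that we will have artificial general intelligence (AGI) next year (2025), which would imply major breakthroughs at the top AI labs.

- 🎥 At the VivaTech conference, a presenter showed how Voice Engine and ChatGPT can be combined to quickly create a comprehensive video about French history.

- 🛡️ Eric Schmidt says the most powerful future AI systems may need to be confined to military bases because their capabilities could be very dangerous.

- 🏆 The Yi-Large model developed by 01.AI outperformed GPT-4 and Llama 3 on benchmarks, showing that other companies are catching up.

- 🧠 Research on the Claude model identified the internal feature (a pattern of neuron activations) that fires when the model encounters text or images related to the Golden Gate Bridge, which helps us understand how AI works internally.

- 🔄 By adjusting the activation strength of specific features in Claude, researchers were able to influence the model's output, demonstrating progress in research on the interpretability and controllability of AI models.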
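
The last takeaway, adjusting the activation strength of a feature, can be illustrated with a toy sketch. This is not the researchers' actual code: it assumes a feature can be treated as a direction in a small activation vector, and every name and number here (`clamp_feature`, `bridge_direction`, the three-dimensional vectors) is hypothetical and chosen only for illustration.

```python
import math


def dot(a, b):
    """Dot product of two equal-length lists of floats."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(dot(v, v))
    if norm == 0:
        raise ValueError("feature direction must be non-zero")
    return [x / norm for x in v]


def feature_activation(hidden, direction):
    """How strongly the (hypothetical) feature is present in `hidden`."""
    return dot(hidden, normalize(direction))


def clamp_feature(hidden, direction, target):
    """Shift `hidden` along the feature direction so that the feature's
    activation equals `target`, leaving orthogonal components unchanged."""
    unit = normalize(direction)
    current = dot(hidden, unit)
    return [h + (target - current) * u for h, u in zip(hidden, unit)]


# Toy data (assumed): a 3-dimensional "hidden state" and a made-up feature direction.
hidden = [0.5, -1.0, 2.0]
bridge_direction = [1.0, 0.0, 1.0]

steered = clamp_feature(hidden, bridge_direction, target=10.0)
print("before:", feature_activation(hidden, bridge_direction))
print("after: ", feature_activation(steered, bridge_direction))
```

In a real model the steered activations would be passed on to later layers, which is how a strengthened feature could change the generated text; the sketch stops at the vector arithmetic.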

Q & A

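Is Meta developing a paid version of its AI assistant?

-Yes. Meta is developing a paid version of its AI assistant, and the service may resemble the chatbot services offered by Google, OpenAI, Anthropic, and Microsoft.

How is Meta's AI assistant service similar to the chatbot services from Google and Microsoft?

-Meta's AI assistant service would let users use these chatbots inside workplace applications, similar to the $20-per-month subscriptions offered by Google and Microsoft.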

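Is Meta also developing AI agents that can complete tasks without human supervision?

-Yes. Meta is developing AI agents that can complete tasks independently, which shows that it is putting resources into the future of AI.

How do AI agents differ from existing large language models (LLMs)?

-AI agents are the next generation of AI technology. Beyond understanding and generating language, they can carry out a broader range of tasks, such as programming and software development.

Does Meta plan to develop an engineering agent to assist with coding and software development?

-Yes. Meta plans to develop an engineering agent, similar to GitHub Copilot, to assist with coding and software development.

Has Meta's AI agent program produced any results yet?

-No specific results from Meta's AI agent program have been made public so far, but internal posts show that the company is actively exploring this area.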

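Does Meta plan to use a large model such as Llama 70B as the foundation for its AI agents?

-According to the discussion, Meta may consider using Llama 70B or another large model as the basis for its AI agents, but no specific plan has been made public.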

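What is Elon Musk's prediction about AGI (artificial general intelligence)?

-Elon Musk predicts that we will have AGI next year, which suggests he expects a major breakthrough in AI very soon.

What is Eric Schmidt's view on future AI systems?

-Eric Schmidt believes the most powerful future AI systems will need to be confined to military bases because their capabilities may be powerful enough to be dangerous.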

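What progress has 01.AI made in AI?

-01.AI's Yi-Large model outperformed GPT-4 and Llama 3 on benchmarks, which shows how quickly the company is advancing.

What does the research on Claude's neural network reveal about how AI works internally?

-The research shows how the model responds when particular features are activated, such as the Golden Gate Bridge feature. This helps us understand the model's internal workings and improves its interpretability.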

