Naïve Bayes Classifier - Fun and Easy Machine Learning

Summary

TLDR: This tutorial introduces the Naive Bayes algorithm, explaining its application in predicting whether or not to play golf based on weather conditions. It covers how probabilities are calculated from a dataset, including the likelihood of certain conditions like sunny, overcast, and humid weather. By calculating and comparing probabilities using Bayes' Theorem, the video demonstrates how to determine the likelihood of an outcome. The tutorial also highlights Naive Bayes' strengths, such as its simplicity and effectiveness in multi-class predictions, and discusses its uses in various fields like text classification, spam filtering, and recommendation systems.

Takeaways

- 😀 Naive Bayes is a machine learning algorithm that predicts the likelihood of an outcome based on input features, like weather conditions in the tutorial's golf game example.

- 😀 The algorithm uses Bayes' Theorem, which helps in calculating probabilities for different classes (such as 'play' or 'not play').

- 😀 Naive Bayes assumes that the input features are independent of each other given the class, and it is most effective when that assumption approximately holds.

- 😀 The tutorial demonstrates how to compute conditional probabilities for each feature based on the data (e.g., sunny, overcast, windy).

- 😀 The model's output is a probability for each class, and the class with the highest probability is the predicted outcome.

- 😀 The Naive Bayes algorithm is fast and simple, making it ideal for real-time predictions and scenarios where quick decisions are needed.

- 😀 One disadvantage of Naive Bayes is the assumption that all features are independent, which may not hold true in real-life situations.

- 😀 The 'zero frequency' problem occurs when a feature category in the test data hasn't been seen in the training data; this can be resolved with techniques like Laplace smoothing.

- 😀 Naive Bayes performs well with categorical data and can outperform some other models, like logistic regression, when the training dataset is small.

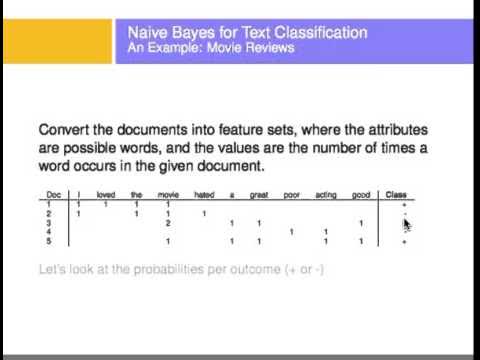

- 😀 Naive Bayes is widely used in text classification tasks, including spam filtering and sentiment analysis on social media platforms.

- 😀 Despite some limitations, Naive Bayes is well-suited for applications in credit scoring, medical data classification, and collaborative filtering for recommendation systems.

Q & A

What is Naive Bayes in machine learning?

-Naive Bayes is a classification algorithm based on Bayes' Theorem, used to predict the probability of a class given certain features. It assumes that the features are independent, which is often not the case in real-world data, but the model can still perform well in many scenarios.

How does Naive Bayes use Bayes' Theorem?

-Naive Bayes uses Bayes' Theorem to calculate the posterior probability, which represents the likelihood of a class given the observed evidence. The formula combines prior probabilities and likelihoods, normalizing by the total probability of the evidence.
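Written out, the relationship described above looks like this. The symbols are a notation chosen here for illustration (c for a class such as 'play', x for the observed evidence); the video may use different letters.

```latex
P(c \mid x) = \frac{P(x \mid c)\, P(c)}{P(x)}
```

Here P(c) is the prior, P(x | c) is the likelihood, P(x) is the total probability of the evidence, and P(c | x) is the posterior.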

What is the main assumption behind Naive Bayes?

-The main assumption behind Naive Bayes is that all features are independent of each other. This is often not true in real-world scenarios, but Naive Bayes still works well in practice due to its simplicity and efficiency.
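Under this assumption the likelihood of a whole set of features splits into one factor per feature. As a sketch, with x_1 to x_n standing for the individual features (notation assumed here, not taken from the video):

```latex
P(c \mid x_1, \dots, x_n) \;\propto\; P(c) \prod_{i=1}^{n} P(x_i \mid c)
```

The proportionality sign is there because the evidence term is the same for every class, so it can be left out when only the most probable class is needed.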

What are the components of Bayes' Theorem?

-Bayes' Theorem consists of four components: prior probability (how probable the hypothesis is before observing evidence), likelihood (how probable the observed evidence is if the hypothesis is true), normalizing constant (the total probability of the evidence, which ensures the posteriors sum to 1), and posterior probability (how probable the hypothesis is given the evidence).

What problem does Naive Bayes solve in the provided golf-playing example?

-In the golf-playing example, Naive Bayes helps determine the likelihood of playing golf based on weather conditions such as outlook, temperature, humidity, and wind speed, by calculating the probability of a 'yes' or 'no' outcome given these features.
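The calculation can be sketched in a few lines of Python. This summary does not reproduce the video's table, so the rows below are an assumption: they are the 14-row weather dataset commonly used with the golf example. The function names `train` and `posterior` are made up for this illustration.

```python
from collections import Counter, defaultdict

# ASSUMED DATA: the 14-row "play golf" weather table commonly used with this
# example. Columns: outlook, temperature, humidity, windy, play.
ROWS = [
    ("sunny", "hot", "high", False, "no"),
    ("sunny", "hot", "high", True, "no"),
    ("overcast", "hot", "high", False, "yes"),
    ("rainy", "mild", "high", False, "yes"),
    ("rainy", "cool", "normal", False, "yes"),
    ("rainy", "cool", "normal", True, "no"),
    ("overcast", "cool", "normal", True, "yes"),
    ("sunny", "mild", "high", False, "no"),
    ("sunny", "cool", "normal", False, "yes"),
    ("rainy", "mild", "normal", False, "yes"),
    ("sunny", "mild", "normal", True, "yes"),
    ("overcast", "mild", "high", True, "yes"),
    ("overcast", "hot", "normal", False, "yes"),
    ("rainy", "mild", "high", True, "no"),
]


def train(rows):
    """Count how often each class and each (feature value, class) pair occurs."""
    class_counts = Counter(row[-1] for row in rows)
    # Key: (feature index, class label) -> Counter of feature values
    feature_counts = defaultdict(Counter)
    for *values, label in rows:
        for i, value in enumerate(values):
            feature_counts[(i, label)][value] += 1
    return class_counts, feature_counts


def posterior(query, class_counts, feature_counts):
    """Return the normalized posterior P(class | query) for every class.

    No smoothing is applied, so this assumes at least one class has a
    non-zero score for the query.
    """
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        score = n / total  # prior P(class)
        for i, value in enumerate(query):
            score *= feature_counts[(i, label)][value] / n  # P(value | class)
        scores[label] = score
    evidence = sum(scores.values())  # normalizing constant
    return {label: s / evidence for label, s in scores.items()}


class_counts, feature_counts = train(ROWS)
result = posterior(("sunny", "cool", "high", True), class_counts, feature_counts)
print(result)  # about 0.205 for 'yes' and 0.795 for 'no'
```

With these assumed rows, a sunny, cool, humid, windy day comes out as 'no', because the 'no' class has the larger posterior.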

What is zero frequency in Naive Bayes and how can it be solved?

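-Zero frequency occurs when a feature category in the test dataset never appears with a given class in the training dataset. Its estimated conditional probability is then zero, and because the probabilities are multiplied together, the whole score for that class becomes zero regardless of the other features. This can be solved using smoothing techniques, like Laplace Estimation, which adds a small count (typically 1) to every category so that no estimated probability is exactly zero.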
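A minimal sketch of add-one (Laplace) smoothing follows. The function name `smoothed_likelihood` and the counts in the usage lines are illustrative assumptions: they imagine a class with 5 training rows and an outlook feature with 3 categories, one of which never occurs with that class.

```python
def smoothed_likelihood(count, class_total, n_categories, alpha=1.0):
    """Estimate P(value | class) with additive smoothing.

    count        -- times this feature value occurred with the class
    class_total  -- number of training rows in the class
    n_categories -- number of distinct values the feature can take
    alpha        -- pseudo-count added to every category (1.0 = Laplace)
    """
    return (count + alpha) / (class_total + alpha * n_categories)


# Hypothetical counts: 'overcast' never occurs with class 'no' (0 of 5 rows).
unsmoothed = 0 / 5
smoothed = smoothed_likelihood(0, 5, 3)
print(unsmoothed, smoothed)  # 0.0 0.125
```

Because the same pseudo-count is added to every category, the smoothed estimates for one feature still sum to 1 within a class.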

Why is Naive Bayes a good choice for text classification?

-Naive Bayes is widely used in text classification due to its effectiveness in multi-class problems and the assumption of independence between words, making it efficient for tasks like spam filtering and sentiment analysis.
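To make the word-independence idea concrete, here is a small word-count (multinomial-style) Naive Bayes spam filter in plain Python. It is a sketch under assumptions, not the tutorial's code: the four training messages are invented toy data, `fit` and `predict` are hypothetical names, add-one smoothing is used, and log probabilities are summed to avoid multiplying many small numbers.

```python
import math
from collections import Counter

# TOY DATA (invented for illustration): four short labelled messages.
TRAIN = [
    ("win money now", "spam"),
    ("free prize win", "spam"),
    ("meeting schedule today", "ham"),
    ("lunch meeting tomorrow", "ham"),
]


def fit(docs):
    """Count documents per class, words per class, and the vocabulary."""
    class_docs = Counter()
    word_counts = {}
    vocab = set()
    for text, label in docs:
        words = text.split()
        class_docs[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return class_docs, word_counts, vocab


def predict(text, class_docs, word_counts, vocab, alpha=1.0):
    """Return the class with the highest log posterior score."""
    n_docs = sum(class_docs.values())
    scores = {}
    for label, n in class_docs.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n / n_docs)  # log prior
        for word in text.split():
            if word not in vocab:
                continue  # skip words never seen in training
            # Smoothed P(word | class); each word is treated independently.
            p = (word_counts[label][word] + alpha) / (total_words + alpha * len(vocab))
            score += math.log(p)
        scores[label] = score
    return max(scores, key=scores.get)


model = fit(TRAIN)
print(predict("free money", *model))        # spam
print(predict("meeting tomorrow", *model))  # ham
```

Each word contributes its own factor to the score, which is exactly the independence assumption applied to text.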

What are some advantages of using Naive Bayes?

-Naive Bayes is fast, easy to implement, works well with small datasets, performs well with categorical input variables, and is effective in multi-class classification. It's also suitable for real-time predictions.

What are some disadvantages of Naive Bayes?

-Some disadvantages include the strong assumption of independent predictors, which is often unrealistic. It can also perform poorly if there is zero frequency for certain categories. And although the predicted class is often correct, the predicted probabilities themselves tend to be unreliable, which is why Naive Bayes is sometimes described as a 'bad estimator'.
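One way to see why the probabilities can be unreliable: if two features carry the same information, Naive Bayes counts that evidence twice. The sketch below uses invented numbers and a hypothetical helper `posterior_a` for a two-class problem; `copies` is how many times the same feature is repeated.

```python
def posterior_a(prior_a, lik_a, lik_b, copies=1):
    """Posterior of class A when one feature is counted `copies` times.

    lik_a and lik_b are P(feature | A) and P(feature | B). Treating
    duplicated features as independent multiplies the likelihood in again.
    """
    score_a = prior_a * lik_a ** copies
    score_b = (1 - prior_a) * lik_b ** copies
    return score_a / (score_a + score_b)


# Invented numbers: equal priors, feature twice as likely under A as under B.
print(round(posterior_a(0.5, 0.8, 0.4, copies=1), 3))  # 0.667
print(round(posterior_a(0.5, 0.8, 0.4, copies=2), 3))  # 0.8
```

The predicted class stays the same, but the stated confidence rises even though no new information was added.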

In which applications is Naive Bayes commonly used?

-Naive Bayes is commonly used in credit scoring, e-learning platforms, medical data classification, text classification, spam filtering, sentiment analysis, and recommendation systems, particularly due to its speed and ability to handle multi-class predictions.
