Machine Learning: Multinomial Naive Bayes Classifier with SK-Learn Demonstration

Summary

TLDRThis session covers the multinomial Naive Bayes classifier, particularly its application in text classification. The instructor explains when to use the model, focusing on datasets with unstructured text. Key topics include converting sentences into vector matrices, calculating word frequencies, and using the model to predict whether messages are spam or ham. The session also covers the formula and process for building a multinomial Naive Bayes model using scikit-learn, including tokenization, model training, and testing, culminating in the evaluation of the model's accuracy.

Takeaways

- 📚 The session discusses the Multinomial Naive Bayes classifier, suitable for text data sets with discrete features.

- 📈 Use Multinomial Naive Bayes when dealing with unstructured data represented in sentences.

- 🔢 The classifier calculates the probability of attributes for a given class label using a specific formula involving factorials and probabilities.

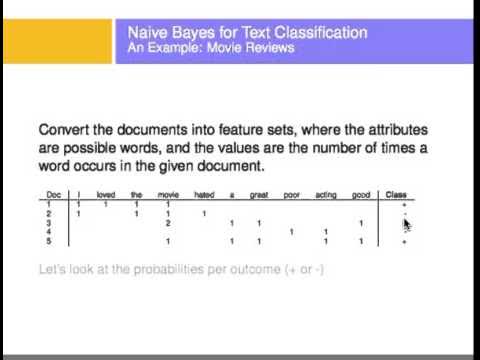

- 📝 The example given uses a training data set of sentences to predict whether messages are spam or ham.

- 🔑 The process involves converting sentences into a vector matrix representing word frequency counts.

- 📊 The training data set is used to build a model that can predict the class of new sentences.

- 🔍 The script explains how to convert unstructured data into a matrix form for model building and prediction.

- 💻 The solution involves calculating probabilities with respect to class labels and checking for the maximum probability to make predictions.

- 📋 The script outlines steps to convert a data set into vectors using scikit-learn's CountVectorizer.

- 📊 It demonstrates how to build the model, predict the class of new queries, and calculate the accuracy of the model using scikit-learn.

Q & A

What is a multinomial Naive Bayes classifier?

-A multinomial Naive Bayes classifier is a type of Naive Bayes classifier that is suitable for classification tasks with discrete features. It is particularly useful for text classification.

When should you use a multinomial Naive Bayes classifier?

-You should use a multinomial Naive Bayes classifier when your dataset is a text dataset or an unstructured dataset represented using sentences.

How does the multinomial Naive Bayes classifier work?

-The multinomial Naive Bayes classifier works by calculating the probability of the attributes for a given class label. It uses a formula that involves the product of all the word counts and their probabilities.

What is the role of word counts in the multinomial Naive Bayes classifier?

-Word counts are crucial in the multinomial Naive Bayes classifier as they represent the frequency count of each word occurring in the dataset. These counts are used to build the model for classification.

How do you convert sentences into a vector matrix for the classifier?

-To convert sentences into a vector matrix, you need to follow steps that include tokenization and creating a term-document matrix where rows represent documents and columns represent terms.

What is the purpose of adding 1 in the numerator of the probability calculation?

-Adding 1 in the numerator of the probability calculation is a technique known as Laplace smoothing, which helps to avoid the zero probability problem and ensures that the probability does not exceed 1.

How do you predict the class of a new sentence using the multinomial Naive Bayes classifier?

-To predict the class of a new sentence, you first convert the sentence into a vector matrix, then use the trained model to calculate the probability of the sentence belonging to each class label and select the class with the highest probability.

What is the significance of the denominator in the probability calculation?

-The denominator in the probability calculation is used to normalize the probabilities so that they sum up to 1. It includes the total number of words in the dataset to ensure the probability stays between 0 and 1.

How can you convert text data into vectors using scikit-learn?

-You can use the CountVectorizer function from scikit-learn's feature_extraction.text module to convert text data into vectors. This function performs tokenization and creates a term-document matrix.

What is the accuracy of the model mentioned in the script?

-The accuracy of the model mentioned in the script is 98.85 percent, as calculated using the accuracy_score function from scikit-learn.

How do you build the multinomial Naive Bayes model using scikit-learn?

-You build the multinomial Naive Bayes model using scikit-learn by importing the MultinomialNB class, creating an object of it, and then training the model using the fit function with the training data.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Naive Bayes, Clearly Explained!!!

Langsung Paham!!! Berikut Cara Mudah Membuat Sentiment Analysis dengan Python

Naïve Bayes Classifier - Fun and Easy Machine Learning

Text Classification Using Naive Bayes

Machine Learning Tutorial Python - 15: Naive Bayes Classifier Algorithm Part 2

Naive Bayes dengan Python & Google Colabs | Machine Learning untuk Pemula

5.0 / 5 (0 votes)