Naive Bayes with Python & Google Colab | Machine Learning for Beginners

Summary

TL;DR: This video tutorial explains the Naive Bayes algorithm for machine learning classification using Python. It covers the fundamentals of Naive Bayes, its statistical foundation, and why it is computationally cheaper than algorithms such as KNN. The video works through a hands-on example on the Iris dataset, implemented in Google Colab. Viewers are shown how to split the data, train a Naive Bayes model, and evaluate its accuracy using Python libraries. The tutorial takes a practical approach to learning Naive Bayes, with a focus on classification tasks.

Takeaways

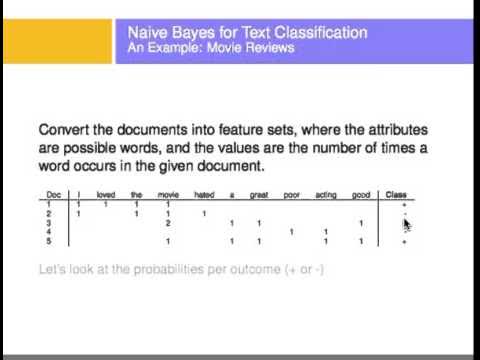

- 😀 Naive Bayes is a statistical classification method that works by estimating the probability of membership for each class based on input data.

- 😀 The algorithm is based on Bayes' Theorem and is relatively simple because it doesn't require complex training processes.

- 😀 Naive Bayes is particularly effective for categorical data but can be extended to numeric data using different techniques.

- 😀 The method is computationally efficient and is typically cheaper to run than algorithms like K-Nearest Neighbors (KNN), which must compute distances to the training samples for every prediction.

- 😀 Google Colab is used to implement Naive Bayes without the need for local installations, providing a user-friendly environment for machine learning tasks.

- 😀 The Iris dataset is often used as a sample for testing machine learning algorithms due to its simplicity and well-labeled structure.

- 😀 The dataset used in this video contains 150 samples with 4 features and 3 different classes.

- 😀 The data is split into training and testing sets, with an 80% training and 20% testing split for the machine learning process.

- 😀 After training the model with the Naive Bayes algorithm, predictions are made using the test data, and the results are compared to the actual values.

- 😀 The accuracy of the model is calculated by comparing the predicted outcomes to the actual results, achieving a 96.6% accuracy rate in this case.

- 😀 Although this example uses a well-known dataset, these steps can be applied to more complex, real-world datasets for classification tasks.
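The workflow in the takeaways above can be sketched end to end with scikit-learn. This is a minimal sketch, not the video's actual notebook: it assumes the copy of Iris bundled with scikit-learn (`load_iris`) and the Gaussian variant of the classifier (`GaussianNB`), and the `random_state=42` and `stratify=y` settings are arbitrary choices made here for reproducibility. The resulting accuracy depends on how the split falls, so it may differ from the figure quoted above.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Load the 150-sample, 4-feature, 3-class Iris dataset bundled with scikit-learn.
X, y = load_iris(return_X_y=True)

# Hold out 20% of the samples for testing (80/20 split).
# random_state and stratify are assumptions; the video's settings are unknown.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# "Training" estimates class priors and per-class feature statistics.
model = GaussianNB()
model.fit(X_train, y_train)

# Predict on the held-out samples and compare against the true labels.
y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Test samples: {len(y_test)}, accuracy: {accuracy:.3f}")
```

In Google Colab, scikit-learn is normally preinstalled, so a cell like this should run without a local setup.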

Q & A

What is the main topic discussed in the video?

- The video discusses the implementation of the Naive Bayes algorithm for classification using Python, specifically applying it to the Iris dataset.

What is Naive Bayes and how is it used in machine learning?

- Naive Bayes is a probabilistic machine learning algorithm based on Bayes' Theorem. It is used for classification tasks by calculating the probability of a data point belonging to a particular class based on the available features.
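For reference, the calculation described here can be written out in standard textbook notation. The symbols are introduced for illustration and are not taken from the video: $C_k$ is a class and $x_1, \dots, x_n$ are the feature values of one data point.

```latex
% Bayes' Theorem applied to classification
P(C_k \mid x_1, \dots, x_n)
  = \frac{P(C_k)\, P(x_1, \dots, x_n \mid C_k)}{P(x_1, \dots, x_n)}

% The "naive" assumption: features are conditionally independent given the class
P(x_1, \dots, x_n \mid C_k) = \prod_{i=1}^{n} P(x_i \mid C_k)

% The denominator is the same for every class, so the predicted class is
\hat{y} = \arg\max_{k} \; P(C_k) \prod_{i=1}^{n} P(x_i \mid C_k)
```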

Why is Naive Bayes considered a simple algorithm?

- Naive Bayes is considered simple because training amounts to estimating class probabilities and per-feature probabilities from the data, with no iterative optimization. This also makes it computationally efficient.

Which dataset is used in the video for demonstrating Naive Bayes?

- The video uses the Iris dataset, which contains 150 samples in three classes, each described by four attributes: sepal length, sepal width, petal length, and petal width.

What is the training and testing data split ratio used in the tutorial?

- The dataset is split into training and testing sets with an 80-20 ratio, meaning 80% of the data is used for training the model, and 20% is used for testing.

What Python libraries are mentioned as necessary for implementing Naive Bayes?

- The Python libraries mentioned are pandas, scikit-learn, and possibly matplotlib, which are used for handling data, applying machine learning algorithms, and visualizing results.
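As an illustration of the data-handling role pandas plays, the sketch below loads Iris into a DataFrame and inspects it. It assumes the copy of the dataset bundled with scikit-learn; the video may load the data differently (for example from a CSV file), and the `species` column name is introduced here for readability.

```python
from sklearn.datasets import load_iris

# as_frame=True returns the data as pandas objects instead of NumPy arrays.
iris = load_iris(as_frame=True)
df = iris.frame  # four feature columns plus an integer "target" column

# Add a readable class name next to the integer label (hypothetical column name).
df["species"] = df["target"].map(dict(enumerate(iris.target_names)))

print(df.shape)                      # rows and columns
print(df["species"].value_counts())  # samples per class
print(df.describe())                 # summary statistics per numeric column
```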

What is the accuracy achieved by the Naive Bayes classifier in this tutorial?

- The Naive Bayes classifier achieved an accuracy of 96.6% when tested on the Iris dataset.

How is the accuracy of the Naive Bayes model calculated?

- The accuracy is calculated by comparing the predicted labels of the test set with the actual labels and determining the proportion of correct predictions.
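The proportion described above is simple enough to compute by hand. The following sketch uses made-up labels purely for illustration; the `accuracy` helper is hypothetical and is not taken from the video, where a library function would more likely be used.

```python
def accuracy(y_true, y_pred):
    """Return the fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Made-up labels for illustration: the third prediction is wrong.
y_true = ["setosa", "versicolor", "virginica", "virginica", "setosa"]
y_pred = ["setosa", "versicolor", "versicolor", "virginica", "setosa"]

score = accuracy(y_true, y_pred)
print(score)  # 4 of 5 correct -> 0.8
```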

What is the primary benefit of using Naive Bayes over other algorithms like KNN?

- The primary benefit of Naive Bayes over algorithms like KNN is its computational efficiency. Naive Bayes predicts from a small set of probabilities estimated once during training, while KNN must compute distances to the training samples for every new prediction.

Can Naive Bayes be used for datasets with numerical values, or is it only for categorical data?

- Although Naive Bayes is often applied to categorical data, it can also be adapted for datasets with numerical features by using methods like Gaussian Naive Bayes, which assumes each feature follows a normal distribution within each class.
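To make the Gaussian variant concrete, here is a minimal from-scratch sketch in plain Python. It is an illustration under stated assumptions, not the video's code: the function names, the tiny two-feature dataset, and the class labels are all hypothetical. For each class it estimates a prior plus a mean and variance per feature, then scores a new point with the log of the normal density.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate a prior and per-feature mean/variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)

    params = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # A small constant keeps every variance strictly positive.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(columns, means)
        ]
        params[label] = (n / len(X), means, variances)
    return params


def predict_gaussian_nb(params, x):
    """Return the class with the highest log prior + log likelihood."""
    best_label, best_score = None, -math.inf
    for label, (prior, means, variances) in params.items():
        score = math.log(prior)
        for value, mean, var in zip(x, means, variances):
            # Log of the normal density N(value; mean, var).
            score += -0.5 * math.log(2 * math.pi * var)
            score -= (value - mean) ** 2 / (2 * var)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up two-feature measurements for two hypothetical classes.
X = [[1.4, 0.2], [1.5, 0.3], [1.3, 0.2], [4.7, 1.4], [4.5, 1.5], [4.9, 1.6]]
y = ["class_a", "class_a", "class_a", "class_b", "class_b", "class_b"]

params = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(params, [1.6, 0.25]))  # class_a
print(predict_gaussian_nb(params, [4.4, 1.3]))   # class_b
```

Working in log space avoids multiplying many small probabilities together, which is the usual reason implementations sum log-likelihoods instead.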
