The One and Only Data Science Project You Need

Summary

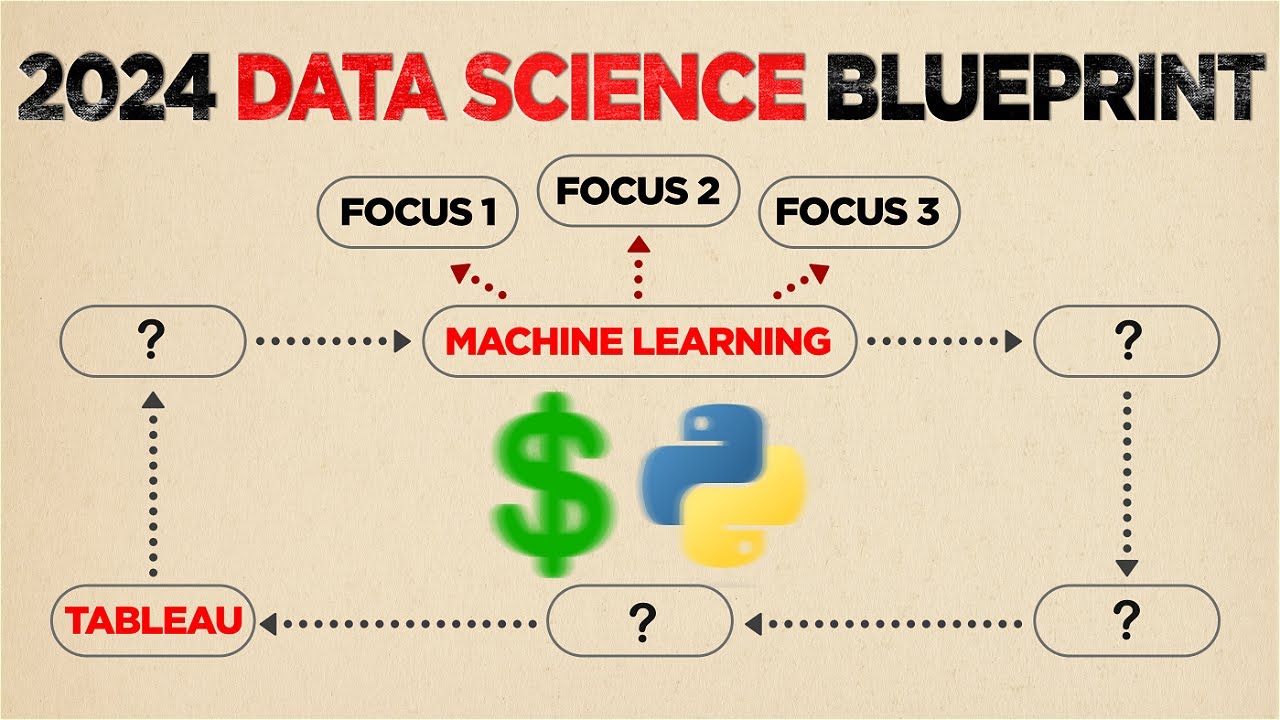

TLDR: In this video, Nate shares advice for aspiring data scientists seeking to create an impactful project. He emphasizes avoiding overused datasets like Titanic and Iris, and steering clear of Kaggle unless aiming for top rankings. Nate outlines key components for a successful project: utilizing real-time data, mastering modern tech like APIs and cloud databases, building robust models, and demonstrating project impact. He stresses the importance of understanding model decisions and underlying math, and suggests sharing insights through code, visuals, or even deploying applications for real-world validation. Nate's ultimate secret? One comprehensive project that covers all skills can serve as a foundation for future endeavors, impressing interviewers and solidifying a career in data science.

Takeaways

- 🚀 The ultimate data science project should help you gain full-stack data science experience and impress interviewers.

- 🙅 Avoid overused datasets like Titanic or Iris and common platforms like Kaggle unless you can rank in the top 10.

- 💡 Focus on real-world skills in coding, analytics, and modern technologies to become a fully independent data scientist.

- 📈 Work with real, updated data, preferably real-time streaming data, to demonstrate relevance and timeliness.

- 🔌 Learn to use APIs to collect real-time data, showcasing your ability to handle live data feeds.

- 💾 Utilize cloud databases to store and manage data efficiently, reflecting common industry practices.

- 🤖 Building models is crucial, but understanding the decision-making process behind them is even more important.

- 📊 Be prepared to explain your model choices, data cleaning processes, and validation tests during interviews.

- 🌟 A great data science project should make an impact and have validation from others, showing its value and interest to the community.

- 🛠️ Share your work through code repositories, visual insights, or by building an application to demonstrate practical application.

- 🔄 Once you've built an end-to-end data science infrastructure, you can reuse and adapt it for various projects with minor revisions.

Q & A

What is the primary advice given by Nate for someone looking to start a data science project?

- Nate suggests building a project that provides full-stack data science experience and impresses interviewers, focusing on real-world skills and modern technologies.

What are the two things Nate advises to avoid when choosing a data science project?

- Nate advises avoiding projects that analyze the Titanic or Iris datasets, as they are overdone, and moving away from Kaggle unless one can rank in the top 10.

What does Nate mean by 'full-stack data science experience'?

- Full-stack data science experience means having skills in both coding and analytics, plus proficiency with modern technologies and tools, which together make one a fully independent data scientist.

What are the four components of a good data science project according to the script?

- The four components are working with real data, using modern technologies like APIs and cloud databases, building models, and making an impact by getting validation.

Why is working with real-time streaming data important for a data science project?

- Working with real-time streaming data demonstrates the ability to handle relevant, timely data rather than outdated datasets.

What are some examples of popular APIs that can be used for data analysis?

- Some popular APIs for data analysis include Twitter, Google Analytics, YouTube, Netflix, and Amazon.

What skills are essential when working with APIs in a data science project?

- Essential skills include setting up and configuring APIs, using libraries for making API calls, and working with data structures like JSON and dictionaries.
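As a minimal sketch of the JSON-and-dictionaries skill mentioned above, the following parses a response body into Python dictionaries and flattens it into rows. The payload shape and the field names (`items`, `id`, `title`, `stats`, `views`, `likes`) are hypothetical and do not correspond to any specific API; in a real project the text would come from an HTTP call to whichever service is chosen.

```python
import json

# Hypothetical response body; a real API defines its own schema.
SAMPLE_RESPONSE = """
{
  "items": [
    {"id": "a1", "title": "First video", "stats": {"views": 120, "likes": 7}},
    {"id": "b2", "title": "Second video", "stats": {"views": 45}}
  ]
}
"""


def flatten_items(raw_text):
    """Parse a JSON string and return one flat dict per item."""
    payload = json.loads(raw_text)
    rows = []
    for item in payload.get("items", []):
        stats = item.get("stats", {})
        rows.append({
            "id": item["id"],
            "title": item.get("title", ""),
            "views": stats.get("views", 0),
            # Live feeds often omit keys, so default instead of failing.
            "likes": stats.get("likes", 0),
        })
    return rows


rows = flatten_items(SAMPLE_RESPONSE)
```

Defaulting missing keys with `dict.get` is one possible choice; a project that needs to distinguish "zero" from "not reported" might prefer `None` instead.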

Why is it beneficial to store data collected from APIs in a cloud database?

- Storing data in a cloud database is beneficial because it allows for efficient management of regularly updated data, avoiding the need to re-pull and re-clean entire datasets.
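The idea of adding only new or changed rows, rather than re-pulling everything, can be sketched as follows. This is an illustration under assumptions: it uses Python's built-in `sqlite3` with an in-memory database as a local stand-in for a cloud database, and the table name `records` and its columns are hypothetical.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open the database and create the table if it does not exist yet."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS records ("
        "id TEXT PRIMARY KEY, fetched_at TEXT NOT NULL, views INTEGER NOT NULL)"
    )
    return conn


def save_batch(conn, batch):
    """Insert new rows and overwrite rows whose id already exists."""
    conn.executemany(
        "INSERT OR REPLACE INTO records (id, fetched_at, views) VALUES (?, ?, ?)",
        [(r["id"], r["fetched_at"], r["views"]) for r in batch],
    )
    conn.commit()


def latest_fetch(conn):
    """Return the most recent fetch timestamp, or None if the table is empty."""
    return conn.execute("SELECT MAX(fetched_at) FROM records").fetchone()[0]


conn = open_store()
save_batch(conn, [
    {"id": "a1", "fetched_at": "2024-01-01T00:00:00", "views": 120},
    {"id": "b2", "fetched_at": "2024-01-01T00:00:00", "views": 45},
])
# A later pull only needs data newer than latest_fetch(conn).
save_batch(conn, [
    {"id": "b2", "fetched_at": "2024-01-02T00:00:00", "views": 60},
    {"id": "c3", "fetched_at": "2024-01-02T00:00:00", "views": 5},
])
row_count = conn.execute("SELECT COUNT(*) FROM records").fetchone()[0]
```

Storing timestamps as ISO 8601 text keeps them sortable with `MAX`, so the next pull can ask the API only for data after that point. A hosted database would use its own driver and connection string in place of `sqlite3.connect`.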

What aspects of model building are most important to an interviewer according to the script?

- Interviewers are more interested in the thought process and decision-making behind model building than in the model's performance metrics alone.
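One way to make the reasoning behind a model explicit is to compare it against a trivial baseline on held-out data. The sketch below is not from the video; it is an assumed example with made-up numbers that fits a one-feature least-squares line in plain Python and checks whether it beats "always predict the training mean".

```python
# Made-up data for illustration: y is roughly 2*x + 1 with small noise.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.9, 15.2, 16.9, 19.1]

# Hold out the last three points; the model never sees them while fitting.
train_x, test_x = xs[:7], xs[7:]
train_y, test_y = ys[:7], ys[7:]


def fit_line(x, y):
    """Ordinary least squares for a single feature; returns (slope, intercept)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    variance = sum((a - mean_x) ** 2 for a in x)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


slope, intercept = fit_line(train_x, train_y)
model_pred = [slope * x + intercept for x in test_x]

# Baseline: ignore x and always predict the training mean.
baseline_value = sum(train_y) / len(train_y)
baseline_pred = [baseline_value] * len(test_y)

model_mae = mean_absolute_error(test_y, model_pred)
baseline_mae = mean_absolute_error(test_y, baseline_pred)
```

Being able to say why the split was made this way, why mean absolute error was chosen, and how far the model improves on the baseline is the kind of explanation the answer above refers to.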

How can a data science project make an impact and get validation?

- A project can make an impact by sharing insights through visuals, graphs, blog articles, or by building an application that serves insights to users, demonstrating the value of the work.

What is the secret to mastering various data science skills as mentioned in the script?

- The secret is to build a single comprehensive data science project that covers all necessary components, which can then be iteratively improved and adapted for different analyses.
