Scraping Data from a Real Website | Web Scraping in Python

Summary

TLDR: In this tutorial, the instructor walks viewers through scraping data from a real website, specifically Wikipedia's list of the largest companies in the United States by revenue. The data is loaded into a pandas DataFrame and exported to a CSV file. The lesson covers the BeautifulSoup and requests libraries, handling multiple tables on a webpage, extracting headers and row data, and troubleshooting common issues such as mismatched columns. The instructor emphasizes cleaning and formatting data for usability and concludes by exporting the scraped data.

Takeaways

- 😀 The lesson focuses on scraping data from a real website and handling it with pandas DataFrames.

- 🔍 The task involves navigating to Wikipedia to scrape a list of the largest U.S. companies by revenue.

- 🛠 The process begins with importing libraries like BeautifulSoup and requests to fetch and parse the webpage.

- 📝 The script demonstrates how to inspect the webpage to identify the correct table and its structure for data extraction.

- 🔑 The importance of properly specifying the table and its class attributes is highlighted to target the right data.

- 📑 The script includes steps to extract table headers using the 'th' tags and clean them up for DataFrame usage.

- 🔄 A loop is used to iterate through 'tr' and 'td' tags to gather row data from the table.

- 📊 Data is appended to a pandas DataFrame incrementally as rows are extracted from the table.

- 📈 The script addresses common issues such as mismatched columns and empty data entries during the scraping process.

- 💾 The final step is exporting the collected data into a CSV file, with attention to formatting and removing unnecessary indices.

- 📚 The lesson concludes with a reminder of the importance of learning pandas for data manipulation and the potential for encountering and overcoming errors in web scraping projects.

Q & A

What is the main objective of the lesson in the provided script?

- The main objective is to demonstrate how to scrape data from a real website, specifically Wikipedia's list of the largest companies in the United States by revenue, load it into a pandas DataFrame, and optionally export it to a CSV file.

Which programming libraries are mentioned as necessary for the web scraping project?

- The lesson uses Beautiful Soup for parsing HTML and Requests for making HTTP requests. pandas is used later to hold and export the data.
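As a rough sketch of the fetch-and-parse step, the two libraries can be combined as follows. The URL, the `User-Agent` string, and the helper names `fetch_html` and `make_soup` are assumptions for illustration; the summary does not spell them out.

```python
import requests
from bs4 import BeautifulSoup

# Assumed URL: the summary names the page but does not give its address.
URL = "https://en.wikipedia.org/wiki/List_of_largest_companies_in_the_United_States_by_revenue"


def fetch_html(url: str) -> str:
    """Download a page and return its HTML, raising on HTTP errors."""
    # Hypothetical User-Agent; some sites reject requests that lack one.
    headers = {"User-Agent": "scraping-tutorial-example/0.1"}
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()
    return response.text


def make_soup(html: str) -> BeautifulSoup:
    """Parse HTML using the parser bundled with Python."""
    return BeautifulSoup(html, "html.parser")
```

With these helpers, `soup = make_soup(fetch_html(URL))` would give a parsed page to search.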

What is the initial approach to finding the correct table on the Wikipedia page?

- The initial approach uses Beautiful Soup's 'find' method to locate the table holding the desired data, specifying the class 'wikitable sortable' to target it.
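A minimal sketch of that lookup, run against a small inline HTML sample rather than the live page (the sample table is made up for illustration):

```python
from bs4 import BeautifulSoup

# Stand-in HTML; the real page is much larger.
html = """
<table class="wikitable sortable">
  <tr><th>Rank</th><th>Name</th></tr>
  <tr><td>1</td><td>Walmart</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

# class_ (with the underscore) avoids clashing with Python's `class` keyword.
table = soup.find("table", class_="wikitable sortable")
print(table.find("th").text)
```

Note that a multi-word string passed to `class_` is matched against the exact attribute value, so the class shown in a browser's inspector may need to be compared with what the raw HTML actually contains.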

Why was there a need to switch from 'find' to 'find_all' in the script?

- Two tables on the page share the same class, and 'find' returns only the first match. 'find_all' returns every matching element, so the right table can be picked by index.
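The difference can be sketched with two sample tables that share a class. Which index holds the wanted table on the real page is an assumption here; it should be checked by inspecting the results.

```python
from bs4 import BeautifulSoup

# Two made-up tables with the same class attribute.
html = """
<table class="wikitable sortable"><tr><th>Rank</th></tr></table>
<table class="wikitable sortable"><tr><th>Year</th></tr></table>
"""

soup = BeautifulSoup(html, "html.parser")

# find_all returns a list-like ResultSet of every match.
tables = soup.find_all("table", class_="wikitable sortable")

# Assumed index: pick whichever position holds the table you need.
table = tables[0]
print(len(tables), table.find("th").text)
```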

What mistake did the instructor make when initially trying to extract the table data?

- The instructor initially called 'soup.find_all' instead of 'table.find_all', which pulled data from every table on the page rather than only the table of interest.
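The effect of searching from `soup` versus from `table` can be seen in a small sketch (sample HTML is illustrative only):

```python
from bs4 import BeautifulSoup

html = """
<table id="first"><tr><th>Rank</th><th>Name</th></tr></table>
<table id="second"><tr><th>Year</th></tr></table>
"""

soup = BeautifulSoup(html, "html.parser")
table = soup.find("table")  # the first table only

# Searching the whole document picks up headers from every table.
all_headers = soup.find_all("th")

# Searching from the table element stays inside that table.
table_headers = table.find_all("th")

print(len(all_headers), len(table_headers))
```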

How does the instructor handle the issue of extracting headers from the table?

- The instructor calls 'find_all' on the 'th' tags within the table, then uses a list comprehension to extract the text from each header.
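A sketch of the header extraction on sample markup. The variable names `header_tags` and `titles` are hypothetical, and the trailing newlines in the sample imitate the kind of whitespace that table markup often carries.

```python
from bs4 import BeautifulSoup

html = """<table><tr>
<th>Rank
</th>
<th>Name
</th>
</tr></table>"""

table = BeautifulSoup(html, "html.parser").find("table")

# Collect every header cell in this table.
header_tags = table.find_all("th")

# Pull the raw text out of each tag; whitespace is kept as-is.
titles = [th.text for th in header_tags]
print(titles)
```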

What is the purpose of using the 'strip' method in the script?

- The 'strip' method removes leading and trailing whitespace, including newline characters, from the extracted text so the data is clean and usable.
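For instance, applied to a few made-up header strings, `strip` trims only the ends and leaves interior spaces alone:

```python
# Illustrative raw values as they might come out of a scraped table.
raw_titles = ["Rank\n", " Name \n", "Revenue (USD millions)\n"]

# strip() with no arguments removes leading and trailing whitespace.
clean_titles = [title.strip() for title in raw_titles]
print(clean_titles)
```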

How does the instructor ensure that the data from each row is appended to the pandas DataFrame?

- The instructor uses the DataFrame's 'loc' indexer, passing the current length of the DataFrame as the index label for the new row.
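A minimal sketch of this append pattern, using made-up rows in place of scraped data:

```python
import pandas as pd

# Start with an empty DataFrame that has only column names.
df = pd.DataFrame(columns=["Rank", "Name"])

# Stand-in rows; in the lesson these come from the table's cells.
rows = [["1", "Walmart"], ["2", "Amazon"]]

for row in rows:
    # len(df) is the next unused integer label, so this adds a new row.
    df.loc[len(df)] = row

print(df)
```

Row-by-row assignment is convenient for a small table like this one. For larger scrapes, collecting the rows in a list and building the DataFrame once is usually faster.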

What issue did the instructor encounter when trying to append data to the DataFrame?

- Appending failed with a mismatched-columns error because one extracted row was empty. The instructor resolved this by adjusting the 'column_data' extraction to skip that empty row.
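One plausible way this situation arises, sketched on sample HTML: a header row contains only `th` cells, so looking for `td` cells in it yields an empty list. Slicing the rows to skip it is an assumption about the fix, not a transcript of the instructor's code.

```python
import pandas as pd
from bs4 import BeautifulSoup

html = """
<table>
  <tr><th>Rank</th><th>Name</th></tr>
  <tr><td>1</td><td>Walmart</td></tr>
  <tr><td>2</td><td>Amazon</td></tr>
</table>
"""

table = BeautifulSoup(html, "html.parser").find("table")
df = pd.DataFrame(columns=[th.text.strip() for th in table.find_all("th")])

rows = table.find_all("tr")

# rows[0] is the header row: it has no <td> cells, so it would produce
# an empty list that cannot fill a two-column row. Skip it.
for row in rows[1:]:
    cells = [td.text.strip() for td in row.find_all("td")]
    df.loc[len(df)] = cells

print(df)
```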

How does the instructor export the final DataFrame to a CSV file?

- The instructor calls the DataFrame's 'to_csv' method with the output file path and name, setting 'index=False' so the DataFrame index is not written to the CSV file.
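A short sketch of the export step. The file name `companies.csv` and the temporary-directory location are hypothetical; any writable path would do.

```python
import os
import tempfile

import pandas as pd

# Stand-in data in place of the scraped table.
df = pd.DataFrame({"Rank": ["1", "2"], "Name": ["Walmart", "Amazon"]})

# Hypothetical output path inside the system's temp directory.
csv_path = os.path.join(tempfile.gettempdir(), "companies.csv")

# index=False leaves out the 0, 1, 2, ... index column.
df.to_csv(csv_path, index=False)

with open(csv_path) as f:
    print(f.read())
```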

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

This AI Agent can Scrape ANY WEBSITE!!!

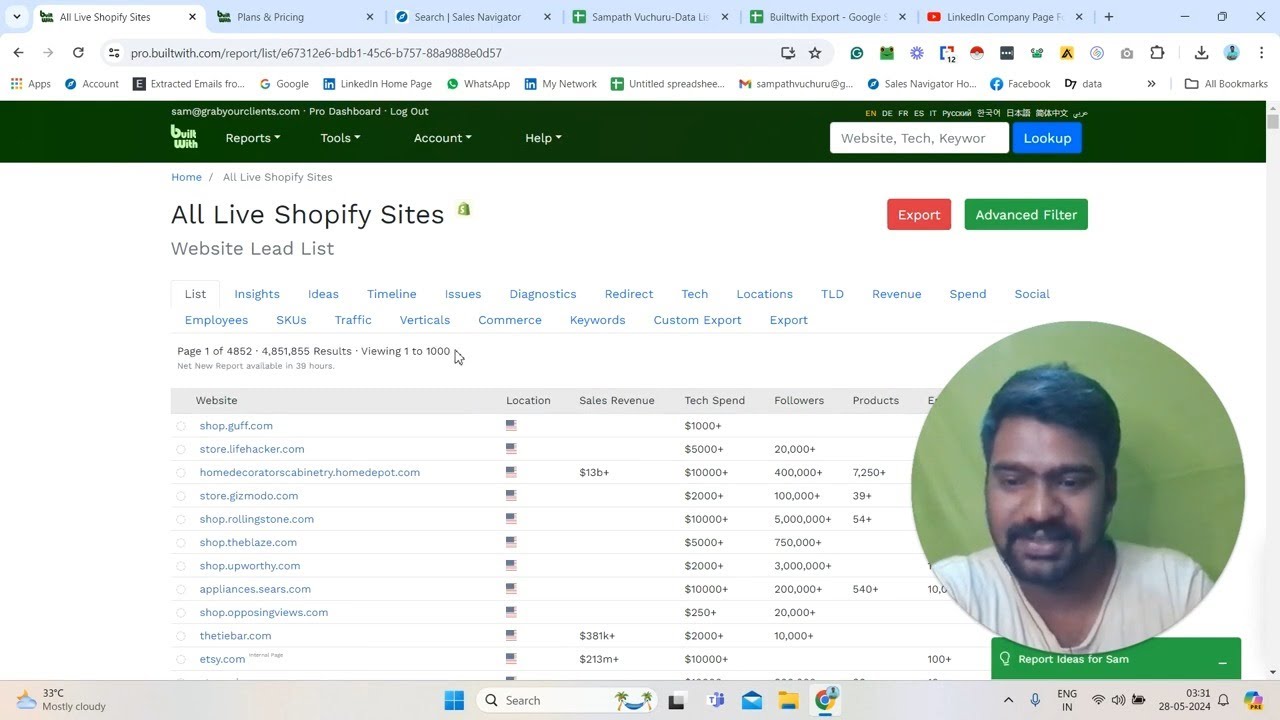

How to Use BuiltWith Pro for E-commerce Data | BuiltWith Pro Tutorial (2024)

Scraping Data Ulasan Produk Tokopedia Menggunakan SELENIUM & BEAUTIFULSOUP

Webscraping with AutoHotkey-101.5 Getting the text from page

BeautifulSoup + Requests | Web Scraping in Python

Web Scraping with Linux Terminal feat. pup

5.0 / 5 (0 votes)