[1hr Talk] Intro to Large Language Models

Summary

TLDR此视频概述了大型语言模型,特别是介绍了Llama 270b模型的结构和用途。演讲者讨论了模型的训练过程,包括预训练和微调,以及这些模型如何通过互联网数据和GPU集群进行训练。他还探讨了大型语言模型在未来的发展方向,包括多模态能力、系统思维、自我改进和个性化。此外,演讲者也提到了与大型语言模型相关的安全挑战,如越狱攻击、提示注入攻击和数据投毒。视频旨在为观众提供大型语言模型的全面理解,同时指出了该技术领域的潜在风险和发展机遇。

Takeaways

- 🧠 大型语言模型(LLM)如Llama 270B是由数以亿计的参数构成的神经网络,能够理解和生成人类语言。

- 💾 这些模型基于大量的互联网文本进行训练,通过压缩这些文本数据来学习语言和知识。

- 🔍 使用大型语言模型不需要互联网连接,只需模型的参数文件和运行代码即可在本地设备上使用。

- 🚀 Meta AI发布的Llama系列模型因其开放权重而受到欢迎,这使得研究人员和开发人员可以轻松地使用这些模型。

- 🤖 模型训练分为预训练和微调两个阶段,预训练阶段利用大量文本数据构建知识基础,微调阶段则专注于特定任务的性能优化。

- 🛠️ 大型语言模型的一个关键能力是使用各种工具(如浏览器、计算器或代码库)来解决复杂问题,这种能力不断增强。

- 🌐 模型的多模态能力,包括处理和生成图像、音频等多种类型的数据,正在迅速发展。

- 🔐 随着模型能力的增强,安全性和防御恶意攻击的能力(如防止模型被用于生成有害内容)变得尤为重要。

- 📈 模型性能遵循可预测的扩展定律,即通过增加模型参数和训练数据量可以系统地提高性能。

- 🤹♂️ 未来的语言模型可能会具有更高级的推理能力和自我改进的能力,使其能够在更复杂的任务中表现得更像人类。

Q & A

大型语言模型是什么?

-大型语言模型是一种利用大量参数(例如,Llama 270b模型有700亿个参数)来预测文本序列中下一个单词的人工智能技术。它们能够理解和生成人类语言,从而执行各种文本相关的任务。

为什么Llama 270b模型受到欢迎?

-Llama 270b模型因为是一个开源模型,即其权重、架构和相关论文都公开可用,这使得任何人都能够轻松地使用和研究这个模型,与其他一些模型不同,后者可能不公开架构或仅通过网络接口提供。

运行大型语言模型需要哪些文件?

-运行大型语言模型主要需要两个文件:一个参数文件,存储神经网络的权重(例如,140GB的参数文件存储Llama 270b模型的参数);另一个是运行文件,包含实现神经网络架构的代码,可以用C语言或Python等编程语言编写。

获取大型语言模型的参数需要哪些步骤?

-获取大型语言模型的参数(即模型训练过程)涉及复杂的计算过程,包括从互联网上获取大量文本数据,使用特殊的计算机(如GPU集群)处理这些数据,通过长时间(例如,12天)的训练压缩这些文本信息到模型参数中。

大型语言模型是如何进行下一个词预测的?

-大型语言模型通过分析输入的词序列,利用其内部的参数(由之前的训练过程获得的知识)来预测序列中下一个词可能是什么。这个过程涉及到复杂的数学运算和神经网络内部的相互作用。

为什么大型语言模型能理解世界上的知识?

-大型语言模型通过在大量文本数据上的训练,能够"学习"到文本中隐含的信息和知识,从而能够对特定的问题给出有知识基础的回答。它们通过预测文本序列中的下一个词来"理解"和"记忆"这些信息。

什么是模型微调?

-模型微调是在大型语言模型预训练的基础上,通过在特定任务相关的数据集上进一步训练模型来优化其性能的过程。这使得模型能够更好地适应特定类型的任务,如回答问题或生成文本。

如何使用大型语言模型生成诗歌或代码?

-通过向大型语言模型提供特定的提示或问题,模型可以利用其学习到的语言规则和知识库生成相应的文本,如诗歌或代码。这是通过逐词预测文本序列中下一个最可能的词来实现的。

大型语言模型如何改进和自我提升?

-大型语言模型可以通过进一步的数据训练、算法优化和使用高级技术(如基于人类反馈的强化学习)来改进和提升性能。这些方法可以帮助模型更好地理解和生成人类语言。

大型语言模型面临哪些安全挑战?

-大型语言模型面临的安全挑战包括但不限于:jailbreak攻击、提示注入攻击、数据投毒和后门攻击等。这些攻击可能导致模型行为异常或被恶意利用,因此需要持续的研究和防御措施来保护模型的安全性。

Outlines

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenMindmap

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenKeywords

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenHighlights

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenTranscripts

Dieser Bereich ist nur für Premium-Benutzer verfügbar. Bitte führen Sie ein Upgrade durch, um auf diesen Abschnitt zuzugreifen.

Upgrade durchführenWeitere ähnliche Videos ansehen

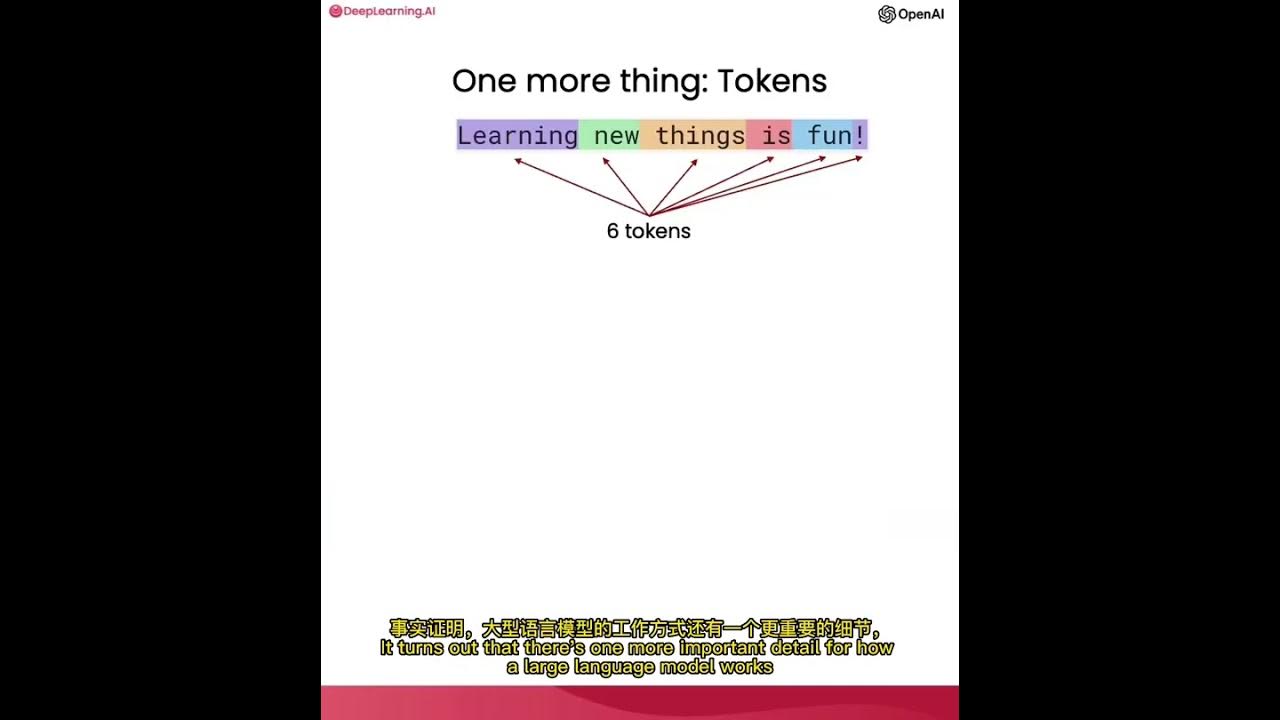

使用ChatGPT API构建系统1——大语言模型、API格式和Token

[ML News] Jamba, CMD-R+, and other new models (yes, I know this is like a week behind 🙃)

A little guide to building Large Language Models in 2024

How Did Dario & Ilya Know LLMs Could Lead to AGI?

Trying to make LLMs less stubborn in RAG (DSPy optimizer tested with knowledge graphs)

一键部署Google开源大模型Gemma,性能远超Mistral、LLama2 | 本地大模型部署,ollama助您轻松完成!

【生成式AI】ChatGPT 可以自我反省!

5.0 / 5 (0 votes)