Claude Code just got WAY more expensive (called it)

Summary

TLDR: This video examines the high cost of running AI models, explaining how expensive infrastructure, such as GPUs and energy, makes cheap AI services unsustainable. The speaker uses Cursor and Anthropic as examples, arguing that pricing strategies, including 'unlimited' plans, often serve as marketing tools to gain market share. The discussion emphasizes that while low-cost options are appealing, they can't realistically cover the cost of providing AI services. Ultimately, the video warns against 'too good to be true' AI deals and argues for sustainable pricing models.

Takeaways

- 😀 AI models require significant investment in expensive GPUs and energy to run, making their true cost high for providers.

- 😀 Offering AI services cheaply usually means subsidizing costs, which is unsustainable for businesses in the long term.

- 😀 If a pricing model seems too good to be true, it is likely subsidized or misleading, and may not reflect actual API costs.

- 😀 Pricing miscalculations by AI companies, like Anthropic with Claude, can lead to backlash and PR issues when users face unexpected limits.

- 😀 Some AI services, such as Cursor, have had to adjust pricing because heavy users overused their $20/month tier, highlighting the need for sustainable pricing.

- 😀 High-volume users may inadvertently cause AI providers to reassess their business models as they exceed the limits of 'unlimited' plans.

- 😀 AI providers such as Anthropic initially offer discounted pricing to attract users and build market presence, but absorb significant losses in the process.

- 😀 No AI provider can offer truly 'unlimited' access due to the finite nature of computational resources like electricity and GPUs.

- 😀 Open Code and other alternatives, such as Qwen 3 and Cline, are emerging as more affordable options for users looking for cheaper AI services.

- 😀 Large-scale code generation by AI models can quickly become very expensive, as the example of generating 25k lines of code for a Sentry clone demonstrates.

- 😀 Businesses must balance user engagement with sustainability, as providers of AI services face the challenge of managing user expectations and costs effectively.

Q & A

What are the main costs involved in running AI models?

-The main costs involved in running AI models are the expensive GPUs required for processing, as well as the significant energy consumption. These resources are necessary to handle complex tasks and large-scale model inference.
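To see how GPU and energy costs turn into a per-token cost, the arithmetic can be sketched as follows. This is a minimal sketch, assuming a single GPU whose purchase price is amortized over a fixed number of hours plus a flat electricity rate. Every input (`gpu_price_usd`, `amortization_hours`, `power_kw`, `electricity_usd_per_kwh`, `tokens_per_second`) is a hypothetical placeholder, not a figure from the video.

```python
from dataclasses import dataclass


@dataclass
class ServingCost:
    """Hypothetical per-GPU serving cost model; all inputs are illustrative assumptions."""

    gpu_price_usd: float            # purchase price of one GPU
    amortization_hours: float       # hours over which the purchase price is written off
    power_kw: float                 # average power draw, including cooling overhead
    electricity_usd_per_kwh: float  # flat electricity rate
    tokens_per_second: float        # sustained generation throughput of this GPU

    def hourly_cost(self) -> float:
        # Hardware share for one hour plus the energy consumed in that hour.
        hardware = self.gpu_price_usd / self.amortization_hours
        energy = self.power_kw * self.electricity_usd_per_kwh
        return hardware + energy

    def cost_per_million_tokens(self) -> float:
        # Spread the hourly cost over the tokens produced in that hour.
        tokens_per_hour = self.tokens_per_second * 3600
        return self.hourly_cost() / tokens_per_hour * 1_000_000


# Invented numbers, chosen only to keep the arithmetic easy to follow.
example = ServingCost(
    gpu_price_usd=30_000,
    amortization_hours=20_000,
    power_kw=2.0,
    electricity_usd_per_kwh=0.15,
    tokens_per_second=500,
)

if __name__ == "__main__":
    print(f"hourly cost: ${example.hourly_cost():.2f}")
    print(f"cost per 1M tokens: ${example.cost_per_million_tokens():.2f}")
```

Because the hourly cost is fixed, any idle time lowers effective throughput and raises the per-token figure, so treat the output as an illustration of the cost structure rather than an estimate of any provider's real costs.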

Why do AI companies offer services at low prices despite the high costs?

-AI companies often offer services at low prices to attract customers and generate buzz. They hope to build a user base and encourage users to create content that promotes the product. However, the resulting financial model is unsustainable.

How did Cursor face challenges with its pricing model?

-Cursor initially offered a low-cost $20/month plan, but as users began using the service for more intensive tasks, the business model became unsustainable. Cursor had to adjust its pricing to avoid collapse, which shows how hard it is to balance pricing against usage.
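The failure mode described above comes down to simple arithmetic: a flat-rate plan only works while the average subscriber costs less than the subscription price. A minimal sketch, assuming five subscribers with invented monthly serving costs; only the $20/month price comes from the source, and the function names are hypothetical.

```python
PLAN_PRICE_USD = 20.0  # the $20/month tier mentioned in the source

# Hypothetical monthly serving cost (USD) for each of five subscribers;
# the last one is a heavy user running intensive workloads.
monthly_costs = [2.0, 5.0, 8.0, 15.0, 120.0]


def plan_margin(price: float, costs: list[float]) -> float:
    """Total revenue minus total serving cost for a flat-rate plan."""
    return price * len(costs) - sum(costs)


def per_user_margin(price: float, costs: list[float]) -> list[float]:
    """Profit (positive) or subsidy (negative) for each subscriber."""
    return [price - cost for cost in costs]


if __name__ == "__main__":
    print("with heavy user:", plan_margin(PLAN_PRICE_USD, monthly_costs))
    print("without heavy user:", plan_margin(PLAN_PRICE_USD, monthly_costs[:-1]))
    print("per user:", per_user_margin(PLAN_PRICE_USD, monthly_costs))
```

In this toy example, the four light users leave $50 of margin, and the single heavy user turns the plan $50 negative. That is the kind of imbalance that pushes a flat-rate provider to add limits or raise prices.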

Why did Anthropic’s pricing strategy backfire?

-Anthropic’s decision to price their service at $200/month was a miscalculation, as it was too cheap for the level of usage and resources required. They failed to account for the large volume of users and the associated costs, leading to a PR crisis when the service couldn’t maintain that pricing.

What does the term 'unlimited' mean in the context of AI services?

-In the context of AI services, 'unlimited' is typically a marketing term that promises unrestricted access. In reality, no AI service can be unlimited, because the electricity, GPUs, and infrastructure needed to run these models are costly and finite. Eventually, heavy usage reaches a level that providers cannot sustain.
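Behind an 'unlimited' label, providers generally need some kind of usage cap. The sketch below shows one common technique, a rolling-window token cap. The class name, the five-hour window, and the 100k-token budget are hypothetical and are not taken from any specific provider. Timestamps are passed in explicitly to keep the example deterministic; a real service would typically read a monotonic clock such as `time.monotonic()`.

```python
from collections import deque


class RollingTokenCap:
    """Allow at most `max_tokens` within any trailing `window_seconds` span (hypothetical policy)."""

    def __init__(self, max_tokens: int, window_seconds: float) -> None:
        self.max_tokens = max_tokens
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, tokens) for each accepted request
        self._used = 0          # tokens consumed inside the current window

    def _evict_expired(self, now: float) -> None:
        # Drop requests that have aged out of the window and refund their tokens.
        while self._events and now - self._events[0][0] >= self.window_seconds:
            _, tokens = self._events.popleft()
            self._used -= tokens

    def try_consume(self, tokens: int, now: float) -> bool:
        """Record the request and return True if it fits under the cap, else return False."""
        self._evict_expired(now)
        if self._used + tokens > self.max_tokens:
            return False
        self._events.append((now, tokens))
        self._used += tokens
        return True


if __name__ == "__main__":
    five_hours = 5 * 3600
    cap = RollingTokenCap(max_tokens=100_000, window_seconds=five_hours)
    print(cap.try_consume(60_000, now=0))           # True: 60k of 100k used
    print(cap.try_consume(50_000, now=60))          # False: would reach 110k
    print(cap.try_consume(50_000, now=five_hours))  # True: the first request has expired
```

The user never sees a hard "no more access" wall, only a temporary refusal until older usage falls out of the window.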

How can AI companies maintain profitability when offering low-cost services?

-In the short term, they often don't. AI companies subsidize the cost of the service at first, hoping to build a large user base and gain valuable marketing through user-generated content. If not carefully managed, this strategy can lead to long-term financial strain.

What role do tokens play in AI models, and how can they affect pricing?

-Tokens are the units of text (typically word fragments) that AI models read and generate, and API usage is billed per token. The more tokens a model generates, for example when writing large amounts of code, the higher the cost. This can result in unexpectedly high charges if usage is not carefully managed, as seen in examples where models generated tens of thousands of tokens.
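The cost relationship above is linear: each token is billed at a per-token rate, and providers usually price input tokens (the prompt and context sent to the model) and output tokens (what the model generates) separately. The sketch below applies that to the 25k-line Sentry clone mentioned in the takeaways. The prices, the tokens-per-line ratio, and the agent-turn figures are all hypothetical assumptions, not numbers from the video.

```python
def cost_usd(input_tokens: int, output_tokens: int,
             input_price_per_m: float, output_price_per_m: float) -> float:
    """Bill input and output tokens separately, with prices quoted per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical assumptions for illustration only.
LINES_OF_CODE = 25_000              # size of the Sentry clone from the takeaways
TOKENS_PER_LINE = 10                # rough guess; real ratios vary by language and style
AGENT_TURNS = 200                   # hypothetical number of model calls in the session
CONTEXT_TOKENS_PER_TURN = 100_000   # hypothetical context re-sent on each call
INPUT_PRICE_PER_M = 3.0             # hypothetical USD per million input tokens
OUTPUT_PRICE_PER_M = 15.0           # hypothetical USD per million output tokens

output_tokens = LINES_OF_CODE * TOKENS_PER_LINE
input_tokens = AGENT_TURNS * CONTEXT_TOKENS_PER_TURN
estimate = cost_usd(input_tokens, output_tokens, INPUT_PRICE_PER_M, OUTPUT_PRICE_PER_M)

if __name__ == "__main__":
    print(f"output tokens: {output_tokens:,}")
    print(f"input tokens:  {input_tokens:,}")
    print(f"estimated cost: ${estimate:.2f}")
```

Under these assumptions the generated code itself accounts for only $3.75 of the $63.75 total; most of the bill comes from the context assumed to be re-sent on every call, which is why a long session can cost far more than its line count suggests.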

What alternatives are available to expensive AI services like Claude?

-Alternatives to expensive AI services like Claude include platforms like Open Code, which offer cheaper options while still using high-quality models. Models like Qwen 3 also offer cheaper and faster alternatives, showing that the market now has a variety of choices.

Why did Anthropic choose to price Claude at $200/month?

-Anthropic priced Claude at $200/month as part of a strategy to quickly gain market traction and attract extreme users. They hoped that this pricing would allow them to build brand recognition and generate positive content about their service, despite the high costs involved.

What lessons can other AI companies learn from the issues faced by Cursor and Anthropic?

-Other AI companies can learn that pricing needs to be carefully aligned with the resources required to support the service. Offering too-low pricing can lead to unsustainable business models and PR problems. Companies must balance competitive pricing with the actual costs of running their models to avoid financial strain.

1-Bit LLM: The Most Efficient LLM Possible?

focus on these 2 NEW cloud engineering skills

Exo: Run your own AI cluster at home by Mohamed Baioumy

What runs ChatGPT? Inside Microsoft's AI supercomputer | Featuring Mark Russinovich

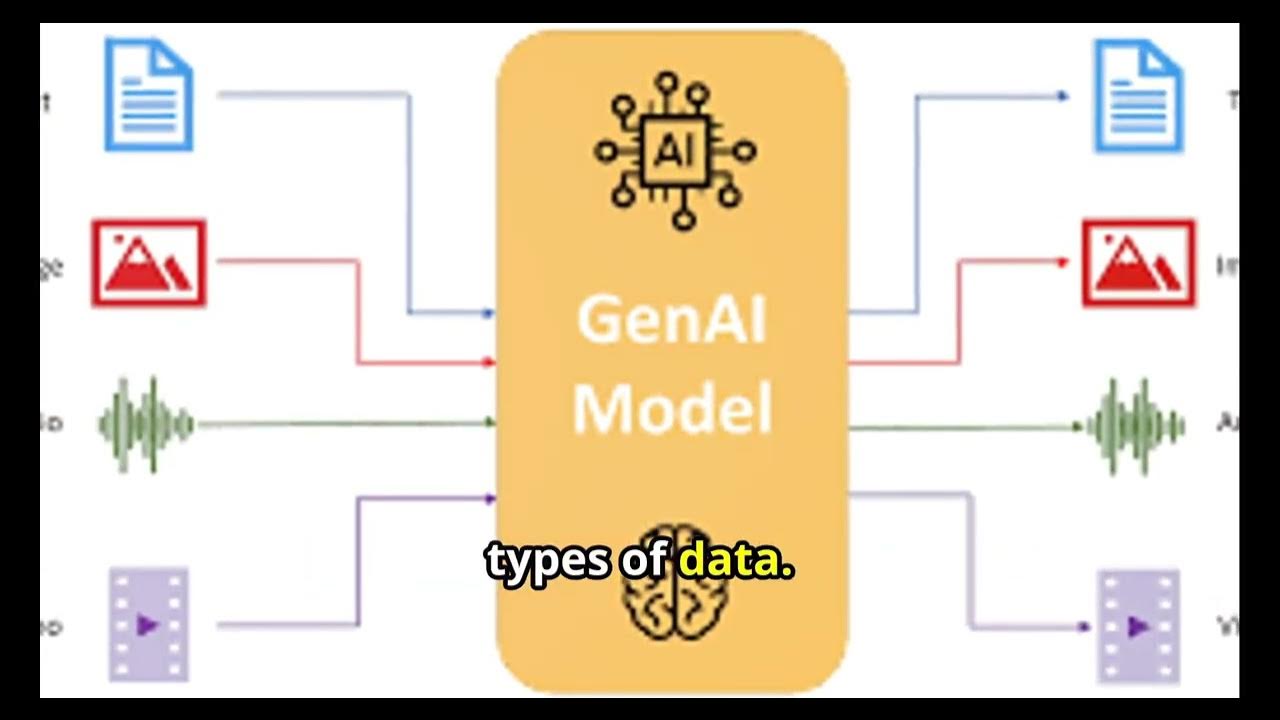

Generative AI for Absolute Beginners : Types of Generative AI

Which nVidia GPU is BEST for Local Generative AI and LLMs in 2024?

5.0 / 5 (0 votes)