Exo: Run your own AI cluster at home by Mohamed Baioumy

Summary

TLDR: EXO is an open-source library that enables users to run AI models across everyday devices like phones, laptops, and even Apple Watches. It allows for the creation of AI clusters without the need for dedicated high-end GPUs. EXO pools the computing power of multiple devices so that together they can run large models, such as Llama 70B, efficiently. The system is designed to democratize AI access by using distributed devices for inference, with a focus on simplicity, privacy, and cost-effectiveness. EXO offers a distinctive solution to the challenge of running large AI models without expensive infrastructure, while remaining easy to set up and use.

Takeaways

- 😀 EXO is an open-source library that allows AI models to run on everyday devices like phones, laptops, and even Apple Watches, without requiring dedicated high-end GPUs.

- 😀 EXO enables users to aggregate devices on the same network, creating a cluster to run large AI models more efficiently, leveraging the combined computational power of multiple devices.

- 😀 The process to get EXO up and running is simple: clone the repository, run a shell script, and you're ready to go. It automatically aggregates devices on the same network for collaborative model execution.

- 😀 EXO democratizes access to AI by allowing anyone with basic hardware to run state-of-the-art AI models, eliminating the need for expensive hardware like high-end GPUs.

- 😀 While creating and training large AI models requires massive resources, EXO focuses on making the inference (usage) of those models accessible to users with regular consumer hardware.

- 😀 EXO lets you run models as large as Llama 405B across multiple devices: the model is split so that no single machine has to hold all of it, removing the need for a data center.

- 😀 If a model doesn't fit into the memory of a single device, EXO splits it across multiple devices, each holding and processing a portion of the model, which is significantly faster than trying to run it on a single memory-constrained device.

- 😀 Devices in a cluster pass each other only embeddings (intermediate activations), which are far smaller than the full model, so the cost of transferring data between devices stays low.

- 😀 The more devices you add to an EXO cluster, the better the throughput (number of requests processed per second), but there are diminishing returns when optimizing for latency (response time).

- 😀 EXO supports flexible configurations, including devices that aren't on the same local network: for example, an iPhone or Apple Watch can join a remote cluster through tools like Tailscale, further expanding the potential for distributed AI workloads.
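To see why passing embeddings between devices is cheap, a back-of-the-envelope comparison helps. The sketch below assumes a Llama-70B-class model with a hidden size of 8192, 16-bit values, and roughly 70 billion parameters; these figures are illustrative assumptions rather than numbers from the talk, and the function names are hypothetical.

```python
# Back-of-the-envelope comparison: what crosses the network per token
# versus what the cluster must hold in memory.
# ASSUMPTIONS (illustrative, not from the talk): a Llama-70B-class model with
# hidden size 8192, 16-bit (2-byte) values, and ~70e9 parameters.

HIDDEN_SIZE = 8192      # assumed width of the hidden state passed between devices
BYTES_PER_VALUE = 2     # fp16 / bf16
NUM_PARAMS = 70e9       # assumed parameter count


def activation_bytes_per_token(hidden_size: int, bytes_per_value: int) -> int:
    """Size of one token's hidden-state vector sent from one device to the next."""
    return hidden_size * bytes_per_value


def weight_bytes(num_params: float, bytes_per_value: int) -> float:
    """Approximate size of the full set of model weights."""
    return num_params * bytes_per_value


act = activation_bytes_per_token(HIDDEN_SIZE, BYTES_PER_VALUE)
wts = weight_bytes(NUM_PARAMS, BYTES_PER_VALUE)
print(f"per-token activation: {act / 1024:.0f} KiB")
print(f"full weights: {wts / 1e9:.0f} GB")
print(f"weights are ~{wts / act:,.0f}x larger")
```

Under these assumptions, each token's hidden state is about 16 KiB while the weights are about 140 GB, a gap of several million times. Processing a long prompt sends one such vector per token, which is still small next to the weights.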

Q & A

What is EXO, and how does it differ from traditional AI model execution methods?

-EXO is an open-source library that allows AI models to be run on a cluster of edge devices like smartphones, laptops, and wearable devices. Unlike traditional AI execution that relies on centralized servers or cloud computing, EXO distributes the workload across multiple devices, leveraging their collective computational power for improved speed and privacy.

What types of devices can be part of an EXO cluster?

-Devices like smartphones (e.g., iPhones), laptops (e.g., MacBooks), wearables (e.g., Apple Watches), and any other devices capable of running AI workloads can be added to an EXO cluster. These devices work together to process AI tasks more efficiently.

How does EXO handle AI models that are too large to fit on a single device?

-EXO splits large AI models into layers and distributes those layers across multiple devices in the cluster. Each device holds and runs only its assigned layers, then passes its output to the next device, which overcomes the memory limit of any single device. Throughput rises because, with several requests in flight, different devices can work on different requests at the same time.
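One simple way to decide which layers go where is to give each device a share proportional to its memory. The following is a minimal sketch of that idea under stated assumptions, not EXO's actual code: the device names, memory sizes, and the 80-layer model are hypothetical, and `partition_layers` is an illustrative helper.

```python
# Hypothetical sketch: split a model's layers across devices in proportion
# to each device's memory, so bigger devices hold more layers.
# Device names and memory sizes are made up for illustration.
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    memory_gb: float


def partition_layers(devices: list[Device], num_layers: int) -> dict[str, range]:
    """Assign contiguous layer ranges proportional to memory.
    Largest-remainder rounding ensures every layer is assigned exactly once."""
    total = sum(d.memory_gb for d in devices)
    quotas = [num_layers * d.memory_gb / total for d in devices]
    counts = [int(q) for q in quotas]
    # Hand leftover layers to the devices with the largest fractional parts.
    leftover = num_layers - sum(counts)
    order = sorted(range(len(devices)), key=lambda i: quotas[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    # Convert counts into consecutive layer ranges.
    assignment, start = {}, 0
    for dev, count in zip(devices, counts):
        assignment[dev.name] = range(start, start + count)
        start += count
    return assignment


cluster = [Device("macbook", 64), Device("mac-mini", 32), Device("iphone", 8)]
for name, layers in partition_layers(cluster, 80).items():
    print(name, layers.start, "-", layers.stop - 1)
```

With these numbers the MacBook gets 49 layers, the Mac mini 25, and the iPhone 6. Keeping each device's layers contiguous means activations only ever flow forward from one device to the next.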

What is the difference between throughput and latency in the context of EXO?

-Throughput refers to the number of requests or tasks the system can process per second, while latency refers to the time it takes to process a single request. EXO improves throughput by adding more devices, but latency only improves up to a certain point before overhead from managing devices starts to reduce the benefits.
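The latency/throughput distinction can be made concrete with a toy model of pipelined inference. It assumes the model already fits in the cluster's memory, that the work splits evenly across devices, and that network transfers overlap with computation when computing throughput; the per-stage and per-hop timings are made-up numbers, not EXO measurements.

```python
# Toy model of pipelined inference across devices (an illustration, not EXO's code).
# Each device runs its slice of layers for one step (stage_ms), then hands the
# activations to the next device over the network (hop_ms).


def single_request_latency_ms(stage_ms: list[float], hop_ms: float) -> float:
    """One request must pass through every stage in order, plus each hop."""
    return sum(stage_ms) + hop_ms * (len(stage_ms) - 1)


def steady_state_throughput_per_s(stage_ms: list[float]) -> float:
    """With enough requests in flight every device stays busy, so the slowest
    stage sets the pace (network transfer assumed to overlap with compute)."""
    return 1000.0 / max(stage_ms)


one_device = [80.0]          # whole model on one device that can hold it
four_devices = [20.0] * 4    # same work split evenly over four devices
for name, stages in [("1 device", one_device), ("4 devices", four_devices)]:
    print(name,
          f"latency={single_request_latency_ms(stages, hop_ms=5.0):.0f} ms",
          f"throughput={steady_state_throughput_per_s(stages):.1f} req/s")
```

Here, splitting the work over four devices raises steady-state throughput from 12.5 to 50 requests per second, but a single request still has to traverse every stage, so its latency goes from 80 ms to 95 ms once the hops are counted. Once the model fits, adding devices helps throughput but no longer shortens a single response.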

Can EXO run AI models on Apple Watch, and if so, how?

-Yes, EXO can run small AI models like voice transcription models on an Apple Watch. The watch uses its internal GPU and Apple Neural Engine to process these models locally. However, the computational power of the watch is limited, making it less effective for larger models compared to more powerful devices like MacBooks.

What are the privacy benefits of using EXO for AI model execution?

-EXO runs AI models locally on the user's own devices, so user data does not have to leave them. This reduces reliance on cloud services and enhances privacy by ensuring that sensitive information is not shared with external servers or third-party services.

How does EXO handle device compatibility and performance scaling?

-EXO can aggregate devices of various specifications, but performance scaling depends on the devices' processing power and the connection speed between them. While adding more devices increases throughput, the performance gains diminish once the cluster reaches an optimal size. Device compatibility is also influenced by the backend’s ability to support various hardware accelerators like GPUs.

What challenges are associated with using Apple's Neural Engine for AI workloads on EXO?

-Apple's Neural Engine offers developers only limited access, which makes it difficult to fully exploit for AI workloads. Although EXO can run models on Apple devices, it cannot use the Neural Engine as effectively as more open compute targets such as the GPU or CPU.

Can EXO be used for real-time AI applications on edge devices?

-Yes, within limits. Real-time use is most practical on clusters whose devices are connected over fast networks, because every hop between devices adds to the response time. Under those conditions, EXO can support real-time tasks like virtual assistants, automated scheduling, or AI-powered wearable devices.

Is EXO scalable, and what factors influence its scalability?

-EXO is scalable, as it can add more devices to a cluster to handle larger AI models or more tasks. However, scalability is influenced by factors like network speed, device hardware capabilities, and the complexity of the AI model. As the number of devices increases, the benefits from adding more devices decrease due to coordination overhead and diminishing returns.
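The diminishing returns described above can be illustrated with a simple scaling model: the per-step work is divided evenly across n devices, but each stage also pays a fixed coordination overhead that does not shrink as devices are added. The work and overhead values are hypothetical, and the model is a simplification rather than a description of EXO's scheduler.

```python
# Toy scaling model (an assumption, not measured EXO data): total per-step work
# is split evenly across n devices, but each stage also pays a fixed
# coordination/network overhead. Throughput = 1 / (work/n + overhead),
# which approaches the ceiling 1/overhead as n grows.


def throughput_per_s(n_devices: int, work_ms: float, overhead_ms: float) -> float:
    """Steady-state requests/s when each of n stages does work_ms / n of
    compute plus a fixed overhead_ms that does not shrink with n."""
    return 1000.0 / (work_ms / n_devices + overhead_ms)


WORK_MS, OVERHEAD_MS = 80.0, 5.0   # hypothetical numbers
base = throughput_per_s(1, WORK_MS, OVERHEAD_MS)
for n in (1, 2, 4, 8, 16):
    speedup = throughput_per_s(n, WORK_MS, OVERHEAD_MS) / base
    print(f"{n:>2} devices: speedup {speedup:4.2f}x, efficiency {speedup / n:4.0%}")
print(f"ceiling: {1000.0 / OVERHEAD_MS:.0f} req/s no matter how many devices")
```

In this model, going from 1 to 2 devices gives about a 1.9x speedup, but 16 devices give only about 8.5x, and throughput can never exceed 1/overhead (200 requests per second here) however many devices join.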

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Ollama-Run large language models Locally-Run Llama 2, Code Llama, and other models

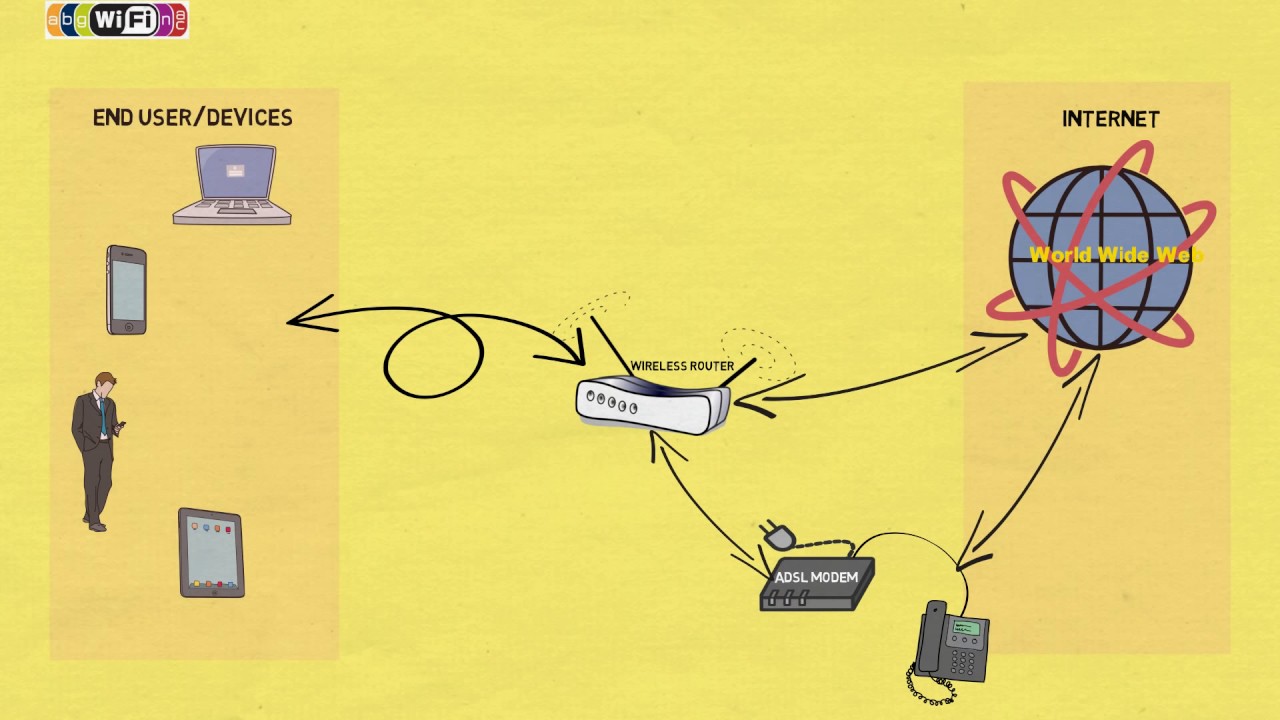

How does WiFi work - Easy Explanation

RUN LLMs Locally On ANDROID: LlaMa3, Gemma & More

What Can a 500MB LLM Actually Do? You'll Be Surprised!

This new AI is powerful and uncensored… Let’s run it

Pierre Samaties' Keynote at Crypto AI:Con

5.0 / 5 (0 votes)