Building a Generative UI App With LangChain.js

Summary

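TL;DR: This video, the second part of a three-part series, explains how to build a generative UI application with LangChain. The series introduces the concept of generative UI, its use cases, and the high-level architecture of the chatbot. It also details an implementation with a Python-powered backend and a Next.js frontend, and shows TypeScript developers how to implement streaming UI components with React Server Components.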

Takeaways

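- 😀 This video is the second part of a three-part series on building generative UI applications with LangChain.

- 😀 The first video covered the basic concepts of generative UI, its use cases, and the high-level architecture.

- 😀 The next video will cover a chatbot that uses Python for the backend and Next.js for the frontend.

- 😀 If you are not a TypeScript developer, you can wait for the next video, or watch this one anyway, since some of its topics overlap.

- 😀 This video explains the internals of a chatbot that sends user input, images, and chat history to the LLM and uses conditional edges to stream the response to the client.

- 😀 It uses React Server Components to stream UI components and implements a utils/server.tsx file for streaming UI from the server back to the client.

- 😀 It uses LangChain's stream events endpoint to stream all events to the client.

- 😀 A link is also provided to a LangGraph video on building the LangGraph agent used for the chatbot.

- 😀 It implements a tool that fetches GitHub repository details, a tool that extracts invoice details, and a tool that fetches weather information.

- 😀 Finally, the demo application is launched to confirm that the chatbot works correctly.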

Q & A

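What is this video about?

- This video explains how to build a generative UI application with LangChain.

What concepts were covered in the previous video?

- The previous video explained what generative UI is, its use cases, and the high-level architecture.

What will the next video cover?

- The next video will cover a Python chatbot backend with a Next.js frontend.

What technologies does this video use?

- This video uses React Server Components, LangChain, and the AI SDK, among others.

What processing happens in the server components?

- The server components stream UI components and run the runnable lambda logic.

What are stream events?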

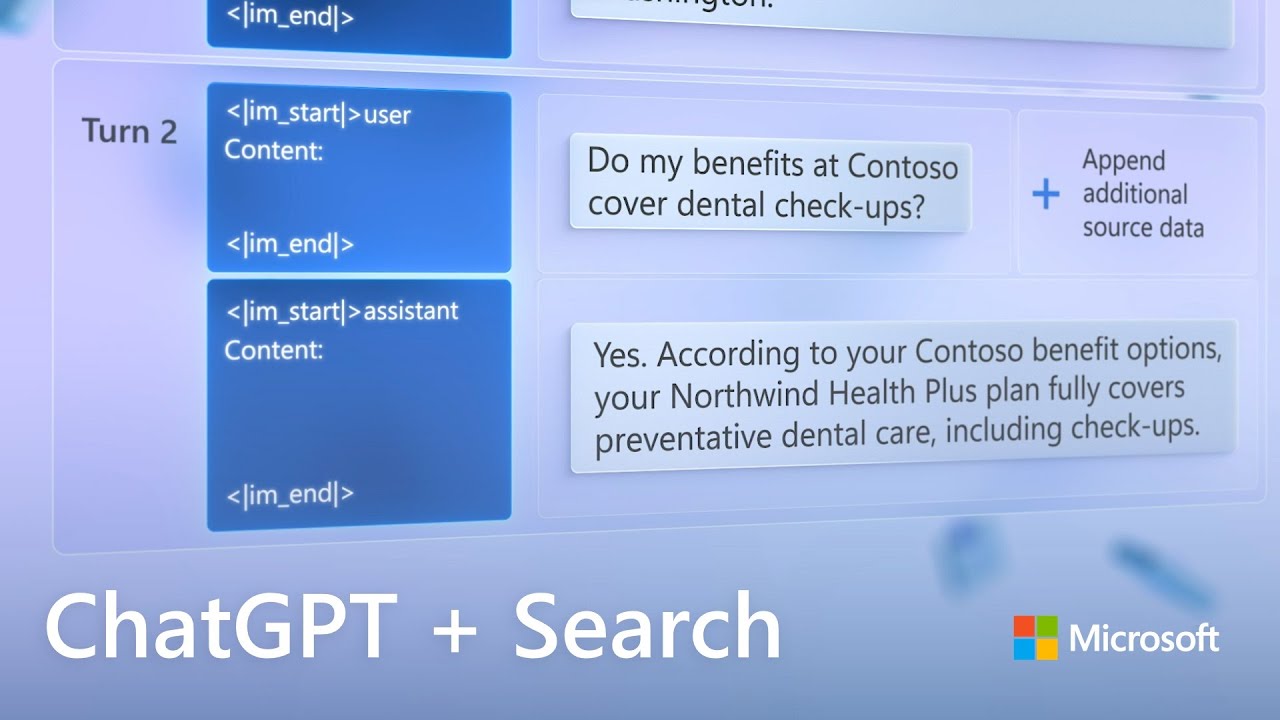

- Stream events are a way to stream intermediate steps from a LangChain application to the client.
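
To make the idea of streaming intermediate steps concrete, here is a minimal, dependency-free sketch. It does not use LangChain's actual API or event schema: the `StepEvent` type, the event names, and the `runWithEvents` and `collectEvents` helpers are all hypothetical stand-ins chosen for illustration.

```typescript
// Hypothetical event shape; NOT LangChain's actual event schema.
type StepEvent =
  | { event: "step_start"; name: string }
  | { event: "step_end"; name: string; output: string };

// A named step in a pipeline (assumed shape for this sketch).
type Step = { name: string; run: (input: string) => Promise<string> };

// Runs each step in order and yields an event before and after it,
// so a consumer can react to intermediate results as they happen.
async function* runWithEvents(
  steps: Step[],
  input: string,
): AsyncGenerator<StepEvent> {
  let current = input;
  for (const step of steps) {
    yield { event: "step_start", name: step.name };
    current = await step.run(current);
    yield { event: "step_end", name: step.name, output: current };
  }
}

// Collects every event into an array. A real client would instead
// update the UI as each event arrives.
async function collectEvents(
  steps: Step[],
  input: string,
): Promise<StepEvent[]> {
  const events: StepEvent[] = [];
  for await (const e of runWithEvents(steps, input)) {
    events.push(e);
  }
  return events;
}
```

The point of the design is that the consumer sees each step start and finish, rather than only the final output of the whole pipeline.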

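What is the main file for creating the runnable UI?

- The utils/server.tsx file contains the logic for streaming UI components and for the runnable lambda.

What is the role of the GitHub repository tool?

- The GitHub repository tool uses the GitHub API to fetch repository details and display them in the UI.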
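
As a rough illustration of such a tool, the sketch below requests a repository from GitHub's REST endpoint `GET https://api.github.com/repos/{owner}/{repo}` and maps a few response fields to props a UI card could render. The `RepoCardProps` shape, the `githubRepoTool` name, and the injected `JsonFetcher` are assumptions made for this example, not the code from the video. The caller supplies the fetcher (for instance a thin wrapper around `fetch`), which keeps the sketch free of network and runtime dependencies.

```typescript
// Subset of the fields returned by GET /repos/{owner}/{repo}.
interface GitHubRepoResponse {
  full_name: string;
  description: string | null;
  stargazers_count: number;
  language: string | null;
}

// Hypothetical props for a repository card component.
interface RepoCardProps {
  name: string;
  description: string;
  stars: number;
  language: string;
}

// The caller provides the HTTP layer; it must resolve to the parsed JSON body.
type JsonFetcher = (url: string) => Promise<unknown>;

// Fetches repository details and reshapes them for display.
async function githubRepoTool(
  owner: string,
  repo: string,
  fetcher: JsonFetcher,
): Promise<RepoCardProps> {
  const url =
    "https://api.github.com/repos/" +
    `${encodeURIComponent(owner)}/${encodeURIComponent(repo)}`;
  // Assumption: the response matches the documented shape; no validation here.
  const data = (await fetcher(url)) as GitHubRepoResponse;
  return {
    name: data.full_name,
    description: data.description ?? "",
    stars: data.stargazers_count,
    language: data.language ?? "unknown",
  };
}
```

Injecting the fetcher also makes the tool easy to exercise with canned responses before wiring it to the live API.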

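How is the chat history managed?

- The chat history is sent to the LLM together with the user's input and images, and the response is generated using the appropriate tool.

What is the purpose of the agent executor function?

- The agent executor function creates the state graph and defines the edges between nodes to manage tool calls and response generation.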
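
The state graph with a conditional edge can be sketched in plain TypeScript as follows. This is not LangGraph's API: `MiniGraph`, the node names `invokeModel` and `invokeTools`, the `AgentState` shape, and the keyword check that stands in for the model are all hypothetical, chosen only to show how a conditional edge routes between calling a tool and ending with a plain reply.

```typescript
// Hypothetical state: the model either replies in text or requests a tool.
interface AgentState {
  input: string;
  toolCall?: { name: string; args: string };
  toolResult?: string;
  reply?: string;
}

type NodeFn = (state: AgentState) => AgentState;
const END = "__end__";

// A tiny stand-in for a state graph (NOT the LangGraph API).
class MiniGraph {
  private nodes = new Map<string, NodeFn>();
  private edges = new Map<string, (state: AgentState) => string>();

  addNode(name: string, fn: NodeFn): this {
    this.nodes.set(name, fn);
    return this;
  }

  // A fixed edge always routes to the same target.
  addEdge(from: string, to: string): this {
    this.edges.set(from, () => to);
    return this;
  }

  // A conditional edge picks the target from the current state.
  addConditionalEdge(from: string, route: (s: AgentState) => string): this {
    this.edges.set(from, route);
    return this;
  }

  // Runs nodes from the entry point until a route returns END.
  run(entry: string, initial: AgentState): AgentState {
    let state = initial;
    let current = entry;
    while (current !== END) {
      const node = this.nodes.get(current);
      if (!node) throw new Error(`Unknown node: ${current}`);
      state = node(state);
      const route = this.edges.get(current);
      current = route ? route(state) : END;
    }
    return state;
  }
}

// Stand-in for the model call: requests the weather tool on a keyword match.
const invokeModel: NodeFn = (state) =>
  state.input.toLowerCase().includes("weather")
    ? { ...state, toolCall: { name: "weather", args: state.input } }
    : { ...state, reply: `Echo: ${state.input}` };

// Stand-in for tool execution.
const invokeTools: NodeFn = (state) => ({
  ...state,
  toolResult: `[${state.toolCall?.name} tool ran]`,
});

function createAgentExecutor(): MiniGraph {
  return new MiniGraph()
    .addNode("invokeModel", invokeModel)
    .addNode("invokeTools", invokeTools)
    .addConditionalEdge("invokeModel", (s) => (s.toolCall ? "invokeTools" : END))
    .addEdge("invokeTools", END);
}
```

Calling `createAgentExecutor().run("invokeModel", { input: "hello" })` ends right after the model node, while an input that mentions the weather is routed through `invokeTools` first.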
