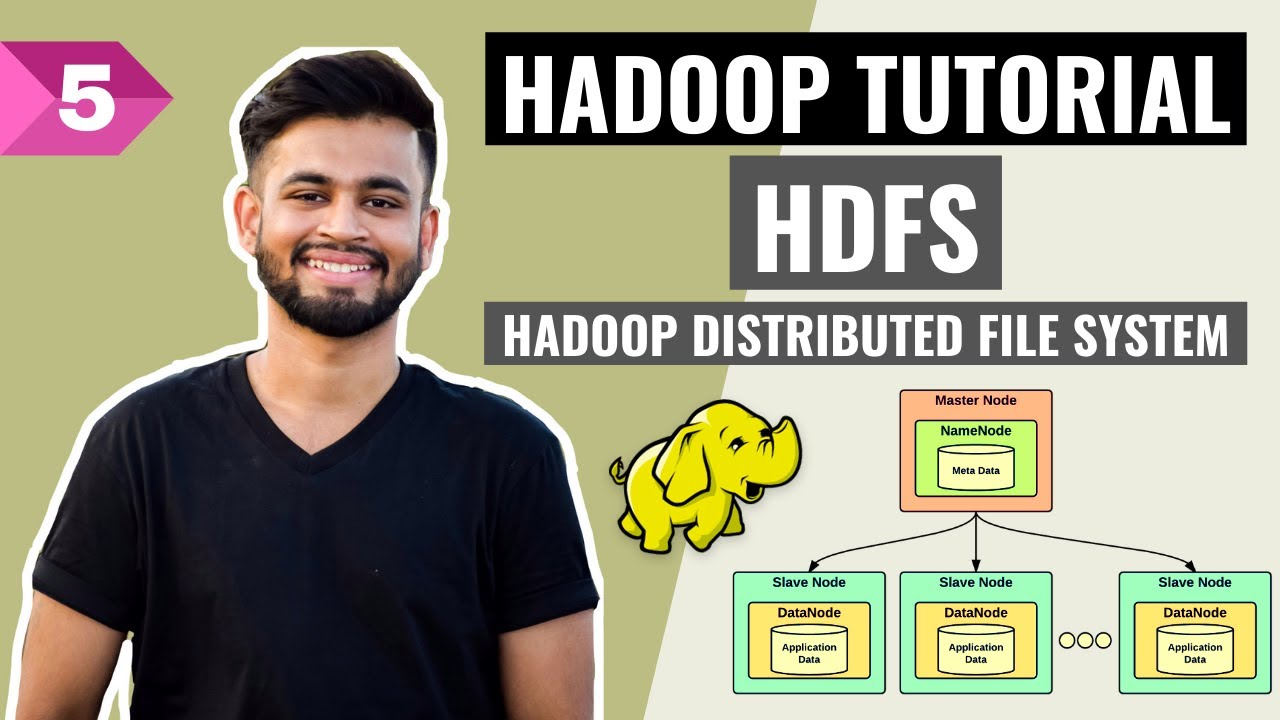

Hadoop Wordcount Tutorial: Hadoop HDFS and MapReduce

Summary

TL;DR: This tutorial by Rahma Yulia, a student at Universitas Lita, walks through using Hadoop, HDFS, and MapReduce to count the words in a provided text file. It covers setting up and configuring Hadoop, including installing the OpenSSH server, starting the HDFS (DFS) and YARN services, and using the Hadoop command line to manage files in HDFS. The video then runs a MapReduce job that counts word occurrences in a text file that was downloaded from Google Drive and uploaded to HDFS, showing how Hadoop processes data in a distributed way. The tutorial concludes with the final word count output.

Takeaways

- 😀 The speaker introduces the project, explaining the task of performing word count using Hadoop, HDFS, and MapReduce.

- 😀 The text to be used for word count is provided via a Google Drive link, which the user is instructed to open and download.

- 😀 The process begins by configuring a virtual machine using Oracle VirtualBox and connecting it to the network via a bridged adapter.

- 😀 The speaker emphasizes the importance of having Hadoop already installed before proceeding with the setup.

- 😀 The speaker demonstrates installing the OpenSSH server, which enables secure SSH communication within the system.

- 😀 The speaker checks service status with commands such as `sudo systemctl status ssh` to confirm that SSH is active.

- 😀 Hadoop services are started with `start-dfs.sh`, which launches the HDFS daemons such as NameNode and DataNode, and `start-yarn.sh`, which launches the YARN daemons.

- 😀 The speaker verifies that HDFS and YARN are running by opening their web interfaces on the default ports (9870 for the HDFS NameNode, 8088 for the YARN ResourceManager).

- 😀 A directory for the input files is created in HDFS using the command `hadoop fs -mkdir /input`.

- 😀 The user uploads the local text file to HDFS using the command `hadoop fs -put` to place it in the newly created directory.

- 😀 The speaker runs the MapReduce job using the `hadoop jar` command to process the text file and calculate word counts.

- 😀 After execution, `hadoop fs -ls /output` lists the files the job produced; the word counts themselves are in the part file(s), such as `part-r-00000`, which can be printed with `hadoop fs -cat`.

- 😀 The final output shows the word frequencies in the input file, demonstrating how the MapReduce program works in Hadoop.
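To make the map, shuffle, and reduce steps concrete, here is a small local sketch in Python. It does not use Hadoop at all: it only mimics, in a single process, what the WordCount job does across the cluster (the mapper emits `(word, 1)`, the framework groups and sorts by word, the reducer sums). The function names are hypothetical. Like Hadoop's bundled WordCount example, it splits on whitespace only, so counting is case-sensitive and punctuation stays attached to words.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group the emitted values by word (Hadoop also sorts by key here)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for word, one in pairs:
        grouped[word].append(one)
    return grouped


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reducer: sum the values for each word, visiting words in sorted order."""
    return {word: sum(ones) for word, ones in sorted(grouped.items())}


def word_count(text: str) -> Dict[str, int]:
    return reduce_phase(shuffle_phase(map_phase(text.splitlines())))


if __name__ == "__main__":
    sample = "hadoop hdfs mapreduce\nhadoop yarn\n"
    for word, count in word_count(sample).items():
        print(f"{word}\t{count}")  # same "word<TAB>count" layout as the job's output
```

On a real cluster the map and reduce steps run as separate tasks on different nodes, and the shuffle moves data between them over the network; the local version keeps only the data flow.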

Q & A

What is the main objective of this tutorial?

-The main objective of the tutorial is to demonstrate how to use Hadoop, HDFS, and MapReduce to perform a word count on a text file provided via Google Drive.

What tools are used in this tutorial for processing the data?

-The tools used in this tutorial include Hadoop, HDFS (Hadoop Distributed File System), and MapReduce for processing and analyzing the data.

What is the role of OpenSSH in this tutorial?

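-OpenSSH provides secure remote login to the virtual machine, allowing the user to run commands and manage the Hadoop setup and processes. Hadoop's start scripts (`start-dfs.sh`, `start-yarn.sh`) also connect over SSH to launch the daemons, even on `localhost` in a single-node setup, so the SSH server must be running.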

Why is the speaker using a virtual machine in the tutorial?

-The virtual machine is used to set up a Hadoop environment for processing the data. It allows the speaker to isolate the setup and work in a controlled environment separate from their main operating system.

How does the speaker configure the network settings for the virtual machine?

-The network settings are configured by using a bridged adapter, which connects the virtual machine directly to the physical network, allowing it to have its own IP address and communicate with other devices on the network.

What command is used to start the Hadoop Distributed File System (HDFS)?

-The command used to start HDFS is `start-dfs.sh`, which initiates HDFS services such as DataNode and NameNode.
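The video confirms the services through their web interfaces. Another common check, not shown in the video, is the JDK's `jps` tool, which prints one `<pid> <MainClass>` line per running Java process. This Python sketch parses that output and reports which expected daemons are missing. The daemon sets are an assumption for a single-node (pseudo-distributed) setup, where `start-dfs.sh` starts NameNode, DataNode, and SecondaryNameNode and `start-yarn.sh` starts ResourceManager and NodeManager; the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import Iterable, Set

# Assumed daemons for a single-node setup; adjust for your cluster layout.
HDFS_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode"}   # started by start-dfs.sh
YARN_DAEMONS = {"ResourceManager", "NodeManager"}              # started by start-yarn.sh


def running_daemons(jps_output: str) -> Set[str]:
    """Parse `jps` output ("<pid> <MainClass>" per line) into a set of class names."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:  # skip lines that carry only a pid
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected: Iterable[str]) -> Set[str]:
    """Return the expected daemon names that do not appear in the jps output."""
    return set(expected) - running_daemons(jps_output)


if __name__ == "__main__":
    if shutil.which("jps") is None:
        print("jps not found; it ships with the JDK")
    else:
        out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
        missing = sorted(missing_daemons(out, HDFS_DAEMONS | YARN_DAEMONS))
        print("missing:", missing or "none")
```

`jps` only lists JVMs owned by the current user, so run it as the same user that started Hadoop.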

What is the function of the `hadoop fs -mkdir` command?

-The `hadoop fs -mkdir` command creates a new directory in HDFS. Here it creates the `/input` directory that holds the text file to be processed.

What does the `hadoop fs -ls` command do?

-The `hadoop fs -ls` command lists the contents of a specified directory in HDFS, allowing the user to verify the directories and files present in the file system.
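When scripting these checks, the text printed by `hadoop fs -ls` can be parsed. This Python sketch assumes the usual layout: a `Found N items` header, then one line per entry with permissions, replication factor (`-` for directories), owner, group, size in bytes, date, time, and path. `HdfsEntry` and `parse_ls` are hypothetical helpers, and the sample lines used to exercise them are illustrative, not taken from the video.

```python
from typing import List, NamedTuple


class HdfsEntry(NamedTuple):
    is_dir: bool
    size: int
    path: str


def parse_ls(output: str) -> List[HdfsEntry]:
    """Parse `hadoop fs -ls` text into entries, skipping the header and any stray lines."""
    entries = []
    for line in output.splitlines():
        if not line.strip() or line.startswith("Found "):
            continue
        # perms, replication, owner, group, size, date, time, path (path may contain spaces)
        fields = line.split(maxsplit=7)
        if len(fields) < 8 or not fields[4].isdigit():
            continue  # not an entry line, e.g. a log warning mixed into the output
        perms, _repl, _owner, _group, size, _date, _time, path = fields
        entries.append(HdfsEntry(perms.startswith("d"), int(size), path))
    return entries
```

For example, checking whether any entry's path ends in `/_SUCCESS` tells you whether a MapReduce output directory was written by a job that completed successfully.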

How does the speaker upload the input file to HDFS?

-The speaker uses the `hadoop fs -put` command to upload the input text file from their local system to the 'input' directory in HDFS.
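The staging steps (create the directory, upload the file, list the result) can be wrapped in a small Python helper that prints each command and only executes it when `dry_run=False`. This is a sketch, not the video's script: `input.txt` is a hypothetical name for the downloaded file, and `-mkdir -p` deviates slightly from the video's `hadoop fs -mkdir /input` so the step can be re-run without an error when the directory already exists.

```python
import shlex
import subprocess
from typing import List


def stage_input_commands(local_file: str, hdfs_dir: str = "/input") -> List[List[str]]:
    """Commands to create the HDFS input directory, upload a local file into it, and list it."""
    return [
        ["hadoop", "fs", "-mkdir", "-p", hdfs_dir],  # -p: no error if it already exists
        ["hadoop", "fs", "-put", local_file, hdfs_dir],
        ["hadoop", "fs", "-ls", hdfs_dir],
    ]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; run it only when dry_run is False, stopping at the first failure."""
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # "input.txt" is a hypothetical file name; pass dry_run=False to actually run the commands.
    run_all(stage_input_commands("input.txt"))
```

`hadoop fs -put` refuses to overwrite an existing file in HDFS; adding `-f` to the `-put` command makes re-uploads replace it.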

What is the significance of the `hadoop jar` command in the tutorial?

-The `hadoop jar` command is used to execute the MapReduce job on the input data. It processes the file, counting the occurrences of each word, and outputs the result to the HDFS 'output' directory.
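The summary does not show the exact `hadoop jar` arguments. A typical invocation uses the examples JAR bundled with Hadoop, `$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-<version>.jar`, followed by the program name `wordcount` and the input and output paths. The Python sketch below assumes that JAR location and the `/input` and `/output` paths; `find_examples_jar` and `wordcount_command` are hypothetical helpers.

```python
import glob
import os
import shlex
import subprocess
from typing import List, Optional


def find_examples_jar(hadoop_home: str) -> Optional[str]:
    """Locate the bundled MapReduce examples JAR (the version suffix varies by release)."""
    pattern = os.path.join(hadoop_home, "share", "hadoop", "mapreduce",
                           "hadoop-mapreduce-examples-*.jar")
    matches = sorted(glob.glob(pattern))
    return matches[-1] if matches else None


def wordcount_command(jar: str, input_dir: str = "/input", output_dir: str = "/output") -> List[str]:
    """Build the `hadoop jar <jar> wordcount <in> <out>` command as an argument list."""
    return ["hadoop", "jar", jar, "wordcount", input_dir, output_dir]


if __name__ == "__main__":
    hadoop_home = os.environ.get("HADOOP_HOME")
    jar = find_examples_jar(hadoop_home) if hadoop_home else None
    if jar is None:
        print("examples JAR not found; check that HADOOP_HOME is set")
    else:
        cmd = wordcount_command(jar)
        print("$", shlex.join(cmd))
        subprocess.run(cmd, check=True)
```

MapReduce refuses to start a job whose output directory already exists, so remove it first (`hadoop fs -rm -r /output`) before re-running the job.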

What is the final output of the MapReduce job in this tutorial?

-The final output of the MapReduce job is a word count: how many times each word appears in the input text file, written as result files in the `output` directory in HDFS.
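WordCount writes its result as plain text, one `word<TAB>count` line per distinct word, sorted by word within each file, in files such as `part-r-00000` (one per reducer). Here is a minimal Python sketch for reading that text, for example the output of `hadoop fs -cat /output/part-r-00000`, and listing the most frequent words. The function names and the sample data are illustrative.

```python
from typing import Dict, List, Tuple


def parse_wordcount_output(text: str) -> Dict[str, int]:
    """Parse WordCount output: one "word<TAB>count" line per distinct word."""
    counts: Dict[str, int] = {}
    for line in text.splitlines():
        if not line:
            continue
        word, _, count = line.rpartition("\t")  # the count is after the last tab
        counts[word] = int(count)
    return counts


def top_words(counts: Dict[str, int], n: int = 10) -> List[Tuple[str, int]]:
    """Highest counts first; ties broken alphabetically so the order is stable."""
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]


if __name__ == "__main__":
    sample = "data\t3\nhadoop\t5\nyarn\t3\n"  # illustrative, not the video's output
    for word, count in top_words(parse_wordcount_output(sample), 2):
        print(word, count)
```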

Why is the tutorial focused on the word count example?

-The word count example is simple and widely used, which makes it an ideal starting point for demonstrating the core of Hadoop and MapReduce: a map step that emits each word with a count of 1, and a reduce step that sums the counts for each word.

How does the speaker ensure the Hadoop services are running correctly?

-The speaker checks the status of the services (for example, SSH with `systemctl status`) and uses the virtual machine's IP address to open the HDFS (port 9870) and YARN (port 8088) web interfaces, confirming that HDFS and YARN are active and properly configured.
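The port check can also be scripted. This Python sketch only tests whether a TCP connection to each web UI port succeeds; it assumes the Hadoop 3.x default ports 9870 (NameNode) and 8088 (ResourceManager), which can be changed in `hdfs-site.xml` and `yarn-site.xml`. The helper names are hypothetical. A successful connection shows that a daemon is listening, not that it is healthy.

```python
import socket
from typing import Dict

# Assumed Hadoop 3.x defaults for the web UIs.
DEFAULT_UI_PORTS = {"HDFS NameNode": 9870, "YARN ResourceManager": 8088}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_ui_ports(host: str, ports: Dict[str, int] = DEFAULT_UI_PORTS) -> Dict[str, bool]:
    """Check every named port on one host."""
    return {name: is_port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    # Use the VM's bridged-adapter IP instead of "localhost" when checking from the host machine.
    for name, ok in check_ui_ports("localhost").items():
        print(f"{name}: {'up' if ok else 'not reachable'}")
```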
