Deep Learning(CS7015): Lec 2.3 Perceptrons

Summary

TLDR: The video covers the Perceptron, an improvement over the McCulloch-Pitts neuron that addresses two of its limitations: it accepts real-valued inputs, and its weights can be learned automatically instead of being set by hand. It introduces weights so that some inputs count more than others, and a bias term that adjusts the threshold. The Perceptron, proposed by Frank Rosenblatt (1958) and later refined and analyzed by Minsky and Papert (1969), outputs 1 if the weighted sum of its inputs meets or exceeds a threshold, and 0 otherwise. The video also discusses which Boolean functions a Perceptron can implement and how a learning algorithm can find its weights and bias, motivated by decision problems such as those in the oil industry, where real-valued factors like salinity and pressure must be weighed.

Takeaways

- 📚 The Perceptron model goes beyond Boolean inputs by accepting real-valued inputs, which allows more complex decision-making.

- 🔍 Perceptrons are designed to deal with non-Boolean inputs such as salinity, pressure, and other real-world factors that influence decision-making in various industries.

- 🧠 The introduction of weights in the Perceptron allows for the differentiation of importance among inputs, enabling the model to prioritize certain factors over others.

- 🎓 The Perceptron learning algorithm adjusts the weights and the threshold (bias) automatically from past data, such as a viewer's past movie-watching history.

- 📈 The Perceptron model can represent linearly separable functions, similar to the McCulloch-Pitts neuron, but with the added ability to learn and adjust its parameters.

- 🔧 The weight w0 (equal to −θ, the negative of the threshold) is called the bias because it encodes a prior assumption or preference that shifts the decision before any input is considered.

- ⚖️ Weights in the Perceptron model are analogous to assigning different levels of importance to various inputs, which can significantly affect the output.

- 🤔 A single Perceptron still cannot represent functions that are not linearly separable. The Perceptron learning algorithm addresses a different problem: finding the weights and bias automatically instead of setting them by hand.

- 📉 The Perceptron's decision boundary is a hyperplane that separates the input space into two regions based on weighted sums and a threshold.

- 🔗 The Perceptron's operation can be written as a system of linear inequalities, one per input pattern; any weights and bias that satisfy all of them implement the desired function.

- 🚀 The Perceptron model was proposed by Frank Rosenblatt in 1958 and later refined and analyzed by Minsky and Papert (1969), making it a foundational concept in neural networks and machine learning.
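As a minimal sketch of the "system of linear inequalities" view, take the two-input OR function and fold the threshold into a bias w0 with a constant input x0 = 1. Each truth-table row gives one inequality: w0 < 0 for (0, 0), and w0 + w2 ≥ 0, w0 + w1 ≥ 0, w0 + w1 + w2 ≥ 0 for the other three rows. The code below checks one candidate solution, w0 = −1 and w1 = w2 = 1.1; these values are an illustrative assumption, and any weights that satisfy all four inequalities would work equally well.

```python
# Truth table for two-input OR: (x1, x2) -> target output
OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}


def perceptron_output(w0, w1, w2, x1, x2):
    """Return 1 if w0 + w1*x1 + w2*x2 >= 0, else 0 (bias w0 pairs with x0 = 1)."""
    return 1 if w0 + w1 * x1 + w2 * x2 >= 0 else 0


def satisfies_all(w0, w1, w2, table):
    """Check that the weights satisfy the inequality implied by every truth-table row."""
    return all(perceptron_output(w0, w1, w2, x1, x2) == y
               for (x1, x2), y in table.items())


# Illustrative candidate weights (an assumption; many other solutions exist)
print(satisfies_all(-1.0, 1.1, 1.1, OR_TABLE))  # True
# w0 >= 0 violates the (0, 0) row, because the output there becomes 1
print(satisfies_all(0.5, 1.1, 1.1, OR_TABLE))   # False
```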

Q & A

What is the main limitation of the McCulloch-Pitts neuron model discussed in the script?

-The main limitation of the McCulloch-Pitts neuron model is that it only deals with Boolean inputs and does not have a mechanism to learn thresholds or weights for inputs.

What is the perceptron and how does it differ from the McCulloch-Pitts neuron?

-The perceptron is an improvement over the McCulloch-Pitts neuron model, allowing for real-valued inputs and introducing weights for each input. It also includes a learning algorithm to determine these weights and a threshold (bias), which are not present in the McCulloch-Pitts model.

Why are weights important in the perceptron model?

-Weights are important in the perceptron model because they allow the model to assign different importance to different inputs, enabling it to make decisions based on the significance of each input feature.

What is the role of the bias (w0) in the perceptron model?

-The bias w0 (equal to −θ, the negative of the threshold) encodes a prior preference: before any input is considered, it makes the perceptron more or less inclined to output 1. This prior role is why it is called the bias. Geometrically, it shifts the decision boundary so that the boundary need not pass through the origin.
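The prior role of the bias can be illustrated with a movie-watching scenario. This sketch is hypothetical: it assumes three Boolean features per movie (liked actor, liked director, liked genre), equal weights of 1, and two viewers who differ only in their bias w0 = −θ. A movie buff (θ = 0) watches anything, while a selective viewer (θ = 3) needs all three criteria met.

```python
def decide(inputs, weights, w0):
    """Perceptron decision with the bias folded in: 1 if w0 + sum(w_i * x_i) >= 0."""
    return 1 if w0 + sum(w * x for w, x in zip(weights, inputs)) >= 0 else 0


# Hypothetical Boolean features of one movie: (actor liked, director liked, genre liked)
movie = (1, 0, 1)
weights = (1, 1, 1)  # equal importance, assumed for illustration

# w0 = -theta encodes each viewer's prior willingness to watch
movie_buff = decide(movie, weights, w0=0)   # theta = 0: watches anything
selective = decide(movie, weights, w0=-3)   # theta = 3: needs all three criteria
print(movie_buff, selective)  # 1 0
```

The inputs and weights are identical for both viewers; only the bias changes the decision.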

How does the perceptron decide whether to output 1 or 0?

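-The perceptron outputs 1 if the weighted sum of its inputs is greater than or equal to the threshold θ, and 0 if it is less than θ. Equivalently, after adding a constant input x0 = 1 with weight w0 = −θ (the bias), it outputs 1 when the full weighted sum, including the bias term, is at least 0.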
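The two equivalent ways of writing the decision rule can be checked numerically. This sketch assumes the ≥ convention (output 1 when the weighted sum reaches θ; some texts use a strict >, which only changes the outcome at exact ties) and uses made-up real-valued inputs, weights, and threshold. Folding the threshold into a bias w0 = −θ with a constant input x0 = 1 gives the same decisions.

```python
def perceptron_threshold(x, w, theta):
    """Form 1: output 1 if sum_{i=1..n} w_i * x_i >= theta, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= theta else 0


def perceptron_bias(x, w, theta):
    """Form 2: prepend x0 = 1 and w0 = -theta, then compare the full sum with 0."""
    x_aug = [1.0] + list(x)
    w_aug = [-theta] + list(w)
    return 1 if sum(wi * xi for wi, xi in zip(w_aug, x_aug)) >= 0 else 0


# Hypothetical real-valued inputs, weights, and threshold
w, theta = [0.4, -0.2, 0.7], 0.5
samples = [[1.0, 0.5, 0.6], [0.2, 2.0, 0.1], [0.9, 0.0, 0.1]]

outputs = [perceptron_threshold(x, w, theta) for x in samples]
# Both forms agree on every sample (values chosen away from the exact boundary)
assert outputs == [perceptron_bias(x, w, theta) for x in samples]
print(outputs)  # [1, 0, 0]
```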

What is the significance of the example given about the oil mining company?

-The example of the oil mining company illustrates the need for real-valued inputs in decision-making problems. It shows that not all problems can be solved with Boolean inputs, and that real-world applications often require considering multiple factors with continuous values.

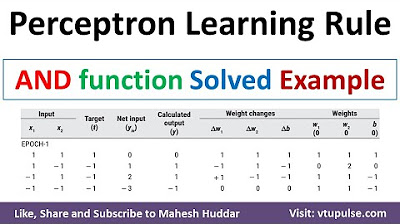

What is the perceptron learning algorithm and why is it necessary?

-The perceptron learning algorithm is a method used to adjust the weights and bias of a perceptron model. It is necessary because it allows the model to learn from data, improving its ability to make accurate predictions or classifications without manual parameter setting.
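The update rule itself is not spelled out here. A commonly taught version is the classic perceptron learning rule: whenever a positive example is classified as 0, add its input vector to the weights; whenever a negative example is classified as 1, subtract it. The sketch below assumes Boolean targets in {0, 1}, a bias folded in as w[0] with x0 = 1, zero initial weights, and a fixed pass order over the data; the function and dataset names are hypothetical.

```python
def dot(w, x):
    """Inner product of two equal-length sequences."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_perceptron(samples, max_epochs=100):
    """Classic perceptron learning rule on augmented inputs (x0 = 1, so w[0] is the bias).

    samples: list of (inputs, label) pairs with label in {0, 1}.
    Returns (weights, converged).
    """
    n = len(samples[0][0])
    w = [0.0] * (n + 1)  # zero initialisation (an assumption; random init is also common)
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, label in samples:
            x = [1.0] + list(inputs)  # prepend the constant input x0 = 1
            output = 1 if dot(w, x) >= 0 else 0
            if label == 1 and output == 0:    # positive point misclassified: w <- w + x
                w = [wi + xi for wi, xi in zip(w, x)]
                mistakes += 1
            elif label == 0 and output == 1:  # negative point misclassified: w <- w - x
                w = [wi - xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:  # an error-free pass: every sample is now classified correctly
            return w, True
    return w, False


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_or, ok_or = train_perceptron(OR_DATA)
w_xor, ok_xor = train_perceptron(XOR_DATA)
print(w_or)           # [-1.0, 1.0, 1.0]
print(ok_or, ok_xor)  # True False
```

For OR, which is linearly separable, the loop reaches an error-free pass. For XOR, which is not, no pass is ever error-free, so training stops at `max_epochs` with `converged` set to False.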

How does the script relate the perceptron model to the problem of predicting movie preferences?

-The script uses the perceptron model to illustrate how one might predict movie preferences based on factors like the actor, director, and genre. It explains how weights can be assigned to these factors based on their importance to the viewer, and how the bias can adjust the decision threshold.
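Weights let a perceptron express that some factors matter more than others, which a McCulloch-Pitts neuron with unweighted inputs cannot. In this hypothetical sketch a viewer cares mostly about the director; the feature names, weights, and threshold are all assumptions for illustration. A movie with two liked factors can still be rejected, while one with only the heavily weighted factor is accepted.

```python
# Hypothetical viewer profile: each weight expresses how much a factor matters
FEATURES = ("actor_liked", "director_liked", "genre_liked")
weights = {"actor_liked": 0.2, "director_liked": 0.7, "genre_liked": 0.1}
theta = 0.5  # hypothetical threshold; the corresponding bias is w0 = -theta


def will_watch(movie):
    """Return 1 if the weighted evidence for a movie reaches the viewer's threshold."""
    score = sum(weights[f] * movie[f] for f in FEATURES)
    return 1 if score >= theta else 0


# Two hypothetical movies, each described by Boolean features
a = {"actor_liked": 1, "director_liked": 0, "genre_liked": 1}  # score about 0.3
b = {"actor_liked": 0, "director_liked": 1, "genre_liked": 0}  # score 0.7
print(will_watch(a), will_watch(b))  # 0 1
```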

What is the significance of the term 'linearly separable' in the context of the perceptron?

-Linear separability is a property of a function or dataset: its positive and negative examples can be divided by a linear boundary (a line in two dimensions, a hyperplane in general). A single perceptron can represent exactly the functions that are linearly separable, because its decision boundary is the hyperplane on which the weighted sum equals the threshold.

What are some of the challenges the script mentions when dealing with non-linearly separable functions?

-The script mentions that even in the restricted Boolean case, some functions are not linearly separable. This is a challenge because a single perceptron can only represent linearly separable functions, so handling such functions requires more expressive models, such as networks of perceptrons.
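XOR is the standard example of a Boolean function that is not linearly separable. To see why a single perceptron cannot implement it, write one inequality per truth-table row under the convention that the output is 1 exactly when w0 + w1 x1 + w2 x2 ≥ 0:

```latex
\begin{aligned}
(0,0)\mapsto 0:&\quad w_0 < 0\\
(0,1)\mapsto 1:&\quad w_0 + w_2 \ge 0\\
(1,0)\mapsto 1:&\quad w_0 + w_1 \ge 0\\
(1,1)\mapsto 0:&\quad w_0 + w_1 + w_2 < 0
\end{aligned}
\qquad\Longrightarrow\qquad
2w_0 + w_1 + w_2 \ge 0
\;\Rightarrow\;
w_0 + w_1 + w_2 \ge -w_0 > 0
```

Adding the two middle inequalities and using w0 < 0 forces w0 + w1 + w2 > 0, which contradicts the last row, so no choice of weights and bias satisfies all four.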
