Deep Learning(CS7015): Lec 2.1 Motivation from Biological Neurons

Summary

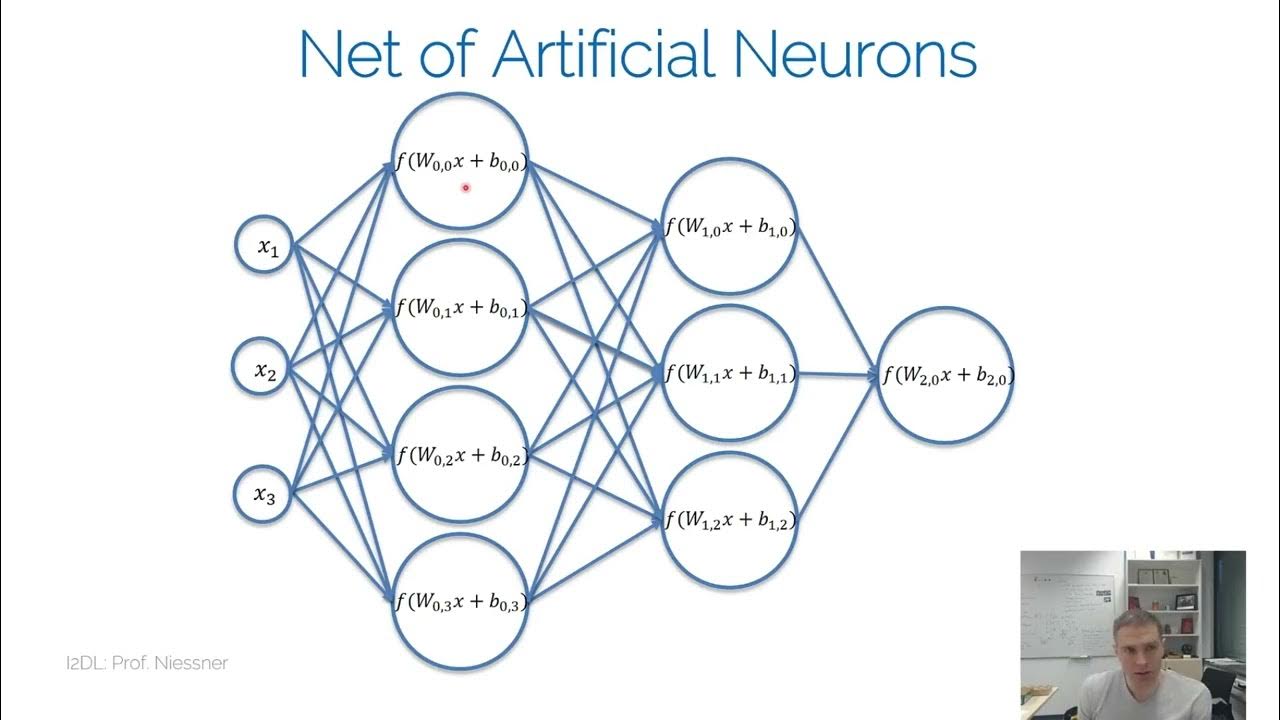

TLDR: This lecture from CS 7015 delves into the foundational concepts of deep learning, starting with the biological neurons that inspired artificial neurons. It covers the McCulloch-Pitts neuron model and introduces perceptrons, including a learning algorithm and its convergence. The lecture also explores multilayer perceptron networks and their representational capabilities. The speaker provides a simplified yet insightful overview of how the human brain processes information through a hierarchical structure of interconnected neurons, emphasizing the parallel processing and division of labor that underpins neural networks.

Takeaways

- 🧠 The course CS 7015 focuses on deep learning, covering topics like the McCulloch-Pitts neuron, thresholding logic, perceptrons, and their learning algorithms.

- 🚀 The concept of an artificial neuron, fundamental to deep neural networks, is inspired by biological neurons found in the brain.

- 🌿 The term 'neuron' was coined in the 1890s to describe neural processing units or cells in our brain.

- 🧬 Biological neurons receive inputs via dendrites, process them in the soma, and transmit outputs through the axon.

- 🔗 The strength of the connection between neurons is determined by the synapse.

- 🧐 Neurons decide whether to 'fire' based on inputs, which can lead to an action like laughter if the input is deemed funny.

- 🌐 The brain operates through a massively parallel interconnected network of approximately 100 billion neurons.

- 🔄 There's a division of work among neurons, with some specializing in processing visual data, while others handle different types of sensory information.

- 📈 Neurons are arranged in a hierarchy, with higher layers in the visual cortex processing increasingly complex features of visual information.

- 📊 In the lecture's simplified picture of the visual cortex, layer 1 detects edges and corners, layer 2 groups these into features such as a nose or eyes, and layer 3 recognizes those features as parts of a whole object, such as a human face.

- ⚠️ The explanation provided is an oversimplification of how the brain works, sufficient for the course on deep learning but not exhaustive of neurobiology.

- ✨ The course emphasizes understanding the simplified model of neural processing for the purpose of studying deep learning algorithms and their applications.

Q & A

What is the main focus of CS 7015 lecture 2?

-The lecture focuses on the McCulloch-Pitts neuron, thresholding logic, perceptrons, a learning algorithm for perceptrons, the convergence of this algorithm, multilayer networks of perceptrons, and the representation power of perceptrons.
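
The summary names a perceptron learning algorithm without showing it. As a rough illustration only, the sketch below uses the standard textbook update rule (add the input to the weights on a missed positive, subtract it on a missed negative); it is not taken from the lecture itself. The function names `train_perceptron` and `predict` and the OR dataset are hypothetical, chosen for this example.

```python
# A minimal sketch of the classic perceptron update rule (assumed, not from the lecture).

def predict(weights, x):
    """Return 1 if the weighted sum (including bias weights[0]) is >= 0, else 0."""
    augmented = [1.0] + list(x)  # constant 1.0 multiplies the bias weight
    activation = sum(w * xi for w, xi in zip(weights, augmented))
    return 1 if activation >= 0 else 0


def train_perceptron(samples, max_epochs=100):
    """Repeat passes over the data until an epoch makes no mistakes."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * (n_inputs + 1)  # weights[0] is the bias
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in samples:
            if predict(weights, x) != label:
                # Move the weights toward positives and away from negatives.
                sign = 1 if label == 1 else -1
                augmented = [1.0] + list(x)
                weights = [w + sign * xi for w, xi in zip(weights, augmented)]
                mistakes += 1
        if mistakes == 0:
            break
    return weights


# Hypothetical toy data: the logical OR of two binary inputs (linearly separable).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
or_weights = train_perceptron(OR_DATA)
or_predictions = [predict(or_weights, x) for x, _ in OR_DATA]
```

Because OR is linearly separable, the loop stops after a few passes and `or_predictions` matches the labels; on data that is not linearly separable the loop would simply run until `max_epochs`.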

What is the inspiration behind the term 'artificial neuron' used in deep neural networks?

-The term is borrowed from biology: 'neuron' was coined for the neural processing units, or cells, in the brain, and the artificial neuron is loosely modeled on them.

What is the function of the dendrite in a biological neuron?

-The dendrite is used to receive inputs from other neurons, serving as the input point for the neuron.

What is a synapse and what is its role in biological neurons?

-A synapse is the point of connection between two neurons. It determines how strong that connection is, and so plays a crucial role in the transmission of signals between neurons.

What is the role of the soma in a biological neuron?

-The soma acts as the central processing unit of the neuron, where the processing of received inputs takes place.

How does the axon contribute to the function of a biological neuron?

-The axon carries the output from the neuron to other sets of neurons after the soma has processed the inputs.

What is the significance of a neuron firing, as described in the lecture?

-Neuron firing represents the decision-making process of the neuron, such as determining if an input is funny enough to evoke laughter.
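
The firing decision described above can be pictured as a thresholded weighted sum. The sketch below is a loose simplification under stated assumptions, not a model from the lecture: the function `neuron_fires`, the cue values, the synapse strengths, and the threshold are all hypothetical numbers picked for illustration.

```python
# A minimal sketch, assuming a neuron fires when its weighted input reaches a threshold.

def neuron_fires(inputs, synapse_strengths, threshold):
    """Inputs arrive via dendrites, are scaled by synapse strength,
    summed (the soma's role), and compared with a threshold."""
    total = sum(s * x for s, x in zip(synapse_strengths, inputs))
    return total >= threshold  # True means the axon carries an output


# Hypothetical cues for "is this funny?": 1 means the cue is present.
strengths = [0.6, 0.3, 0.5]
laughs = neuron_fires([1, 0, 1], strengths, threshold=1.0)      # 0.6 + 0.5 = 1.1
stays_quiet = neuron_fires([0, 1, 0], strengths, threshold=1.0)  # 0.3 only
```

Here `laughs` is `True` and `stays_quiet` is `False`: the same neuron responds or stays silent depending on which inputs are active and how strongly each is connected.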

How many neurons are estimated to be in the human brain?

-There are approximately 100 billion neurons in the human brain.

What does the term 'massively parallel interconnected network' refer to in the context of the brain?

-It refers to the vast network of neurons that work in parallel, interconnected with each other to process information.

What is the division of work among neurons as described in the lecture?

-The division of work means that different neurons are responsible for processing different types of data, such as visual data or speech, each playing a specific role.

How are neurons in the brain arranged hierarchically, according to the lecture?

-The hierarchical arrangement refers to the organization of neurons in layers, where information is processed through multiple levels before reaching the final output, such as in the visual cortex where layers V1, V2, and AIT form a hierarchy for processing visual information.

What is the disclaimer provided by the lecturer regarding the explanation of the human brain?

-The lecturer acknowledges that their understanding of the human brain is limited and that the explanation provided is a very oversimplified version, sufficient for the course but not a comprehensive representation of how the brain works.
