11. Implement AND function using perceptron networks for bipolar inputs and targets by Mahesh Huddar

Summary

TL;DR: This video tutorial explains the perceptron rule and walks through the design and operation of a perceptron using a numerical example. It covers the basic concept of the perceptron, the training rule, and how the weights are updated when the calculated output differs from the target output. Using the AND function with bipolar inputs and targets, the video shows how the weights are adjusted over successive iterations until the network classifies every input correctly. The final weights are then presented, showing that a perceptron can model the AND function.

Takeaways

- 🧠 The video explains the Perceptron Rule, a fundamental concept in machine learning for binary classification.

- 📚 The perceptron training rule adjusts the weights whenever there is an error, that is, whenever the calculated output differs from the target output.

- 🔢 The net input to the neuron is calculated using an equation that includes the bias and the weighted sum of inputs.

- 📈 The output of the neuron is determined by comparing the net input to a threshold, outputting 1, 0, or -1 based on whether the net input is greater than, equal to, or less than the threshold.

- 🔄 When the calculated output differs from the target, weights are updated using the formula new weight = old weight + alpha * target * input, where alpha is the learning rate.

- 🔧 The AND function is used as an example to demonstrate how a perceptron can be trained to perform logical operations.

- 🤖 The perceptron learns by iteratively adjusting weights until it correctly classifies all given inputs, as shown in the video's example.

- 🔄 The process involves multiple epochs, or iterations, where weights are updated until the perceptron correctly learns the AND function.

- 📉 If the calculated output matches the target, no weight update is necessary; otherwise, the weights are adjusted accordingly.

- 📊 The final weights represent the learned model, capable of correctly classifying inputs for the AND function as demonstrated.

- 👍 The video encourages viewers to like, share, subscribe, and turn on notifications for more content on similar topics.

Q & A

What is the perceptron rule discussed in the video?

- The perceptron rule is the algorithm used to train a single-layer perceptron, a simple type of neural network. It adjusts the weights and bias of the perceptron whenever the calculated output differs from the target output.

How does the perceptron training rule determine if the network has learned the correct output?

- The perceptron training rule compares the calculated output of the neuron with the target output. If the two match for every training input, the network has learned the function; if any of them differ, further training is needed.

What is the formula used to calculate the net input to the output unit in a perceptron?

- The net input to the output unit is calculated using the formula: net input = B + Σ (x_i * w_i), where B is the bias, x_i is the i-th input, and w_i is the weight on that input.
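The formula above can be sketched in a few lines of Python. The function name `net_input` and the example weights are illustrative assumptions, not values quoted from the video.

```python
def net_input(x, w, b):
    """Return b + sum_i x_i * w_i for a single input pattern."""
    return b + sum(xi * wi for xi, wi in zip(x, w))

# Illustrative values only (assumed, not taken from the video):
# weights (1, 1), bias -1, input pattern (1, -1).
print(net_input((1, -1), (1, 1), -1))  # 1*1 + (-1)*1 + (-1) = -1
```

With all weights and the bias at zero, the same function returns 0 for any input pattern.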

What is the role of the threshold in the perceptron's activation function?

- The threshold in the perceptron's activation function determines the output of the perceptron. If the net input is greater than the threshold, the output is 1; if it is equal to the threshold, the output is 0; and if it is less than the threshold, the output is -1.
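A minimal sketch of this three-valued activation follows. The name `activation` and the default threshold `theta=0` are assumptions, since the summary does not state the threshold value. Some textbook formulations use an undecided band from -theta to theta instead of a single point; with a threshold of zero the two versions coincide.

```python
def activation(y_in, theta=0):
    """Three-valued output as described above.

    theta=0 is an assumed default; the summary gives no threshold value.
    """
    if y_in > theta:
        return 1
    if y_in < theta:
        return -1
    return 0  # net input exactly at the threshold

for y_in in (2, 0, -3):
    print(y_in, "->", activation(y_in))
```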

How are the weights updated in the perceptron training rule?

- The weights are updated only when the calculated output differs from the target output. In that case, the new weight is calculated as the old weight plus alpha * T * x, where alpha is the learning rate, T is the target, and x is the input.
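The update step might look like the sketch below. The function name `update` is hypothetical, and the bias update `b + alpha * t` is an assumption: it treats the bias as a weight on a constant input of 1, which the summary does not spell out.

```python
def update(w, b, x, t, y, alpha=1):
    """Apply new = old + alpha * t * x, but only when output y != target t."""
    if y == t:
        return list(w), b  # correct classification: nothing changes
    new_w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
    new_b = b + alpha * t  # assumed: bias acts as a weight on input 1
    return new_w, new_b

# Illustrative call: weights (1, 1), bias 1, input (1, -1),
# target -1, calculated output 1 (a misclassification).
print(update([1, 1], 1, (1, -1), t=-1, y=1))  # ([0, 2], 0)
```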

What is the AND function in the context of the video?

- In the context of the video, the AND function is a logical operation where the output is high (1) only when both inputs (X1 and X2) are high (1). In all other cases, the target output is low, which is -1 for the bipolar inputs and targets used here.
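Written out as data, the bipolar AND training set has four patterns. The name `AND_BIPOLAR` and the order of the rows are assumptions made for illustration.

```python
# Each entry is ((x1, x2), target), using +1 for high and -1 for low.
AND_BIPOLAR = [
    ((1, 1), 1),
    ((1, -1), -1),
    ((-1, 1), -1),
    ((-1, -1), -1),
]

for (x1, x2), t in AND_BIPOLAR:
    # The target is high only when both inputs are high.
    expected = 1 if (x1 == 1 and x2 == 1) else -1
    print(f"x1={x1:>2}  x2={x2:>2}  target={t:>2}  consistent={t == expected}")
```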

How is the learning rate (alpha) used in the weight update process?

- The learning rate (alpha) is a scaling factor that determines the step size during the weight update process. When the calculated output differs from the target, alpha is multiplied by the target and the input, and this product is added to the old weight to get the new weight.

What is the purpose of initializing weights and bias to zero at the beginning of the perceptron training?

- Initializing the weights and bias to zero gives the training process a simple, neutral starting point. With every parameter at zero, the first net input is 0 regardless of the input pattern, so the initial values do not favor either class.

Can you explain the process of calculating the net input and output for the first epoch with the given inputs and weights?

- In the first epoch, each input pair (X1, X2) is presented in turn, starting from the initialized weights and bias (all set to zero). For each pair, the net input is calculated, and the activation function then determines the output based on whether the net input is greater than, equal to, or less than the threshold. If the calculated output does not match the target, the weights and bias are updated before the next pair is presented.
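One pass over the four AND patterns can be traced with the sketch below. It assumes a learning rate of 1, a threshold of 0, the update new = old + alpha * T * x, a bias update of `b + alpha * t`, and the pattern order shown; none of these values are confirmed by the summary, and the function names are hypothetical.

```python
def sign_with_zero(y_in, theta=0):
    """1 above the threshold, -1 below it, 0 exactly at it."""
    if y_in > theta:
        return 1
    if y_in < theta:
        return -1
    return 0

def run_epoch(samples, w, b, alpha=1, theta=0):
    """Present every sample once; return updated (w, b) and a per-sample log."""
    w = list(w)
    log = []
    for x, t in samples:
        y_in = b + sum(xi * wi for xi, wi in zip(x, w))
        y = sign_with_zero(y_in, theta)
        if y != t:  # update only on a mismatch
            w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
            b = b + alpha * t  # assumed bias update
        log.append((x, t, y_in, y, tuple(w), b))
    return w, b, log

AND_SAMPLES = [((1, 1), 1), ((1, -1), -1), ((-1, 1), -1), ((-1, -1), -1)]
w, b, log = run_epoch(AND_SAMPLES, w=[0, 0], b=0)
for row in log:
    print(row)  # (input, target, net input, output, weights after, bias after)
```

Under these assumptions the net inputs in the first epoch are 0, 1, 2, and -3, the first three patterns trigger updates, and the epoch ends with weights (1, 1) and bias -1.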

What does it mean when the calculated output matches the target output during the training process?

- When the calculated output matches the target output, it means that the perceptron has correctly classified the input for that particular training example, and there is no need to update the weights for that instance.

How many iterations or epochs are typically required for a perceptron to learn correctly?

- The number of iterations or epochs required for a perceptron to learn correctly depends on the complexity of the problem and the initial weights. In some cases, it may take only a few iterations, while in others, it may require many iterations or might not converge at all if the problem is not linearly separable.
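The stopping behavior can be illustrated with a small training loop that ends as soon as an epoch produces no updates, or gives up after a cap. The function `train_perceptron`, the cap `max_epochs=20`, the learning rate of 1, the threshold of 0, and the bias update are all assumptions made for this sketch. XOR is included only as a standard example of a problem that is not linearly separable.

```python
def train_perceptron(samples, alpha=1, theta=0, max_epochs=20):
    """Train from zero weights; return (w, b, epochs_run, converged)."""
    w = [0] * len(samples[0][0])
    b = 0
    for epoch in range(1, max_epochs + 1):
        updated = False
        for x, t in samples:
            y_in = b + sum(xi * wi for xi, wi in zip(x, w))
            y = 1 if y_in > theta else (-1 if y_in < theta else 0)
            if y != t:
                w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
                b = b + alpha * t  # assumed bias update
                updated = True
        if not updated:  # every pattern classified correctly
            return w, b, epoch, True
    return w, b, max_epochs, False

AND_SAMPLES = [((1, 1), 1), ((1, -1), -1), ((-1, 1), -1), ((-1, -1), -1)]
XOR_SAMPLES = [((1, 1), -1), ((1, -1), 1), ((-1, 1), 1), ((-1, -1), -1)]

print(train_perceptron(AND_SAMPLES))     # ([1, 1], -1, 2, True)
print(train_perceptron(XOR_SAMPLES)[3])  # False: no error-free epoch exists
```

In this sketch AND needs two epochs: the first performs the updates and the second confirms that no pattern is misclassified. The epoch cap is a practical guard, since the loop would otherwise never terminate on a problem like XOR.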

Upgrade NowBrowse More Related Video

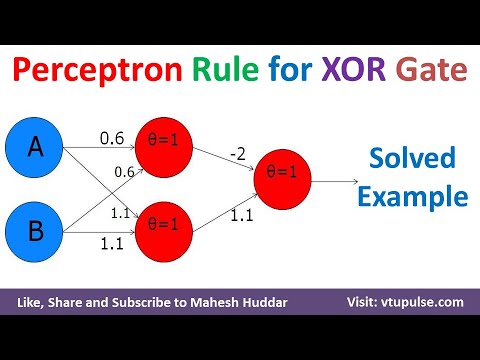

Perceptron Rule to design XOR Logic Gate Solved Example ANN Machine Learning by Mahesh Huddar

Perceptron Training

Logistic Regression Part 2 | Perceptron Trick Code

Solved Example Multi-Layer Perceptron Learning | Back Propagation Solved Example by Mahesh Huddar

Redes Neurais Artificiais #02: Perceptron - Part I

Supervised Learning: Crash Course AI #2

5.0 / 5 (0 votes)