Neural Networks Demystified [Part 5: Numerical Gradient Checking]

Summary

TLDR: This script discusses the importance of accurately calculating gradients in neural networks to avoid subtle errors that degrade performance. It suggests using numerical gradient checking as a testing method, akin to unit testing in software development. The script explains derivatives, their calculation using the definition, and how to apply this to a simple function and a neural network. It also introduces a method to quantify the similarity between computed and numerical gradients, ensuring gradient computations are correct before training the network.

Takeaways

- 🧮 Calculus is used to find the rate of change of cost with respect to parameters in neural networks.

- 🚩 Errors in gradient implementation can be subtle and hard to detect, leading to significant issues.

- 🔍 Testing gradient computation is crucial, similar to unit testing in software development.

- 📚 Derivatives are fundamental to understanding the slope or steepness of a function.

- 📐 The definition of a derivative is the ratio of the change in y to the change in x, as the change in x becomes infinitely small.

- 💻 Computers handle numerical estimates of derivatives effectively, despite not dealing well with infinitesimals.

- 📈 Numerical gradient checking involves comparing computed gradients with those estimated using a small epsilon.

- 🔢 For a simple function like x^2, numerical gradient checking can validate the correctness of symbolic derivatives.

- 🤖 The same numerical approach can be applied to neural networks to evaluate the gradient of the cost function.

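- 📊 Comparing the numerical gradient vector with the gradient vector computed by the network's own code helps confirm the gradient calculations are correct; the norm of their difference divided by the norm of their sum should be on the order of 10^-8 or less.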

Q & A

What is the main concern when implementing calculus for finding the rate of change of cost with respect to parameters in a neural network?

-The main concern is the potential for making mistakes in the calculus steps, which can be hard to detect since the code may still appear to function correctly even with incorrect gradient implementations.

Why are big, in-your-face errors considered less problematic than subtle errors when building complex systems?

-Big errors are immediately noticeable and it's clear they need to be fixed, whereas subtle errors can hide in the code, causing slow degradation of performance and wasting hours of debugging time.

What is the suggested method to test the gradient computation part of neural network code?

-The suggested method is to perform numerical gradient checking, similar to unit testing in software development, to ensure the gradients are computed and coded correctly.

What is the definition of a derivative and how does it relate to the slope of a function?

-The derivative is the slope of a function at a specific point. It is defined as the limit of the ratio of the change in the function's output to the change in its input, as the change in the input approaches zero.
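
For reference, the limit definition described in this answer can be written out as follows. This is the standard textbook form; the symbols f, x, and Δx are notation chosen here, not taken from the video.

$$
f'(x) = \lim_{\Delta x \to 0} \frac{f(x + \Delta x) - f(x)}{\Delta x}
$$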

Why is it important to understand the definition of a derivative even after learning calculus shortcuts like the power rule?

-Understanding the definition of a derivative provides a deeper insight into what a derivative represents and is crucial for solving problems where numerical methods are required, such as gradient checking.

How does the concept of 'epsilon' play a role in numerical gradient checking?

-Epsilon is a small value used to perturb the input around a test point to estimate the derivative numerically. It helps in calculating the slope of the function at that point by finding the function values just above and below the test point.
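
Written as a formula, the estimate described here (function values just above and just below the test point) is the two-sided, or central, difference. The notation below is an assumption for illustration, with ε standing for the small perturbation:

$$
f'(x) \approx \frac{f(x + \epsilon) - f(x - \epsilon)}{2\epsilon}
$$

A small but not vanishing ε, for example 10^-4, is a common choice, since a computer cannot work with a truly infinitesimal step.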

What is the symbolic derivative of the function x squared?

-The symbolic derivative of x squared is 2x, which is derived using the power rule of differentiation.
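
As a minimal sketch of checking this result numerically, the snippet below compares the symbolic derivative 2x against a two-sided epsilon estimate at one test point. The function names, the test point 1.5, and the value of epsilon are illustrative assumptions, not values from the video.

```python
def f(x):
    """The example function from the text: f(x) = x^2."""
    return x ** 2


def numerical_derivative(func, x, epsilon=1e-4):
    """Estimate the slope of func at x from values just above and below x.

    epsilon=1e-4 is an assumed step size for this sketch.
    """
    return (func(x + epsilon) - func(x - epsilon)) / (2 * epsilon)


x = 1.5                       # arbitrary test point
symbolic = 2 * x              # power rule: d/dx x^2 = 2x
numeric = numerical_derivative(f, x)

# The two values should agree to many decimal places.
print("symbolic:", symbolic)
print("numeric: ", numeric)
```

If the two numbers disagree noticeably, the symbolic derivative (or its implementation) is the first place to look.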

How can numerical gradient checking be applied to evaluate the gradient of a neural network's cost function?

-By perturbing each weight with a small epsilon, computing the cost function for the perturbed values, and calculating the slope between these values, one can numerically estimate the gradient of the cost function.
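
The procedure in this answer can be sketched as a loop over the parameters. The code below is an illustration under stated assumptions: `toy_cost` is a hypothetical stand-in for a network's cost function, chosen because its exact gradient is easy to write by hand, and all names are invented for this example rather than taken from the video's code.

```python
def numerical_gradient(cost, params, epsilon=1e-4):
    """Estimate d(cost)/d(params[i]) for each i by perturbing one entry at a time."""
    grad = []
    for i in range(len(params)):
        bumped_up = list(params)
        bumped_down = list(params)
        bumped_up[i] += epsilon      # weight i nudged up by epsilon
        bumped_down[i] -= epsilon    # weight i nudged down by epsilon
        slope = (cost(bumped_up) - cost(bumped_down)) / (2 * epsilon)
        grad.append(slope)
    return grad


def toy_cost(w):
    """Hypothetical cost: J(w) = 0.5 * sum(w_i^2) + w_0 * w_1."""
    return 0.5 * sum(v * v for v in w) + w[0] * w[1]


def toy_cost_gradient(w):
    """Hand-derived gradient of toy_cost, playing the role of the coded gradient."""
    g = list(w)        # derivative of 0.5 * w_i^2 is w_i
    g[0] += w[1]       # cross term w_0 * w_1 contributes w_1 to entry 0
    g[1] += w[0]       # and w_0 to entry 1
    return g


weights = [0.3, -1.2, 2.0]
numgrad = numerical_gradient(toy_cost, weights)
grad = toy_cost_gradient(weights)
print("numerical:", numgrad)
print("analytic: ", grad)
```

For a real network the same loop applies to the flattened weight vector, at the price of two cost evaluations per weight, which is why this is used as a check rather than as the training gradient.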

What is meant by 'perturb' in the context of gradient checking?

-To 'perturb' in gradient checking means to slightly alter the weights by adding or subtracting a small epsilon to compute the cost function and estimate the gradient.

How can you quantify the similarity between the numerical gradient vector and the official gradient calculation?

-The similarity can be quantified by dividing the norm of the difference between the two vectors by the norm of their sum. A result on the order of 10^-8 or less indicates a correct gradient computation.
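
A minimal sketch of this comparison, using plain Python lists: the function name `gradient_check_ratio` and the example vectors are made up for illustration, and the deliberately wrong gradient shows what a failed check looks like.

```python
import math


def norm(v):
    """Euclidean length of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def gradient_check_ratio(grad, numgrad):
    """Norm of the difference divided by the norm of the sum.

    Assumes the two vectors are not both zero, otherwise the
    denominator vanishes.
    """
    diff = [a - b for a, b in zip(grad, numgrad)]
    total = [a + b for a, b in zip(grad, numgrad)]
    return norm(diff) / norm(total)


# Made-up vectors: a numerical estimate, a matching gradient, and a buggy one.
numgrad = [0.5 + 1e-10, -1.0 - 1e-10, 2.0 + 1e-10]
grad_ok = [0.5, -1.0, 2.0]
grad_buggy = [0.5, 1.0, 2.0]   # sign error in the second component

good = gradient_check_ratio(grad_ok, numgrad)
bad = gradient_check_ratio(grad_buggy, numgrad)
print("matching gradient ratio:", good)
print("buggy gradient ratio:   ", bad)
```

A matching gradient lands far below the 10^-8 threshold mentioned above, while a single sign error pushes the ratio many orders of magnitude higher.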

What is the next step after verifying the gradient computations in a neural network?

-The next step after verifying the gradient computations is to train the neural network using the confirmed correct gradients.


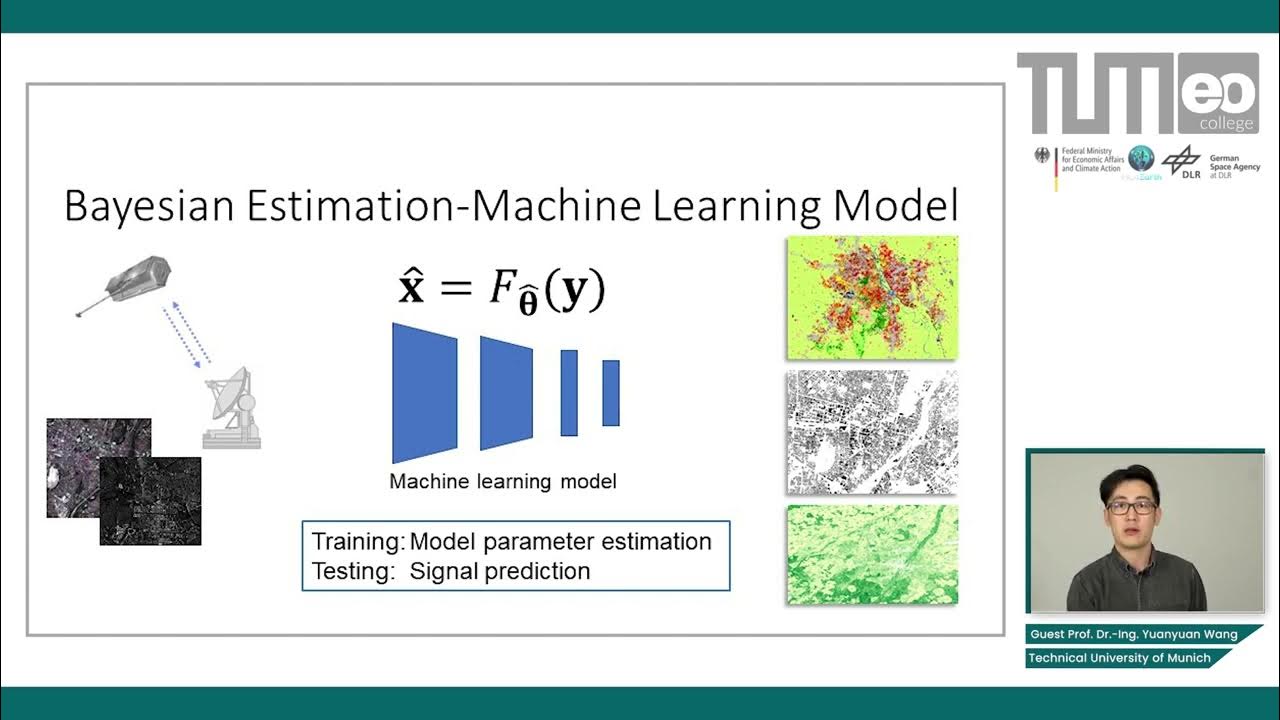

Bayesian Estimation in Machine Learning - Training and Uncertainties

Backpropagation in Neural Networks | Back Propagation Algorithm with Examples | Simplilearn

Bayesian Estimation in Machine Learning - Maximum Likelihood and Maximum a Posteriori Estimators

Solved Example Multi-Layer Perceptron Learning | Back Propagation Solved Example by Mahesh Huddar

Mihail Bogojeski - Message passing neural networks for atomistic systems: Molecules - IPAM at UCLA

Why Neural Networks can learn (almost) anything

5.0 / 5 (0 votes)