Solved Example Multi-Layer Perceptron Learning | Back Propagation Solved Example by Mahesh Huddar

Summary

TLDR: In this video, the Multi-Layer Perceptron (MLP) is explained through a simple worked example. The video walks through training an MLP step by step. It first computes the inputs and outputs of each layer, the net inputs, the sigmoid activations, and the output error. It then propagates that error backward and updates the weights and biases, repeating the process to reduce the error. The emphasis is on the practical calculations and on how updating weights and biases improves the network's predictions.

Takeaways

- 😀 The video discusses the working of a Multi-Layer Perceptron (MLP) network with a simple example.

- 😀 The MLP network consists of an input layer with four neurons, one hidden layer with two neurons, and an output layer with one neuron.

- 😀 Inputs and weights are provided, and the goal is to train the network by updating the weights and biases.

- 😀 The first step is to compute the input and output of the input-layer neurons. These neurons apply no activation, so each one's output equals its input.

- 😀 Net input and output for hidden layer neurons (X5 and X6) as well as the output layer neuron (X7) are calculated based on the inputs, weights, and biases.

- 😀 The net input at each neuron is calculated by multiplying each input by its corresponding weight, summing the products, and adding the bias term.

- 😀 After calculating net inputs, the output of each neuron is obtained using the logistic sigmoid activation function.

- 😀 The error at the output layer is computed as the difference between the desired output and the calculated output.

- 😀 If the error is not acceptably small, it is propagated backward through the network and used to update the weights and biases, reducing the error.

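- 😀 Each weight update uses three quantities: the learning rate, the error term of the neuron the weight feeds into, and the output of the neuron in the previous layer. Bias updates use only the learning rate and the error term.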

- 😀 The process is repeated iteratively to reduce the error further, and after each iteration, the error value is checked to determine if training should stop.
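The training loop described in these takeaways can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the video's exact computation. The inputs, target, initial weights and biases, learning rate (0.8), and stopping threshold (0.1) are hypothetical. The bias input X0 is taken as 1, and the error terms follow the standard sigmoid backpropagation rules. Names such as `train_step` and `params` are illustrative.

```python
import math

def sigmoid(z):
    """Logistic sigmoid activation: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical training sample, target and learning rate (not the video's values).
x = [1.0, 0.0, 1.0, 0.0]   # X1..X4; input neurons pass these through unchanged
target = 1.0               # desired output of X7
lr = 0.8                   # learning rate

# Hypothetical initial parameters of the 4-2-1 network.
params = {
    # w_hidden[j][i]: weight from input X(i+1) to hidden neuron j (j=0 is X5, j=1 is X6)
    "w_hidden": [[0.3, -0.2, 0.2, 0.1],
                 [-0.1, 0.4, 0.1, -0.3]],
    "theta_hidden": [0.2, -0.1],   # Theta5, Theta6 (bias input X0 = 1)
    "w_out": [-0.3, 0.2],          # W57, W67
    "theta_out": 0.1,              # Theta7
}

def train_step(p, x, target, lr):
    """One forward pass plus one backpropagation update; returns Desired - Calculated."""
    # Forward pass: net input = weighted sum + bias, output = sigmoid(net input).
    o_hidden = [sigmoid(sum(w * xi for w, xi in zip(p["w_hidden"][j], x)) + p["theta_hidden"][j])
                for j in range(2)]
    o_out = sigmoid(sum(w * o for w, o in zip(p["w_out"], o_hidden)) + p["theta_out"])
    error = target - o_out

    # Backward pass: error terms include the sigmoid derivative O * (1 - O).
    # Hidden error terms use the output weights from before this step's update.
    delta_out = o_out * (1.0 - o_out) * error
    delta_hidden = [o_hidden[j] * (1.0 - o_hidden[j]) * p["w_out"][j] * delta_out
                    for j in range(2)]

    # Updates: weight += lr * (error term of receiving neuron) * (output of sending neuron).
    for j in range(2):
        p["w_out"][j] += lr * delta_out * o_hidden[j]
        for i in range(len(x)):
            p["w_hidden"][j][i] += lr * delta_hidden[j] * x[i]
        p["theta_hidden"][j] += lr * delta_hidden[j]   # bias input X0 = 1
    p["theta_out"] += lr * delta_out
    return error

# Repeat until the error is small enough (hypothetical threshold) or a cap is reached.
errors = []
for iteration in range(1, 1001):
    errors.append(abs(train_step(params, x, target, lr)))
    if errors[-1] < 0.1:
        break
print(f"stopped after {iteration} iterations, |error| = {errors[-1]:.4f}")
```

All error terms are computed before any weight changes. This keeps each step consistent, because the hidden error terms come from the same forward pass as the output error.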

Q & A

What is a multi-layer perceptron (MLP)?

-A multi-layer perceptron (MLP) is a type of artificial neural network that consists of multiple layers: an input layer, at least one hidden layer, and an output layer. It is commonly used for supervised learning tasks such as classification and regression.

How many neurons are there in the input, hidden, and output layers in this example?

-In this example, the input layer has four neurons (X1, X2, X3, X4), the hidden layer has two neurons (X5, X6), and the output layer has one neuron (X7).

What is the significance of the bias in a multi-layer perceptron?

-The bias in a multi-layer perceptron acts as an extra input X0, conventionally fixed at 1, whose weight (Theta) is learned like any other weight. The term X0*Theta is added to the weighted sum of inputs before the activation function is applied, which shifts the point at which the neuron activates. This lets the neuron produce a useful output even when all input values are zero.
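The following small sketch of a single sigmoid neuron shows why the bias matters. It assumes the bias is modelled as an extra input X0 = 1 with weight theta, and `neuron_output` and the weight values are hypothetical. With every input at zero, the bias is the only thing that can move the output away from sigmoid(0) = 0.5.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, theta):
    """Sigmoid neuron: the bias input X0 = 1 contributes 1 * theta to the net input."""
    net = sum(x * w for x, w in zip(inputs, weights)) + 1.0 * theta
    return sigmoid(net)

zeros = [0.0, 0.0, 0.0, 0.0]
weights = [0.5, -0.4, 0.3, 0.2]                  # hypothetical weights
print(neuron_output(zeros, weights, theta=0.0))  # 0.5: without a bias the output is fixed
print(neuron_output(zeros, weights, theta=2.0))  # about 0.881: the bias alone shifts it
```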

What is the activation function used in this example to calculate the output of neurons?

-The activation function used in this example is the sigmoid function, which is defined as 1 / (1 + e^(-x)), where x is the net input to the neuron. This function outputs values between 0 and 1.
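The sigmoid and its derivative can be written in a few lines of Python. The sigmoid's derivative can be computed from its output alone: sigma'(x) = sigma(x) * (1 - sigma(x)). This is the source of the Output * (1 - Output) factor in the error formulas. The sketch below checks this identity against a numerical derivative. The function names are illustrative.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x)), with output strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative_from_output(o):
    """Derivative of the sigmoid expressed through its output o = sigmoid(x)."""
    return o * (1.0 - o)

h = 1e-6  # step size for the central finite difference
for x in (-4.0, 0.0, 4.0):
    o = sigmoid(x)
    # Central finite difference as an independent check of the formula above.
    numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
    print(f"x={x:+.1f}  sigmoid={o:.4f}  o*(1-o)={sigmoid_derivative_from_output(o):.4f}  numeric={numeric:.4f}")
```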

How do you calculate the net input to a neuron in a multi-layer perceptron?

-The net input to a neuron is the weighted sum of its inputs plus the bias term. For example, for the hidden-layer neuron X5, the net input is X1*W15 + X2*W25 + X3*W35 + X4*W45 + X0*Theta5. Here Wi5 is the weight from input Xi to X5, Theta5 is the bias of X5, and X0 is the bias input (conventionally 1).
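The net-input formula for X5 translates directly into code. This sketch assumes hypothetical values for X1..X4, W15..W45, and Theta5 (not the video's numbers), with a bias input X0 = 1. `net_input` is an illustrative helper name.

```python
import math

def net_input(inputs, weights, theta, x0=1.0):
    """Weighted sum of the inputs plus the bias term X0 * Theta."""
    return sum(x * w for x, w in zip(inputs, weights)) + x0 * theta

# Hypothetical values for X1..X4, W15..W45 and Theta5.
x = [1.0, 0.0, 1.0, 0.0]
w_to_x5 = [0.3, -0.2, 0.2, 0.1]
theta5 = 0.2

net5 = net_input(x, w_to_x5, theta5)   # 1*0.3 + 0*(-0.2) + 1*0.2 + 0*0.1 + 1*0.2
o5 = 1.0 / (1.0 + math.exp(-net5))     # output of X5 through the sigmoid
print(net5, o5)
```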

What is the error at the output layer and how is it calculated?

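-The error at the output layer is the difference between the desired output and the actual output of the network: Error = Desired Output - Calculated Output. For the backpropagation step, this difference is typically multiplied by the derivative of the sigmoid. This gives the output error term Error7 = O7 * (1 - O7) * (Desired Output - O7), where O7 is the calculated output of X7.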
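The two quantities can be computed side by side. This sketch assumes a sigmoid output neuron and hypothetical values for the desired and calculated outputs of X7. The function names are illustrative.

```python
def output_error(desired, calculated):
    """Plain error used to judge convergence: Desired Output - Calculated Output."""
    return desired - calculated

def output_error_term(desired, calculated):
    """Backpropagation error term for a sigmoid output neuron: O * (1 - O) * (T - O)."""
    return calculated * (1.0 - calculated) * (desired - calculated)

# Hypothetical desired and calculated outputs for X7.
t7, o7 = 1.0, 0.5
print(output_error(t7, o7))        # 0.5
print(output_error_term(t7, o7))   # 0.5 * 0.5 * 0.5 = 0.125
```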

Why do we propagate errors backward in the network?

-We propagate errors backward (backpropagation) to update the weights and biases in the network. This helps reduce the error over time, improving the model's predictions by adjusting the weights to better align with the desired output.

How is the error at the hidden layer neurons calculated?

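-The error term of a hidden-layer neuron is Error = Output * (1 - Output) * Sum of (Weight * Error at Output Layer). The sum runs over the output neurons that the hidden neuron connects to, and each weight is the weight on that connection. In this network each hidden neuron feeds only X7, so, for example, Error5 = O5 * (1 - O5) * W57 * Error7. This is how the output error is distributed back to the hidden neurons.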
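The hidden-layer formula can be sketched as a function over the neurons that a hidden unit feeds into. The values below (O5, W57, Error7) are hypothetical, and `hidden_error_term` is an illustrative name.

```python
def hidden_error_term(o_hidden, downstream_weights, downstream_errors):
    """Error term of a hidden sigmoid neuron: O * (1 - O) * sum(W_jk * Error_k)."""
    back = sum(w * e for w, e in zip(downstream_weights, downstream_errors))
    return o_hidden * (1.0 - o_hidden) * back

# Hypothetical values: X5 feeds only X7, so the sum has the single term W57 * Error7.
o5 = 0.5
w57 = -0.4
error7 = 0.125
print(hidden_error_term(o5, [w57], [error7]))   # 0.5 * 0.5 * (-0.4 * 0.125) = -0.0125
```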

What is the role of the learning rate in the weight update process?

-The learning rate determines the size of the step taken when updating the weights. It controls how much the weights are adjusted in response to the error at each iteration. A higher learning rate leads to larger adjustments, while a lower learning rate results in smaller updates.

How are the weights updated during training?

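-Each weight is updated using New Weight = Old Weight + (Learning Rate * Error * Output). Here, Error is the error term of the neuron the weight feeds into, and Output is the output of the neuron it comes from. For example, W57 is updated using Error7 and O5. Biases follow the same rule with Output replaced by the bias input X0 = 1: New Theta = Old Theta + (Learning Rate * Error). Because the error terms already carry the sign of the error, the update moves each weight in the direction that reduces the error.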
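The update rules can be sketched as two small functions. This assumes hypothetical values for the learning rate, Error7, and O5, and treats the bias as a weight on a constant input X0 = 1. The function names are illustrative.

```python
def update_weight(w_old, lr, error_term_receiver, output_sender):
    """New Weight = Old Weight + Learning Rate * Error (receiving neuron) * Output (sending neuron)."""
    return w_old + lr * error_term_receiver * output_sender

def update_bias(theta_old, lr, error_term):
    """Bias update: same rule with the sender's output replaced by the bias input X0 = 1."""
    return theta_old + lr * error_term * 1.0

# Hypothetical values for updating W57 and Theta7.
lr = 0.9
error7 = 0.125   # error term of X7
o5 = 0.5         # output of X5
print(update_weight(-0.4, lr, error7, o5))   # -0.4 + 0.9 * 0.125 * 0.5
print(update_bias(0.1, lr, error7))          # 0.1 + 0.9 * 0.125
```

The change is proportional to the learning rate, so doubling `lr` doubles the size of every adjustment. This is the trade-off described in the learning-rate answer.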

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Feedforward and Feedback Artificial Neural Networks

Taxonomy of Neural Network

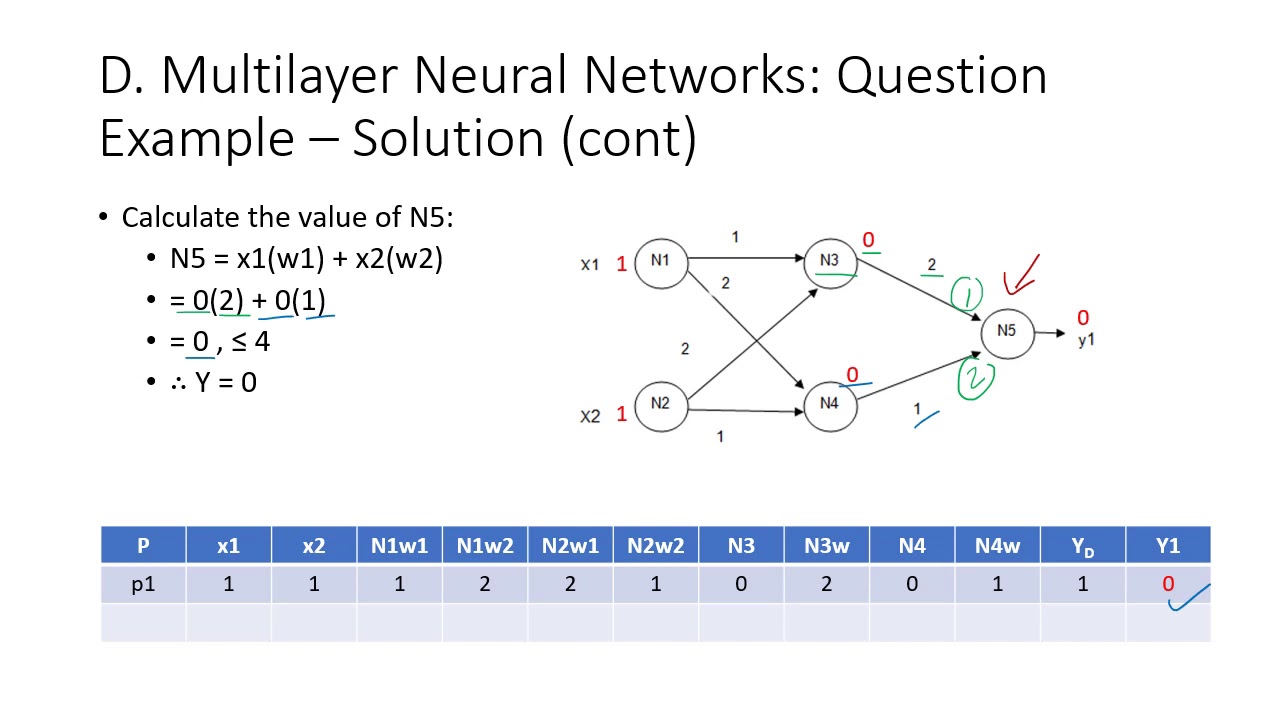

Topic 3D - Multilayer Neural Networks

Redes Neurais Artificiais #02: Perceptron - Part I

Jaringan Syaraf Tiruan [1] : Konsep Dasar JST

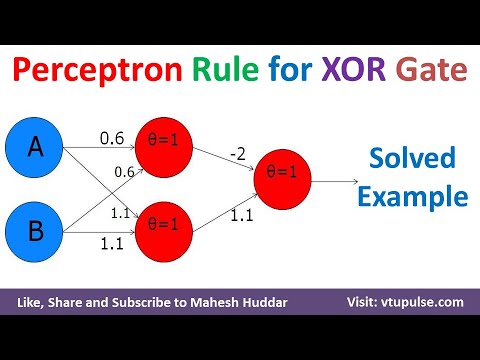

Perceptron Rule to design XOR Logic Gate Solved Example ANN Machine Learning by Mahesh Huddar

5.0 / 5 (0 votes)