100+ Docker Concepts you Need to Know

Summary

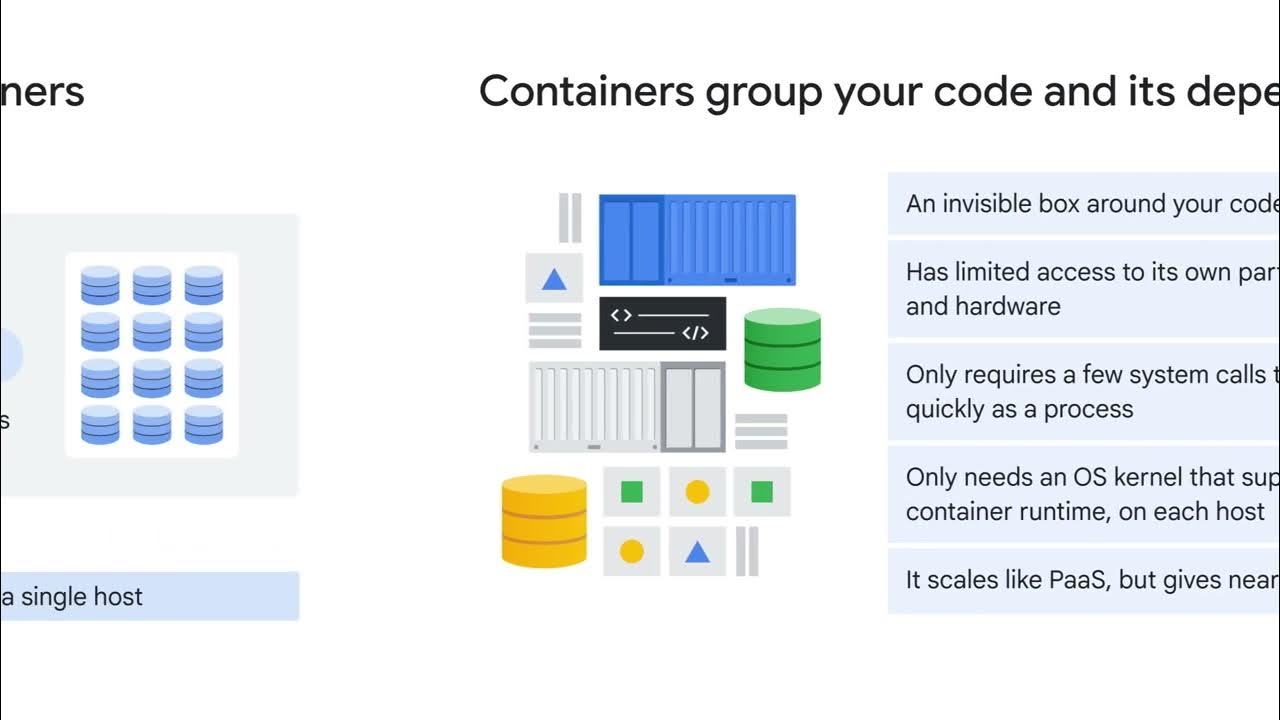

TL;DR: Docker 101 introduces containerization as a solution to common software deployment problems, such as 'it works on my machine' and scaling issues in the cloud. The video covers the basics of computer components, operating systems, networking, and scaling strategies. It explains how Docker provides OS-level virtualization, letting applications share the host's kernel and use resources dynamically. The video then walks through writing a Dockerfile, building an image, and running that image as a container, emphasizing Docker's portability and scalability. It also touches on Docker Compose for multi-container applications and introduces orchestration tools like Kubernetes for large-scale deployments, concluding with a nod to Docker's role in simplifying complex infrastructure management.

Takeaways

- 📦 Docker is a powerful tool for containerization, which helps solve the 'it works on my machine' problem and improves scalability in the cloud.

- 💻 The basic components of a computer are a CPU, RAM, and a disk, with the operating system's kernel providing the foundation on which software applications run.

- 🌐 Networking plays a crucial role in modern software delivery: clients receive data from servers, and those servers face challenges as the number of users grows.

- ⚙️ Scaling infrastructure can be done vertically by increasing server resources or horizontally by distributing the load across multiple servers or microservices.

- 🔧 Virtual machines use a hypervisor to run multiple operating systems on a single machine, but each VM's CPU and memory are allocated up front; Docker is more efficient because containers share the host kernel and use resources dynamically.

- 🛠️ Docker uses a Dockerfile as a blueprint to configure the environment for an application; the Dockerfile is then built into an image containing the base OS files, dependencies, and code.

- 📜 The Dockerfile includes instructions like FROM, WORKDIR, RUN, COPY, ENV, EXPOSE, USER, and CMD to set up the container environment.

- 🏷️ A Dockerfile can also add labels and health checks, and volumes provide persistent storage that can be shared across containers.

- 🔄 Docker images are built in layers; when something changes, only the affected layer and the layers after it are rebuilt, which speeds up the developer workflow.

- 🔍 Docker Desktop provides tools to inspect images, identify security vulnerabilities, and manage running containers with commands like docker run, docker stop, and docker kill.

- 🌐 Beyond local development, Docker images can be pushed to remote registries and run on various cloud platforms or serverless environments.

- 🔄 Docker Compose simplifies the management of multi-container applications by defining and running multiple services with a single command.

- 🤖 For large-scale deployments, orchestration tools like Kubernetes are used to manage and scale containers across multiple nodes and machines.
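
The layer-caching takeaway can be illustrated with a small model. This is a minimal sketch, not Docker's actual cache implementation: it assumes each build step's cache key is a hash of the step text chained to the key of the layer below it, and the COPY strings carry a made-up checksum of the copied files, since Docker's cache for COPY depends on file contents. The image name and commands are hypothetical.

```python
import hashlib

def layer_keys(instructions):
    """Give each instruction a cache key chained to the key of the layer below it."""
    keys, parent = [], ""
    for instr in instructions:
        parent = hashlib.sha256((parent + "\n" + instr).encode()).hexdigest()
        keys.append(parent)
    return keys

def build(instructions, cache):
    """Simulate a build: reuse cached layers, 'rebuild' the rest, return rebuilt step indexes."""
    rebuilt = []
    for step, key in enumerate(layer_keys(instructions)):
        if key not in cache:
            cache.add(key)  # pretend the step ran and its layer was stored
            rebuilt.append(step)
    return rebuilt

cache = set()
v1 = [
    "FROM ubuntu:20.04",
    "RUN apt-get update && apt-get install -y nodejs",
    "COPY . /app (files checksum: aaa)",  # hypothetical checksum of the source files
    'CMD ["node", "/app/server.js"]',
]
v2 = v1[:2] + ["COPY . /app (files checksum: bbb)"] + v1[3:]  # source code edited

print(build(v1, cache))  # → [0, 1, 2, 3]  first build runs every step
print(build(v2, cache))  # → [2, 3]  only COPY and the steps after it rerun
```

Because each key depends on its parent's key, a change invalidates that layer and everything after it. This is why Dockerfiles usually put rarely changing steps (like installing dependencies) before frequently changing ones (like copying source code).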

Q & A

What is the main problem that containerization aims to solve?

-Containerization addresses software compatibility and scalability problems, such as 'it works on my machine' during local development and difficulty scaling an architecture in the cloud.

What are the three main components of a computer as mentioned in the script?

-The three main components of a computer are the CPU, which performs calculations; random access memory (RAM), which holds the applications currently in use; and a disk, which stores data that might be used later.

What does the kernel do in an operating system?

-The kernel in an operating system sits on top of the bare metal hardware and provides a layer that allows software applications to run on the system.

What are the two ways a server can scale?

-A server can scale either vertically by increasing its RAM and CPU or horizontally by distributing the code to multiple smaller servers, often broken down into microservices.
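
Horizontal scaling needs something in front of the servers to spread incoming traffic. The source does not name a strategy; the sketch below assumes round-robin load balancing, one common choice, and the server names are hypothetical.

```python
from collections import Counter
from itertools import cycle

def round_robin(requests, servers):
    """Assign each request to the next server in turn."""
    pool = cycle(servers)
    return [(request, next(pool)) for request in requests]

servers = ["server-a", "server-b", "server-c"]  # hypothetical horizontally scaled replicas
assignments = round_robin(range(9), servers)
print(Counter(server for _, server in assignments))
# → Counter({'server-a': 3, 'server-b': 3, 'server-c': 3})
```

Adding capacity horizontally means appending another name to the server list. Vertical scaling instead makes the single machine bigger, so its ceiling is the largest machine available.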

What is a Dockerfile and what is its purpose?

-A Dockerfile is a blueprint: a collection of instructions that configure the environment an application runs in. It is used to build an image, which is a template for running the application.
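
To make the blueprint concrete, the sketch below generates Dockerfile text from (instruction, argument) pairs using the instructions listed in the takeaways. The app is hypothetical: a Node.js server in `server.js` listening on port 8080, built on the `node:20-alpine` base image (an assumption, not from the source).

```python
# Hypothetical Node.js app that listens on port 8080.
INSTRUCTIONS = [
    ("FROM", "node:20-alpine"),        # base image
    ("WORKDIR", "/app"),               # working directory for the following steps
    ("COPY", "package*.json ./"),      # dependency manifests first, for better layer caching
    ("RUN", "npm install"),            # install dependencies at build time
    ("COPY", ". ."),                   # then the rest of the source code
    ("ENV", "PORT=8080"),              # environment variable available at runtime
    ("EXPOSE", "8080"),                # document the port the app listens on
    ("USER", "node"),                  # run as a non-root user
    ("CMD", '["node", "server.js"]'),  # default command when a container starts
]

def render_dockerfile(instructions):
    """Turn (instruction, argument) pairs into Dockerfile text."""
    # Simplification: real Dockerfiles may put ARG before FROM; this sketch requires FROM first.
    if not instructions or instructions[0][0] != "FROM":
        raise ValueError("expected FROM as the first instruction")
    return "\n".join(f"{op} {arg}" for op, arg in instructions) + "\n"

print(render_dockerfile(INSTRUCTIONS), end="")
```

Saved as `Dockerfile`, the output could be built with `docker build -t myapp .` and run with `docker run -p 8080:8080 myapp` (the tag `myapp` is hypothetical). EXPOSE only documents the port; the `-p` flag is what publishes it on the host.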

How does Docker enable applications to share the same host operating system kernel?

-Docker runs a daemon, a persistent background process, that provides OS-level virtualization. Applications in containers share the host operating system's kernel and use resources dynamically, based on their needs.
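
One way to observe the shared kernel is to print the kernel release from inside and outside a container. The sketch below assumes it is saved as a hypothetical `kernel_info.py`. On a Linux host, running it directly and then with `docker run --rm -v "$PWD":/src python:3 python /src/kernel_info.py` should report the same kernel release, even though the container's distribution differs. On Docker Desktop for macOS or Windows, containers share the kernel of Docker Desktop's Linux VM instead.

```python
import platform

def kernel_info():
    """Report the OS name and kernel release this process is running on."""
    return {"os": platform.system(), "kernel_release": platform.release()}

if __name__ == "__main__":
    print(kernel_info())
```

A virtual machine would report its own guest kernel here, which is the key difference from a container.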

What is the significance of the 'FROM' instruction in a Dockerfile?

-The 'FROM' instruction is usually the first in a Dockerfile and points to a base image, often a Linux distribution, which serves as the starting point for building the application environment.
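
FROM takes an image reference such as `ubuntu:20.04`. When no tag is given, Docker defaults to `latest`. The parser below is a simplified sketch, not Docker's full reference grammar: it does not validate names or expand short names like `ubuntu` to their full registry path. The `localhost:5000/team/app` reference is a hypothetical private-registry image.

```python
def parse_image_ref(ref):
    """Split an image reference into (name, tag, digest). Simplified; no validation."""
    name, digest = ref, None
    if "@" in name:
        name, digest = name.split("@", 1)
    # A colon after the last slash starts the tag; an earlier colon is a registry port.
    last_colon, last_slash = name.rfind(":"), name.rfind("/")
    tag = None
    if last_colon > last_slash:
        name, tag = name[:last_colon], name[last_colon + 1:]
    if tag is None and digest is None:
        tag = "latest"  # default when neither a tag nor a digest is given
    return name, tag, digest

for ref in ["ubuntu:20.04", "node", "localhost:5000/team/app"]:
    print(ref, "->", parse_image_ref(ref))
```

Pinning an explicit tag (or digest) in FROM makes builds more reproducible than relying on `latest`, which can point to a different image over time.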

What is the role of the 'RUN' instruction in a Dockerfile?

-The 'RUN' instruction executes a command while the image is being built, much like running it from the command line, and saves the result as a new image layer. It is commonly used to install dependencies.
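
RUN accepts two forms. In the shell form (`RUN npm install`), the string is passed to a shell, `/bin/sh -c` on Linux images. In the exec form (`RUN ["npm", "install"]`), a JSON array is executed directly without a shell. The sketch below maps a RUN argument to the argv it would execute. As a simplifying assumption, it treats anything that is not a valid JSON array of strings as shell form.

```python
import json

def run_argv(args):
    """Return the argv a RUN instruction would execute on a Linux image."""
    text = args.strip()
    if text.startswith("["):
        try:
            argv = json.loads(text)
        except json.JSONDecodeError:
            argv = None
        if isinstance(argv, list) and all(isinstance(a, str) for a in argv):
            return argv  # exec form: run the program directly, no shell
    return ["/bin/sh", "-c", text]  # shell form: the shell handles &&, pipes, variables

print(run_argv("apt-get update && apt-get install -y curl"))
print(run_argv('["npm", "install"]'))
```

The shell form is what makes chaining commands with `&&` work in a single RUN, which also keeps related steps in one layer.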

What does 'Docker push' do and why is it important for cloud deployment?

-Docker push uploads the Docker image to a remote registry, making it accessible for deployment on cloud platforms like AWS or serverless platforms like Google Cloud Run.
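
In practice, an image is tagged with the registry's address before it is pushed, e.g. `docker tag myapp <registry>/myapp:1.0` followed by `docker push <registry>/myapp:1.0` (`myapp` and `<registry>` are placeholders). Registries store layers by content digest, so a push only needs to upload layers the registry does not already have. The sketch below models that idea. It is a simplification: real pushes also upload a manifest listing the layers, and the layer contents here are hypothetical.

```python
import hashlib

def digest(blob):
    """Content address of a layer, in the sha256:<hex> style registries use."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()

def push(layers, registry):
    """Upload only the layers the registry lacks; return the uploaded digests."""
    uploaded = []
    for blob in layers:
        d = digest(blob)
        if d not in registry:  # real clients first ask the registry whether the blob exists
            registry[d] = blob
            uploaded.append(d)
    return uploaded

registry = {}                            # stands in for a remote registry
v1 = [b"base OS layer", b"app code v1"]  # hypothetical layer contents
v2 = [b"base OS layer", b"app code v2"]
print(len(push(v1, registry)))  # → 2
print(len(push(v2, registry)))  # → 1  (the base layer is already stored)
```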

What is Docker Compose and how does it help with multi-container applications?

-Docker Compose is a tool for managing multi-container applications. It lets you define multiple services and their Docker images in a single YAML file, then start all of their containers with the 'up' command and stop and remove them with the 'down' command.
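
When services declare `depends_on` in the Compose file, Compose starts a service's dependencies before the service itself. The sketch below models that ordering as a topological sort using Python's `graphlib` (3.9+). The service names are hypothetical, and for shutdown the sketch simply reverses the start order.

```python
from graphlib import TopologicalSorter

# Hypothetical services: each maps to the services it depends on (like depends_on).
SERVICES = {
    "web": {"api"},
    "api": {"db", "cache"},
    "db": set(),
    "cache": set(),
}

def up_order(services):
    """Start order: every service comes after the services it depends on."""
    return list(TopologicalSorter(services).static_order())

def down_order(services):
    """Stop order: dependents first, dependencies last."""
    return list(reversed(up_order(services)))

print("up:  ", up_order(SERVICES))
print("down:", down_order(SERVICES))
```

`db` and `cache` have no dependency on each other, so their relative order is not fixed. Note that this ordering only controls when containers start, not whether the service inside is ready to accept connections.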

What is Kubernetes and why is it used for container orchestration at scale?

-Kubernetes is an orchestration tool for running and managing containers at scale. Its control plane exposes an API for managing the cluster, which consists of multiple nodes (machines); each node runs a kubelet and hosts multiple pods. You describe the desired state of the system, and Kubernetes automatically scales or heals the system as needed to match it.
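
The desired-state idea can be sketched as a single reconciliation step: compare the desired replica count with the observed pods and decide what to create or delete. This is a simplified model, loosely similar in spirit to what a ReplicaSet controller does. Real controllers watch and update objects through the Kubernetes API server, and the pod names here are hypothetical.

```python
def reconcile(desired_replicas, pods):
    """Compare desired state with observed pods; return (pods_to_create, pods_to_delete)."""
    running = [p["name"] for p in pods if p["status"] == "Running"]
    to_delete = [p["name"] for p in pods if p["status"] != "Running"]  # clean up failed pods
    surplus = len(running) - desired_replicas
    if surplus > 0:
        to_delete += running[-surplus:]  # scale down: remove the extra pods
    to_create = max(desired_replicas - len(running), 0)  # scale up or heal
    return to_create, to_delete

observed = [
    {"name": "web-1", "status": "Running"},
    {"name": "web-2", "status": "Failed"},
]
print(reconcile(3, observed))  # → (2, ['web-2'])
```

Running this comparison in a loop is what gives the self-healing behavior: a pod that crashes simply shows up as a gap between desired and observed state, and the next reconciliation closes it.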
