AI Video Tools Are Exploding. These Are the Best

Summary

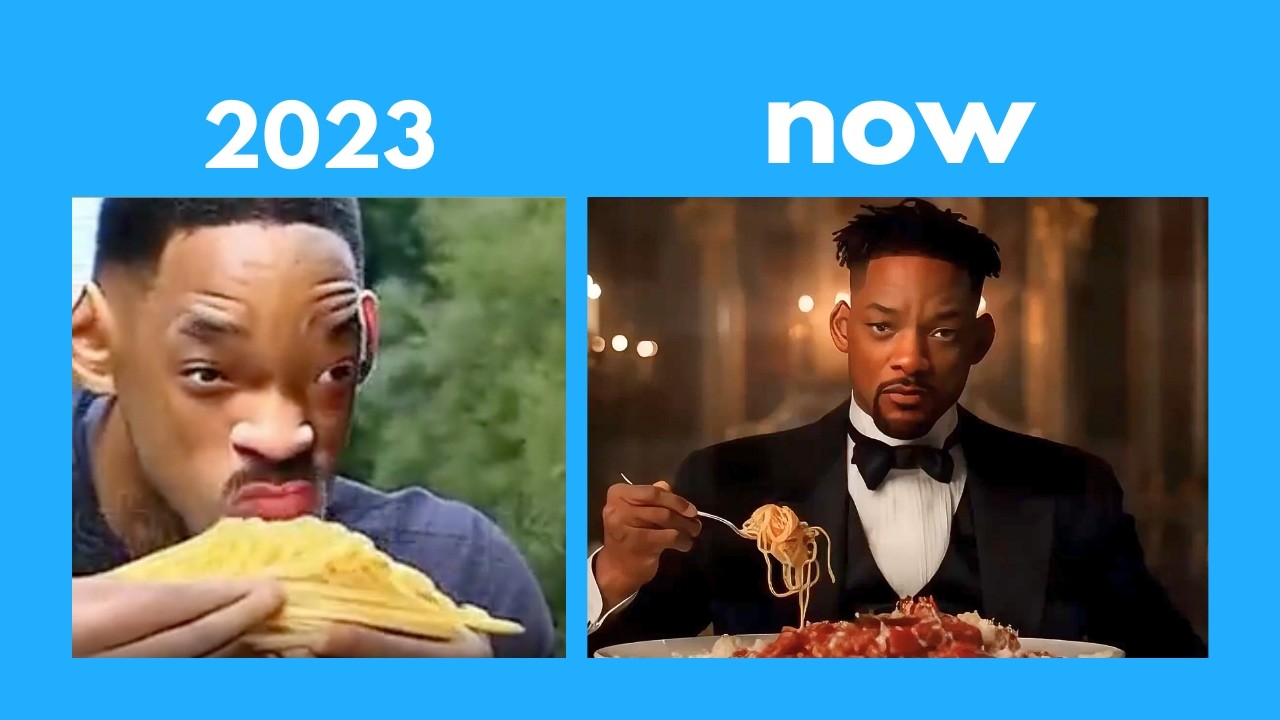

TLDR: This video script explores recent advances in AI video generation tools, highlighting Runway Gen 3 for text-to-video and Luma Labs' Dream Machine for image-to-video. It also covers LTX Studio for detailed control and Kaa for abstract animations, and shows how these tools can be used to create unique content. Finally, it touches on lip-syncing tools and open-source models, emphasizing how much fun and creative range AI video production currently offers.

Takeaways

- 😀 The video discusses the exciting advancements in AI video generation tools and their real-world applications.

- 🎥 The speaker's favorite AI video tool outside the headlines is Runway Gen 3, which the speaker considers the best text-to-video model available.

- 🌟 Runway Gen 3 excels at creating title sequences with dynamic movement and intricate neon circuitry patterns.

- 📝 The video provides examples of how to structure prompts for Runway Gen 3 to achieve better results, emphasizing the importance of camera movement and scene details.

- 🔄 Runway Gen 3 can transform between scenes effectively, but the video also notes the potential for misses and the need for rerolls to get desired outcomes.

- 🌋 Dream Machine from Luma Labs is praised for being the best image-to-video tool, especially with the use of keyframes for logical transitions.

- 🎨 LTX Studio offers the most control and speed, allowing users to create short films from a script or simple prompt with various styles and customization options.

- 🔄 The video showcases the style reference feature in LTX Studio, which regenerates every shot in a new style based on an uploaded image.

- 🎭 The platform Kaa is highlighted for its focus on abstract and trippy animations, offering a unique creative avenue for video generation.

- 🤖 Hedra and Live Portrait are mentioned as accessible platforms for lip-syncing, with Hedra noted for its expressive talking avatars and Live Portrait for its mapping capabilities.

- 🌐 The open-source community is acknowledged for pioneering tools and workflows that form the foundation of many paid AI video generation platforms.

Q & A

What is the main theme of the video script?

- The main theme of the video script is an exploration of AI video tools, focusing on Runway Gen 3, Luma Labs' Dream Machine, LTX Studio, and Kaa, and showcasing what each can do for different types of video content.

Why is Runway Gen 3 considered the best text-to-video model according to the script?

- Runway Gen 3 is considered the best text-to-video model because of its ability to generate impressive title sequences and fluid simulation physics, which are particularly useful for creating dynamic video content.

What is the significance of using a prompt structure when using Runway Gen 3?

- Using a prompt structure helps to guide the AI in generating content that aligns with the creator's vision, reducing the number of attempts needed to achieve satisfactory results.
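
As a rough illustration of what a structured prompt could look like, the sketch below assembles one from separate camera-movement, scene, and detail fields. The summary only says that camera movement and scene details matter, so the field names, their ordering, and the `ShotPrompt` and `build_prompt` helpers are assumptions made for illustration. The code only builds a string and does not call any Runway API.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the parts of a text-to-video prompt."""
    camera_movement: str  # e.g. "Slow dolly in"
    scene: str            # what the shot establishes
    details: str = ""     # optional extras such as lighting or texture


def build_prompt(shot: ShotPrompt) -> str:
    """Join the parts in a fixed order: camera movement, scene, then details."""
    parts = [f"{shot.camera_movement.strip()}: {shot.scene.strip().rstrip('.')}."]
    if shot.details.strip():
        parts.append(shot.details.strip().rstrip(".") + ".")
    return " ".join(parts)


if __name__ == "__main__":
    shot = ShotPrompt(
        camera_movement="Slow dolly in",
        scene="a title sequence forming from neon circuitry patterns",
        details="Dynamic movement, dark background",
    )
    print(build_prompt(shot))
```

Keeping the parts in separate fields makes it easy to change one element, such as the camera movement, between rerolls while leaving the rest of the prompt fixed.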

How does Luma Labs' Dream Machine differ from Runway Gen 3 in terms of video generation?

- Dream Machine from Luma Labs excels at image-to-video generation and keyframe-based animations, and it often produces high-quality results within one or two tries.

What is the advantage of using keyframes in Luma Labs' Dream Machine?

- Keyframes allow for more control over the video generation process, enabling the creation of smooth transitions and complex animations between two defined frames.

How does LTX Studio differ from other platforms mentioned in the script?

- LTX Studio offers the most control and the fastest speed of the platforms covered, allowing users to build out an entire short film in a few minutes with a high level of customization and flexibility.

What is the purpose of the style reference feature in LTX Studio?

- The style reference feature in LTX Studio allows users to upload an image that sets the visual style for the entire video, ensuring consistency across all scenes.

What kind of content is Kaa particularly good for creating?

- Kaa is particularly good for creating abstract, trippy, morphing animations, which are ideal for music videos or opening sequences.

How does Kaa's video upscaler work differently from traditional upscalers?

- Kaa's video upscaler performs a creative upscale, reimagining the content with AI while staying close to the original video, rather than just increasing the resolution.

What are the limitations of using non-human characters in Hedra's lip-syncing feature?

- Hedra's lip-syncing feature can struggle with non-human characters: the less human a character looks, the harder it is to map expressions accurately.

What is the significance of the open-source community in the development of AI video tools mentioned in the script?

- The open-source community has been foundational in developing the tools and workflows that paid platforms build on. Open-source tools offer more customization and control, at the cost of higher complexity.
