Introduction to Decision Trees

Summary

TLDRThe script introduces the decision tree algorithm, a popular model-building technique in supervised learning for binary classification. It explains the concept of decision trees, which involve a series of yes/no questions leading to predictions, and emphasizes the importance of selecting the best question at each node to maximize information gain. The script delves into the measure of impurity using entropy and how it guides the tree-building process, aiming to create a model that can predict outcomes efficiently without needing the original dataset.

Please replace the link and try again.

Q & A

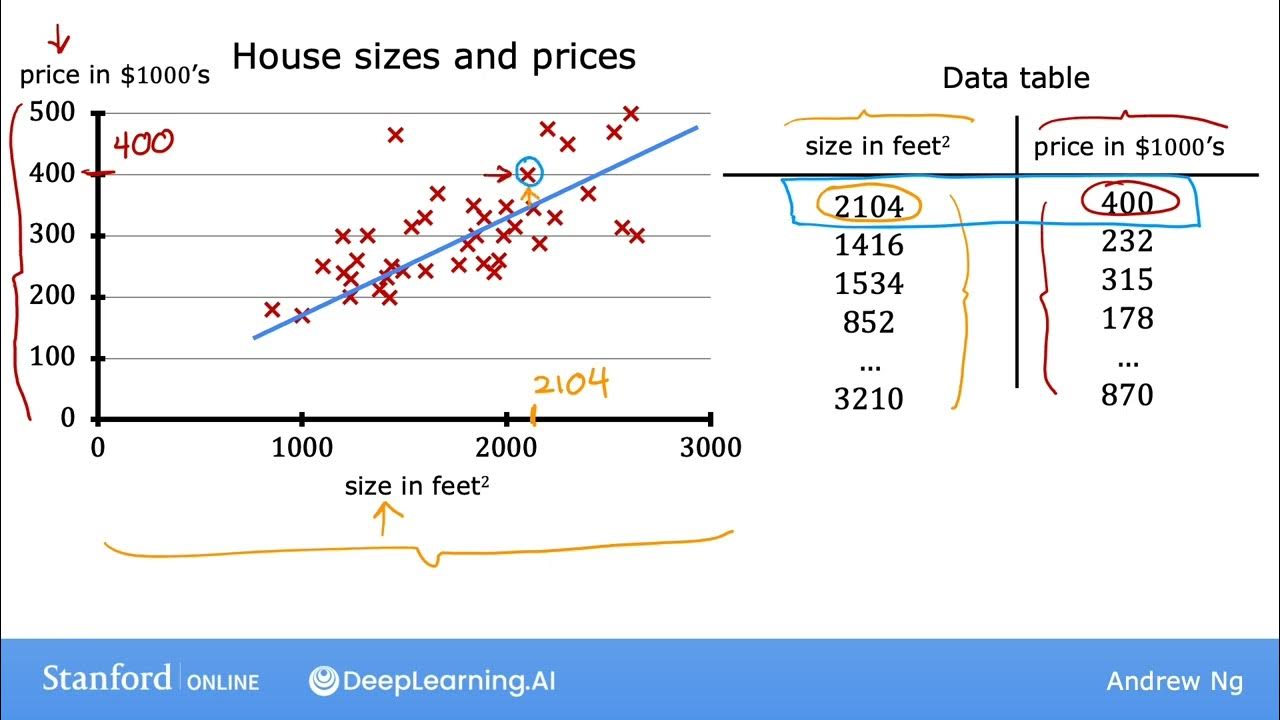

What is the main problem with the K nearest neighbors algorithm in the context of binary classification?

-The main problem with the K nearest neighbors algorithm is that it does not learn a model from the data. It requires the dataset to be present every time a prediction is made, which is not efficient for making predictions on test data points.

What is a decision tree algorithm and how does it differ from K nearest neighbors?

-A decision tree algorithm is a method used for classification and regression in machine learning. Unlike K nearest neighbors, a decision tree learns a model from the dataset. Once the model (the tree) is learned, the dataset can be discarded, and the tree can be used to make predictions on new data points.

What is the input to a decision tree algorithm?

-The input to a decision tree algorithm is a training dataset consisting of data points in the form of (x1, y1), ..., (xn, yn), where xi is a d-dimensional feature vector and yi is the binary classification label, either +1 or -1.

What does the output of a decision tree algorithm represent?

-The output of a decision tree algorithm is a decision tree itself. This tree is used for making predictions on new data points by traversing the tree based on the answers to a series of questions derived from the data.

Can you describe the structure of a decision tree?

-A decision tree is a binary tree with a root node, internal nodes, and leaf nodes. Each internal node represents a question, and each path from the root to a leaf node represents a series of questions that lead to a prediction.

What is the purpose of the questions in a decision tree?

-The questions in a decision tree are used to partition the dataset into subsets based on feature values. These questions help in making decisions at each node of the tree, ultimately leading to a prediction at the leaf nodes.

What is a prediction or leaf node in a decision tree?

-A prediction or leaf node in a decision tree is the final node in a path where a decision is made. It does not ask further questions but provides a prediction, which is the label (1 or 0) for the data points that reach this node.

How does the prediction process work in a decision tree?

-The prediction process in a decision tree involves traversing the tree from the root node to a leaf node based on the answers to the questions asked at each internal node. The label at the reached leaf node is the predicted label for the input data point.

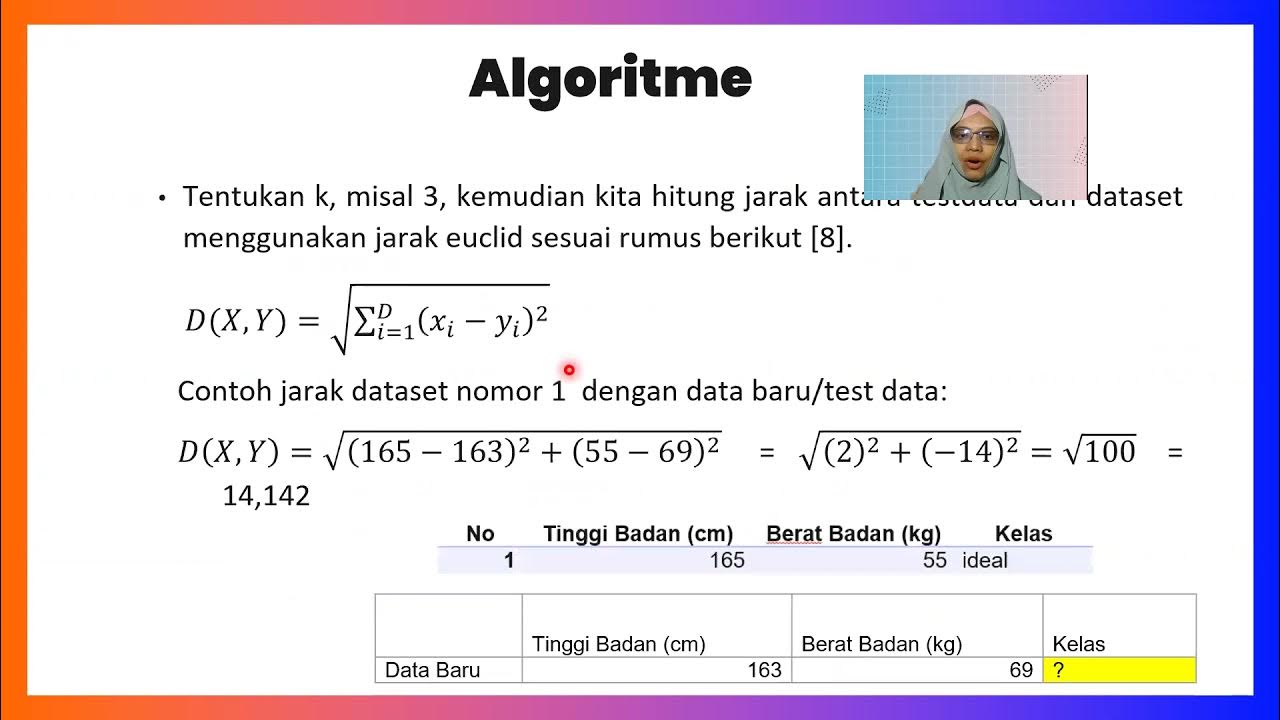

What is a feature value pair in the context of a decision tree?

-A feature value pair in a decision tree is a question that compares a feature of the data (e.g., height) with a threshold value (e.g., 180 cm). The question typically asks whether the feature is less than or equal to the threshold value.

Why is it important to measure the goodness of a question in a decision tree?

-Measuring the goodness of a question is important because it helps in selecting the best question to ask at each node of the tree. This selection process is crucial for building an efficient and accurate decision tree that can make good predictions.

What is entropy and how is it used in decision trees?

-Entropy is a measure of impurity for a set of labels. It is used in decision trees to quantify the uncertainty or impurity of the labels in a dataset after a question has been asked. It helps in evaluating how good a question is by comparing the entropy before and after the question is asked.

What is information gain and how does it relate to question selection in decision trees?

-Information gain is a measure of how much impurity is reduced by asking a question. It is calculated as the difference between the original entropy of the dataset and the weighted sum of entropies of the subsets created by the question. A higher information gain indicates a better question, as it results in a more significant reduction in impurity.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade Now5.0 / 5 (0 votes)