Machine Learning vs. Deep Learning vs. Foundation Models

Summary

TLDR: This script clarifies how common AI terms relate to one another: machine learning, deep learning, foundation models, large language models (LLMs), and generative AI. AI is the simulation of human intelligence in machines; machine learning uses algorithms that learn from data; deep learning uses neural networks with multiple layers; foundation models are large pre-trained neural networks that can be adapted to many applications; and LLMs are foundation models that process and generate human-like text. Generative AI focuses on creating new content using these models.

Takeaways

- 🤖 Artificial Intelligence (AI) refers to the simulation of human intelligence in machines, enabling them to perform tasks typically requiring human thinking.

- 📚 Machine Learning (ML) is a subfield of AI that focuses on developing algorithms that let computers learn from data and make predictions or decisions without being explicitly programmed for each task.

- 🔍 Machine Learning encompasses a range of techniques including supervised learning, unsupervised learning, and reinforcement learning.

- 🧠 Deep Learning is a subset of ML that specifically focuses on artificial neural networks with multiple layers, excelling at handling unstructured data like images or natural language.

- 🚫 Not all machine learning is deep learning; traditional ML methods like linear regression and decision trees still play pivotal roles in many applications.

- 🏗️ Foundation models, a term popularized in 2021, are large-scale neural networks trained on vast amounts of data. They serve as a base for many applications: instead of training a model from scratch for each task, developers can fine-tune a pre-trained foundation model.

- 🌐 Foundation models represent a shift toward more generalized, adaptable, and scalable AI solutions: they are trained on diverse datasets and can be adapted to tasks such as language translation and image recognition.

- 📚 Large Language Models (LLMs) are a specific type of foundation model designed to process and generate human-like text, with capabilities in understanding grammar, context, and cultural references.

- 👀 Vision models, scientific models, and audio models are examples of other foundation models, each specialized in interpreting and generating content in their respective domains.

- 🎨 Generative AI pertains to models and algorithms crafted to generate new content, harnessing the knowledge of foundation models to produce creative expressions.

Q & A

What is the common factor among terms like machine learning, deep learning, foundation models, generative AI, and large language models?

-They all relate to the field of artificial intelligence (AI).

What is artificial intelligence (AI)?

-AI refers to the simulation of human intelligence in machines, enabling them to perform tasks that typically require human thinking.

What is machine learning and how does it fit within AI?

-Machine learning is a subfield of AI focused on developing algorithms that allow computers to learn from and make decisions based on data, rather than being explicitly programmed for specific tasks.
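To make "learning from data rather than being explicitly programmed" concrete, here is a minimal Python sketch (an illustration, not taken from the script). Instead of hard-coding the rule y = 2x + 1, a simple linear regression, one of the traditional ML methods named in the takeaways, estimates the slope and intercept from example pairs using ordinary least squares. The function name `fit_line` and the toy data are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the fitted line passes through the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Training data: the program is never told the rule y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(f"learned: y = {slope:.1f}x + {intercept:.1f}")  # learned: y = 2.0x + 1.0
print(slope * 10 + intercept)  # prediction for the unseen input x = 10: 21.0
```

The rule is recovered from the examples alone; with noisy real-world data the fit would only approximate the underlying relationship.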

What are the core categories within machine learning?

-The core categories are supervised learning, unsupervised learning, and reinforcement learning.
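The three categories differ mainly in the feedback the learner receives. The compact Python sketch below is illustrative only; the function names, toy data, and the specific algorithms chosen are assumptions, not from the script. A nearest-centroid classifier learns from labeled examples (supervised), a 1-D k-means with two clusters finds groups in unlabeled numbers (unsupervised), and an epsilon-greedy agent on a two-armed bandit learns which action earns more reward (reinforcement).

```python
import random

# Supervised learning: learn a mapping from labeled (input, label) examples.
def fit_centroids(points, labels):
    """Nearest-centroid classifier: store the mean input seen for each label."""
    grouped = {}
    for x, label in zip(points, labels):
        grouped.setdefault(label, []).append(x)
    return {label: sum(xs) / len(xs) for label, xs in grouped.items()}

def classify(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Unsupervised learning: no labels; discover structure (clusters) in the data.
def two_means(points, iterations=10):
    """1-D k-means with k = 2, assuming at least two distinct values."""
    c0, c1 = min(points), max(points)  # deterministic initial centers
    for _ in range(iterations):
        g0 = [p for p in points if abs(p - c0) <= abs(p - c1)]
        g1 = [p for p in points if abs(p - c0) > abs(p - c1)]
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return c0, c1

# Reinforcement learning: learn from rewards earned by the actions taken.
def epsilon_greedy(arm_rewards, steps=500, epsilon=0.1, seed=0):
    """Multi-armed bandit; the agent only observes the reward of the arm it pulls."""
    rng = random.Random(seed)
    values = [1.5] * len(arm_rewards)  # optimistic start so every arm gets tried
    counts = [0] * len(arm_rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(arm_rewards))  # explore: random action
        else:
            arm = max(range(len(values)), key=values.__getitem__)  # exploit
        reward = arm_rewards[arm]
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # running mean
    return values, counts

centroids = fit_centroids([1.0, 1.5, 6.0, 6.5], ["small", "small", "large", "large"])
print(classify(centroids, 2.0))  # small
print([round(c, 2) for c in two_means([1.0, 1.2, 0.8, 5.0, 5.3, 4.9])])  # [1.0, 5.07]
values, counts = epsilon_greedy([0.2, 1.0])
print(values.index(max(values)))  # 1 (the higher-reward arm)
```

Only the supervised learner is told the right answers; the clustering step sees raw numbers, and the bandit agent sees nothing but the reward for each choice it makes.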

How is deep learning different from traditional machine learning?

-Deep learning is a subset of machine learning that focuses on artificial neural networks with multiple layers, which excel at handling vast amounts of unstructured data and discovering intricate structures within them.
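Why do multiple layers matter? A classic toy illustration (hand-picked weights following a standard textbook construction, not a trained model and not from the script): no single linear layer can compute XOR, but a two-layer network with a ReLU hidden layer can. In real deep learning, such weights are learned from data by gradient descent rather than set by hand; the helper names `dense` and `xor_network` are hypothetical.

```python
def relu(z):
    """Rectified linear unit: pass positive values through, clamp the rest to 0."""
    return z if z > 0 else 0.0

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def xor_network(x1, x2):
    # Hidden layer: two ReLU units that re-represent the raw inputs.
    hidden = dense([float(x1), float(x2)], weights=[[1, 1], [1, 1]],
                   biases=[0, -1], activation=relu)
    # Output layer: a linear combination of the hidden features.
    (output,) = dense(hidden, weights=[[1, -2]], biases=[0])
    return output

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))  # outputs 0.0, 1.0, 1.0, 0.0 in turn
```

The hidden layer maps the four inputs into a space where XOR becomes a simple linear function; stacking many such layers is what lets deep networks build up intricate structure from raw data.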

What are foundation models and where do they fit in?

-Foundation models are large-scale neural networks trained on vast amounts of data. They serve as a base for multiple applications and primarily fit within the realm of deep learning.
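A foundation model is trained once and then adapted to many tasks. The toy Python sketch below mimics that pattern at a tiny scale and is only an analogy (real foundation models are vastly larger, and fine-tuning often updates all of their weights): a hypothetical "pre-trained" feature layer, `pretrained_features`, is frozen and reused, and only a small task-specific output head is trained for each task with the perceptron rule. All names and tasks here are illustrative assumptions.

```python
def pretrained_features(x1, x2):
    """Stand-in for a frozen, already-trained base network (never updated here)."""
    s = float(x1 + x2)
    h1 = max(0.0, s)
    h2 = max(0.0, s - 1.0)
    return [h1, h2, 1.0]  # trailing 1.0 acts as a bias input for the head

def predict(w, x1, x2):
    """Linear head on top of the frozen features: output 1 if the score is positive."""
    feats = pretrained_features(x1, x2)
    return 1 if sum(wi * fi for wi, fi in zip(w, feats)) > 0 else 0

def train_head(examples, max_epochs=100):
    """Train only the head's weights with the perceptron rule; the base stays fixed."""
    w = [0.0, 0.0, 0.0]
    for _ in range(max_epochs):
        mistakes = 0
        for (x1, x2), target in examples:
            pred = predict(w, x1, x2)
            if pred != target:
                mistakes += 1
                feats = pretrained_features(x1, x2)
                w = [wi + (target - pred) * fi for wi, fi in zip(w, feats)]
        if mistakes == 0:
            break  # every training example is classified correctly
    return w

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
tasks = {"xor": [0, 1, 1, 0], "and": [0, 0, 0, 1], "or": [0, 1, 1, 1]}

# One frozen base, three cheaply trained task heads.
heads = {name: train_head(list(zip(inputs, labels))) for name, labels in tasks.items()}
for name, labels in tasks.items():
    print(name, [predict(heads[name], *x) for x in inputs] == labels)  # True for each
```

Training only a head on frozen features is sometimes called linear probing; full fine-tuning would also adjust the base weights, at higher cost.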

What are large language models (LLMs) and how do they relate to foundation models?

-LLMs are a type of foundation model centered on processing and generating human-like text. They are large in scale, designed to understand and interact using human language, and, as models, consist of algorithms and learned parameters.

What are some examples of tasks that large language models (LLMs) can handle?

-LLMs can handle tasks like answering questions, translating languages, and creative writing.

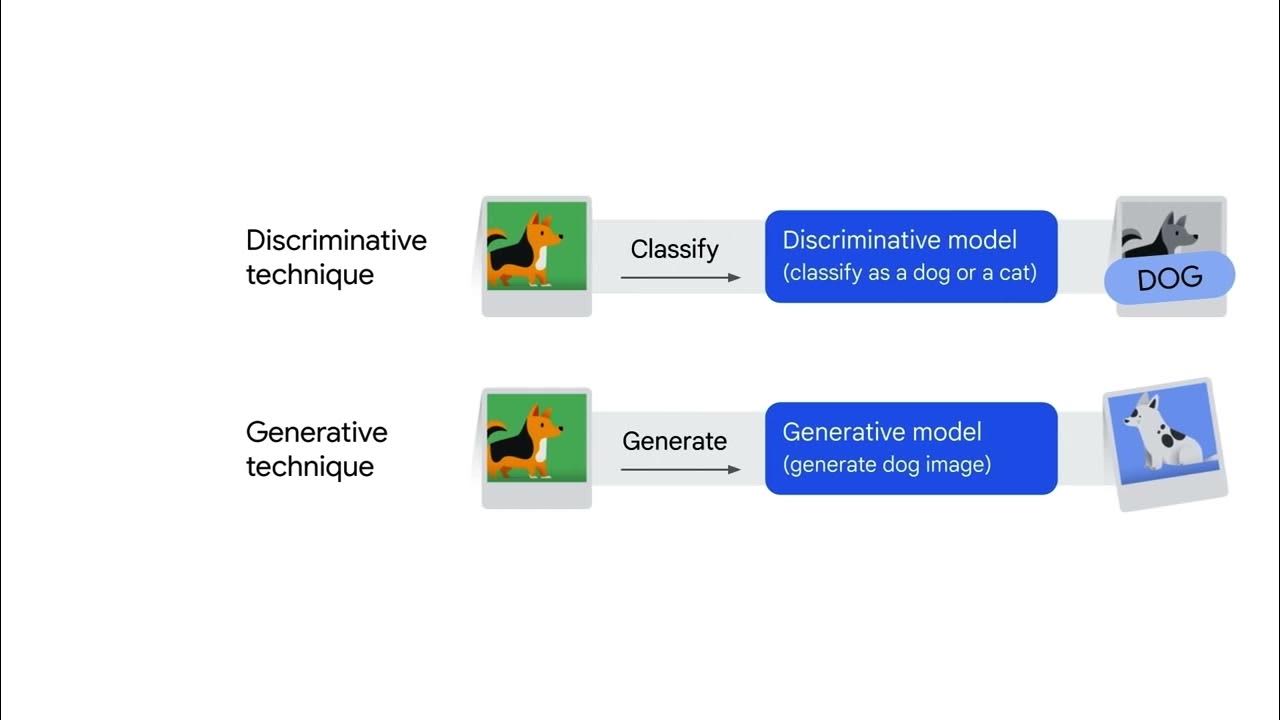

What is generative AI and how does it differ from foundation models?

-Generative AI pertains to models and algorithms specifically crafted to generate new content. While foundation models provide the underlying structure and understanding, generative AI focuses on producing new and creative expressions based on that knowledge.
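Many generative text models, including LLMs, produce output one token at a time, each time choosing a likely next token given what came before. The toy Python sketch below shows that generation loop with a word-level bigram model, which is far simpler than the neural networks behind real LLMs and is not taken from the script: it counts which word follows which in a tiny hypothetical corpus, then greedily appends the most frequent next word.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Greedy generation: repeatedly append the most frequent next word."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

corpus = "the model reads text and the model writes new text"
model = train_bigrams(corpus)
print(generate(model, "the"))  # the model reads text and the model reads
```

Because greedy decoding always picks the top candidate, it quickly falls into loops; practical systems usually sample from the predicted distribution (for example with a temperature setting) to produce more varied output.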

What are some other types of foundation models apart from large language models?

-Other types include vision models for image interpretation and generation, scientific models for predicting protein folding in biology, and audio models for generating human-sounding speech or music.
