Google’s AI Course for Beginners (in 10 minutes)!

Summary

TLDR: This video script offers a concise introduction to artificial intelligence (AI), clarifying common misconceptions and explaining the relationship between AI, machine learning, and deep learning. It distinguishes between supervised and unsupervised learning models, explains how deep learning uses artificial neural networks, and differentiates between discriminative and generative models. The script also highlights the role of large language models (LLMs) in AI applications such as ChatGPT and Google Bard, and how they are fine-tuned for specific industries. The content is designed to be accessible to beginners, providing a practical understanding of AI's foundational concepts.

Takeaways

- 📚 Artificial Intelligence (AI) is a broad field of study, with machine learning as a subfield, similar to how thermodynamics is a subfield of physics.

- 🤖 Machine Learning involves training a model with input data to make predictions on unseen data, with common types being supervised and unsupervised learning models.

- 🔍 Supervised learning uses labeled data to train models, allowing for predictions based on historical data points, while unsupervised learning identifies patterns in unlabeled data.

- 🧠 Deep Learning is a subset of machine learning that utilizes artificial neural networks, inspired by the human brain, to create more powerful models.

- 🔧 Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data to train deep learning models; fraud detection in banking is one example use case.

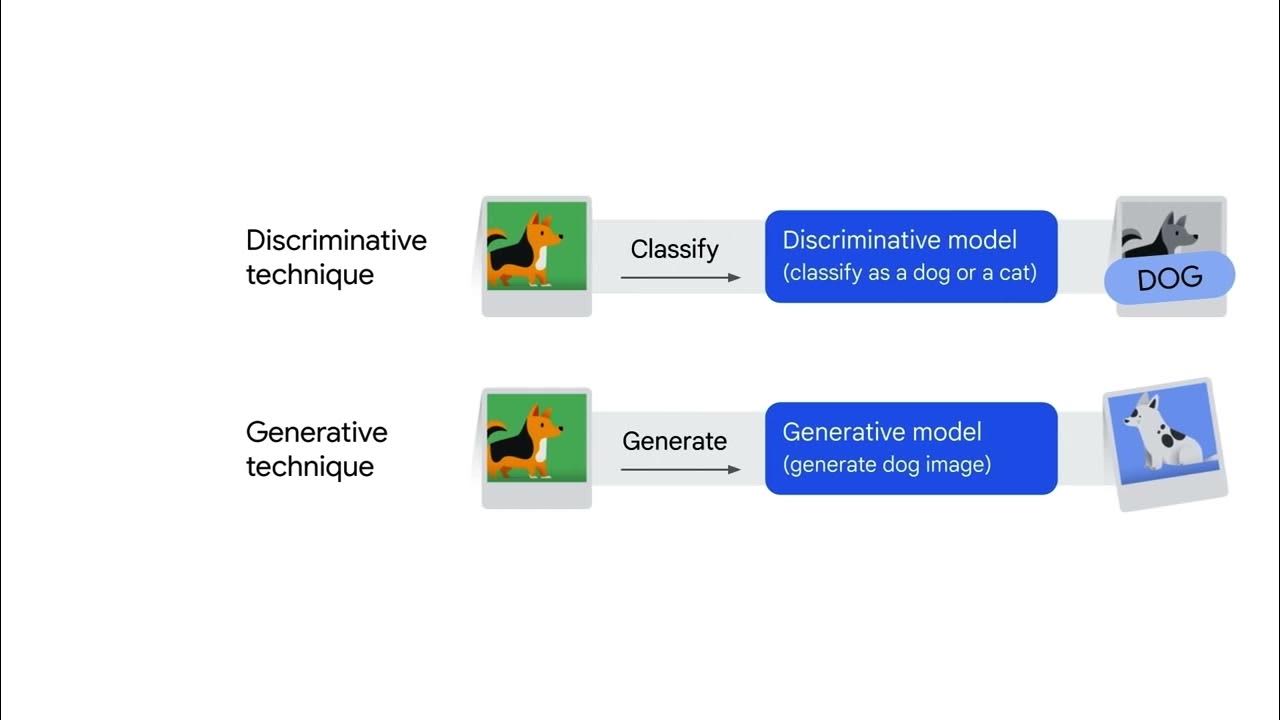

- 📈 Discriminative models learn the relationship between data points and their labels in order to classify new data points, whereas generative models learn patterns in the training data and generate new outputs based on those patterns.

- 🖼️ Generative AI can output natural language text, speech, images, or audio, creating new samples similar to the data it was trained on.

- 📖 Large Language Models (LLMs) are a type of deep learning model pre-trained on vast datasets and fine-tuned for specific tasks, like improving diagnostic accuracy in healthcare.

- 🔗 LLMs and generative AI overlap but are not identical: LLMs are typically pre-trained on broad, general data and then fine-tuned for specific purposes.

- 🎓 Google offers a free 4-hour AI course for beginners, which provides a comprehensive understanding of AI concepts and practical applications.

- 📝 Taking notes from the course? Use the video URL feature to easily navigate back to specific parts of the video for review.
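To make the deep learning takeaway about artificial neural networks more concrete, here is a minimal Python sketch of a two-layer network. The weights are hand-picked for illustration (a hypothetical setup, not something taken from the course); in a real deep learning model they would be learned from data, and there would be far more neurons and layers.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)


def tiny_network(x1: float, x2: float) -> float:
    """Two layers of neurons with hypothetical, hand-picked weights that approximate XOR."""
    # Hidden layer: one neuron acts roughly like OR, the other roughly like AND.
    h_or = neuron([x1, x2], [20.0, 20.0], -10.0)
    h_and = neuron([x1, x2], [20.0, 20.0], -30.0)
    # Output layer combines them as "OR but not AND", which is XOR.
    return neuron([h_or, h_and], [20.0, -20.0], -10.0)


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(tiny_network(a, b)))  # prints 0 0 0, 0 1 1, 1 0 1, 1 1 0 (one per line)
```

Stacking layers is what lets the network represent XOR, which a single neuron of this kind cannot.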

Q & A

What is the main purpose of distilling Google's 4-hour AI course into a 10-minute summary?

-The main purpose is to provide a concise and practical overview of the basics of artificial intelligence, making it accessible to beginners who may not have a technical background.

How does the speaker describe their initial skepticism about the AI course?

-The speaker was skeptical because they thought the course might be too conceptual and not focused on practical tips, which is the focus of their channel.

What misconception did the speaker have about AI before taking the course?

-The speaker mistakenly believed that AI, machine learning, and large language models were all the same thing, not realizing that AI is a broad field with machine learning as a subfield, and deep learning as a subset of machine learning.

What are the two main types of machine learning models mentioned in the script?

-The two main types of machine learning models mentioned are supervised and unsupervised learning models.

How does a supervised learning model make predictions?

-A supervised learning model uses labeled historical data to train a model, which can then make predictions on new, unseen data based on the patterns it has learned from the training data.
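A supervised model can be sketched in a few lines: fit a straight line to labeled historical examples, then apply it to an input it has not seen. The bill/tip numbers below are made up for illustration, and ordinary least squares is just one simple choice of supervised method, not necessarily the one the course uses.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept to labeled pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical labeled history: bill amount (input) and the tip left (label).
bills = [20.0, 35.0, 50.0, 80.0]
tips = [3.5, 5.75, 8.0, 12.5]

model = fit_line(bills, tips)
print(round(predict(model, 60.0), 2))  # 9.5, a prediction for a bill not in the data
```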

What is the key difference between supervised and unsupervised learning models?

-The key difference is that supervised models use labeled data, while unsupervised models use unlabeled data and try to find natural groupings or patterns within the data.
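Unsupervised learning can be illustrated with k-means clustering, one common technique for finding natural groupings; the course does not necessarily use this exact algorithm. The sketch assumes two clusters, hypothetical 2-D points, and fixed starting centroids so the result is repeatable. Note that no labels appear anywhere.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points. No labels are used anywhere."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Keep a centroid where it is if no point was assigned to it.
        centroids = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else c
            for c, members in zip(centroids, clusters)
        ]
    return centroids, clusters


# Hypothetical unlabeled 2-D points (e.g. two measured behaviors per customer).
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
# Assumed: k = 2, starting from one point in each rough region for repeatability.
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)  # [(1.5, 1.5), (8.5, 8.5)]
```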

What is semi-supervised learning in the context of deep learning?

-Semi-supervised learning is a training approach, used here with deep learning models, in which a model is trained on a small amount of labeled data and a large amount of unlabeled data. The model learns basic concepts from the labeled data and applies them to the unlabeled data to make predictions.
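One common way to realize semi-supervised learning is self-training (pseudo-labeling). This is a swapped-in technique for illustration, not necessarily the method the course describes, and it uses a simple nearest-centroid classifier rather than a deep network. The transaction amounts and the "fraud"/"legit" labels are hypothetical.

```python
def fit_centroids(labeled):
    """Nearest-centroid classifier: the average feature value for each label."""
    sums, counts = {}, {}
    for x, label in labeled:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(model, x):
    """Pick the label whose centroid is closest to x."""
    return min(model, key=lambda label: abs(x - model[label]))


def self_train(labeled, unlabeled):
    """Self-training: fit on the few labeled points, pseudo-label the
    unlabeled pool with that model, then refit on all of the data."""
    model = fit_centroids(labeled)
    pseudo_labeled = [(x, classify(model, x)) for x in unlabeled]
    return fit_centroids(labeled + pseudo_labeled)


# Hypothetical transaction amounts: only two are labeled, most are not.
labeled = [(12.0, "legit"), (950.0, "fraud")]
unlabeled = [10.0, 15.0, 20.0, 25.0, 880.0, 990.0]

model = self_train(labeled, unlabeled)
print(classify(model, 30.0), classify(model, 700.0))  # legit fraud
```

Real fraud systems use many features and far more careful pseudo-labeling (for example, keeping only confident predictions); one feature keeps the idea visible.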

How do discriminative and generative models differ in deep learning?

-Discriminative models learn the relationship between data points and their labels and use it to classify new data points, while generative models learn the patterns in the training data and generate new data samples based on those patterns.
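The contrast between the two model types can be sketched on a single hypothetical numeric feature (think of an animal's weight in kilograms). The discriminative side only learns where to draw the line between labels. The generative side fits a normal distribution per label, an assumption made here for simplicity, and can therefore produce brand-new samples.

```python
import random
import statistics

# Hypothetical labeled data: one numeric feature (say, weight in kg) per animal.
data = {"cat": [4.0, 4.5, 5.0, 5.5], "dog": [20.0, 25.0, 30.0, 35.0]}


def fit_discriminative(data):
    """Discriminative: learn only a boundary between the labels. Here that is the
    point halfway between the two class means, a deliberately simple choice."""
    threshold = (statistics.mean(data["cat"]) + statistics.mean(data["dog"])) / 2

    def predict_label(x):
        return "cat" if x < threshold else "dog"

    return predict_label


def fit_generative(data):
    """Generative: model how each label's data is distributed (assumed normal),
    which makes it possible to draw new samples that resemble the training data."""
    return {label: (statistics.mean(xs), statistics.stdev(xs)) for label, xs in data.items()}


def sample(model, label, rng):
    """Draw one new, synthetic value for the given label."""
    mean, std = model[label]
    return rng.gauss(mean, std)


classify = fit_discriminative(data)
print(classify(10.0), classify(22.0))  # cat dog

generator = fit_generative(data)
rng = random.Random(0)
print([round(sample(generator, "cat", rng), 2) for _ in range(3)])  # three new cat-like values
```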

What is the role of large language models (LLMs) in AI applications?

-Large language models are a subset of deep learning that are pre-trained with a vast amount of data and then fine-tuned for specific purposes, such as text classification, question answering, and text generation, in various industries.
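Text generation, one of the tasks listed above, comes down to predicting a likely next word over and over. The sketch below shows that loop with a word-pair (bigram) count model trained on a tiny made-up corpus. It is a drastic simplification: real LLMs use deep neural networks trained on vast amounts of data, not word counts.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=5):
    """Greedy generation: repeatedly append the most frequent next word."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # no known continuation for this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


# Tiny hypothetical training corpus.
corpus = "the cat sat on the mat . the cat sat down . a dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the", 4))  # the cat sat on the
```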

How can smaller institutions benefit from large language models developed by big tech companies?

-Smaller institutions can purchase pre-trained LLMs from big tech companies and fine-tune them with their domain-specific data sets to solve specific problems, without having to develop their own large language models from scratch.
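Fine-tuning can be illustrated with a deliberately tiny analogy: a one-weight model y = w * x trained by gradient descent. What it shows is that fine-tuning continues training from already pre-trained weights on a small domain-specific dataset instead of starting from scratch. Both datasets, the learning rate, and the step counts are hypothetical choices; a real LLM has billions of weights, but the idea of starting from pre-trained weights is the same.

```python
def train(w, data, lr, steps):
    """Gradient descent on mean squared error for the one-weight model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


# "Pre-training" (hypothetical): a larger, general dataset where y = 2x.
general = [(float(x), 2.0 * x) for x in range(1, 11)]
pretrained_w = train(0.0, general, lr=0.01, steps=200)

# "Fine-tuning" (hypothetical): start from the pre-trained weight, not from zero,
# and adapt it with a small domain-specific dataset where y = 2.5x.
domain = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.5)]
finetuned_w = train(pretrained_w, domain, lr=0.01, steps=50)

print(round(pretrained_w, 3))  # 2.0
print(round(finetuned_w, 3))   # a value just under 2.5
```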

What is the significance of the course's structure for learners?

-The course is structured into five modules, with a badge awarded after completing each module. This structure helps learners track their progress and provides a sense of accomplishment, while the theoretical content is balanced with practical applications.
