Simplify data integration with Zero-ETL features (L300) | AWS Events

Summary

TLDRこのビデオスクリプトでは、データ分析における多岐にわたるデータ源を統合するZL(Zero ETL)ソリューションが紹介されています。リレーショナルデータベースやNoSQLデータベースからデータウェアハウスやデータレイクへのデータ移動を自動化し、分析のためのリアルタイムデータアクセスを提供します。スピーカーであるTasとAmanは、AWSサービス間のデータ統合の課題と、ZLが提供する解決策、その価値提案、および顧客事例について詳述しています。

Takeaways

- 🗃️ ZL(Zero ETL)は、データ分析のためのデータ統合を簡素化し、異なるデータベース間でのデータ移動を支援する一連の統合です。

- 🔍 ビジネスにとってデータは全てで、より多くのデータを持つほど分析が可能で洞察を得られますが、データは異なる場所に分散しています。

- 🤝 タスとアマンは、リレーショナルデータベースとNoSQLデータベースの専門家で、それぞれがZL統合の異なる側面について議論します。

- 🔄 ZLは、ストリーミングデータのコピーをサポートし、複数のソースから複数の宛先にデータを移動させることが可能です。

- 🚀 Amazon Aurora MySQLとRedshiftの間のZL統合により、リアルタイムで分析を実行できるようになります。

- 🛠️ ZLは、データパイプラインの構築、維持、監視、セキュリティ確保の複雑さを軽減し、ビジネスの分析ニーズに応えるための「分かりにくい作業」を削減します。

- 📈 Redshiftでの分析により、より迅速で高度な分析操作が可能となり、ビジネスの意思決定プロセスを強化します。

- 🔗 ZLは、DynamoDBからOpenSearchへのデータ移動をサポートし、NoSQLデータベースと検索サービスの間の同期を容易にします。

- 📊 Data Prepperというオープンソースのサーバーレス技術を活用して、ZLはETLプロセスを簡素化し、データの抽出、変換、ロードを自動化します。

- 📈 ZL統合は、DynamoDBからRedshiftへのデータのインポートもサポートしており、データウェアハウスでの分析が可能になります。

- 🔑 ZLはAWSが積極的に投資しているプロジェクトであり、将来的にはより多くのデータストアやデータソースとの統合が期待されています。

Q & A

ZLとはどのようなソリューションですか?

-ZLは、複数のソースから複数の宛先にストリーミングデータをコピーするための統合セットです。データのコピー、セキュリティ確保、および効率化を目的としています。

ZLが解決しようとしている問題とは何ですか?

-ZLは、ビジネスの分析面と操作面の間でデータを移動する際に必要となる、多数のパイプラインの構築、維持、監視、セキュリティ確保の複雑さを軽減することを目指しています。

Amazon Aurora MySQLとRedshift間のデータ転送に関して、ZLはどのように機能しますか?

-ZLは、Amazon Aurora MySQLからRedshiftへのデータ転送をリアルタイムで自動化し、操作データベースに追加の負荷をかけることなく、分析を可能にします。

データの低レイテンシ移転を実現するためにZLはどのような技術を使用していますか?

-ZLはAuroraのストレージ機能を最大限活用し、変更されたデータのみをキャプチャしてRedshiftに送信することで、レイテンシを数桁の秒間に抑えることを目標としています。

ZLが提供する統合の種類には何がありますか?

-ZLは、現在、Amazon Aurora MySQLとRedshift、Amazon DynamoDBとOpenSearchの間での統合を提供しています。他の統合はプレビュー段階または準備中です。

DynamoDBとOpenSearch間のZL統合にはどのような利点がありますか?

-DynamoDBとOpenSearch間のZL統合により、データの同期を維持しながら、検索機能を強化し、ユーザーエクスペリエンスを向上させることができます。

Data Prepperとは何であり、ZL統合でどのような役割を果たしていますか?

-Data PrepperはオープンソースのサーバーレスETLツールで、ZL統合でデータを抽出、変換、およびロードするプロセスを自動化します。

ZL統合を使用することでデータエンジニアにどのような利点がありますか?

-ZL統合を使用することで、データエンジニアはデータパイプラインのコードの維持やインフラの設定などの煩わしい作業から解放され、より迅速かつスケーラブルなデータストア間のセットアップが可能になります。

AWSは今後ZLにどのような投資を続ける予定ですか?

-AWSはZLへの投資を続けており、他のデータベースやデータソースとの統合も計画中です。AWSはZLのサポートを拡大し、より多くのユーザーニーズに対応する予定です。

ZL統合のフィードバックはどのように提供できますか?

-セッションの後またはAWSアカウントチームに連絡することで、ZL統合に関するフィードバックや要望を提供できます。AWSはユーザーからのフィードバックを活用してサービスを改善しています。

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

京都大学 数学・数理科学5研究拠点合同市民講演会「源氏香はクラスタリング~ベル数とその周辺~」間野修平(情報・システム研究機構 統計数理研究所 数理・推論研究系 教授)2021年11月6日

Hannover Messe 2024: Generate business value from your SAP data

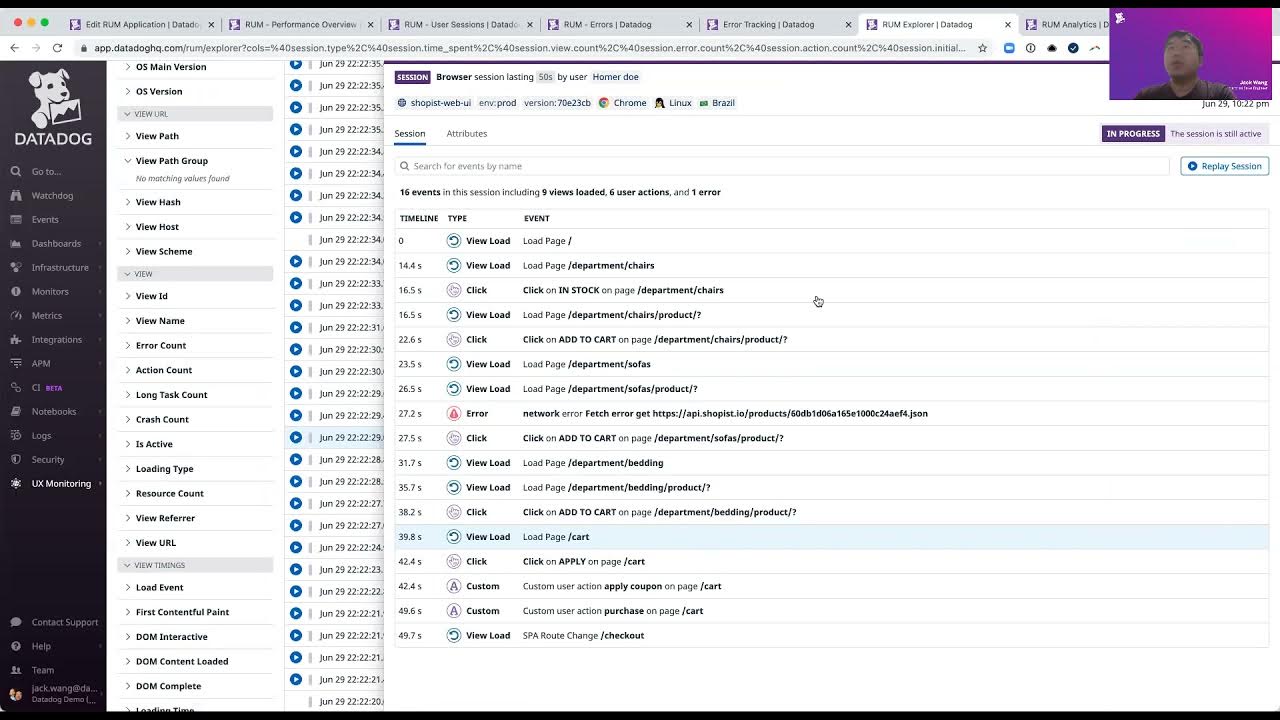

51_Datadogで実現するリアルユーザーモニタリング

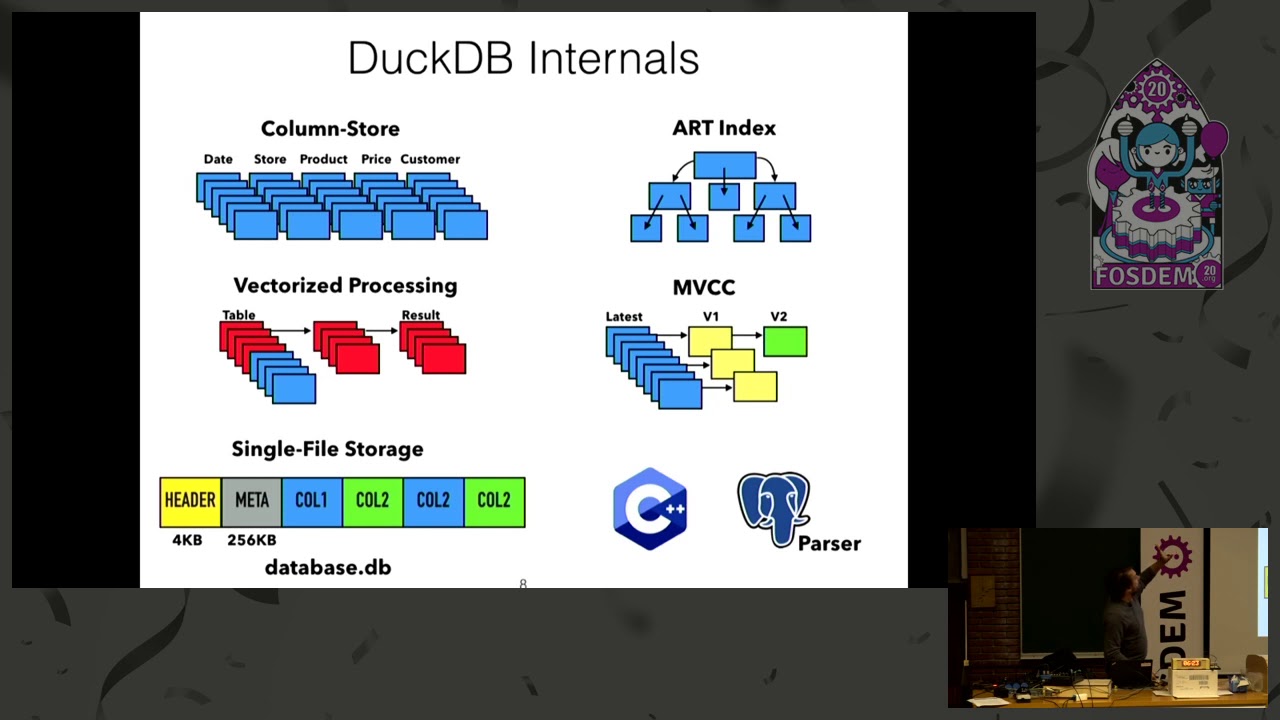

DuckDB An Embeddable Analytical Database

【忙しいあなたへ】ChatGPTとExcelで業務効率化!驚きのエクセル自動化テクニック7選【永久保存版】

【データサイエンスの基礎解説】国語+数学の総合格闘技/仮説の重要性/データ分析の本質/数字はデータではない/データの隠れた意味/世界報道自由度ランキングを読み解く【グロースX・松本健太郎】

5.0 / 5 (0 votes)